Welcome to our second blog post! In this post, we share our progress and findings so far.

Over the years, Convolutional Neural Networks (ConvNets, or CNNs) have been the top choice for image processing and recognition applications. Their main advantage over other image classification algorithms is that the filters, which had to be hand-engineered in traditional algorithms, are learnt by the CNN itself, saving a lot of effort and time.

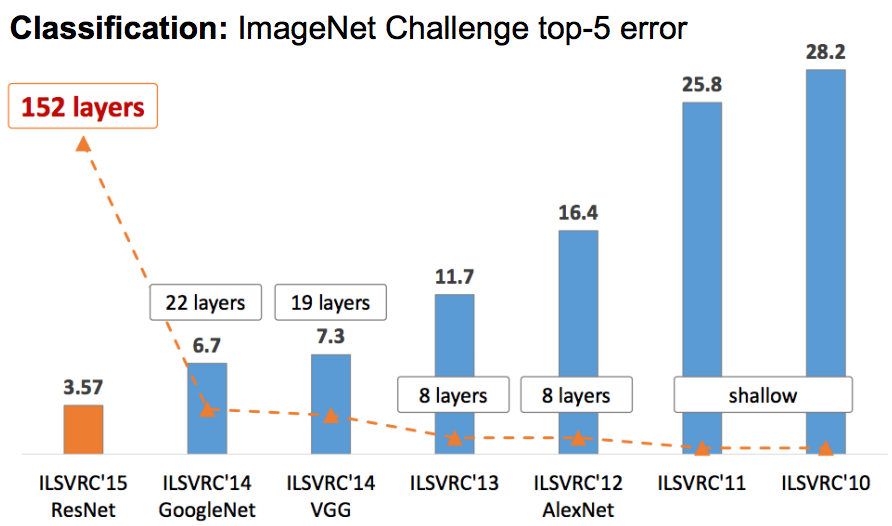
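To make the idea of a hand-engineered filter concrete, here is a minimal sketch in plain Python. It applies a fixed Sobel kernel (a classic hand-designed edge detector, chosen here only as an illustration) to a toy image. The `convolve2d` helper and the 4x4 image are assumptions made for this example; a CNN would learn its kernel values from data instead of having them written out by hand.

```python
# Hand-engineered vertical-edge detector (Sobel kernel). A CNN would learn
# values like these during training instead of having them fixed in advance.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Multiply the kernel with the image patch under it and sum up.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

edges = convolve2d(image, SOBEL_X)
print(edges)  # strong responses where the dark-to-bright edge falls in the window
```

On a uniform image the same kernel responds with zeros, which is why it works as an edge detector.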

Since other models benefited from additional layers, and large computational power is readily accessible today, it's no wonder that people started to build much deeper, more complex neural networks. This, however, led to a problem: the deeper a ConvNet is, the harder it is to train, often in return for only marginal improvements in accuracy. In some cases, making a ConvNet deeper can even reduce its accuracy.

Deep residual networks (ResNets) were released by Microsoft for the ImageNet and COCO 2015 competitions, which included object detection, image classification, and semantic segmentation problems. They came 1st in all the main events of those competitions, and one of the reasons is a special property: shortcut connections, i.e. feeding the input of the nth layer directly to an (n+x)th layer. This has been shown to make the network easier to train as well as more accurate.
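The shortcut idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the actual ResNet building block: it uses two tiny dense layers where the real network uses convolutions, and the helper names (`relu`, `linear`, `residual_block`) are made up for this example. The point is only the final addition, where the block's input is added back to the output of the layers it skips.

```python
def relu(vector):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in vector]


def linear(vector, weights, bias):
    """Dense layer: one output value per weight row."""
    return [
        sum(w * v for w, v in zip(row, vector)) + b
        for row, b in zip(weights, bias)
    ]


def residual_block(x, w1, b1, w2, b2):
    """Compute relu(F(x) + x), where F is two small dense layers."""
    hidden = relu(linear(x, w1, b1))
    residual = linear(hidden, w2, b2)  # F(x)
    # Shortcut connection: add the block's input back onto F(x).
    return relu([r + xi for r, xi in zip(residual, x)])


x = [1.0, 2.0]
zero_w = [[0.0, 0.0], [0.0, 0.0]]
zero_b = [0.0, 0.0]
identity_w = [[1.0, 0.0], [0.0, 1.0]]

# With all-zero weights F(x) is zero, so the block passes x through unchanged.
print(residual_block(x, zero_w, zero_b, zero_w, zero_b))
```

With all weights at zero the block simply returns its input, so an extra block can start out as a pass-through and only has to learn a correction on top of it. That is one intuition for why stacking many such blocks is easier to train than stacking plain layers.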

So, naturally, for our requirement, we turned to one of the most famous ResNets available: ResNet-50. We used the model to predict a few outdoor objects:

As well as some indoor objects:

And it is working as expected.
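For readers who want to try something similar, here is a minimal sketch of classifying a single image with a pretrained ResNet-50. It assumes the Keras implementation bundled with a recent TensorFlow 2.x release and its ImageNet weights, which may not match the setup used for the results above. The helper names `top_labels` and `classify_image` and the file name `street.jpg` are placeholders for this example.

```python
def top_labels(decoded, min_score=0.0):
    """Convert (class_id, label, score) tuples into (label, score) pairs."""
    return [
        (label, round(float(score), 3))
        for _, label, score in decoded
        if score >= min_score
    ]


def classify_image(image_path, top=3):
    """Classify one image with an ImageNet-pretrained ResNet-50.

    Assumes TensorFlow with Keras is installed; the pretrained weights are
    downloaded the first time the model is created.
    """
    # Imported lazily so top_labels() can be used without TensorFlow.
    import numpy as np
    from tensorflow.keras.applications.resnet50 import (
        ResNet50,
        decode_predictions,
        preprocess_input,
    )
    from tensorflow.keras.utils import img_to_array, load_img

    model = ResNet50(weights="imagenet")
    # ResNet-50 with ImageNet weights expects 224x224 RGB input.
    img = load_img(image_path, target_size=(224, 224))
    batch = np.expand_dims(img_to_array(img), axis=0)
    predictions = model.predict(preprocess_input(batch))
    return top_labels(decode_predictions(predictions, top=top)[0])


# Example call ("street.jpg" is a placeholder path, not a file from this post):
# print(classify_image("street.jpg"))
```

The function returns the top few ImageNet labels with their scores, so the same call works for both outdoor and indoor photos as long as the objects belong to one of the ImageNet classes.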

Once we're done with the simple image classification problem, the next step will be to generate full sentences based on those objects and their relations. The ultimate aim is to take an image of a scene and give it a caption that describes it sufficiently. This residual network can potentially be the first stage of our captioning system: it takes an image as input and passes its outputs to a long short-term memory (LSTM) network, which will establish the relationships between the detected objects.

Thank you for reading this blog. The next blog will be up soon!
