Introduction

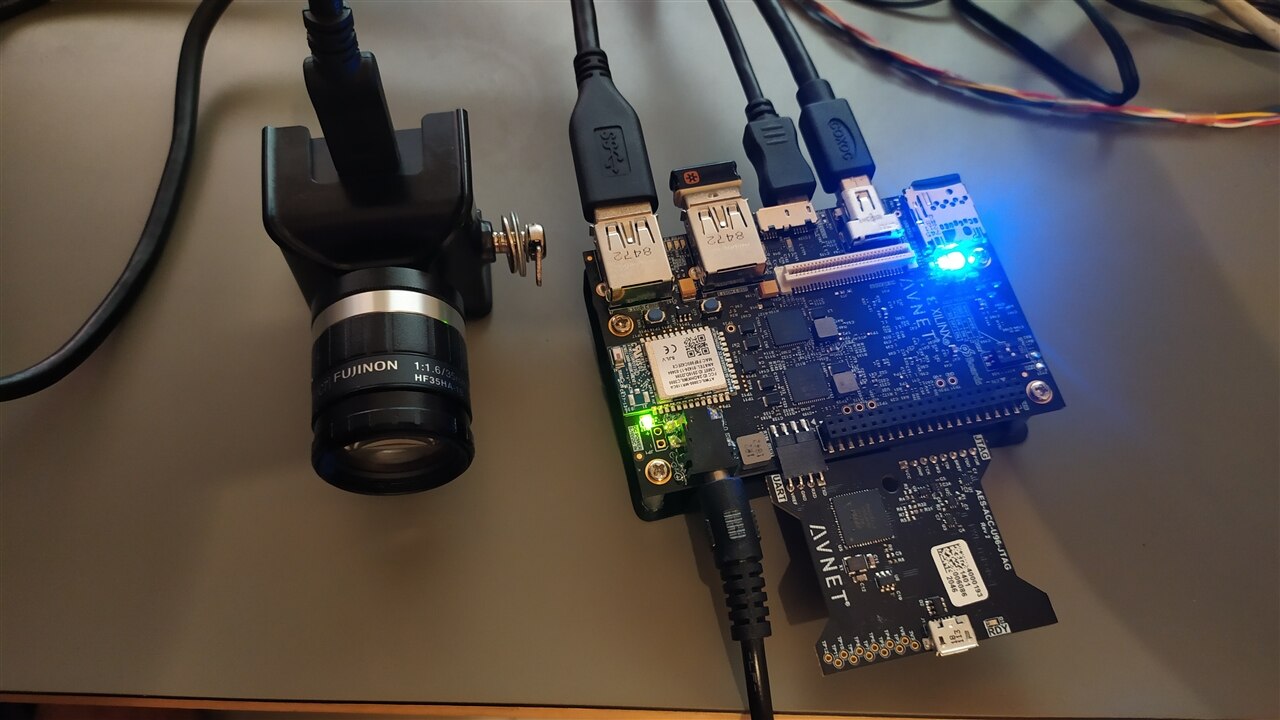

For my Path to Programmable project, I have been planning to work on something that involves live video processing. For this reason, I applied for the Ultra96-v2 board: the Zynq UltraScale+ ZU3EG device on it has quad-core Arm Cortex-A53 "application processors", which are fairly capable and much better suited to these kinds of applications than the dual Cortex-A9 application processors in the Zynq-7000 series. Naturally, I have been using this opportunity to explore the options available to me. One that I have come across, and which seems very promising, is PYNQ, so I want to explore it further.

Why PYNQ

For me, the main attraction in PYNQ is that it promises ease of use.

Generally speaking, FPGAs promise to give developers a lot of "power" to build applications that are otherwise not possible with microcontrollers and general-purpose processors. However, this usually comes at the cost of increased development effort and time, especially for more complex applications. I have developed some "bare-metal" applications in the past, which is a decent option for many simpler applications. However, as complexity increases, things get harder and you eventually HAVE to move to Linux. For many people, myself included, Linux can feel overwhelming.

Enter PYNQ: PYNQ brings Python's simplicity to FPGA development, making it easier for developers to unleash the FPGA's potential without complex hardware description languages (or low-level application code). It runs on Linux but provides a familiar Jupyter-based environment on top of it, which feels more welcoming to many of us! There is also a vibrant community around PYNQ, with many people willing to help and to share their own creations. All of this helps when you're learning.

Another thing I am coming to appreciate about PYNQ is that it is very good for prototyping ideas. For example, if you have an idea for a cool image processing algorithm that you want to try out on the FPGA, PYNQ lets you do it in a matter of minutes to hours instead of days to weeks (or months!). Then, once the algorithm shows promise, you can identify the bottlenecks and accelerate the most time-consuming parts in programmable logic.
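As a rough illustration of the "find the bottleneck first" step, here is a pure-Python sketch that times each stage of a toy image pipeline with `time.perf_counter`. The stage functions and the synthetic frame are hypothetical stand-ins for a real algorithm and real camera frames; the point is only the measuring pattern.

```python
import time
from collections import defaultdict


def profile_pipeline(stages, data, repeats=10):
    """Run each (name, fn) stage in order on `data`, timing every call.

    Returns a dict mapping stage name to average seconds per call.
    """
    totals = defaultdict(float)
    for _ in range(repeats):
        x = data
        for name, fn in stages:
            start = time.perf_counter()
            x = fn(x)
            totals[name] += time.perf_counter() - start
    return {name: total / repeats for name, total in totals.items()}


# Hypothetical stages operating on a grayscale image stored as a list of rows.
def invert(img):
    return [[255 - p for p in row] for row in img]


def box_blur(img):
    # 3x3 mean filter in pure Python: deliberately slow, a typical hotspot.
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc, n = 0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += img[yy][xx]
                        n += 1
            out[y][x] = acc // n
    return out


def threshold(img, level=128):
    return [[255 if p >= level else 0 for p in row] for row in img]


if __name__ == "__main__":
    # Synthetic 120x160 8-bit "frame" standing in for a camera capture.
    frame = [[(x * y) % 256 for x in range(160)] for y in range(120)]
    stages = [("invert", invert), ("box_blur", box_blur), ("threshold", threshold)]
    timings = profile_pipeline(stages, frame, repeats=3)
    # Slowest stage first: that is the candidate for programmable logic.
    for name, secs in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:10s} {secs * 1000:8.2f} ms")
```

Sorting by average time points at the stage most worth offloading; for real workloads you would profile with the actual frames and algorithm rather than these toy stages.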

Running PYNQ on Ultra96-v2

This topic is covered very nicely by the Getting Started guide on the PYNQ site, so I won't repeat that information here. The page I used is:

Getting Started — Ultra96-PYNQ v2.6 documentation

The page says "Ultra96" rather than "Ultra96-v2", but the information is still valid for the Ultra96-v2, apart from some minor differences such as the new position of the power button.

In my case I managed to access the board in 3 ways:

- From the host computer: the board appears as a network adapter on the host, so it can be accessed from a browser on that computer

- Over Wi-Fi

- Using a USB mouse/keyboard and a monitor connected directly to the DisplayPort output

Details on connecting with each of these three methods are given on the Getting Started page linked above. Overall, I found the process smooth and easy: it took me less than an hour to get up and running and start exploring the example notebooks.

Exploring Examples

The basic example notebooks are very useful for getting started and give you the boilerplate code for many starter applications. I went through them all and learnt many useful things.

Since I am interested above all in image/video processing on the Ultra96, I found a few notebooks particularly useful. I am using a USB camera from e-con Systems, the "See3CAM_CU30_CHL_TX_BX", to capture images and video, and the usb_webcam.ipynb notebook helped me get started in no time. The display_port_introduction.ipynb notebook helped me display the captured/processed images directly on the connected screen (which had speed advantages). In fact, I reused some of the code from these notebooks in my subsequent experiments.

Adding OpenCV to PYNQ

As I mentioned before, I was particularly interested in notebooks related to image and video processing.

Luckily, I found a project called "PYNQ - Computer Vision" that looked promising for this. It is maintained by Xilinx (now AMD) itself and, best of all, it is open source, with all the code available on GitHub.

https://github.com/Xilinx/PYNQ-ComputerVision

Installing it was easy. I opened a terminal in PYNQ (on the top-right of the Jupyter main page, click "New" -> "Terminal").

Then type the following command in the terminal:

sudo pip3 install --upgrade git+https://github.com/Xilinx/PYNQ-ComputerVision.git

The process took only a few minutes to complete.

Once it had completed, I saw a new folder on my PYNQ main page.

Inside the folder I found several very interesting notebooks:

Of these examples, I was able to run some, but not all. In particular, I could not run the (3a) stereo block matching in hardware, because it reported that the blob was not found. I encountered the same issue with the optical flow example.
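When a notebook complains that something was not found, one quick check is to list which bitstreams are actually on disk. The sketch below walks a folder passed on the command line (the package's install location is not assumed) and, for each `.bit` file, reports whether a same-named `.hwh` or `.tcl` file sits beside it. That pairing reflects my understanding of how PYNQ overlays are packaged (newer releases read the `.hwh`, older ones used `.tcl`), so treat it as an assumption; the script only shows what exists, not why the notebook fails.

```python
import sys
from pathlib import Path


def overlay_report(folder):
    """Map every .bit file below `folder` to the metadata files found next to it.

    Assumption: an overlay's metadata lives in a same-named .hwh (newer PYNQ)
    or .tcl (older PYNQ) file in the same directory as the bitstream.
    """
    report = {}
    for bit in sorted(Path(folder).rglob("*.bit")):
        report[bit] = {ext: bit.with_suffix(ext).is_file() for ext in (".hwh", ".tcl")}
    return report


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    found = overlay_report(root)
    if not found:
        print(f"No .bit files under {root}")
    for bit, sidecars in found.items():
        present = [ext for ext, ok in sidecars.items() if ok] or ["none"]
        print(f"{bit}: metadata {', '.join(present)}")
```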

I tried to dig further into this, and so far the only lead I have found is that OpenCV-for-PYNQ was last built with the 2018.3 Xilinx tools, which are more than 4 years old, while I am using PYNQ v3.0.1. I believe I will need to rebuild the OpenCV-for-PYNQ overlays with the tool version that PYNQ v3.0.1 is built against to get them working again. I will look more into how to accomplish this.
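Since the suspected cause is a version mismatch, it helps to record exactly what is installed on the board before rebuilding anything. A minimal sketch using the standard library's `importlib.metadata` (Python 3.8+): it defaults to the `pynq` distribution, and any other names (for example, whatever `pip3 list` shows for the computer-vision package) are passed on the command line rather than assumed here.

```python
import sys
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Default to the PYNQ framework itself; pass other distribution names
    # (as listed by `pip3 list`) as command-line arguments.
    names = sys.argv[1:] or ["pynq"]
    for name in names:
        print(f"{name}: {installed_version(name) or 'not installed'}")
```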

Next Steps

The GitHub page gives a guide on how to compile your own PYNQ "overlays", which is what I am most interested in.

However, it also mentions that only the 2018.3 tools are supported for building, so I will need to work out how to do the same with the latest tools instead. I do hope OpenCV support for Xilinx devices is maintained more actively so that it can deliver on its promise of convenience for everyone.

If any readers have managed to get the accelerated OpenCV overlays working, please leave a comment below. I would be interested to know how.

Thanks for reading. I hope to share more on this as I learn more!
