Hi all,

To apply the model, we tried to follow the Arduino guide on image classification with Edge Impulse.

And, as usual in engineering, we ran into some challenges.

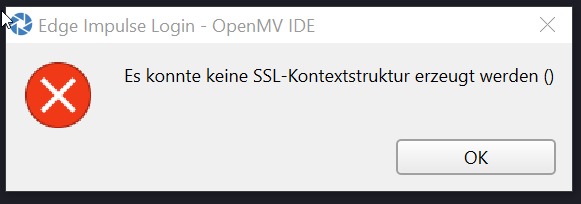

The first one was uploading pictures with the IDE:

But do not worry if you cannot log in to your Edge Impulse account from the IDE: there is already a ticket open to get it fixed.

The cause might simply be an outdated version of OpenSSL.

The error message looks like the one in the screenshot, but the good news is that we do not need this feature.

Alternatively, you can upload the data via the web browser.

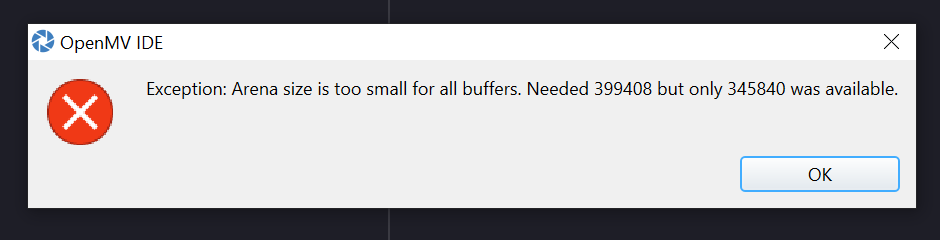

The second issue was memory:

The screenshots of the trained model that we showed earlier had a tiny issue that we missed: we completely forgot about memory.

As it turns out, the model needs 2.5 MB of RAM at peak, which we do not have: we only have access to 1 MB of RAM.

Both the model and the picture to analyse have to fit into that. So it was back to training the model at a lower resolution, so that we could run it on the Nicla board.
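To get a feeling for why the resolution matters so much, here is a rough sketch of the raw frame-buffer arithmetic. It only counts the bytes of the image itself (width × height × bytes per pixel); the model's own peak RAM, as reported by Edge Impulse, comes on top of that. The 1 MB budget is from above, while the helper names and the list of resolutions are just illustrative assumptions, not our actual model input size.

```python
# Rough frame-buffer arithmetic: bytes needed to hold one image in RAM.
# Only the image itself is counted; the model's own peak RAM comes on top.

RAM_BUDGET_BYTES = 1024 * 1024  # the ~1 MB of RAM mentioned above

# Bytes per pixel for the pixel formats discussed in this post
BYTES_PER_PIXEL = {
    "RGB565": 2,     # camera frame format used in our script
    "RGB888": 3,     # three 8-bit colour channels
    "GRAYSCALE": 1,  # one 8-bit channel
}


def frame_bytes(width, height, pixformat):
    """Return the size in bytes of one width x height frame (hypothetical helper)."""
    return width * height * BYTES_PER_PIXEL[pixformat]


def share_of_budget(nbytes, budget=RAM_BUDGET_BYTES):
    """Fraction of the RAM budget consumed by nbytes."""
    return nbytes / budget


if __name__ == "__main__":
    # Illustrative resolutions only
    for w, h in [(320, 240), (240, 240), (160, 160), (96, 96)]:
        n = frame_bytes(w, h, "RGB565")
        print(f"{w}x{h} RGB565: {n} bytes ({share_of_budget(n):.1%} of 1 MB)")
```

Halving the width and height cuts the image buffer to a quarter, which is why lowering the resolution was the first lever we pulled.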

The result was a slightly worse detection rate, but no more crashes in the OpenMV IDE.

We also forked the OpenMV project on GitHub to include the trained model and build our own firmware.

After digging around on the Edge Impulse platform, we found that we would not have needed this extra step: you can download the binary file directly from the website.

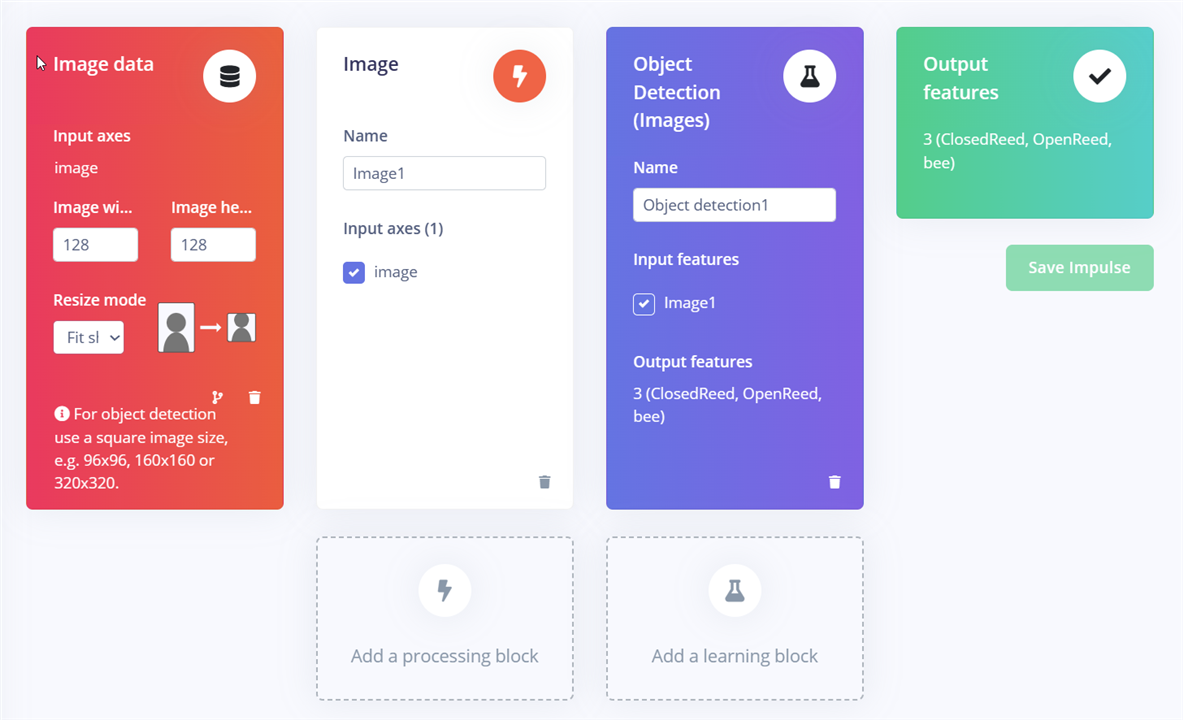

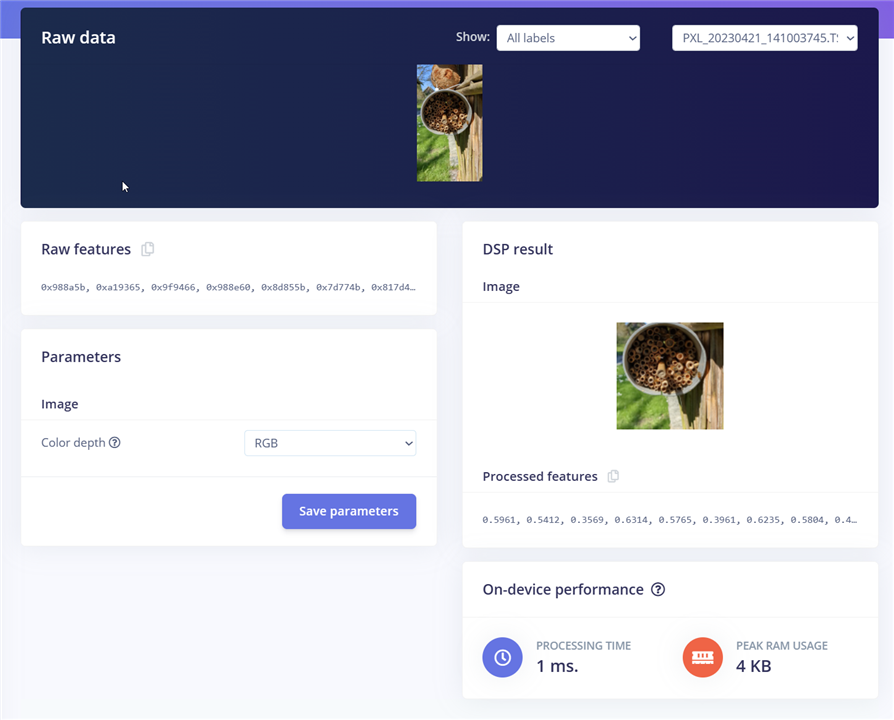

So here is the image data setup:

with the RGB color profile:

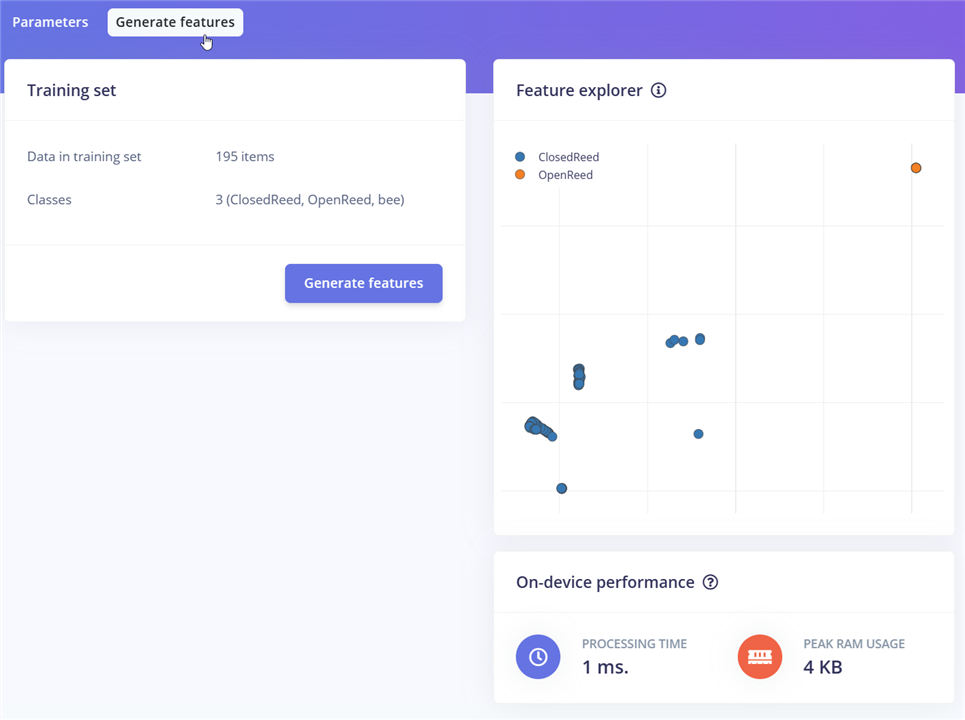

We ran the feature generation several times:

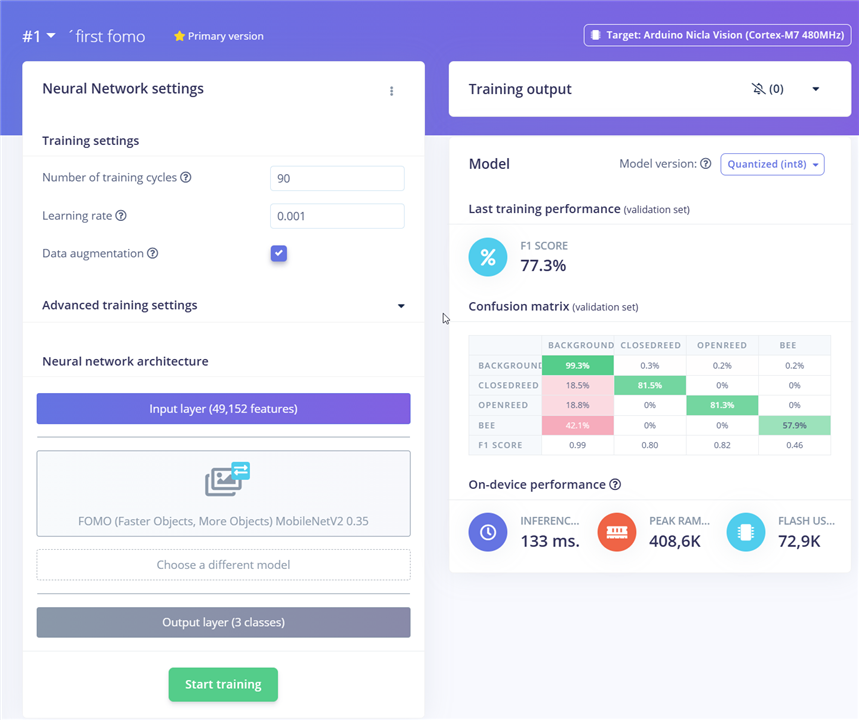

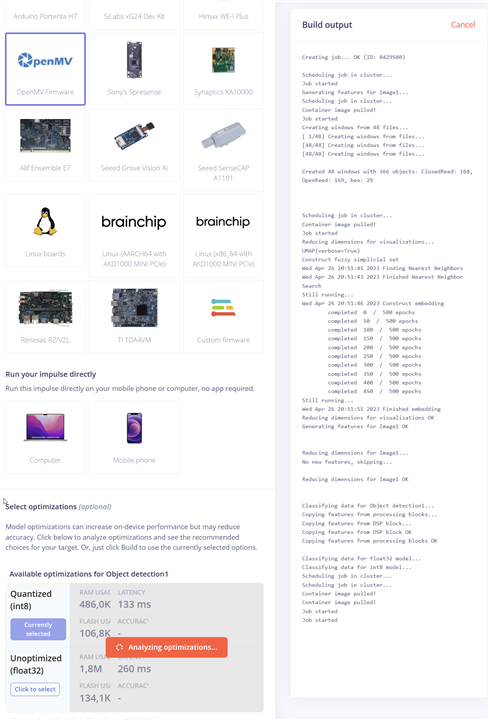

And finally, a model that fits into the RAM even at peak usage:

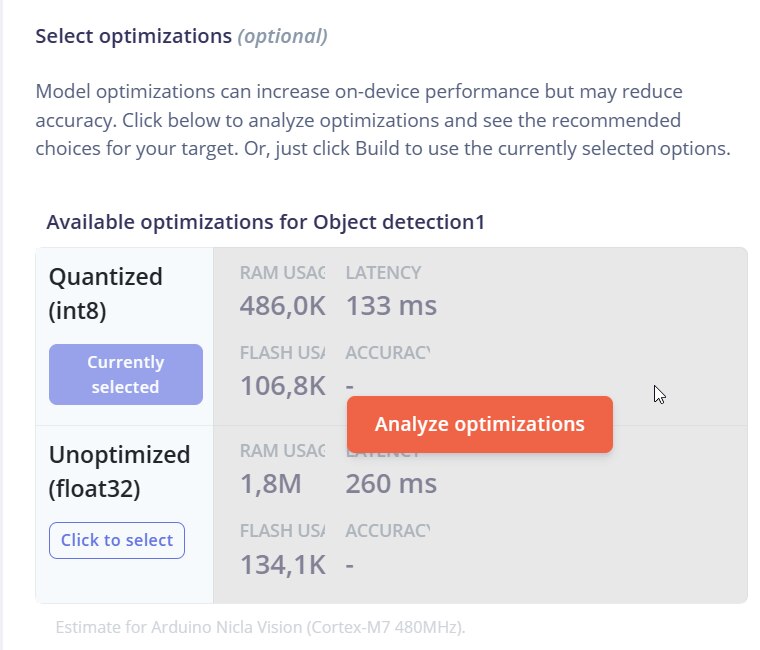

The solution can be optimized, which is what we went for:

Then we selected OpenMV as the export target:

The export can be found here (in case I am not allowed to share it, I will remove it):

8372.dumo-project-1-open-mv-fw-v13_latest.zip

It would be great if the export also documented how the model was generated.

We also tried a grayscale model to reduce RAM usage, since RGB needs three 8-bit channels per pixel instead of one. However, we did not get great results with it.
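In numbers: an RGB image needs three bytes per pixel and a grayscale image only one, so the input buffer shrinks by a factor of three. The sketch below converts RGB888 pixels to 8-bit grayscale using the common integer approximation of ITU-R BT.601 luma, `Y = (77*R + 150*G + 29*B) >> 8`. That formula is our assumption for illustration; it is not necessarily what Edge Impulse or OpenMV use internally, and the 96x96 size is hypothetical.

```python
# Convert RGB888 pixels to 8-bit grayscale and compare buffer sizes.
# ASSUMPTION: integer BT.601 luma approximation Y = (77*R + 150*G + 29*B) >> 8.
# The weights sum to 256, so pure white (255, 255, 255) stays 255.


def rgb_to_gray(pixels):
    """pixels: iterable of (r, g, b) tuples with 0..255 values -> bytes of luma."""
    return bytes((77 * r + 150 * g + 29 * b) >> 8 for r, g, b in pixels)


def rgb_bytes(num_pixels):
    return num_pixels * 3  # three 8-bit channels per pixel


def gray_bytes(num_pixels):
    return num_pixels * 1  # one 8-bit channel per pixel


if __name__ == "__main__":
    sample = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
    print(list(rgb_to_gray(sample)))
    n = 96 * 96  # hypothetical model input size
    print(rgb_bytes(n), "bytes RGB vs", gray_bytes(n), "bytes grayscale")
```

Note how pure red, green and blue collapse to quite different gray levels, while the colour information itself is gone.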

Here is the code:

```python
# Edge Impulse - OpenMV Object Detection Example
# (adapted: counts closed reeds, open reeds and bees in every frame)

import sensor, image, time, os, tf, math, uos, gc

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.B128X128)  # Set frame size to 128x128
sensor.set_windowing((160, 160))       # Set 160x160 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

min_confidence = 0.9

try:
    # Load the model that was built into our custom firmware
    labels, net = tf.load_builtin_model('trained')
except Exception as e:
    raise Exception('Failed to load built-in model: ' + str(e))

colors = [  # Add more colors if you are detecting more than 7 types of classes at once.
    (255,   0,   0),
    (  0, 255,   0),
    (255, 255,   0),
    (  0,   0, 255),
    (255,   0, 255),
    (  0, 255, 255),
    (255, 255, 255),
]

# Classes we count; the summary is printed in this order
COUNTED = ('ClosedReed', 'OpenReed', 'bee')

clock = time.clock()

while True:
    clock.tick()
    img = sensor.snapshot()
    thetime = time.localtime()
    counts = {name: 0 for name in COUNTED}

    # detect() returns all objects found in the image (already split per class).
    # We skip class index 0, as that is the background, and then draw circles
    # at the centers of our objects.
    for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
        if i == 0:
            continue  # background class
        if len(detection_list) == 0:
            continue  # no detections for this class
        print("%s are here:" % labels[i])
        if labels[i] not in counts:
            continue  # only count and mark the classes we care about
        for d in detection_list:
            counts[labels[i]] += 1
            x, y, w, h = d.rect()
            center_x = math.floor(x + (w / 2))
            center_y = math.floor(y + (h / 2))
            print('x %d\ty %d' % (center_x, center_y))
            img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

    # Print a timestamped summary whenever anything was detected
    if any(counts.values()):
        print(thetime)
    for name in COUNTED:
        if counts[name] > 0:
            print(name + ':', counts[name])
```
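Two small pieces of arithmetic in the script can be checked on a PC without the board: how `min_confidence` becomes the 8-bit `(low, high)` threshold tuple passed to `net.detect()`, and how the circle centre is computed from a bounding box. The sketch below mirrors those two lines in plain Python; the helper names are hypothetical, and the `(x, y, w, h)` tuple stands in for what `d.rect()` returns.

```python
import math


def confidence_to_threshold(min_confidence):
    """Map a 0..1 confidence to the 8-bit (low, high) threshold used in the script."""
    return (math.ceil(min_confidence * 255), 255)


def box_center(rect):
    """Centre of an (x, y, w, h) box, rounded down as in the script."""
    x, y, w, h = rect
    return (math.floor(x + (w / 2)), math.floor(y + (h / 2)))


if __name__ == "__main__":
    print(confidence_to_threshold(0.9))  # threshold for min_confidence = 0.9
    print(box_center((80, 84, 24, 16)))  # hypothetical bounding box
```

With `min_confidence = 0.9`, only heat-map values of 230 and above count as a detection, which is quite strict.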

EXCITING NEWS:

Now to the good part: we are able to detect closed reeds and distinguish them from open ones fairly reliably.

If the LoRa network were connected, we would send the count of closed reeds over to the MKR board, telling the biologists or bee researchers whether it is worth driving out to the remote site to pick up the nest with the filled reeds.
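Since LoRa payloads are small, the counts could be packed into a few bytes before handing them over to the MKR board. This is a hypothetical sketch: the 4-byte layout (a 1-byte version followed by three 1-byte counters for closed reeds, open reeds and bees) is our own assumption, not an existing protocol, and the link between the Nicla and the MKR board is not shown.

```python
import struct

# HYPOTHETICAL payload layout: version, ClosedReed, OpenReed, bee (1 byte each)
PAYLOAD_FORMAT = "<BBBB"
PAYLOAD_VERSION = 1


def clamp_byte(n):
    """Keep a counter within the 0..255 range of one unsigned byte."""
    return max(0, min(255, n))


def pack_counts(closed_reed, open_reed, bee):
    """Pack the per-frame counts into a 4-byte payload."""
    return struct.pack(PAYLOAD_FORMAT, PAYLOAD_VERSION,
                       clamp_byte(closed_reed), clamp_byte(open_reed), clamp_byte(bee))


def unpack_counts(payload):
    """Inverse of pack_counts, e.g. for the receiving side."""
    version, closed_reed, open_reed, bee = struct.unpack(PAYLOAD_FORMAT, payload)
    return {"version": version, "ClosedReed": closed_reed,
            "OpenReed": open_reed, "bee": bee}
```

A version byte up front leaves room to change the layout later without confusing the receiver.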

Video snippet of the model running:

Here is the terminal output showing what has been detected:

```
(2000, 1, 1, 2, 33, 42, 0, 1)
ClosedReed: 1
OpenReed: 1
OpenReed are here:
x 44 y 28
x 92 y 100
bee are here:
x 92 y 68
(2000, 1, 1, 2, 33, 42, 0, 1)
OpenReed: 2
bee: 1
bee are here:
x 68 y 84
(2000, 1, 1, 2, 33, 42, 0, 1)
bee: 1
ClosedReed are here:
x 92 y 92
```

With this detection model running, the power consumption went up quite a bit (roughly a factor of three compared to the stock firmware):

148 mA @ 5 V = ~740 mW

For detecting closed reeds, it would be good enough to wake the board up every 15 minutes and evaluate the situation.
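To see why waking up every 15 minutes helps, here is a back-of-the-envelope duty-cycle estimate. The active current of 148 mA at 5 V comes from the measurement above; the active time per wake-up and the sleep power are placeholder assumptions that would still need to be measured on the real board.

```python
# Back-of-the-envelope duty-cycle estimate for waking up every 15 minutes.
ACTIVE_CURRENT_A = 0.148   # measured while the detection model runs
SUPPLY_VOLTAGE_V = 5.0
ACTIVE_TIME_S = 10.0       # ASSUMPTION: boot + a few inferences per wake-up
SLEEP_POWER_W = 0.005      # ASSUMPTION: placeholder, must be measured
PERIOD_S = 15 * 60         # wake up every 15 minutes


def average_power_w(active_power_w, active_s, sleep_power_w, period_s):
    """Time-weighted average power over one wake/sleep period."""
    sleep_s = period_s - active_s
    return (active_power_w * active_s + sleep_power_w * sleep_s) / period_s


if __name__ == "__main__":
    active_w = ACTIVE_CURRENT_A * SUPPLY_VOLTAGE_V
    avg_w = average_power_w(active_w, ACTIVE_TIME_S, SLEEP_POWER_W, PERIOD_S)
    print(f"active: {active_w * 1000:.0f} mW, average: {avg_w * 1000:.1f} mW")
    print(f"energy per day: {avg_w * 24:.3f} Wh")
```

With these placeholder numbers, the average is dominated by how long the board stays awake and how low the sleep power really is, so those two values are worth measuring first.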

With this model we get about 7 fps (in the IDE), which might be too slow for detecting bees, since they move quite fast.
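A quick way to reason about the 7 fps: the time between two frames is 1/7 s, about 143 ms, so a fast object can cover a large part of the image between two consecutive frames. The sketch below computes that distance; the speed value is a placeholder assumption, not a measured bee speed.

```python
# How far does an object move between two consecutive frames?
FPS = 7.0  # measured in the OpenMV IDE with this model


def frame_interval_s(fps):
    """Time between two consecutive frames."""
    return 1.0 / fps


def travel_between_frames_m(speed_m_per_s, fps):
    """Distance covered during one frame interval."""
    return speed_m_per_s * frame_interval_s(fps)


if __name__ == "__main__":
    assumed_speed = 1.0  # ASSUMPTION: placeholder speed in m/s
    print(f"frame interval: {frame_interval_s(FPS) * 1000:.0f} ms")
    print(f"travel per frame at {assumed_speed} m/s: "
          f"{travel_between_frames_m(assumed_speed, FPS) * 100:.1f} cm")
```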

Thanks for reading this far! This project has been really exciting and a bit challenging, but we are actually quite happy with the result.

Given that we are very close to the contest deadline, in tomorrow's blog post we will discuss this result a bit further, summarize our findings and set out next steps for the future.

Bye