Up to this point, I haven't had much luck properly identifying my ducks. Mostly my issues have come from random other things being classified as the objects I'm looking for. In my first attempt it was spotting unicorns everywhere, which I think is because I hadn't trained it on what the background looks like. For the second try I included pictures of the background, and it was much more successful at identifying my rubber duckies, but anything else it saw, it decided had to be a duck.

After a lot more reading and working through various examples (none of which seem very complete), I started to suspect that I might be using the wrong kind of base model for my project.

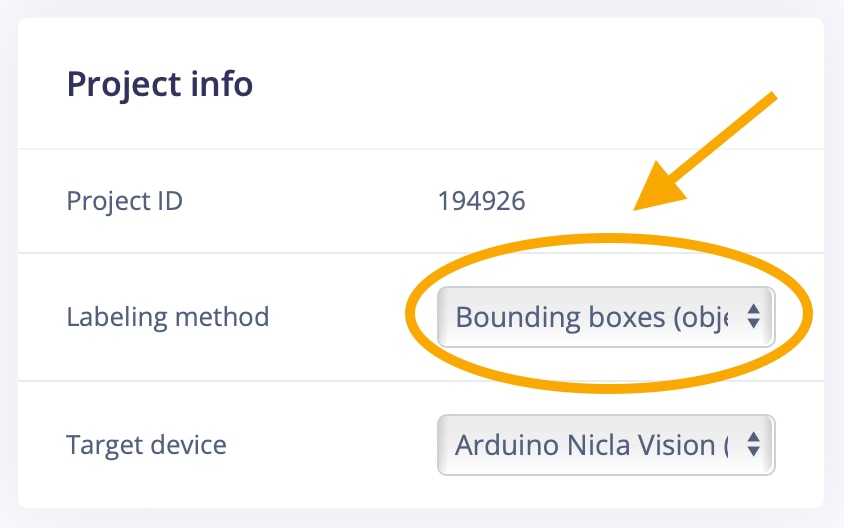

For this try, I decided to use the bounding box method, because it showed up in several of the examples I've been desperately reviewing since my first failed attempts. You can change the labeling method on the Dashboard, on the right side of the screen:

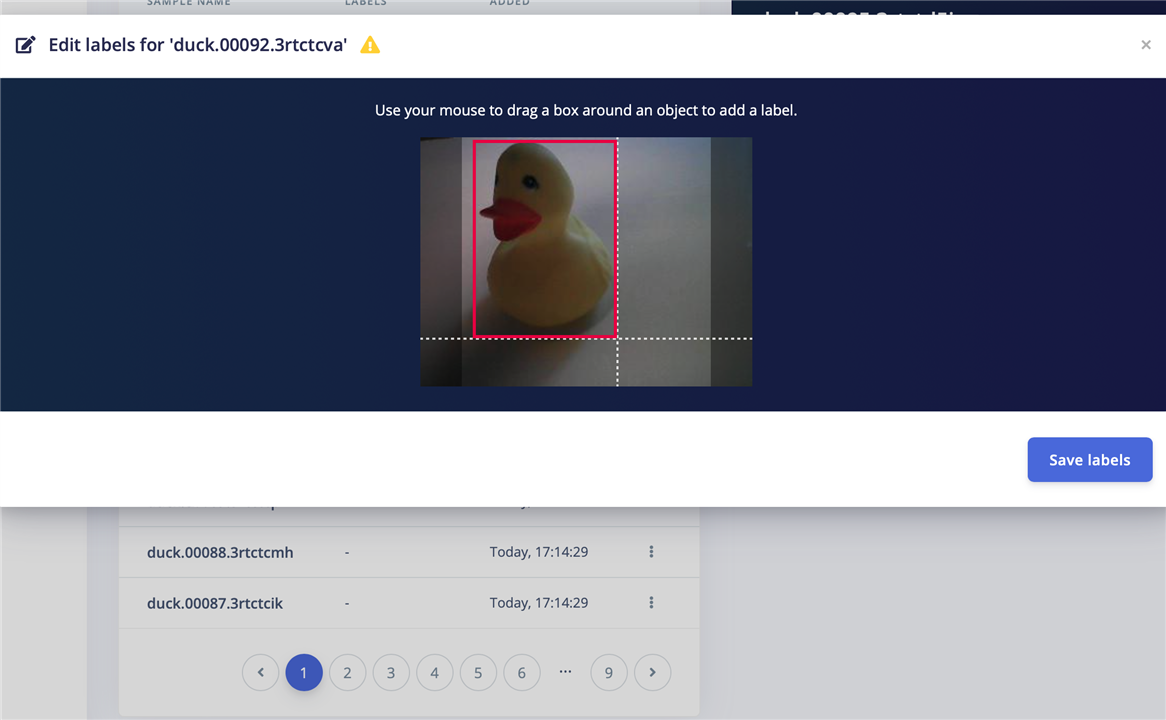

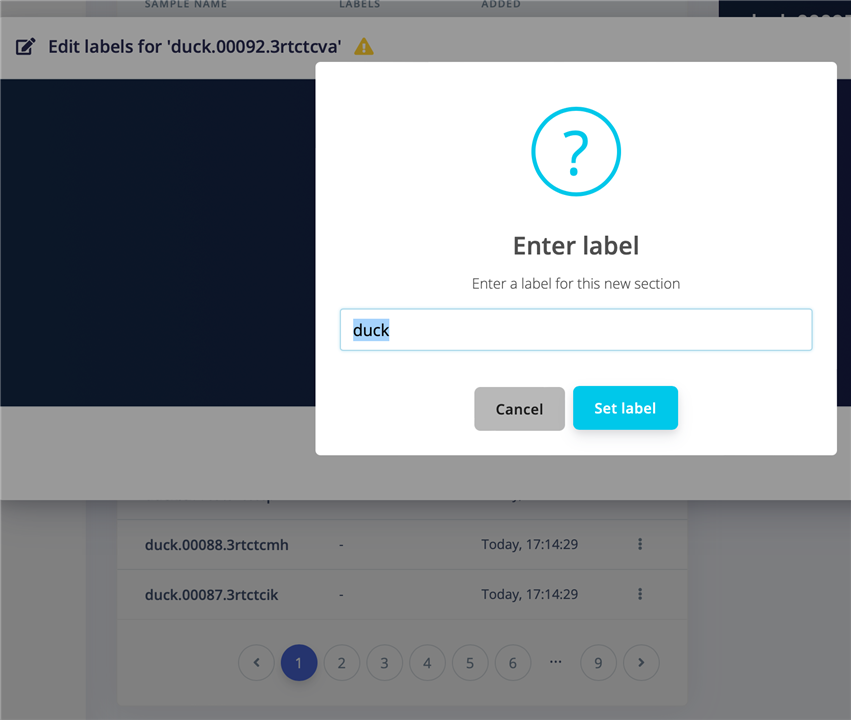

Of course, it could never just be that easy... and indeed, choosing this new method meant having to manually draw boxes around the ducks in every single duck picture.

I removed the background set, as I didn’t think it was needed for this method. That turned out to be a lucky guess. As I half-mentioned before, none of the examples I looked at explained much of this. I may just be too new at this stuff and missing that base level of knowledge.

That bounding box approach seemed like a good idea, so I spent some painful minutes risking repetitive stress injuries while drawing boxes around ducks.

Notice all the page dots on that picture? That was the original set of 100 pictures... and I quickly realized that boxing all of them would hurt a lot, especially if my guess about using this new model turned out to be wrong! I cut the image set down to just 30 to keep it under control.

Thankfully, it remembers the label, so it's easy to reuse on the next duck. And the next duck... and the next duck...

This really feels like something that could be automated, doesn't it? Just assume the blob of stuff on the otherwise uniform background is the item. That would reduce the manual labour to tweaks here and there. I could've sworn I've seen machine vision examples that do exactly that, just not in a way that outputs useful data for this ML model.
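Here's roughly what I mean, as a minimal sketch: find every pixel that differs noticeably from the background and draw one box around all of them. It assumes a grayscale image given as a list of rows, a known background brightness, and exactly one object per picture. The `auto_bounding_box` function and its `tolerance` value are made up for illustration; they aren't part of Edge Impulse.

```python
# Hypothetical helper: find the bounding box of "the blob of stuff" on a
# uniform background. Assumes a grayscale image as a list of rows, a known
# background brightness, and exactly one object per picture.

def auto_bounding_box(image, background, tolerance=30):
    """Return (x_min, y_min, x_max, y_max) of all pixels that differ from
    the background by more than `tolerance`, or None if nothing stands out."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if abs(value - background) > tolerance:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # nothing but background in this picture
    return min(xs), min(ys), max(xs), max(ys)


# A tiny 6x5 "photo": plain white desk (255) with a darker duck-ish blob.
photo = [
    [255, 255, 255, 255, 255, 255],
    [255, 255, 90, 100, 255, 255],
    [255, 80, 85, 95, 255, 255],
    [255, 255, 70, 255, 255, 255],
    [255, 255, 255, 255, 255, 255],
]
print(auto_bounding_box(photo, background=255))  # → (1, 1, 3, 3)
```

On real photos, shadows and sensor noise would probably stretch the box, so it would still need those tweaks, and more than one duck per picture would need a proper blob (connected-component) search instead of one box around everything.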

Anyhow, after a while I got all the ducks in their little boxes and I carried on with the next steps of training the model and all that.

However, when I got to the Transfer Learning section, I got this error message in Edge Impulse:

> Failed to start job: Your labeling method is set to "Bounding boxes (Object detection)", but you're trying to train a non-object detection model. Either change the labeling method (on **Dashboard**), or remove this learn block and add an 'Object detection' block under **Create impulse**.

It took me a while to figure out what was going on, as I didn’t see any other options in the list of models there. The error message does give the right hint, but I wasn't familiar enough with Edge Impulse yet to know where to look. It made sense once I figured it out. Learning curve! Yay!

Turns out, to fix this I had to go back to “Create impulse”, delete the purple learning block, and add a new one, which then automatically offered the correct block for the bounding box method. A warning indicator on that block would have been helpful: Edge Impulse clearly knew it was wrong, it just didn't show it there.

Then when I went back to Object Detection, it showed me a whole new set of models to choose from. That was encouraging.

I chose FOMO MobileNetV2 0.1 to match the MobileNetV2 0.1 we had used in my earlier attempts, and also because I was afraid of accidentally choosing the wrong model and missing out.

Here is one of the examples that kind of helped me figure that out: https://docs.edgeimpulse.com/docs/tutorials/object-detection

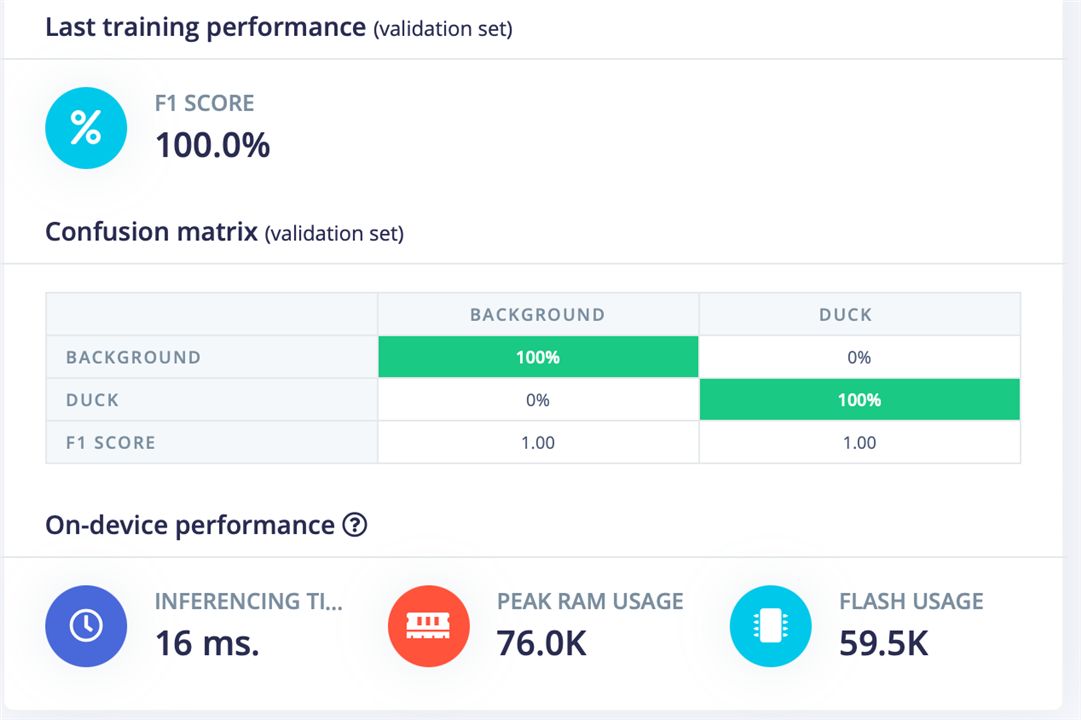

The training results looked really promising this time: a score of 100%, plus a result for background too, which I’m guessing it derived from the parts of the images I didn’t put boxes around.
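My guess about where that background result comes from can be sketched like this. As far as I understand it, FOMO doesn't really predict boxes: it splits the image into a coarse grid and classifies each cell, so every cell that isn't marked as a duck simply counts as background. This is a hypothetical sketch, not Edge Impulse's actual training code; the 96x96 input, the 8-pixel cells (a 12x12 grid), the rule that a box's label goes to the cell holding its centre, and the `boxes_to_grid` name are all my assumptions.

```python
# Hypothetical sketch: turn bounding boxes into per-cell labels for a
# FOMO-style grid model. Assumed values: 96x96 input and 8x8-pixel cells
# (a 12x12 grid). Not Edge Impulse's actual implementation.

BACKGROUND = "background"

def boxes_to_grid(boxes, image_size=96, cell_size=8):
    """boxes: list of (label, x, y, width, height) in pixels.
    Returns a grid (list of rows) of labels, defaulting to background."""
    cells = image_size // cell_size
    grid = [[BACKGROUND] * cells for _ in range(cells)]
    for label, x, y, w, h in boxes:
        # Centre of the box, converted to a grid cell index (clamped to the grid).
        col = min(int((x + w / 2) // cell_size), cells - 1)
        row = min(int((y + h / 2) // cell_size), cells - 1)
        grid[row][col] = label
    return grid


grid = boxes_to_grid([("duck", 10, 20, 24, 24), ("duck", 60, 50, 20, 30)])
duck_cells = [(r, c) for r, row in enumerate(grid)
              for c, value in enumerate(row) if value == "duck"]
print(duck_cells)  # → [(4, 2), (8, 8)]
print(sum(value == BACKGROUND for row in grid for value in row))  # → 142
```

In this sketch every cell that no box centre lands in ends up as background, which would explain getting a background result without ever drawing a single box around the background.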

Once again, it was back to Deployment to build the firmware and test it out. This time I decided to use the script that comes with the Edge Impulse download, because I thought I might need it for the bounding boxes to show up properly.

I now suspect that the crash in my very first attempt was random rather than caused by the script, since I've run into it a few more times since then with the other script.

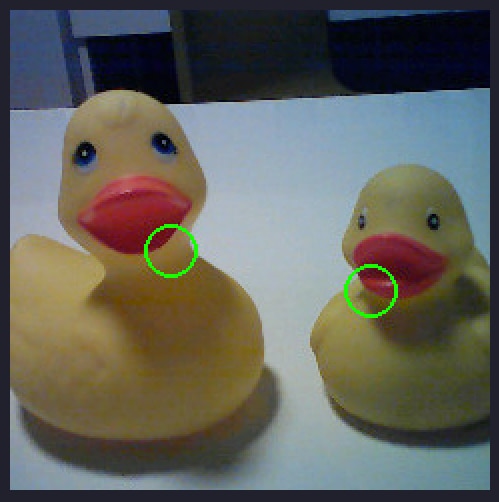

And Hooray! Success!!

Check it out! Two ducks!

It now also puts the markers on the ducks themselves. In my previous attempt it just seemed to put a marker in the centre, only indicating that a duck was somewhere in the picture. So that's another encouraging step in the right direction.

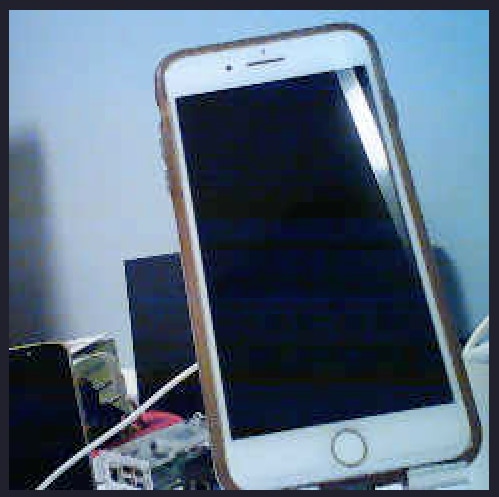

And check this out:

My iPhone is not a duck anymore!

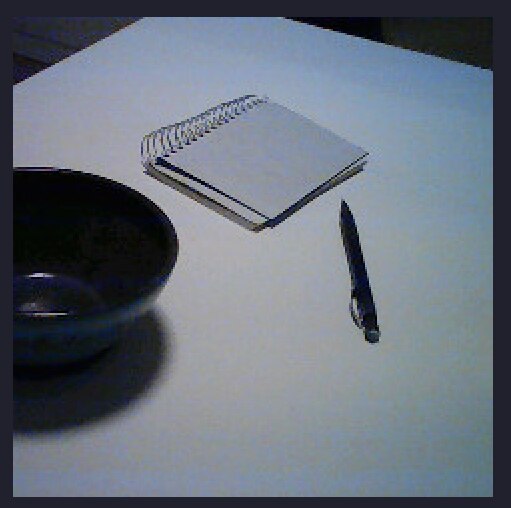

Also no ducks here:

It didn’t give me the rectangles I had hoped for and expected, but it showed the little identification circles properly on the ducks, and my iPhone is no longer a duck! Neither is the rest of the random stuff on my desk! It does have the occasional hiccup where it decides something is too duck-like to ignore, but I can live with that.

I’m calling this a win!

Next step: bees! And a mean hornet or two.

PS: while digging through the examples, I also noticed that the bounding box method is pretty much required if you want to count the objects in a picture. That's something we’ll probably need for counting multiple bees on a blossom.

PPS: I’m not quite sure yet how to avoid counting a single bee multiple times.
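One possible approach I might try, assuming the double counting comes from one bee producing several detections close together in the same frame: merge detections whose centres fall within some radius of each other, then count the groups. This is a simple greedy grouping sketch, not something from Edge Impulse; the (x, y) detection format, the `count_objects` name and the `radius` value are all assumptions. It also doesn't handle the same bee showing up again in later frames, which would need some kind of tracking.

```python
import math

# Hypothetical sketch: count objects by merging nearby detections.
# Each detection is an (x, y) centre in pixels; `radius` is an assumed
# tuning value, roughly the size of one bee in the image.

def count_objects(detections, radius=10.0):
    """Compare each detection with the first detection of every existing
    group; join the first group within `radius`, otherwise start a new
    group. Returns the number of groups."""
    groups = []  # each group is represented by its first detection
    for x, y in detections:
        for gx, gy in groups:
            if math.hypot(x - gx, y - gy) <= radius:
                break  # close to an already counted bee: don't count it again
        else:
            groups.append((x, y))  # far from everything so far: a new bee
    return len(groups)


# Two hits on the same bee (4 px apart) plus one bee further away.
hits = [(20, 30), (24, 30), (70, 65)]
print(count_objects(hits))  # → 2
```

The catch is picking `radius`: too small and one bee still counts twice, too large and two bees sitting next to each other on the blossom merge into one.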