After my failed attempts at making a LoRaWAN connection to The Things Network, I thought I'd try failing at something new next.

So I pulled out the Nicla Vision board and decided to give it a try!

The Nicla Vision board is made by Arduino and available from the element14 stores.

It has a lot of interesting capabilities built into a board not much bigger than a Canadian quarter (which is also the size of a US quarter). For this project, though, we intend to focus on just the camera, together with the processing power, to do some image processing and try to identify objects that come into view. In our case, that will be bees and hornets.

This also means we won’t need to worry about any pinouts or external hardware, as everything we need (and more) is already built onto the board!

I just noticed the board also includes a nice little RGB LED, so we will obviously have to use that, just because it’s there.

This is the first time I’ve ever used a Nicla Vision board, so I decided that the best place to get started with it is to, well, follow the “Getting Started” guide.

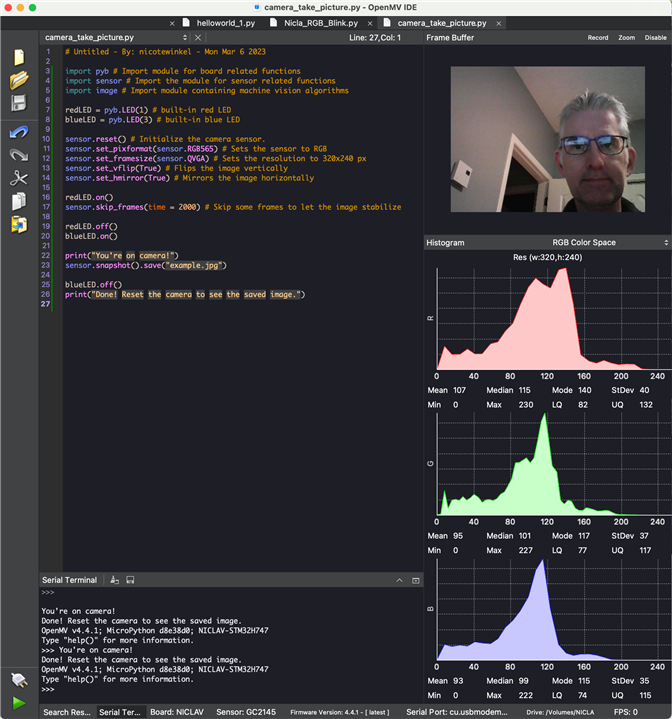

This Getting Started guide shows how to get set up with OpenMV for “simple machine vision tasks using a camera sensor”, which I hope includes spotting bees.

It looks promising though, and their overview includes some reassuring words: “The Nicla Vision has OpenMV firmware on the board by default, making it easy to connect to the OpenMV IDE.”

I’ve never before worked with machine vision, so it’s nice to have this level of guidance to help me get started.

Looking through the examples, I already see all the building blocks that will be needed for the rest of this project, from MQTT via WiFi (since I still can’t get LoRaWAN connected) to setting up object detection models.

One of the examples shows how to use the camera, through OpenMV:

Saving the picture is also very easy, although I think the board is probably quite limited as to how many pictures it can hold.

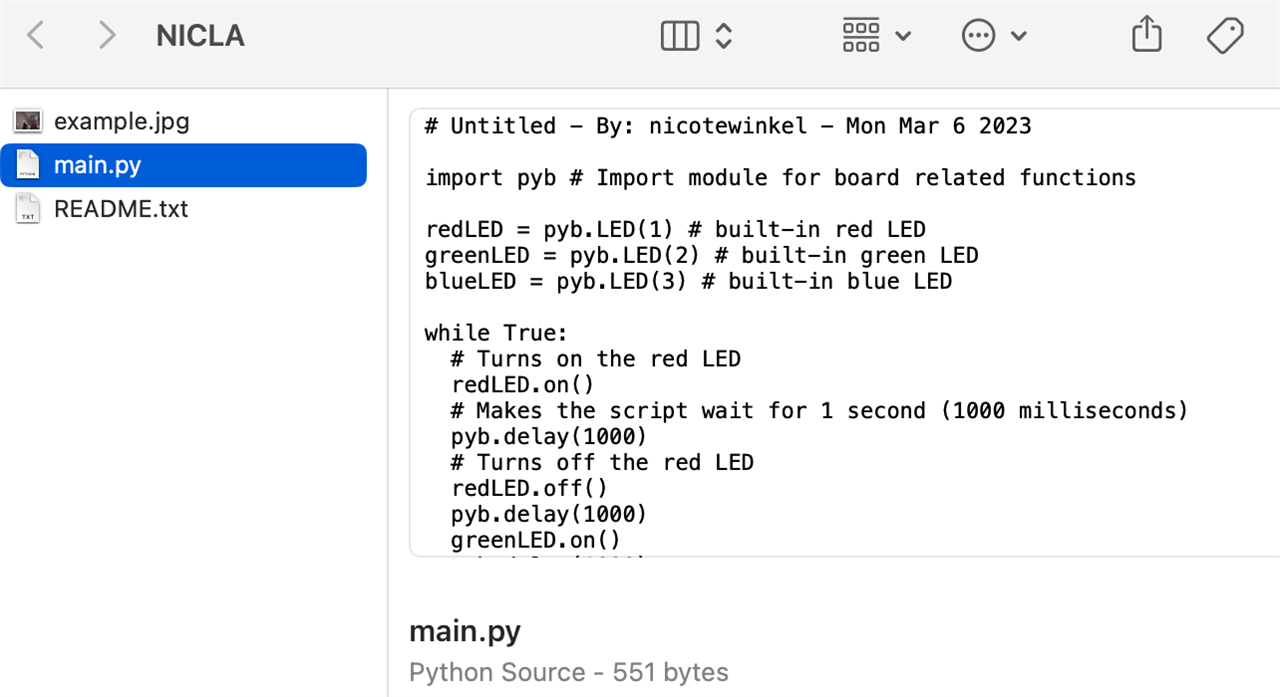

But as I said, it's nice and easy: the drive mounts automatically. I renamed it from "no name", but that was a waste of time, as the name resets every time you re-flash the firmware.

And then, from the drive you can just copy the picture by dragging and dropping:

If you want your own code to run automatically when the board starts up, you simply have to name it "main.py" on that same drive. Nice and easy!
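For example, a minimal `main.py` could just blink the RGB LED at power-up. This is only a sketch: I'm assuming the OpenMV firmware exposes the LEDs through `pyb.LED` (1 = red, 2 = green, 3 = blue), and `blink` is a helper name I made up. The `try`/`except` is there so the same file can be dry-run on a regular computer, where `pyb` doesn't exist.

```python
import time


def blink(led, times=3, delay_s=0.2):
    """Turn the given LED on and off `times` times."""
    for _ in range(times):
        led.on()
        time.sleep(delay_s)
        led.off()
        time.sleep(delay_s)


try:
    import pyb  # only available on the board (assumed OpenMV firmware module)
except ImportError:
    pyb = None  # running on a desktop: nothing to blink

if pyb is not None:
    blink(pyb.LED(3))  # assumption: 3 is the blue channel of the RGB LED
```

Keeping the blinking in a function that takes the LED as an argument means it works with any object that has `on()` and `off()` methods, which makes it easy to try out without the board plugged in.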

When I first looked for examples, I noticed mentions of OpenMV, Edge Impulse, and TensorFlow, and thought they were separate things. But as I dug deeper for examples of how to train a model on my own images, I saw that they all work together.

Checking out this example brought it all together.

“This tutorial teaches you how to train a custom machine learning model with Edge Impulse® and to do image classification on the Arduino Nicla Vision. The Machine Learning (ML) model will use the TensorFlow Lite format and the classification example will run on OpenMV.”

All the buzzwords in one sentence! This feels like a giant leap for this humble little hobbyist. It’s pretty exciting, actually, as it breaks down several of the first-step hurdles that have kept me from exploring this kind of functionality in the past.

First, I needed to get all of my ducks in a row, with a few unicorns thrown in:

Turns out I had more rubber duckies, so I didn't get ALL of my ducks in a row... maybe that explains the problems I ran into later...

One of the first things needed is an Edge Impulse account. Thankfully, it is “Free for individual developers”.

The Arduino tutorial mentioned above will step you through everything needed to get things set up. However, it seems to be outdated at the point where the ML model gets deployed, under the heading “Deploy”. After choosing “build firmware”, you don’t actually need to follow the next steps involving GitHub, as “build firmware” builds the proper bin file for you. The rest of the steps appear to assume that you chose to build a library (as the image there does show).

Instead, after exporting the ML model to your computer and unzipping it, follow the instructions from Edge Impulse.

Basically, you will be installing the file “edge_impulse_firmware_arduino_nicla_vision.bin” onto the Nicla Vision board using the OpenMV IDE, by clicking “Tools” > “Run Bootloader (Load Firmware)”. All the other bin files are for other boards, and can be ignored or deleted.

Note that if you only export a library and then load the .tflite file from the filesystem, as shown in the face detection sample, the board will likely run out of memory. In my case, with only 45 pictures, the model was just shy of fitting in memory. So it has to be done as a firmware update that includes the ML model.

The next message will say the firmware is out of date, but just ignore that, and leave “remind me again” unchecked so you can easily revert to the general firmware if desired.

After updating the firmware like that, DO NOT use the ei_image_classification.py Python script that was part of the downloaded zip file (still in OpenMV). At least in my case, it just kept crashing. Instead, I used the tf_object_detection_example.py that’s in the OpenMV examples list, and changed the line that loads the model to use the model named “trained”. You can find the name of your ML model in the Python script that Edge Impulse created, but as far as I can tell it defaults to “trained”.

```python
labels, net = tf.load_builtin_model("trained")
```

As they’ve mentioned a few times in the tutorials, if you haven’t already done this, add these two lines to the script to flip the image so it looks normal when holding the Nicla Vision board with the USB cord under it.

```python
sensor.set_vflip(True)    # Flips the image vertically
sensor.set_hmirror(True)  # Mirrors the image horizontally
```

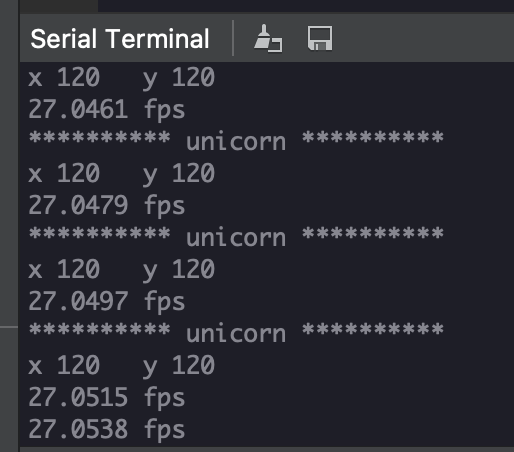

This worked really quite nicely for me, with the minor exception that EVERYTHING is being identified as a unicorn!!

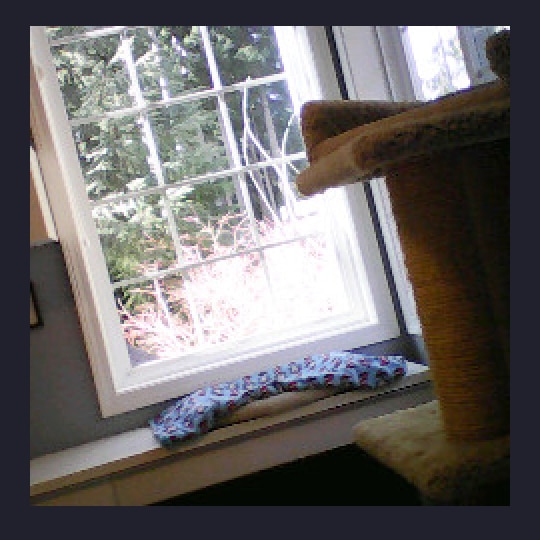

Does this look like a unicorn to you?

Now, it’s entirely possible that I am surrounded by magical unicorns in an overlaid extra dimension that I can’t see, but I have a hunch that I may have missed something important when building the model… I think I read something about needing an extra class for when there’s nothing to identify. Even the example code says this in a comment: “we skip class index 0, as that is the background”.
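To convince myself of that hunch, here's a rough sketch in plain Python (no board needed). The label names, the scores, the 0.8 threshold and the `pick_label` helper are all made up for illustration; they aren't taken from the Edge Impulse or OpenMV code. Assuming the classifier's scores always add up to 1, a model trained on only ducks and unicorns has to crown one of them, even when it's looking at an empty desk. With an extra "background" class and a confidence threshold, it can answer "nothing" instead.

```python
def pick_label(labels, scores, threshold=0.8, background="background"):
    """Return the most likely label, or None when nothing convincing is in view."""
    # Index of the highest score
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    best_label = labels[best_index]
    # Treat the background class, or a weak win, as "nothing detected"
    if best_label == background or scores[best_index] < threshold:
        return None
    return best_label


# Two classes, no threshold: an empty desk still "wins" as something
two_classes = ["duck", "unicorn"]
print(pick_label(two_classes, [0.45, 0.55], threshold=0.0))  # unicorn

# Three classes with a background entry (hypothetical scores)
three_classes = ["background", "duck", "unicorn"]
print(pick_label(three_classes, [0.90, 0.04, 0.06]))  # None
print(pick_label(three_classes, [0.05, 0.10, 0.85]))  # unicorn
```

The threshold alone already helps with the "everything is a unicorn" problem, but training a proper background class gives the model somewhere to put all the things that are neither duck nor unicorn.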

I think I’ll have to try building a new ML model. Maybe I’ll just focus on rubber duckies. The unicorns I have look too much like ducks :D