Abstract

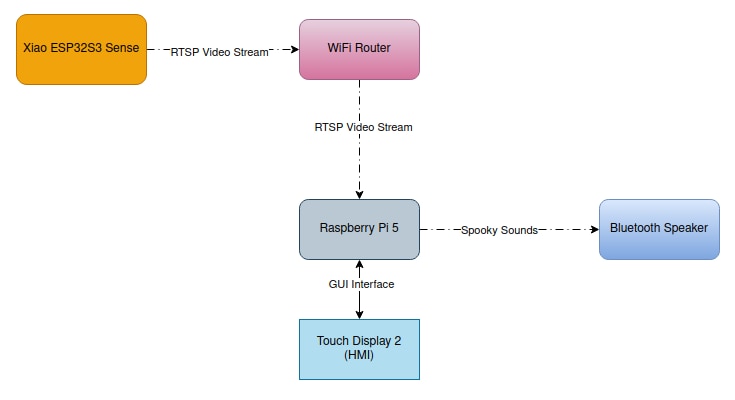

This project is an automated scare system for Halloween. A Xiao ESP32S3 Sense camera streams video to a Raspberry Pi, which uses computer vision to detect motion from approaching victims and triggers terrifying sounds from a hidden Bluetooth speaker for a perfectly timed fright.

Project

Introduction

Imagine walking through a dark hallway on Halloween night, completely unaware that you're being watched, when a blood-curdling scream suddenly pierces the silence. This is the experience I aimed to create with my Motion-Activated Scare System. It’s a fully automated Halloween prank that uses computer vision to detect unsuspecting victims and trigger terrifying sounds at just the right moment.

Halloween has always been about surprise and the thrill of unexpected scares. My project takes this timeless tradition and improves it with computer vision, creating a haunting system that reacts with very little human help. Unlike static decorations or simple motion sensors, this system analyzes the camera image and ignores small movements, so scares are triggered only when something person-sized enters the detection zone.

The beauty of this project lies in its hidden design. There is no visible sensor waiting on the wall and no obvious speaker giving away the trick. The camera hides within Halloween decorations—perhaps inside a carved pumpkin or behind artificial cobwebs—while the Bluetooth speaker conceals itself nearby. For victims, it seems as if the house itself has come alive, watching their every move and responding with perfectly timed terror. This creates an authentic haunted house atmosphere where the technology disappears, leaving only the experience behind.

Hardware for this Spooky Build

At the edge of this scare system is the Xiao ESP32S3 Sense, a compact camera board with built-in WiFi that streams video wirelessly and is small enough to hide inside decorations. Its low power consumption allows it to run on a USB power bank for hours, eliminating the need for visible power cables that might give away its location. Remember to attach a heatsink to it, as it gets really hot while streaming video.

For processing the video stream and running the motion detection algorithms, I chose a Raspberry Pi 5 with 4GB of RAM with the Touch Display 2 attached for portability.

While this might seem excessive for a Halloween decoration, the Raspberry Pi provides the computing power needed to run OpenCV and perform real-time computer vision. It can process 25-30 frames per second while managing the GUI and triggering audio playback at the same time. The Pi's Bluetooth capabilities also allow it to connect wirelessly to the speaker, keeping the entire system hidden.

The audio delivery system consists of a portable Bluetooth speaker that can be placed anywhere within 30 feet of the Raspberry Pi. This wireless connection allows the speaker to hide behind victims or beside the walking path, maximizing the surprise factor. I selected a speaker with good bass response to deliver those deep, resonant screams that make people jump. The ability to run on battery power means the entire system—camera, processor, and speaker—can operate completely wirelessly if needed.

Setting up for the Scare

The initial configuration began with the Xiao ESP32S3 Sense. I updated the WiFi credentials in the default camera streaming Arduino sketch and flashed it to the board so that it could join the network and serve the video feed. Once connected, the board prints its IP address to the serial terminal.

Configuring the camera settings proved crucial for optimal performance. Through the web interface at that IP address, I set the resolution to 640x480 pixels, a balance between image quality and processing speed. Higher resolutions would bog down the Raspberry Pi's processor, while lower resolutions might miss subtle movements. I adjusted the frame rate to 20-25 fps and fine-tuned the brightness for the dimly lit Halloween environment.

The camera placement itself required experimentation. I eventually settled on a height of 4-6 feet with a slight downward angle to capture full-body movement in the detection zone.
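The IP address printed on the serial terminal is what the Raspberry Pi needs in order to open the stream. A minimal sketch of turning it into a stream URL follows. The port `81` and the `/stream` path are taken from the default URL in the full listing at the end of this write-up; the helper name `build_stream_url` is hypothetical and not part of the original code.

```python
import ipaddress


def build_stream_url(ip, port=81, path="/stream"):
    """Return an HTTP stream URL for the camera at the given IPv4 address.

    Port 81 and "/stream" mirror the default URL in the full listing;
    adjust them if your firmware serves the stream elsewhere.
    """
    # IPv4Address raises a ValueError subclass on typos such as "192.168.1.999".
    address = ipaddress.IPv4Address(ip.strip())
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    if not path.startswith("/"):
        path = "/" + path
    return f"http://{address}:{port}{path}"


print(build_stream_url("192.168.29.215"))
```

Validating the address up front turns a mistyped IP into an immediate error instead of a connection attempt that eventually fails.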

Setting up the Raspberry Pi involved installing the operating system and all necessary dependencies. After flashing Raspberry Pi OS to a microSD card and completing the initial boot configuration, I installed OpenCV for computer vision, Pygame for audio playback, and Tkinter for the GUI. The Bluetooth setup required installing PulseAudio modules and using the bluetoothctl command-line tool to pair with the speaker. This process involved putting the speaker in pairing mode, scanning for available Bluetooth devices, pairing with the speaker's MAC address, and configuring PulseAudio to use Bluetooth as the default audio output.
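The pairing steps can also be scripted. The sketch below only builds the command sequence and offers an optional runner; it assumes the speaker has already been discovered with an interactive `scan on`, and that `sink_name` is whatever `pactl list short sinks` reports for the speaker once it is connected. The function names and the example MAC address are hypothetical.

```python
import re
import subprocess

MAC_PATTERN = re.compile(r"^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$")


def bluetooth_setup_commands(mac, sink_name):
    """Build the commands for pairing a speaker and making it the default output.

    sink_name is supplied by the caller; it is not derived from the MAC here.
    """
    if not MAC_PATTERN.match(mac):
        raise ValueError(f"not a MAC address: {mac!r}")
    return [
        ["bluetoothctl", "power", "on"],
        ["bluetoothctl", "pair", mac],
        ["bluetoothctl", "trust", mac],
        ["bluetoothctl", "connect", mac],
        ["pactl", "set-default-sink", sink_name],
    ]


def run_setup(mac, sink_name):
    """Run each command in order, stopping at the first failure."""
    for command in bluetooth_setup_commands(mac, sink_name):
        subprocess.run(command, check=True)


for command in bluetooth_setup_commands("AA:BB:CC:DD:EE:FF", "example_sink"):
    print(" ".join(command))
```

Keeping command construction separate from execution makes it possible to review the exact commands before anything touches the Bluetooth stack.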

Implementing Motion Detection

The motion detection algorithm uses a technique called background subtraction, specifically the MOG2 (Mixture of Gaussians, version 2) algorithm available in OpenCV. This approach builds a model of what the background should look like when nothing is moving, then identifies pixels that deviate from this model.

Unlike simple frame differencing, MOG2 adapts to changing conditions like shifting shadows or gradual lighting changes. I configured it with a history of 100 frames to build the background model and a variance threshold of 16 to control sensitivity to pixel changes.

    self.bg_subtractor = cv2.createBackgroundSubtractorMOG2(
        history=100,
        varThreshold=16,
        detectShadows=False,  # shadow detection disabled to reduce latency
    )
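MOG2 models each pixel with a mixture of Gaussians, which is more than fits in a short example. The underlying idea of learning a background and then flagging pixels that deviate from it can be illustrated with a much simpler running-average model. The sketch below is that simplification, not MOG2. The class name, the tiny grayscale frames, and the `diff_threshold` of 30 intensity levels are assumptions chosen for illustration.

```python
class RunningAverageBackground:
    """Per-pixel running-average background model for grayscale frames.

    Frames are lists of rows of intensities (0-255). This is a teaching
    simplification, not the MOG2 algorithm.
    """

    def __init__(self, learning_rate=0.01, diff_threshold=30):
        self.learning_rate = learning_rate
        self.diff_threshold = diff_threshold
        self.background = None  # seeded from the first frame

    def apply(self, frame):
        """Return a 0/255 foreground mask and update the background model."""
        if self.background is None:
            self.background = [[float(p) for p in row] for row in frame]
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, pixel in enumerate(row):
                bg = self.background[y][x]
                is_foreground = abs(pixel - bg) > self.diff_threshold
                mask_row.append(255 if is_foreground else 0)
                # Blend the new pixel in slowly so gradual lighting changes
                # are absorbed into the background over time.
                self.background[y][x] = (
                    (1 - self.learning_rate) * bg + self.learning_rate * pixel
                )
            mask.append(mask_row)
        return mask


model = RunningAverageBackground()
model.apply([[10, 10, 10], [10, 10, 10]])          # first frame seeds the model
print(model.apply([[10, 200, 10], [10, 200, 10]]))  # [[0, 255, 0], [0, 255, 0]]
```

A small learning rate plays the same role as in the snippet above: the background adapts slowly, so a person passing through stays in the foreground instead of being absorbed into the model.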

Each frame from the ESP32 camera undergoes several processing steps. First, the background subtractor analyzes the frame and generates a foreground mask highlighting areas of motion.

    fg_mask = self.bg_subtractor.apply(frame, learningRate=0.01)
    _, fg_mask = cv2.threshold(fg_mask, 250, 255, cv2.THRESH_BINARY)

This raw mask often contains noise from compression artifacts or minor lighting fluctuations, so I apply morphological operations—specifically opening with an elliptical kernel—to remove small specks while preserving larger motion areas.

    kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))
    fg_mask = cv2.morphologyEx(fg_mask, cv2.MORPH_OPEN, kernel, iterations=1)
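Morphological opening is an erosion followed by a dilation. The sketch below implements both steps in plain Python on a small binary grid to show why isolated specks vanish while larger blobs survive. The 3x3 cross-shaped kernel and the choice to treat pixels outside the image as background are assumptions for illustration and may differ from OpenCV's defaults.

```python
# Offsets of a 3x3 cross-shaped structuring element (an illustrative choice).
CROSS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def _get(mask, y, x):
    """Read a pixel, treating everything outside the image as background."""
    if 0 <= y < len(mask) and 0 <= x < len(mask[0]):
        return mask[y][x]
    return 0


def erode(mask):
    """Keep a pixel only if every kernel position around it is set."""
    return [
        [1 if all(_get(mask, y + dy, x + dx) for dy, dx in CROSS) else 0
         for x in range(len(mask[0]))]
        for y in range(len(mask))
    ]


def dilate(mask):
    """Set a pixel if any kernel position around it is set."""
    return [
        [1 if any(_get(mask, y + dy, x + dx) for dy, dx in CROSS) else 0
         for x in range(len(mask[0]))]
        for y in range(len(mask))
    ]


def opening(mask):
    """Erosion followed by dilation: removes specks smaller than the kernel."""
    return dilate(erode(mask))


noisy = [
    [1, 0, 0, 0, 0, 0],  # isolated speck at the top-left
    [0, 0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0],  # 3x3 blob of real motion
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
for row in opening(noisy):
    print(row)
```

In this example the single-pixel speck disappears entirely, while the 3x3 blob survives with its corners trimmed, which is the trade-off opening makes.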

The cleaned mask then feeds into contour detection, which identifies distinct regions of movement.

    contours_result = cv2.findContours(
        fg_mask,
        cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE
    )
    contours = contours_result[0] if len(contours_result) == 2 else contours_result[1]
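The last line of that snippet handles a difference between OpenCV releases: in OpenCV 3.x `findContours` returns three values (image, contours, hierarchy), while in 4.x it returns two (contours, hierarchy). A small helper, hypothetical and not part of the original code, makes that intent explicit and can be exercised without OpenCV installed:

```python
def extract_contours(find_contours_result):
    """Return the contours element from a cv2.findContours() return value.

    OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns
    (image, contours, hierarchy). In both cases the contours are the
    second-to-last element.
    """
    if len(find_contours_result) not in (2, 3):
        raise ValueError("unexpected findContours() return value")
    return find_contours_result[-2]


# Stand-ins for real return values, just to show the indexing.
opencv4_style = (["contour_a", "contour_b"], "hierarchy")
opencv3_style = ("image", ["contour_a", "contour_b"], "hierarchy")
print(extract_contours(opencv4_style) == extract_contours(opencv3_style))
```

Indexing from the end avoids branching on the tuple length and gives the same result as the conditional expression used above.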

Not all detected motion should trigger a scare. A falling leaf or a small animal shouldn't activate the system, so I implemented size-based filtering. By calculating the area of each detected contour and comparing it against a minimum threshold (typically 500 pixels), the system can distinguish between insignificant movement and human-sized motion.

    motion_detected = False
    total_motion_area = 0
    motion_contours = []

    for contour in contours:
        area = cv2.contourArea(contour)
        if area > self.min_motion_area:  # default: 500 pixels
            motion_contours.append(contour)
            total_motion_area += area
            motion_detected = True

    return motion_detected, fg_mask, motion_contours, total_motion_area
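To see the size filter in isolation, the sketch below replaces `cv2.contourArea` with a plain-Python shoelace formula, which gives the area of a simple polygon from its vertices. The helper names and the two example outlines are hypothetical; the 500-pixel default mirrors the threshold mentioned above.

```python
def polygon_area(points):
    """Area of a simple polygon given as [(x, y), ...] via the shoelace formula."""
    total = 0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def filter_by_area(contours, min_area=500):
    """Return (kept_contours, total_area) for contours larger than min_area."""
    kept, total = [], 0
    for contour in contours:
        area = polygon_area(contour)
        if area > min_area:
            kept.append(contour)
            total += area
    return kept, total


leaf = [(0, 0), (10, 0), (10, 10), (0, 10)]              # 10 x 10 = 100
person = [(100, 50), (180, 50), (180, 300), (100, 300)]  # 80 x 250 = 20000
kept, total = filter_by_area([leaf, person])
print(len(kept), total)
```

Only the person-sized outline passes the threshold. Raising `min_area` makes the system stricter, which is the adjustment to reach for when small moving objects cause unwanted triggers.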

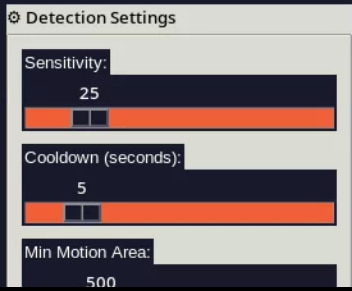

This threshold is adjustable through the GUI, allowing calibration for different environments and camera distances.

The triggering logic includes a critical cooldown mechanism. Without it, the system would continuously trigger scares as long as someone remained in the detection zone, quickly becoming annoying rather than frightening. I implemented a timer that prevents retriggering for a configurable period (typically 5 seconds) after each scare.

    motion_detected, fg_mask, motion_contours, motion_area = self.detect_motion(frame)

    current_time = time.time()
    time_since_last_scare = current_time - self.last_scare_time

    # only trigger if motion detected AND cooldown period has elapsed
    if motion_detected and time_since_last_scare >= self.cooldown_seconds:
        # play sound in separate thread to avoid blocking
        threading.Thread(target=self.play_scare_sound, daemon=True).start()

        self.last_scare_time = current_time
        self.detection_count += 1
        self.last_detection_time = datetime.now().strftime('%H:%M:%S')
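The cooldown logic can be pulled out into a small, testable unit. The sketch below is one way to do that, not the structure used in the original code. It uses `time.monotonic` instead of `time.time` so that system clock adjustments cannot shorten or lengthen the cooldown, and it accepts an injectable clock so the behaviour can be checked without waiting. The class name `CooldownGate` is hypothetical.

```python
import time


class CooldownGate:
    """Allow an action at most once per cooldown period."""

    def __init__(self, cooldown_seconds=5.0, clock=time.monotonic):
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock
        self._last_fired = None  # None means the gate has never fired

    def try_fire(self):
        """Return True and start a new cooldown if the gate is open."""
        now = self._clock()
        if (self._last_fired is not None
                and now - self._last_fired < self.cooldown_seconds):
            return False
        self._last_fired = now
        return True


# Simulated timeline: motion is seen at t = 0, 3 and 5 seconds.
fake_now = [0.0]
gate = CooldownGate(cooldown_seconds=5.0, clock=lambda: fake_now[0])
for t in (0.0, 3.0, 5.0):
    fake_now[0] = t
    print(t, gate.try_fire())
```

With a 5-second cooldown, the motion at 3 seconds is ignored and the one at 5 seconds triggers again, matching the `>=` comparison in the snippet above.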

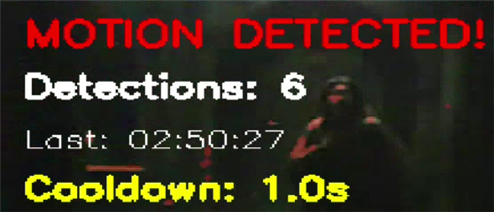

This ensures each person gets one good scare as they pass through, then the system resets for the next victim. The system also tracks the total number of triggered detections and the time of the most recent one, which is useful when tuning sensitivity.

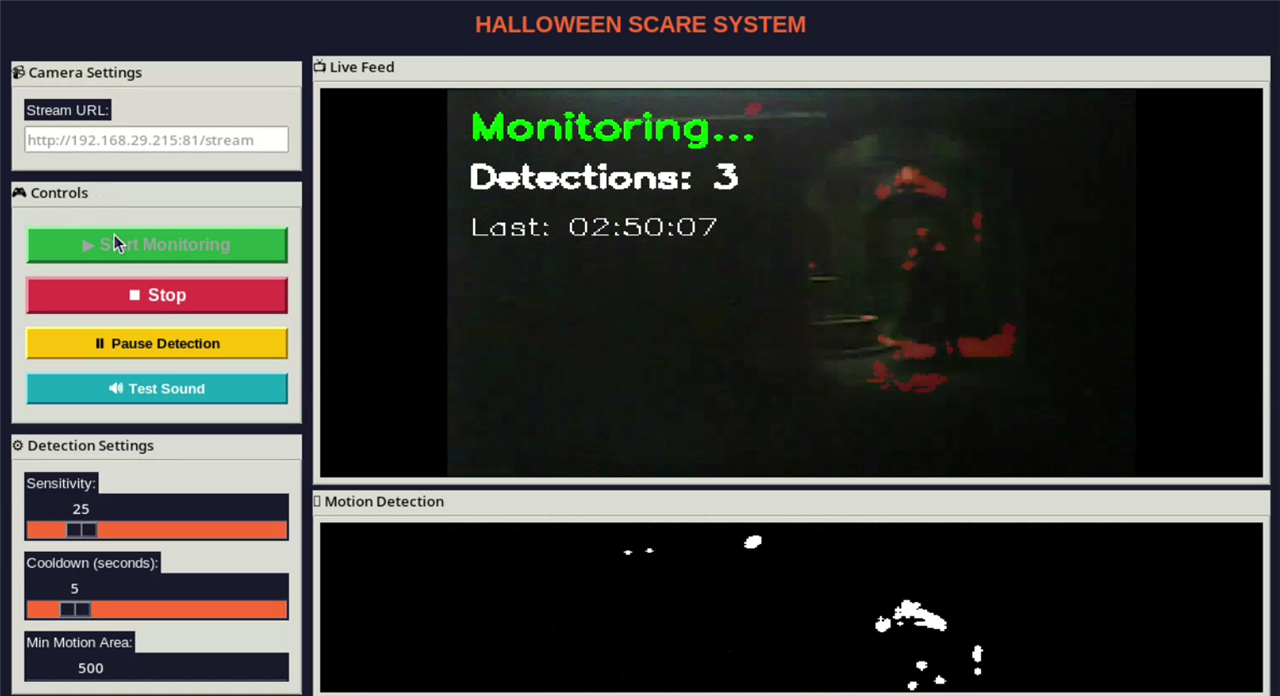

User Interface

A simple GUI transforms this project from a headless script into an accessible system anyone can operate. Built with Tkinter, the interface displays the live camera feed in real time, overlaying detected motion with red bounding boxes. A separate panel shows the background subtraction mask, providing visual feedback about what the algorithm sees. This dual-view approach proved invaluable during calibration, allowing me to understand exactly why the system triggered or failed to trigger.

The control panel includes sliders for adjusting sensitivity, minimum motion area, and cooldown duration—all without restarting the program. This real-time adjustment capability allowed me to fine-tune the system during actual Halloween night operation. If too many false positives occurred, I could increase the minimum area threshold on the fly. If lighting conditions changed, sensitivity adjustments compensated immediately.

A statistics display shows the total number of detections and the time of the last one, while a status bar reports the current system state.

Solving the Technical Challenges

Network latency was the first major challenge. Streaming video over WiFi has built-in delays, and processing time adds to this. The total delay from movement to sound needed to stay under 300 milliseconds to keep the feeling of real-time response. I set OpenCV's video capture buffer to hold just one frame, which stops old frames from piling up. The background subtraction pipeline uses simple morphological operations for quicker processing, and I turned off shadow detection since it increases processing time without providing a significant benefit for this task.
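The effect of a one-frame buffer can be shown without a camera. The sketch below is a plain-Python illustration of the "keep only the newest frame" idea, using a `deque` with `maxlen=1`; it is not how OpenCV implements its buffer, and the class name is hypothetical. A pattern like this is sometimes paired with a reader thread when a capture backend does not honour the buffer-size setting, which is an assumption about your setup rather than something the original code does.

```python
from collections import deque


class LatestFrameBuffer:
    """Hold only the most recent frame; older frames are discarded."""

    def __init__(self):
        self._frames = deque(maxlen=1)

    def push(self, frame):
        # Appending to a full deque with maxlen=1 evicts the previous frame.
        self._frames.append(frame)

    def pop_latest(self):
        """Return the newest frame, or None if nothing new has arrived."""
        return self._frames.pop() if self._frames else None


buffer = LatestFrameBuffer()
for frame_number in range(1, 6):   # five frames arrive while we are busy
    buffer.push(frame_number)
print(buffer.pop_latest())          # the consumer sees only the newest frame
print(buffer.pop_latest())          # nothing new since the last read
```

The consumer never processes stale frames, so a slow processing step costs dropped frames rather than a growing delay between movement and sound.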

Bluetooth audio introduced its own latency of 100-200 milliseconds. While this delay can't be completely removed, I made up for it by pre-loading all sound files into memory instead of reading them from disk when they were triggered. Pygame's audio system starts with a small buffer size to reduce any extra delay. I also adjusted PulseAudio settings to focus on low latency rather than audio quality, since having perfectly synced timing is more important than high-fidelity sound for sudden scares.
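The numbers above can be combined into a rough latency budget. The sketch below uses only figures from the text: the 300 ms target, the 100-200 ms Bluetooth delay, and the 20-25 fps camera setting. Treating one full frame interval as the worst-case wait for a fresh frame is a modelling assumption, and the function names are hypothetical.

```python
def frame_interval_ms(fps):
    """Worst-case wait for the next frame at a given frame rate."""
    return 1000.0 / fps


def remaining_budget_ms(total_budget_ms, *stage_latencies_ms):
    """Milliseconds left after subtracting each stage's latency."""
    return total_budget_ms - sum(stage_latencies_ms)


BUDGET_MS = 300  # movement-to-sound target from the text

# Best case: 25 fps and 100 ms Bluetooth. Worst case: 20 fps and 200 ms.
for fps, bluetooth_ms in [(25, 100), (20, 200)]:
    left = remaining_budget_ms(BUDGET_MS, frame_interval_ms(fps), bluetooth_ms)
    print(f"{fps} fps, Bluetooth {bluetooth_ms} ms: "
          f"{left:.0f} ms left for network and processing")
```

Under these assumptions the best case leaves 160 ms for network transfer and processing, while the worst case leaves only 50 ms, which is why trimming the capture buffer and the per-frame processing matters.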

False positives were tricky, especially when the camera was aimed at trees, curtains, or other things that move in the wind. The morphological filtering helps, but the real fix came from careful camera placement and tuning the thresholds. I learned to keep the camera away from known problem areas, to increase the minimum motion area when it was windy, and to let the background model warm up for enough time before starting the triggering. Testing in different conditions showed that 30 seconds of initialization creates a stable background model.
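One way to enforce a warm-up period is a small gate that reports readiness only after a fixed time has passed, while frames continue to feed the background model in the meantime. This is a sketch under stated assumptions: the class and function names are hypothetical, the clock is injectable so the behaviour can be checked without waiting, and the 30-second default is the figure from the text.

```python
import time


class WarmupGate:
    """Report readiness only after a warm-up period has elapsed."""

    def __init__(self, warmup_seconds=30.0, clock=time.monotonic):
        self.warmup_seconds = warmup_seconds
        self._clock = clock
        self._started_at = clock()

    def ready(self):
        """True once warmup_seconds have passed since construction."""
        return self._clock() - self._started_at >= self.warmup_seconds


def warmup_frames(warmup_seconds, fps):
    """Number of frames the background model sees during warm-up."""
    return int(warmup_seconds * fps)


fake_now = [0.0]
gate = WarmupGate(warmup_seconds=30.0, clock=lambda: fake_now[0])
for t in (0.0, 29.9, 30.0):
    fake_now[0] = t
    print(t, gate.ready())

print(warmup_frames(30, 20), warmup_frames(30, 25))
```

At the 20-25 fps camera setting, a 30-second warm-up corresponds to 600-750 frames fed to the background model before any scare can trigger.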

Halloween with Automated Scares

This project perfectly captures the spirit of Halloween by introducing an element of surprise that defines the holiday. Traditional haunted houses depend on human actors who hide in the dark, waiting for guests and timing their scares by hand. This system replicates that experience while eliminating the need for a human operator. It creates an independent haunting presence that doesn’t tire, doesn’t miss a scare, and keeps a consistent pace throughout the evening.

The hidden nature of the technology improves the eerie atmosphere. Guests feel watched without seeing who is observing them, stirring up deep fears of unseen dangers. When a scream suddenly comes from an empty spot, it creates confusion about where the sound originates. The absence of visible speakers or controllers makes the experience truly feel otherworldly, as if the decorations themselves have come to life and are aware.

Demo Link: https://youtu.be/wSLPUGluTZg

GitHub Repository: https://github.com/veluv01/H.M.D.S.S (setup instructions for this project are included in the readme.md file)

Python Code

    #!/usr/bin/env python3

    import random
    import threading
    import time
    import tkinter as tk
    from datetime import datetime
    from pathlib import Path
    from tkinter import ttk, messagebox

    import cv2
    import numpy as np
    import pygame
    from PIL import Image, ImageTk


    class HalloweenScareSystem:
        def __init__(self, stream_url="http://192.168.29.215:81/stream"):
            self.stream_url = stream_url
            self.running = False
            self.paused = False

            # Motion detection parameters
            # NOTE: sensitivity is set from the GUI slider but is not read by
            # detect_motion() in this listing.
            self.sensitivity = 25
            self.min_motion_area = 500  # minimum contour area, in pixels
            self.cooldown_seconds = 5
            self.last_scare_time = 0

            # Background subtractor - created in connect_stream()
            self.bg_subtractor = None

            # Statistics
            self.detection_count = 0
            self.last_detection_time = None

            # Video capture
            self.cap = None
            self.current_frame = None
            self.display_frame = None
            self.motion_mask = None

            # Lock guarding current_frame
            self.processing_lock = threading.Lock()

            # Initialize pygame for audio; a small buffer keeps latency low
            pygame.mixer.init(frequency=22050, size=-16, channels=2, buffer=512)
            self.audio_files = []
            self.load_scary_sounds()

        def load_scary_sounds(self):
            """Load all audio files from scary_sounds folder"""
            self.audio_files = []
            sounds_dir = Path("scary_sounds")

            if not sounds_dir.exists():
                print("Error! 'scary_sounds' folder not found, creating it...")
                sounds_dir.mkdir(exist_ok=True)
                print(" Please add MP3/WAV files to the 'scary_sounds' folder")
                return

            # Supported audio formats
            audio_extensions = ['.mp3', '.wav', '.ogg']

            # Sounds are decoded into memory here so playback never waits on disk
            for ext in audio_extensions:
                for audio_file in sounds_dir.glob(f'*{ext}'):
                    try:
                        sound = pygame.mixer.Sound(str(audio_file))
                        self.audio_files.append(sound)
                        print(f"Loaded: {audio_file.name}")
                    except Exception as e:
                        print(f"Error : Failed to load {audio_file.name}: {e}")

            if not self.audio_files:
                print("Warning: No audio files found in 'scary_sounds' folder")
                print(" Using default beep sound")
                self.create_default_sound()
            else:
                print(f"Loaded {len(self.audio_files)} scary sound(s)")

        def create_default_sound(self):
            """Create a default scary beep if no audio files found"""
            sample_rate = 22050  # must match pygame.mixer.init(frequency=...)
            duration = 1.0
            frequency = 200

            t = np.linspace(0, duration, int(sample_rate * duration))
            tone = np.sin(2 * np.pi * frequency * t)

            # 6 Hz amplitude modulation gives the tone a wavering quality
            tremolo = np.sin(2 * np.pi * 6 * t)
            scary_tone = tone * (0.5 + 0.5 * tremolo)

            # Convert to 16-bit samples and duplicate into two (stereo) channels
            scary_tone = (scary_tone * 32767).astype(np.int16)
            stereo_tone = np.column_stack((scary_tone, scary_tone))

            self.audio_files = [pygame.sndarray.make_sound(stereo_tone)]

        def play_scare_sound(self):
            """Play a random scary sound; return True if playback started"""
            try:
                if self.audio_files:
                    sound = random.choice(self.audio_files)
                    sound.set_volume(1.0)
                    sound.play()
                    return True
            except Exception as e:
                print(f"Audio error: {e}")
            return False

        def detect_motion(self, frame):
            """Detect motion using background subtraction - optimized for speed"""
            if self.bg_subtractor is None:
                return False, None, [], 0

            fg_mask = self.bg_subtractor.apply(frame, learningRate=0.01)  # Faster learning
            _, fg_mask = cv2.threshold(fg_mask, 250, 255, cv2.THRESH_BINARY)

            # Simplified morphological operations for speed
            kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))  # Smaller kernel
            fg_mask = cv2.morphologyEx(fg_mask, cv2.MORPH_OPEN, kernel, iterations=1)

            contours_result = cv2.findContours(
                fg_mask,
                cv2.RETR_EXTERNAL,
                cv2.CHAIN_APPROX_SIMPLE
            )
            # OpenCV 4.x returns (contours, hierarchy); 3.x returns (image, contours, hierarchy)
            contours = contours_result[0] if len(contours_result) == 2 else contours_result[1]

            motion_detected = False
            total_motion_area = 0
            motion_contours = []

            for contour in contours:
                area = cv2.contourArea(contour)
                if area > self.min_motion_area:
                    motion_contours.append(contour)
                    total_motion_area += area
                    motion_detected = True

            return motion_detected, fg_mask, motion_contours, total_motion_area

        def draw_overlay(self, frame, fg_mask, motion_contours, motion_detected):
            """Draw motion detection overlay on frame"""
            display_frame = frame.copy()

            if fg_mask is not None:
                # Zero the blue and green channels so the mask shows up as a red tint
                motion_overlay = cv2.cvtColor(fg_mask, cv2.COLOR_GRAY2BGR)
                motion_overlay[:, :, 0] = 0
                motion_overlay[:, :, 1] = 0
                display_frame = cv2.addWeighted(display_frame, 0.7, motion_overlay, 0.3, 0)

            # Red bounding box around each region that passed the area filter
            for contour in motion_contours:
                x, y, w, h = cv2.boundingRect(contour)
                cv2.rectangle(display_frame, (x, y), (x + w, y + h), (0, 0, 255), 2)

            status_color = (0, 0, 255) if motion_detected else (0, 255, 0)
            status_text = "MOTION DETECTED!" if motion_detected else "Monitoring..."
            cv2.putText(display_frame, status_text, (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, status_color, 2)

            cv2.putText(display_frame, f"Detections: {self.detection_count}", (10, 60),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 2)

            if self.last_detection_time:
                cv2.putText(display_frame, f"Last: {self.last_detection_time}", (10, 90),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)

            # Show the remaining cooldown while retriggering is blocked
            current_time = time.time()
            time_since_scare = current_time - self.last_scare_time
            if time_since_scare < self.cooldown_seconds:
                cooldown_remaining = self.cooldown_seconds - time_since_scare
                cv2.putText(display_frame, f"Cooldown: {cooldown_remaining:.1f}s", (10, 120),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 255), 2)

            return display_frame

        def connect_stream(self):
            """Connect to video stream"""
            if self.cap is not None:
                self.cap.release()

            self.cap = cv2.VideoCapture(self.stream_url)

            if not self.cap.isOpened():
                return False

            # Optimize capture settings for lower latency
            # (a capture backend may ignore some of these for network streams)
            self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
            self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)
            self.cap.set(cv2.CAP_PROP_BUFFERSIZE, 1)  # Minimize buffer
            self.cap.set(cv2.CAP_PROP_FPS, 30)

            # Initialize background subtractor with faster parameters
            self.bg_subtractor = cv2.createBackgroundSubtractorMOG2(
                history=100,  # Reduced from 500 for faster adaptation
                varThreshold=16,
                detectShadows=False  # Disable shadow detection for speed
            )

            # Quick warm up - reduced from 30 to 10 frames
            for _ in range(10):
                ret, frame = self.cap.read()
                if ret:
                    self.bg_subtractor.apply(frame)

            return True

        def process_frame(self):
            """Process a single frame - optimized for low latency"""
            if self.cap is None or not self.running:
                return None, None

            ret, frame = self.cap.read()
            if not ret:
                return None, None

            with self.processing_lock:
                self.current_frame = frame

            # While paused, show the raw frame and skip detection entirely
            if self.paused:
                return frame, None

            motion_detected, fg_mask, motion_contours, motion_area = self.detect_motion(frame)

            current_time = time.time()
            time_since_last_scare = current_time - self.last_scare_time

            if motion_detected and time_since_last_scare >= self.cooldown_seconds:
                # Play sound in separate thread to avoid blocking
                threading.Thread(target=self.play_scare_sound, daemon=True).start()

                self.last_scare_time = current_time
                self.detection_count += 1
                self.last_detection_time = datetime.now().strftime('%H:%M:%S')

            self.display_frame = self.draw_overlay(frame, fg_mask, motion_contours, motion_detected)
            self.motion_mask = fg_mask

            return self.display_frame, fg_mask

        def stop(self):
            """Stop the system"""
            self.running = False
            if self.cap is not None:
                self.cap.release()
                self.cap = None
            self.bg_subtractor = None

    class HalloweenGUI:
        def __init__(self, root):
            self.root = root
            self.root.title("Halloween Scare System")
            self.root.geometry("1200x750")
            self.root.configure(bg='#1a1a2e')

            # Style configuration
            style = ttk.Style()
            style.theme_use('clam')
            style.configure('TFrame', background='#1a1a2e')
            style.configure('TLabel', background='#1a1a2e', foreground='#ffffff', font=('Arial', 10))
            style.configure('Title.TLabel', font=('Arial', 16, 'bold'), foreground='#ff6b35')
            style.configure('TButton', font=('Arial', 10, 'bold'))
            style.map('TButton', background=[('active', '#ff6b35')])

            self.scare_system = HalloweenScareSystem()
            self.update_thread = None
            self.is_updating = False

            self.setup_gui()
            self.update_stats()

        def setup_gui(self):
            """Setup the GUI layout"""
            # Title
            title_frame = ttk.Frame(self.root)
            title_frame.pack(pady=10, fill='x')

            title_label = ttk.Label(
                title_frame,
                text="HALLOWEEN SCARE SYSTEM",
                style='Title.TLabel'
            )
            title_label.pack()

            # Main container
            main_container = ttk.Frame(self.root)
            main_container.pack(fill='both', expand=True, padx=10, pady=5)

            # Left panel - Controls
            left_panel = ttk.Frame(main_container)
            left_panel.pack(side='left', fill='both', padx=5)

            # Connection settings
            conn_frame = ttk.LabelFrame(left_panel, text="Camera Settings", padding=10)
            conn_frame.pack(fill='x', pady=5)

            ttk.Label(conn_frame, text="Stream URL:").pack(anchor='w')
            self.url_entry = ttk.Entry(conn_frame, width=30)
            self.url_entry.insert(0, self.scare_system.stream_url)
            self.url_entry.pack(fill='x', pady=5)

            # Control buttons
            control_frame = ttk.LabelFrame(left_panel, text="Controls", padding=10)
            control_frame.pack(fill='x', pady=5)

            self.start_btn = tk.Button(
                control_frame,
                text="Start Monitoring",
                command=self.start_monitoring,
                bg='#28a745',
                fg='white',
                font=('Arial', 12, 'bold'),
                relief='raised',
                bd=3
            )
            self.start_btn.pack(fill='x', pady=5)

            self.stop_btn = tk.Button(
                control_frame,
                text="Stop",
                command=self.stop_monitoring,
                bg='#dc3545',
                fg='white',
                font=('Arial', 12, 'bold'),
                relief='raised',
                bd=3,
                state='disabled'
            )
            self.stop_btn.pack(fill='x', pady=5)

            self.pause_btn = tk.Button(
                control_frame,
                text="Pause Detection",
                command=self.toggle_pause,
                bg='#ffc107',
                fg='black',
                font=('Arial', 10, 'bold'),
                relief='raised',
                bd=2,
                state='disabled'
            )
            self.pause_btn.pack(fill='x', pady=5)

            self.test_btn = tk.Button(
                control_frame,
                text="Test Sound",
                command=self.test_sound,
                bg='#17a2b8',
                fg='white',
                font=('Arial', 10, 'bold'),
                relief='raised',
                bd=2
            )
            self.test_btn.pack(fill='x', pady=5)

            # Settings
            settings_frame = ttk.LabelFrame(left_panel, text="Detection Settings", padding=10)
            settings_frame.pack(fill='x', pady=5)

            ttk.Label(settings_frame, text="Sensitivity:").pack(anchor='w')
            self.sensitivity_scale = tk.Scale(
                settings_frame,
                from_=10,
                to=100,
                orient='horizontal',
                bg='#1a1a2e',
                fg='white',
                highlightbackground='#1a1a2e',
                troughcolor='#ff6b35',
                command=self.update_sensitivity
            )
            self.sensitivity_scale.set(self.scare_system.sensitivity)
            self.sensitivity_scale.pack(fill='x')

            ttk.Label(settings_frame, text="Cooldown (seconds):").pack(anchor='w', pady=(10, 0))
            self.cooldown_scale = tk.Scale(
                settings_frame,
                from_=1,
                to=30,
                orient='horizontal',
                bg='#1a1a2e',
                fg='white',
                highlightbackground='#1a1a2e',
                troughcolor='#ff6b35',
                command=self.update_cooldown
            )
            self.cooldown_scale.set(self.scare_system.cooldown_seconds)
            self.cooldown_scale.pack(fill='x')

            ttk.Label(settings_frame, text="Min Motion Area:").pack(anchor='w', pady=(10, 0))
            self.area_scale = tk.Scale(
                settings_frame,
                from_=100,
                to=2000,
                orient='horizontal',
                bg='#1a1a2e',
                fg='white',
                highlightbackground='#1a1a2e',
                troughcolor='#ff6b35',
                command=self.update_area
            )
            self.area_scale.set(self.scare_system.min_motion_area)
            self.area_scale.pack(fill='x')

            # Statistics
            stats_frame = ttk.LabelFrame(left_panel, text="Statistics", padding=10)
            stats_frame.pack(fill='x', pady=5)

            self.stats_label = ttk.Label(stats_frame, text="", justify='left')
            self.stats_label.pack(anchor='w')

            # Audio info
            audio_frame = ttk.LabelFrame(left_panel, text="Audio Files", padding=10)
            audio_frame.pack(fill='x', pady=5)

            # Keep a reference so reload_sounds() can update the text in place
            self.audio_label = ttk.Label(audio_frame, text=self._audio_status_text(), justify='left')
            self.audio_label.pack(anchor='w')

            reload_btn = tk.Button(
                audio_frame,
                text="Reload Sounds",
                command=self.reload_sounds,
                bg='#6c757d',
                fg='white',
                font=('Arial', 9)
            )
            reload_btn.pack(fill='x', pady=5)

            # Right panel - Video feeds
            right_panel = ttk.Frame(main_container)
            right_panel.pack(side='right', fill='both', expand=True, padx=5)

            # Main video feed
            video_frame = ttk.LabelFrame(right_panel, text="Live Feed", padding=5)
            video_frame.pack(fill='both', expand=True, pady=(0, 5))

            self.video_label = tk.Label(video_frame, bg='black')
            self.video_label.pack(fill='both', expand=True)

            # Motion detection feed
            motion_frame = ttk.LabelFrame(right_panel, text="Motion Detection", padding=5)
            motion_frame.pack(fill='both', expand=True)

            self.motion_label = tk.Label(motion_frame, bg='black')
            self.motion_label.pack(fill='both', expand=True)

            # Status bar
            self.status_label = ttk.Label(
                self.root,
                text="Status: Idle",
                relief='sunken',
                anchor='w'
            )
            self.status_label.pack(side='bottom', fill='x')

        def _audio_status_text(self):
            """Describe how many sounds are loaded, for the Audio Files panel"""
            audio_count = len(self.scare_system.audio_files)
            audio_text = f"Loaded: {audio_count} sound(s)"
            if audio_count == 0:
                audio_text += "\n Warning: Add files to 'scary_sounds' folder"
            return audio_text

        def update_sensitivity(self, value):
            self.scare_system.sensitivity = int(float(value))

        def update_cooldown(self, value):
            self.scare_system.cooldown_seconds = int(float(value))

        def update_area(self, value):
            self.scare_system.min_motion_area = int(float(value))

        def test_sound(self):
            """Test playing a scare sound"""
            if self.scare_system.play_scare_sound():
                self.status_label.config(text="Status: Playing test sound...")
                self.root.after(1000, lambda: self.status_label.config(text="Status: Ready"))
            else:
                messagebox.showerror("Error", "Failed to play sound")

        def reload_sounds(self):
            """Reload audio files from scary_sounds folder"""
            self.scare_system.load_scary_sounds()
            audio_count = len(self.scare_system.audio_files)
            # Update the existing label rather than calling setup_gui() again,
            # which would stack a second copy of every widget in the window
            self.audio_label.config(text=self._audio_status_text())
            messagebox.showinfo("Audio Reloaded", f"Loaded {audio_count} sound file(s)")

        def start_monitoring(self):
            """Start the monitoring system"""
            url = self.url_entry.get().strip()
            if not url:
                messagebox.showerror("Error", "Please enter a stream URL")
                return

            self.scare_system.stream_url = url
            self.status_label.config(text="Status: Connecting to stream...")
            self.root.update()

            if not self.scare_system.connect_stream():
                messagebox.showerror("Error", "Failed to connect to stream.\nCheck the URL and network connection.")
                self.status_label.config(text="Status: Connection failed")
                return

            self.scare_system.running = True
            self.is_updating = True

            self.start_btn.config(state='disabled')
            self.stop_btn.config(state='normal')
            self.pause_btn.config(state='normal')
            self.url_entry.config(state='disabled')

            self.status_label.config(text="Status: Monitoring for motion...")

            self.update_video()

        def stop_monitoring(self):
            """Stop the monitoring system"""
            self.is_updating = False
            self.scare_system.stop()

            self.start_btn.config(state='normal')
            self.stop_btn.config(state='disabled')
            self.pause_btn.config(state='disabled')
            self.url_entry.config(state='normal')

            self.video_label.config(image='')
            self.status_label.config(text="Status: Stopped")

        def toggle_pause(self):
            """Toggle motion detection pause"""
            self.scare_system.paused = not self.scare_system.paused

            if self.scare_system.paused:
                self.pause_btn.config(text="Resume Detection")
                self.status_label.config(text="Status: Detection Paused")
            else:
                self.pause_btn.config(text="Pause Detection")
                self.status_label.config(text="Status: Monitoring for motion...")

        def update_video(self):
            """Update video feed - optimized for low latency"""
            if not self.is_updating:
                return

            frame, motion_mask = self.scare_system.process_frame()

            if frame is not None:
                # Update main video feed with reduced processing
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                img = Image.fromarray(frame_rgb)

                # Fixed display size
                display_width = 640
                display_height = 360
                # Image.Resampling requires Pillow 9.1 or newer
                img = img.resize((display_width, display_height), Image.Resampling.NEAREST)  # Faster resize

                photo = ImageTk.PhotoImage(image=img)
                self.video_label.config(image=photo)
                self.video_label.image = photo  # keep a reference so Tk does not drop the image

                if motion_mask is not None:
                    # Update motion detection feed
                    motion_rgb = cv2.cvtColor(motion_mask, cv2.COLOR_GRAY2RGB)
                    motion_img = Image.fromarray(motion_rgb)
                    motion_img = motion_img.resize((display_width, display_height), Image.Resampling.NEAREST)

                    motion_photo = ImageTk.PhotoImage(image=motion_img)
                    self.motion_label.config(image=motion_photo)
                    self.motion_label.image = motion_photo

            # Reduced delay for faster updates
            self.root.after(16, self.update_video)  # ~60fps update rate

        def update_stats(self):
            """Update statistics display"""
            stats_text = (
                f"Total Detections: {self.scare_system.detection_count}\n"
                f"Last Detection: {self.scare_system.last_detection_time or 'None'}"
            )
            self.stats_label.config(text=stats_text)
            self.root.after(1000, self.update_stats)

        def on_closing(self):
            """Handle window closing"""
            if messagebox.askokcancel("Quit", "Do you want to quit?"):
                self.is_updating = False
                self.scare_system.stop()
                pygame.mixer.quit()
                self.root.destroy()

    def main():
        """Main entry point"""
        root = tk.Tk()
        app = HalloweenGUI(root)
        root.protocol("WM_DELETE_WINDOW", app.on_closing)
        root.mainloop()


    if __name__ == "__main__":
        main()

References

https://wiki.seeedstudio.com/xiao_esp32s3_getting_started/

https://wiki.seeedstudio.com/xiao_esp32s3_camera_usage/