When trying to revive a degraded lead-acid battery (one that was left uncharged for too long and became deeply discharged), old wisdom suggests using charge cycles interrupted by discharging. That wisdom dates from the times when buying a new car battery was nearly impossible, so people went to great lengths to restore even the worst cases. Following that principle, old chargers used unfiltered rectified current with a load connected in parallel with the battery being charged. In that configuration, during every mains cycle the cell was charged (while the rectified voltage exceeded the cell voltage) and discharged into the load (while the rectified voltage was below the cell voltage).
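To get a rough feeling for how such an old charger splits each cycle between charging and discharging, here is a minimal sketch. It assumes an ideal full-wave rectified sine and ignores diode drops and the battery's internal resistance; the function name and the 17 V / 12 V figures are illustrative assumptions, not measurements from any real charger.

```python
import math

def charging_fraction(v_peak, v_battery):
    """Fraction of each rectified half-cycle in which the source voltage
    exceeds the battery voltage (idealized: no diode drop, no resistance)."""
    if v_battery >= v_peak:
        return 0.0  # the source never rises above the battery: no charging
    if v_battery <= 0:
        return 1.0  # degenerate case: the source is always above the battery
    # |sin(theta)| > ratio holds for theta between asin(ratio) and pi - asin(ratio)
    ratio = v_battery / v_peak
    return 1.0 - (2.0 / math.pi) * math.asin(ratio)

# Assumed example values: 17 V rectified peak, 12 V battery
fraction = charging_fraction(17.0, 12.0)
print(f"charging for about {fraction:.0%} of each half-cycle")
```

With these assumed numbers the battery is charged for roughly half of the time and feeds the parallel load for the rest.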

Modern chargers work differently, usually as a constant-current source with a voltage limit.

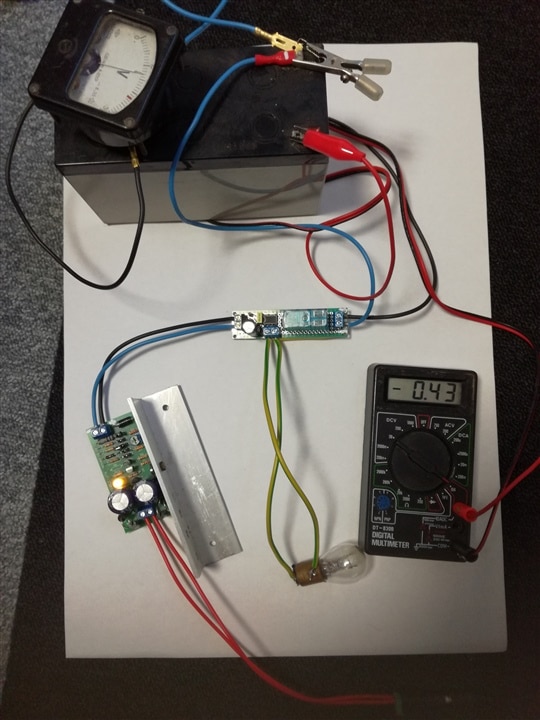

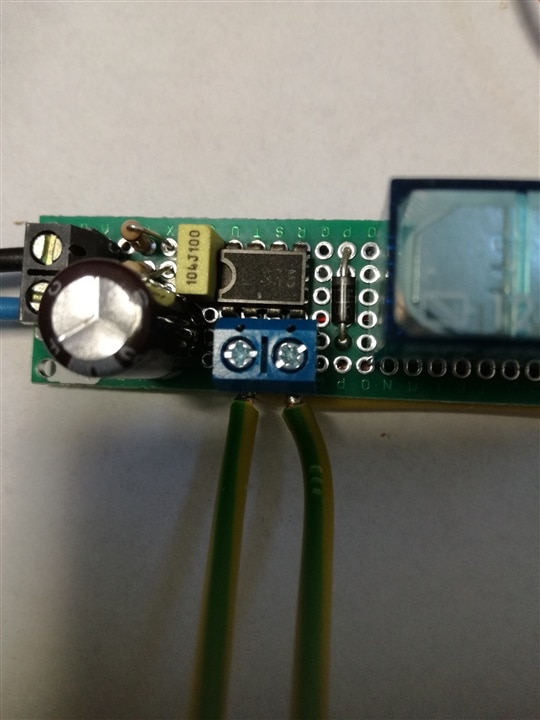

When I decided to try to revive an old standby battery (left in unpowered equipment for several years), I learned the limits of this constant-current method: the battery charged somewhat and then stopped accepting charge. To my surprise, discharging the battery through an old lamp enabled it to take in more energy during the next charging cycle, and this experience led to the creation of this circuit. The schematic is very simple and standard:

Every minute or so (the 555 runs at about 13 mHz with a 95% duty cycle), the generator disconnects the battery from the charger and discharges it for several seconds through a small car lamp. D2 prevents reverse current flow when the charger is disconnected.
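As a quick sanity check on those numbers, the sketch below converts the quoted frequency and duty cycle into charge and discharge times. It assumes that the 95% part of the cycle is the charging phase and the remaining 5% is the discharge phase; the function name is only illustrative.

```python
def cycle_timing(frequency_hz, duty_cycle):
    """Return (period, charge time, discharge time) in seconds,
    assuming the duty cycle is the fraction of the period spent charging."""
    period_s = 1.0 / frequency_hz
    charge_s = period_s * duty_cycle
    discharge_s = period_s - charge_s
    return period_s, charge_s, discharge_s

# 13 mHz = 0.013 Hz, 95% duty cycle (values quoted in the text)
period_s, charge_s, discharge_s = cycle_timing(0.013, 0.95)
print(f"period {period_s:.1f} s, charge {charge_s:.1f} s, discharge {discharge_s:.1f} s")
# prints: period 76.9 s, charge 73.1 s, discharge 3.8 s
```

That is about 73 seconds of charging followed by just under 4 seconds of discharge, which matches "every minute or so" and "several seconds".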

It seems that after every discharge event the charging current increases a bit, maybe (let's hope) because the battery's internal resistance drops?

The experiment has been running for some time without dramatic improvement, but it is only an experiment: "what happens to an otherwise dead battery when it is treated this way?"

Below are some photos of this module connected to a DIY (LM317-based) battery charger, and a proof-of-work movie:
