
Abstract

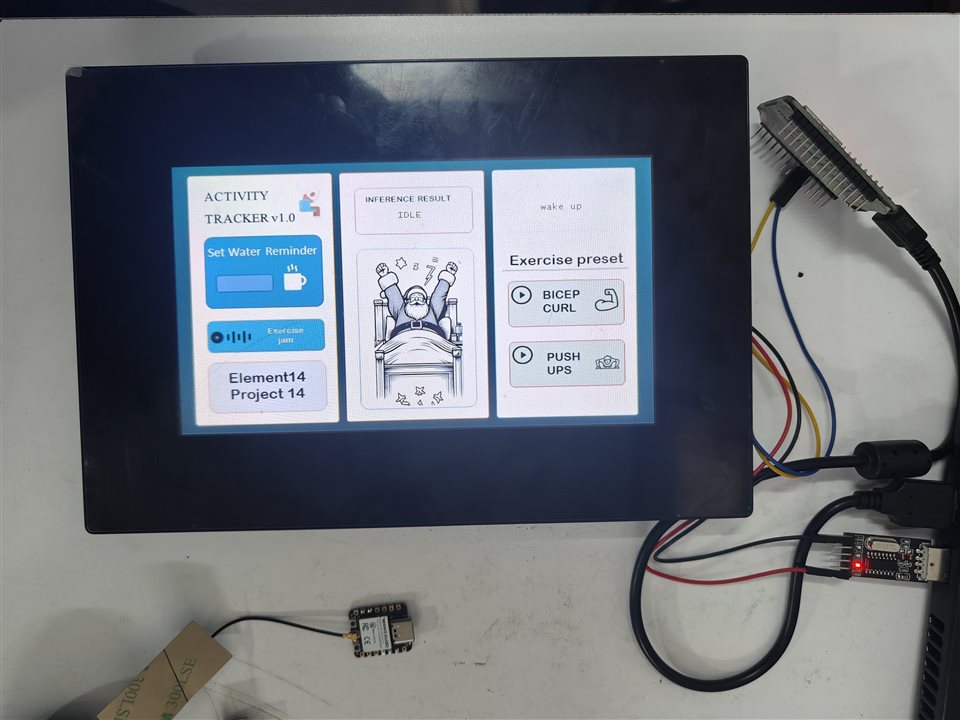

A simple application runs on the edge to predict the user's activity from sensor data. The inference result is mapped to Santa's actions and shown on a display.

Project

Introducing our spotter

As someone who works full time at a desk job, I need occasional reminders to move around and do some stretching exercises to keep my joints going. While I could set an alarm, that is not as fun as building an alert system myself. I also wanted to add a few features that encourage better self-care.

Now that we have decided to monitor our activity and exercise, something still feels missing. Working out with a friend makes it more enjoyable, and if you lift weights, having a spotter makes it safer. For this project, Santa will accompany us as we try to work off the weight we gained over the holidays.


Materials Used

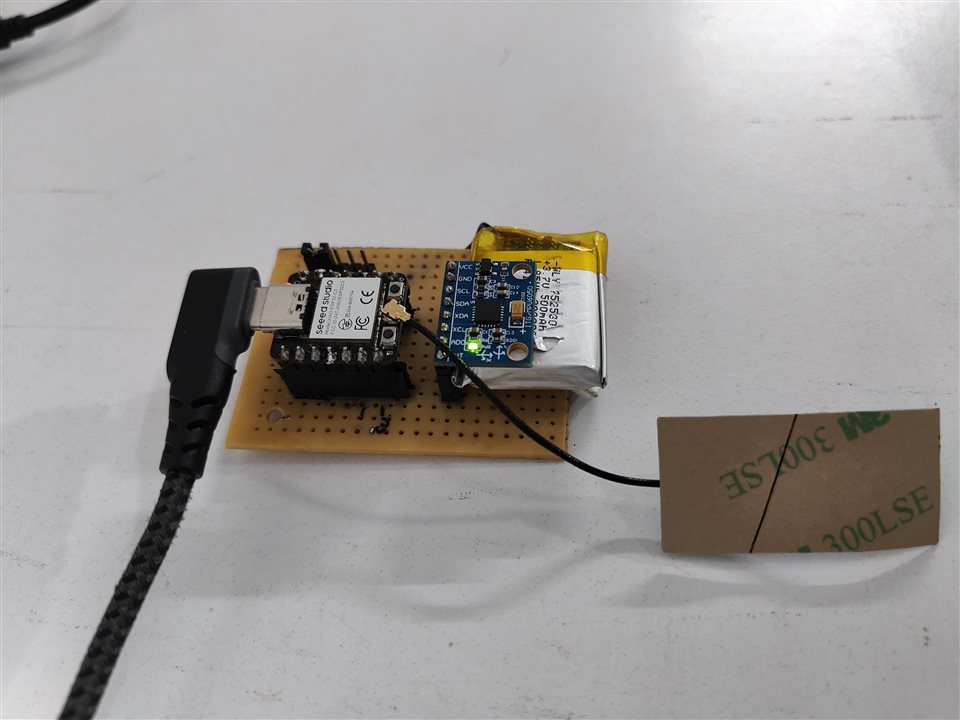

- XIAO ESP32C3 board

- MPU6050

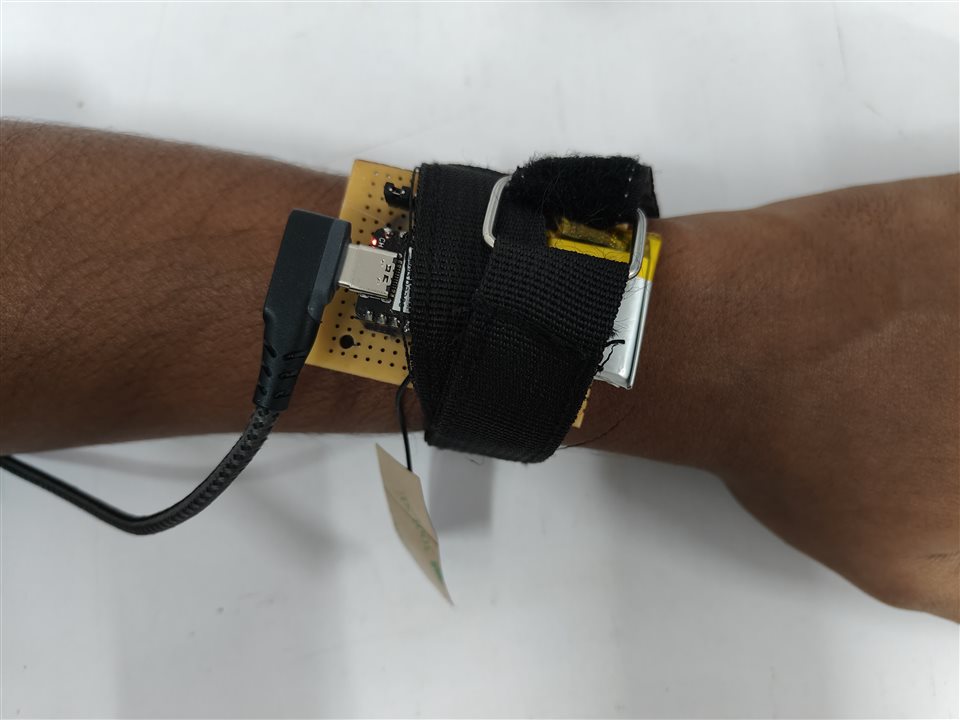

- Strap

- ESP32 Dev kit board

- Rechargeable battery

- Nextion 7" intelligent display

The display is powered through a TTL module, and its UART lines are connected to the ESP32 Dev kit.

The ESP32C3 is connected to the MPU6050 over I2C. The circuit can be powered either through a USB cable or from the battery.
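For reference, here is a minimal sketch of decoding the sensor data. It assumes the MPU6050 is at its default I2C address (0x68), is read with a single 14-byte burst starting at register 0x3B (ACCEL_XOUT_H), and uses the power-on default ranges of ±2 g and ±250 °/s. It also assumes that the 6 values per entry used later are the three accelerometer axes and the three gyroscope axes. On the XIAO, the bytes would come from the Arduino Wire library; this host-side C++ only shows how the big-endian register pairs are combined and scaled.

```cpp
#include <array>
#include <cstdint>

// One raw 6-axis sample (assumption: 3 accelerometer + 3 gyroscope axes).
struct RawSample {
    int16_t ax, ay, az;  // accelerometer, raw LSB
    int16_t gx, gy, gz;  // gyroscope, raw LSB
};

// Combine a big-endian high/low register pair into a signed 16-bit value.
inline int16_t be16(uint8_t hi, uint8_t lo) {
    return static_cast<int16_t>((static_cast<uint16_t>(hi) << 8) | lo);
}

// Decode the 14-byte burst read starting at register 0x3B (ACCEL_XOUT_H):
// bytes 0-5 accel X/Y/Z, bytes 6-7 temperature (skipped), bytes 8-13 gyro X/Y/Z.
RawSample decodeBurst(const std::array<uint8_t, 14>& b) {
    return RawSample{
        be16(b[0], b[1]), be16(b[2], b[3]),   be16(b[4], b[5]),
        be16(b[8], b[9]), be16(b[10], b[11]), be16(b[12], b[13]),
    };
}

// Sensitivity for the power-on default full-scale ranges (±2 g, ±250 °/s).
constexpr float kAccelLsbPerG = 16384.0f;
constexpr float kGyroLsbPerDps = 131.0f;

inline float toG(int16_t raw) { return raw / kAccelLsbPerG; }
inline float toDps(int16_t raw) { return raw / kGyroLsbPerDps; }
```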

Now all we have to do is strap the kit to our arm and enjoy the ride.

Implementation

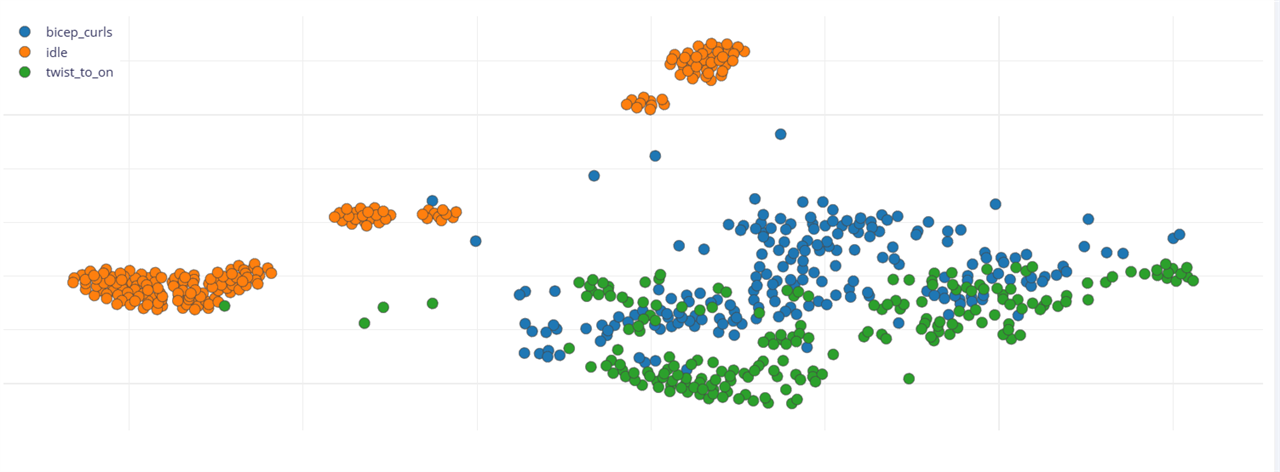

I collected data for three labels and trained the model on them; only one of the labels is an actual exercise. While many exercises could be used for this project, I wanted to keep it simple and easy for anyone to repeat. The Edge Impulse repo is publicly available. The labels are as follows:

- Idle

- Wrist Twist

- Bicep curls

For a detailed explanation of how to collect the data, create an impulse in Edge Impulse, and build and live-test the model, please follow the instructions in this blog.

Display UI

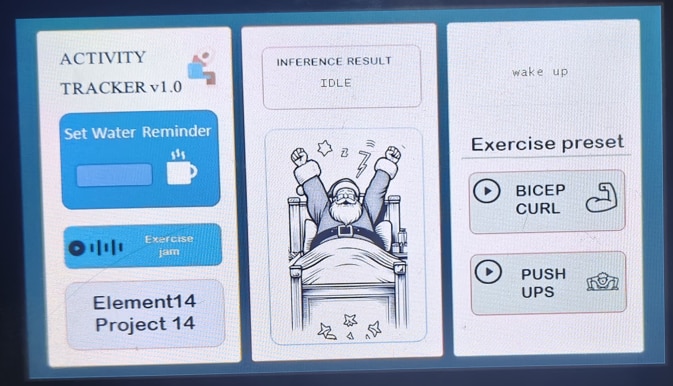

Designing the display UI is outside the scope of this project, so I will cover it later in a separate post. The final UI looks like this.

Power save - Idle

Smartwatches conserve battery power by switching to a low-power mode when there is no activity for a defined duration. The Idle data was collected with my hands at my sides or resting on the table. If there is no activity for 5 s, Santa falls asleep and the screen dims.

Power on - Twist to On

This is another feature I borrowed from smartwatches: when the display is in idle mode, the user has to twist their wrist to turn it back on. Santa then wakes up from his nap full of enthusiasm and waits for the next action.

The video below covers both the twist-to-wake gesture and the idle state.
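The idle and twist-to-wake behaviour can be modelled as a small state machine. This is a sketch, not the project's actual code: the label order, the `Action` values and the rule that only a wrist twist wakes Santa are assumptions based on the description above. Timestamps are in milliseconds, as returned by `millis()` on Arduino, and the unsigned subtraction keeps the 5 s timeout correct when that counter wraps around.

```cpp
#include <cstdint>

// Label indices from the classifier (order assumed for illustration).
enum class Label : uint8_t { Idle, WristTwist, BicepCurl };

// What the display side should do in response (hypothetical actions).
enum class Action { None, Sleep, Wake, Curl };

class PowerState {
public:
    explicit PowerState(uint32_t idleTimeoutMs = 5000) : timeoutMs_(idleTimeoutMs) {}

    // Feed one classification result together with the current time in ms.
    Action onLabel(Label label, uint32_t nowMs) {
        if (label == Label::Idle) return tick(nowMs);  // idle only advances the timer
        lastActivityMs_ = nowMs;                       // any other label counts as activity
        if (asleep_) {
            if (label == Label::WristTwist) {          // only a twist wakes Santa up
                asleep_ = false;
                return Action::Wake;
            }
            return Action::None;
        }
        return label == Label::BicepCurl ? Action::Curl : Action::None;
    }

    // Check the inactivity timeout; call it from the loop or on every Idle result.
    Action tick(uint32_t nowMs) {
        // Unsigned subtraction stays correct across a millis() wrap-around.
        if (!asleep_ && nowMs - lastActivityMs_ >= timeoutMs_) {
            asleep_ = true;
            return Action::Sleep;
        }
        return Action::None;
    }

    bool asleep() const { return asleep_; }

private:
    uint32_t timeoutMs_;
    uint32_t lastActivityMs_ = 0;
    bool asleep_ = false;
};
```

Keeping the timer separate from the classifier means a single stray Idle prediction cannot put Santa to sleep; only 5 s without any other label does.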

Power up - Bicep curl

The bicep curl was the easiest exercise for collecting consistent data from myself, but it was harder to classify reliably when I tested live classification with someone else. The swinging movement slightly resembles the wrist twist, so the model sometimes confuses the two: a Bicep curl is classified as Twist to On, or a Twist to On as a Bicep curl.

When the action is recognized correctly, Santa mimics the bicep curl along with us.

Additional feature - Water reminder

On average, we need to drink about 8 cups of water a day. For those who exercise frequently, it is important to keep hydration and electrolyte levels in the right range to avoid dizziness. I added a simple reminder that pops up a text prompt on the display telling the user to drink water. For the video demonstration, I set the reminder to appear 5 s after the button is pressed.
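The reminder only needs a non-blocking one-shot timer that the button press arms and the main loop polls. A minimal sketch; the class name `OneShotReminder` is chosen here for illustration and is not from the project:

```cpp
#include <cstdint>

// One-shot reminder: arm it on the button press, poll it from the main loop.
// The delay is configurable (5 s for the demo, much longer in real use).
class OneShotReminder {
public:
    explicit OneShotReminder(uint32_t delayMs) : delayMs_(delayMs) {}

    // Start (or restart) the countdown from the given time in ms.
    void arm(uint32_t nowMs) {
        armedAtMs_ = nowMs;
        armed_ = true;
    }

    // Returns true exactly once: the first poll at or after the deadline.
    bool due(uint32_t nowMs) {
        if (armed_ && nowMs - armedAtMs_ >= delayMs_) {  // wrap-safe unsigned math
            armed_ = false;
            return true;
        }
        return false;
    }

private:
    uint32_t delayMs_;
    uint32_t armedAtMs_ = 0;
    bool armed_ = false;
};
```

On the ESP32, the loop would call `due(millis())` and, when it returns true, send a display command such as Nextion's `vis` instruction to show a text component (for example `vis t_water,1`, where `t_water` is a hypothetical component name).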

Additional feature - Music player

Music not only creates a better ambience; it also helps you focus on a task. So one more feature of this activity tracker is the option to play your favourite music with a play/stop button.

Code explanation

I used Edge Impulse to collect data, train the model, and create the deployment package. Edge Impulse takes samples as CSV-formatted lines printed over the serial port; the Edge Impulse CLI receives these lines and uploads them to the cloud. I will post a detailed explanation of all the steps and configuration needed to get the desired result later.
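Each serial line carries one sample, with the values separated by commas; the CLI's `edge-impulse-data-forwarder` tool reads these lines from the serial port. A small sketch of formatting one 6-axis sample, assuming the values are the raw integer readings in a fixed axis order:

```cpp
#include <cstdint>
#include <cstdio>
#include <string>

// Format one 6-axis sample as a single comma-separated line
// (one sample per line, newline-terminated) for the data forwarder.
std::string toCsvLine(const int16_t v[6]) {
    char buf[64];  // 6 values of at most 6 chars each, plus separators
    // int16_t arguments are promoted to int, so %d is the matching format.
    std::snprintf(buf, sizeof(buf), "%d,%d,%d,%d,%d,%d\n",
                  v[0], v[1], v[2], v[3], v[4], v[5]);
    return std::string(buf);
}
```

Printing one such line every 10 ms gives the 100 Hz sampling rate used in this project.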

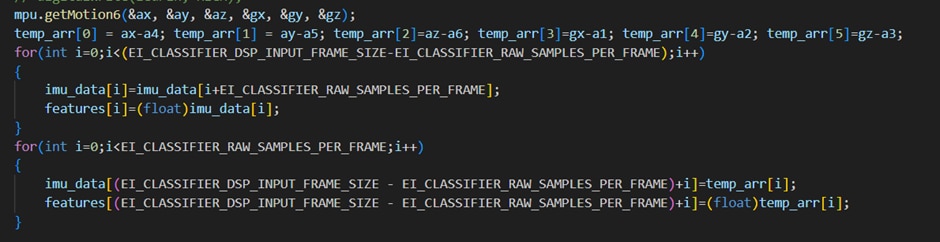

The XIAO ESP32C3 acts as the inference runner, while the ESP32 Dev kit acts as the display driver. The two boards communicate over the local network using raw UDP. Each inference takes [number of values per sample] × [samples per second] inputs, which for this project is 6 values × 100 samples per second = 600 values.

A new sample is collected every 10 ms. After each sample, the existing data in the buffer is shifted left by 6 values and the 6 newest values are appended at the end. The integer array is then converted to a float array and passed to the inference function. I tried “memmove”, but it crashed the application, so I fell back on the good old for loop.

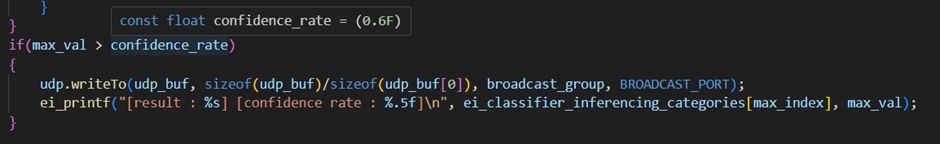
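The shift-and-append step described above can be sketched as follows, using the numbers from the text: 6 values per sample and 100 samples per second, giving a 600-value window (this assumes a one-second window). The names `pushSample` and `toFloat` are illustrative, not taken from the project.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kAxes = 6;                        // values per sample
constexpr std::size_t kSampleRateHz = 100;              // one sample every 10 ms
constexpr std::size_t kWindow = kAxes * kSampleRateHz;  // 600 values (1 s window, assumed)

// Raw integer window, oldest sample first.
std::array<int16_t, kWindow> rawWindow{};

// Drop the oldest sample by shifting everything left by kAxes values,
// then write the newest sample into the last kAxes slots.
void pushSample(const int16_t sample[kAxes]) {
    for (std::size_t i = 0; i < kWindow - kAxes; ++i) {
        rawWindow[i] = rawWindow[i + kAxes];
    }
    for (std::size_t j = 0; j < kAxes; ++j) {
        rawWindow[kWindow - kAxes + j] = sample[j];
    }
}

// Convert the window to the float buffer the classifier consumes.
void toFloat(float out[kWindow]) {
    for (std::size_t i = 0; i < kWindow; ++i) {
        out[i] = static_cast<float>(rawWindow[i]);
    }
}
```

In the Edge Impulse Arduino library, a float buffer like this is typically wrapped with `numpy::signal_from_buffer()` and passed to `run_classifier()`. If you want to try `memmove` again, keep in mind that its length argument is in bytes, not elements, so the equivalent shift is `std::memmove(rawWindow.data(), rawWindow.data() + kAxes, (kWindow - kAxes) * sizeof(int16_t));`.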

Inference usually takes about 120 ms, which is good enough for now. The output is a confidence score for each label. Occasionally two labels split the confidence at around 0.5 each; I discard such ambiguous results by setting the confidence threshold to 0.6.

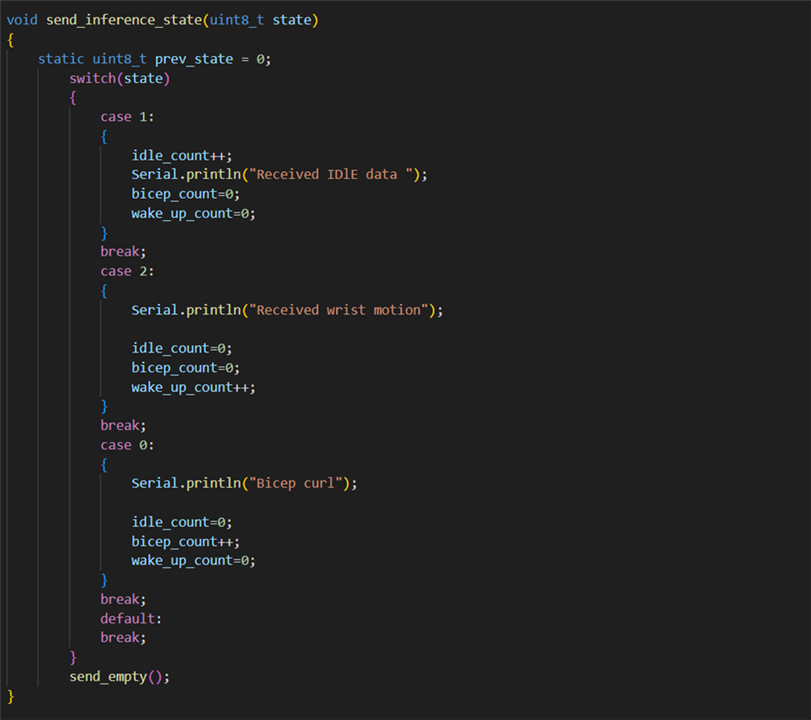
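Picking a label from the per-label scores comes down to an argmax followed by the 0.6 threshold. A sketch, assuming the scores arrive as a plain float array (with the Edge Impulse SDK they would come from `result.classification[i].value`):

```cpp
#include <cstddef>

// Return the index of the highest-scoring label, or -1 when even the best
// score is below the threshold (for example two labels split around 0.5).
int pickLabel(const float* scores, std::size_t count, float threshold = 0.6f) {
    int best = -1;
    for (std::size_t i = 0; i < count; ++i) {
        if (best < 0 || scores[i] > scores[best]) best = static_cast<int>(i);
    }
    if (best < 0 || scores[best] < threshold) return -1;  // empty input or ambiguous
    return best;
}
```

Since the scores sum to roughly 1, at most one label can clear a 0.6 threshold, so the result is never a tie between two accepted labels.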

Once a match is found, the label number is transmitted over UDP and received by the ESP32.

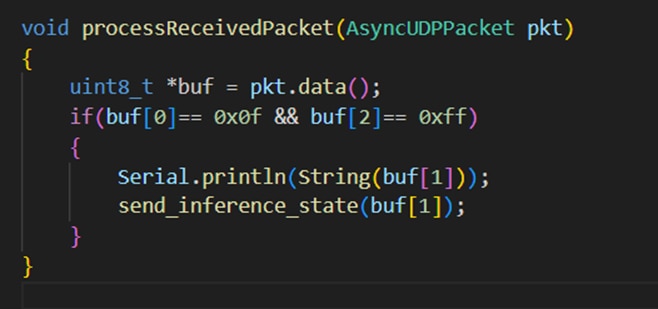

Since the devices' IP addresses change every time they connect and I did not want to assign a static IP to each device, I made the boards send and receive data through a UDP multicast group.
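A POSIX-sockets sketch of the same idea, assuming an arbitrary administratively scoped group (239.1.2.3), an arbitrary port (5005) and a one-byte payload carrying the label index. On the ESP32, the Arduino core offers equivalents such as `WiFiUDP::beginMulticast()` and `AsyncUDP::listenMulticast()`; the plain C++ here only shows the mechanics.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cstdint>
#include <string>

// Hypothetical group and port; 239.0.0.0/8 is the administratively scoped range.
constexpr const char* kGroup = "239.1.2.3";
constexpr uint16_t kPort = 5005;

// True if the dotted-quad string is an IPv4 multicast address (224.0.0.0/4).
bool isMulticast(const std::string& ip) {
    in_addr addr{};
    if (inet_pton(AF_INET, ip.c_str(), &addr) != 1) return false;
    return (ntohl(addr.s_addr) >> 28) == 0xE;
}

// Sender (inference board): one datagram with the label index as a single byte.
bool sendLabel(uint8_t label) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return false;
    unsigned char ttl = 1;  // keep packets on the local subnet
    setsockopt(fd, IPPROTO_IP, IP_MULTICAST_TTL, &ttl, sizeof(ttl));
    sockaddr_in dst{};
    dst.sin_family = AF_INET;
    dst.sin_port = htons(kPort);
    inet_pton(AF_INET, kGroup, &dst.sin_addr);
    ssize_t n = sendto(fd, &label, 1, 0, reinterpret_cast<sockaddr*>(&dst), sizeof(dst));
    close(fd);
    return n == 1;
}

// Receiver (display board): join the group, block until one label byte arrives.
int receiveLabel() {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    int reuse = 1;
    setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &reuse, sizeof(reuse));
    sockaddr_in local{};
    local.sin_family = AF_INET;
    local.sin_port = htons(kPort);
    local.sin_addr.s_addr = htonl(INADDR_ANY);
    if (bind(fd, reinterpret_cast<sockaddr*>(&local), sizeof(local)) < 0) {
        close(fd);
        return -1;
    }
    ip_mreq mreq{};
    inet_pton(AF_INET, kGroup, &mreq.imr_multiaddr);
    mreq.imr_interface.s_addr = htonl(INADDR_ANY);
    if (setsockopt(fd, IPPROTO_IP, IP_ADD_MEMBERSHIP, &mreq, sizeof(mreq)) < 0) {
        close(fd);
        return -1;
    }
    uint8_t label = 0;
    ssize_t n = recv(fd, &label, 1, 0);
    close(fd);
    return n == 1 ? label : -1;
}
```

Because both sides only need to agree on the group address and port, neither board has to know the other's IP address.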

On the receiving side, every time a UDP message arrives, the ESP32 parses the payload and sends the matching string commands to the display over UART.
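Nextion instructions are plain ASCII strings terminated by three 0xFF bytes. The sketch below frames commands that way and maps a received label byte to a list of commands. The page names and label indices are hypothetical; `dim` is the Nextion system variable for backlight brightness (0-100). The sketch leaves out the 5 s idle timer and only shows the mapping and framing.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Every Nextion instruction ends with three 0xFF bytes.
std::string nextionFrame(const std::string& cmd) {
    return cmd + std::string(3, '\xFF');
}

// Map a received label byte to display commands (hypothetical page names).
std::vector<std::string> commandsForLabel(uint8_t label) {
    switch (label) {
        case 0: return {"dim=10", "page santa_sleep"};   // Idle: dim and show sleeping Santa
        case 1: return {"dim=100", "page santa_awake"};  // Wrist Twist: full brightness, wake up
        case 2: return {"page santa_curl"};              // Bicep curl: Santa curls along
        default: return {};                              // unknown payload: ignore
    }
}
```

On the ESP32, each framed string `f` would then be written to the display's UART, for example with `Serial2.write(reinterpret_cast<const uint8_t*>(f.data()), f.size())`.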

Touch events on the display are also sent to the ESP32, which processes them and takes the appropriate action.
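When "Send Component ID" is enabled for a component in the Nextion editor, a touch produces a 7-byte message: 0x65, the page ID, the component ID, the event (0x01 for press, 0x00 for release), and three 0xFF bytes. A sketch of parsing that message and then toggling the music player or arming the water reminder; the component IDs and the `UiState` struct are hypothetical:

```cpp
#include <cstddef>
#include <cstdint>

struct TouchEvent {
    uint8_t page;
    uint8_t component;
    bool pressed;  // true on press (0x01), false on release (0x00)
};

// Parse a 7-byte Nextion touch event; returns false for anything else.
bool parseTouch(const uint8_t* b, std::size_t n, TouchEvent& out) {
    if (n != 7 || b[0] != 0x65 || b[4] != 0xFF || b[5] != 0xFF || b[6] != 0xFF) return false;
    out = TouchEvent{b[1], b[2], b[3] == 0x01};
    return true;
}

constexpr uint8_t kMusicButton = 3;  // hypothetical component IDs
constexpr uint8_t kWaterButton = 4;

struct UiState {
    bool musicPlaying = false;
    bool waterReminderArmed = false;
};

// Act on button release only, so one tap triggers one action.
void handleTouch(const TouchEvent& e, UiState& ui) {
    if (e.pressed) return;
    if (e.component == kMusicButton) {
        ui.musicPlaying = !ui.musicPlaying;  // play/stop toggle
    } else if (e.component == kWaterButton) {
        ui.waterReminderArmed = true;        // start the reminder countdown
    }
}
```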

Challenges faced

- I originally wanted to do this project as part of the Rasynboard Road-test. Unfortunately, I ran into an issue with the board, so I opted for the XIAO ESP32C3 for its small form factor.

- Another challenge was finding a suitable strap to attach the sensor kit to my arm. While testing with the Rasynboard, I used a smaller strap that did not quite fit the IO board’s size, so I switched to a tourniquet strap that is nearly twice as long and provides a better grip.

- When I twist my arm, the angle of inclination resembles the initial lifting phase of someone else’s bicep curl. While this does not affect the project, it highlights the need for diverse data: collecting samples from multiple people and gathering more training and testing data for each label.

- The last challenge was choosing a suitable communication protocol. Inference usually takes around 120-130 ms on the ESP32C3, and sending the result over BLE added a few more milliseconds on top of that. Since I have no control over the calculation time, I decided to cut the wireless communication delay by using raw UDP instead.

Improvements

- Initially, I wanted to implement an alert that pops up when the user gasps or makes a similar sound after getting injured, using the microphone on the Rasynboard. The only other microphones I have are PDM mics, which are nowhere near good enough for a keyword spotting application. Once the Rasynboard is up and running, I will try ML on the edge using sensor fusion.

- The project could be further improved by collecting data from people who maintain good form while exercising; that way, we could also get live feedback on our posture. Building on this, we could create preset exercises to follow along with Santa: if the form is good enough, the rep count increases; otherwise, posture correction tips are displayed.

Credits

A colleague of mine, whose only crime was showing interest in my project and offering to fix the Rasynboard kit, and whom I later recruited to test the accuracy of the inference results.

Bing AI – Generating Santa images

Bensound – Audio used in the display.

Flaticon – Icons used in making the display UI.