Original article written for the IETE SF MEC Medium page. https://medium.com/iete-sf-mec/edge-computing-when-computing-comes-to-the-edge-2c938aef117e

This is my first piece of technical writing, so please forgive any errors in language or information. Feel free to share suggestions, resources and corrections.

From “Alexa, turn off the AC” to the complex infrastructure management of every major software company, cloud computing has accelerated the pace of the Fourth Industrial Revolution. More than 90% of enterprises worldwide run workloads on cloud services, and it is hard to imagine a world without the cloud. The Internet of Things (IoT) emerged as the next big change in daily life. Nearly every piece of equipment in our homes and offices is now more than a lifeless object; some seem more lively than we humans of the 21st century. IoT has made our lives easier and helped industries run faster, all made possible by cloud servers running somewhere on Earth.

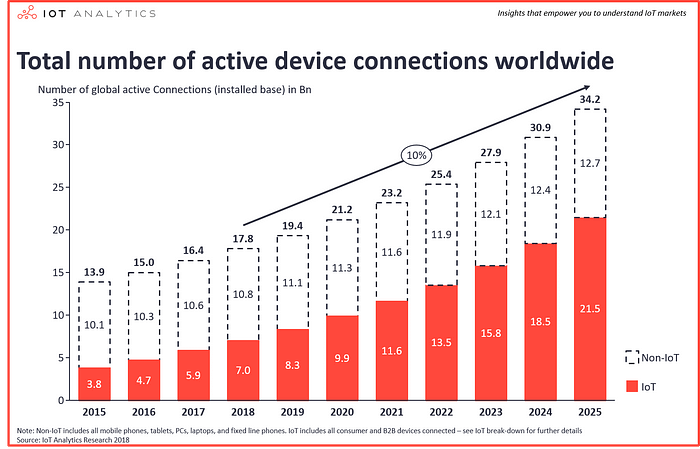

The total installed base of IoT-connected devices is projected to reach 21.5 billion units worldwide by 2025. However, the hardware infrastructure behind cloud services is not growing at the same pace as the processing demand these devices create. So how does this affect us? We may notice websites loading more slowly, or have to put up with delays when our smart devices communicate. When more of an enterprise's devices and sensors connect to the same cloud, processing and response times increase unless capacity grows with them.
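
To make the last point concrete, here is a minimal sketch that models a shared cloud backend as a single M/M/1 queue. That model is an assumption (random arrivals, random service times, one server), not a description of any real cloud. Under it, the mean time a request spends in the system is W = 1 / (μ − λ), where μ is the service rate and λ the total arrival rate, so response time grows sharply as more devices push λ toward μ. The capacity and per-device rates below are hypothetical.

```python
def mean_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time (seconds) a request spends in an M/M/1 system, waiting plus service.

    arrival_rate: requests per second from all connected devices (lambda)
    service_rate: requests per second the server can process (mu)
    """
    if arrival_rate >= service_rate:
        # Requests arrive faster than they are served: the queue grows without bound.
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


def total_arrival_rate(num_devices: int, requests_per_device: float) -> float:
    """Aggregate request rate when every device sends at the same rate."""
    return num_devices * requests_per_device


if __name__ == "__main__":
    SERVICE_RATE = 1000.0       # hypothetical server capacity, requests/s
    REQUESTS_PER_DEVICE = 0.5   # hypothetical per-device rate, requests/s
    for devices in (500, 1000, 1500, 1900, 1990):
        lam = total_arrival_rate(devices, REQUESTS_PER_DEVICE)
        w_ms = mean_response_time(lam, SERVICE_RATE) * 1000
        print(f"{devices:5d} devices -> {w_ms:8.1f} ms")
```

With these illustrative numbers, the mean response time climbs from about 1.3 ms at 500 devices to 200 ms at 1,990 devices, although the server itself never changes.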

When it comes to sensitive data produced by healthcare or industrial IoT devices, security is also a major concern. How can we account for all this?

Consider the case of a petroleum plant. Suppose an IoT pressure regulator is used to maintain pressure levels in gas pipelines. When the pressure of the gas in a pipeline rises too high, the regulator must detect the rise and reduce the pressure at that instant. If the cloud is under heavy load, the command to reduce the pressure could be delayed by a few seconds, or even minutes. The pressure needs to drop immediately; any delay could cause catastrophic damage to the entire plant. Such urgent tasks must be performed as soon as possible, before the data even reaches the cloud. This brings us to our topic of discussion: EDGE COMPUTING.
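
The idea can be sketched as a local control loop: the edge device checks each pressure reading against a safety limit and acts on the valve immediately, while the reading is only queued for later upload to the cloud. This is a minimal sketch; `EdgePressureController`, the valve callback and the 10 bar limit are hypothetical stand-ins for real plant hardware and settings.

```python
from collections import deque
from typing import Callable, Deque, Tuple


class EdgePressureController:
    """Hypothetical edge-side controller for one pipeline section."""

    def __init__(self, max_pressure_bar: float, open_relief_valve: Callable[[], None]):
        self.max_pressure_bar = max_pressure_bar
        self.open_relief_valve = open_relief_valve
        # Readings waiting to be sent to the cloud; uploading never blocks control.
        self.upload_queue: Deque[Tuple[float, float]] = deque()

    def on_reading(self, timestamp: float, pressure_bar: float) -> bool:
        """Handle one sensor reading; return True if the valve was actuated."""
        acted = False
        if pressure_bar > self.max_pressure_bar:
            # The safety action happens locally, with no cloud round trip.
            self.open_relief_valve()
            acted = True
        # The cloud still receives every reading, just not on the critical path.
        self.upload_queue.append((timestamp, pressure_bar))
        return acted


if __name__ == "__main__":
    actions = []
    controller = EdgePressureController(10.0, lambda: actions.append("open"))
    for t, p in enumerate([8.2, 9.7, 10.4, 9.1]):
        controller.on_reading(float(t), p)
    print(actions, len(controller.upload_queue))  # ['open'] 4
```

The design choice is that the decision path and the upload path are separated: a slow or busy cloud can delay the queue, but never the valve.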

What is Edge computing?

Karim Arabi, in an IEEE DAC 2014 keynote and subsequently in an invited talk at MIT’s MTL Seminar in 2015, defined edge computing broadly as:

“All computing outside the cloud, happening at the edge of the network, and more specifically in applications where real-time processing of data is required.”

In his definition, cloud computing operates on big data while edge computing operates on “instant data”, that is, real-time data generated by sensors or users. This processing takes place on embedded devices at the edge of the network, close to the IoT gateway that connects them to the cloud. We can think of these devices as local servers.

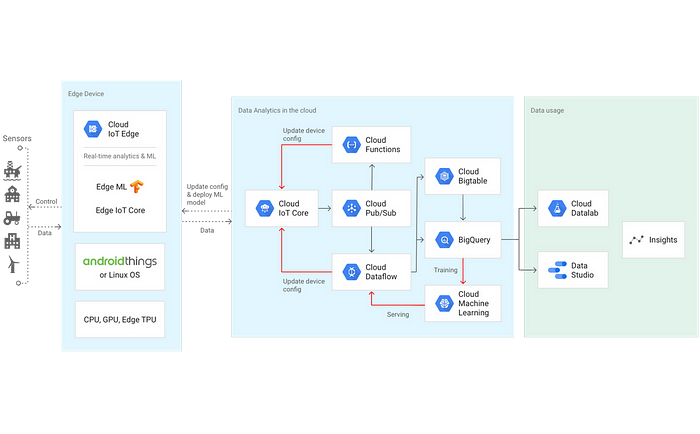

When it comes to AI, edge computing is a strong option for industry. The neural network is trained in the cloud, and a copy of the trained network is then deployed to the edge devices, where it can process real-time data without sending it to the cloud. This removes the network round trip, and with it the main source of latency, from every decision.
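
The train-in-the-cloud, infer-at-the-edge split can be illustrated without any ML framework. In this minimal sketch, a tiny logistic-regression model (standing in for the neural network) is trained on the "cloud" side, its weights are exported as JSON, and the "edge" side loads that copy and classifies new readings locally. The function names, the JSON format and the toy data are all assumptions made for illustration.

```python
import json
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_in_cloud(samples: Sequence[Tuple[List[float], int]],
                   epochs: int = 2000, lr: float = 0.1) -> str:
    """'Cloud' side: fit logistic regression by gradient descent, export as JSON."""
    n_features = len(samples[0][0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return json.dumps({"weights": w, "bias": b})


class EdgeModel:
    """'Edge' side: load the exported copy and run inference locally."""

    def __init__(self, exported: str):
        params = json.loads(exported)
        self.weights = params["weights"]
        self.bias = params["bias"]

    def predict(self, x: List[float]) -> int:
        z = sum(wi * xi for wi, xi in zip(self.weights, x)) + self.bias
        return 1 if sigmoid(z) >= 0.5 else 0


if __name__ == "__main__":
    # Toy data: normalised rate of pressure change -> 1 if abnormal.
    data = [([0.1], 0), ([0.2], 0), ([0.3], 0), ([0.8], 1), ([0.9], 1), ([1.0], 1)]
    exported = train_in_cloud(data)   # runs once, in the cloud
    edge = EdgeModel(exported)        # copy shipped to the device
    print(edge.predict([0.95]))       # classified locally
```

Only the small exported string travels from cloud to edge; after that, each prediction runs entirely on the device.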

So, in the case of the pressure regulator, an edge device located near the pipelines (or near one section of them) can quickly detect the pressure change and act on it. In a more advanced setup, edge AI uses embedded ML algorithms to analyse how the pressure inside the pipeline changes and varies over time. Since much of the raw data is processed locally, this reduces the load on network bandwidth and makes it easier to keep a continuous stream of sensor data flowing to the cloud.
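
One way the bandwidth saving can work is sketched below: the edge device splits the raw stream into fixed-size windows, flags readings that deviate strongly from their window's mean (a simple z-score rule standing in for the embedded ML model), and forwards one compact summary per window instead of every raw sample. The window size, the threshold of 3 standard deviations and the summary format are assumptions.

```python
import statistics
from typing import Dict, List


def summarize_window(readings: List[float], z_threshold: float = 3.0) -> Dict[str, object]:
    """Reduce one window of raw readings to a small summary for the cloud."""
    mean = statistics.fmean(readings)
    stdev = statistics.pstdev(readings)
    # Indices of readings far from the window mean (none if the window is flat).
    anomalies = [
        i for i, r in enumerate(readings)
        if stdev > 0 and abs(r - mean) / stdev > z_threshold
    ]
    return {
        "count": len(readings),
        "mean": mean,
        "min": min(readings),
        "max": max(readings),
        "anomaly_indices": anomalies,
    }


def edge_stream(raw: List[float], window: int = 100) -> List[Dict[str, object]]:
    """Split a raw stream into fixed windows and summarize each one."""
    return [summarize_window(raw[i:i + window]) for i in range(0, len(raw), window)]


if __name__ == "__main__":
    raw = [5.0] * 99 + [9.0] + [5.0] * 100   # one pressure spike in the first window
    for summary in edge_stream(raw):
        print(summary)
```

With a 100-sample window, the device sends one five-field summary instead of 100 raw values, together with the position of any spike it saw.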

Edge computing depends heavily on hardware, and major hardware and chip manufacturers have focused on edge computing devices over the past couple of years. The Intel Neural Compute Stick adds dedicated neural network acceleration to single-board computers (SBCs) such as the Raspberry Pi. Even the recent launch of the Raspberry Pi Compute Module 4 was aimed at edge computing. System-on-module (SoM) devices such as the NVIDIA Jetson TX2 and Google Coral Edge TPU development boards are among the most efficient edge devices. Google Cloud IoT Edge lets you run ML models trained in Google Cloud on Edge TPU devices or on GPU- and CPU-based accelerators, and it runs on Android or Linux-based devices.

Want to try Edge AI?

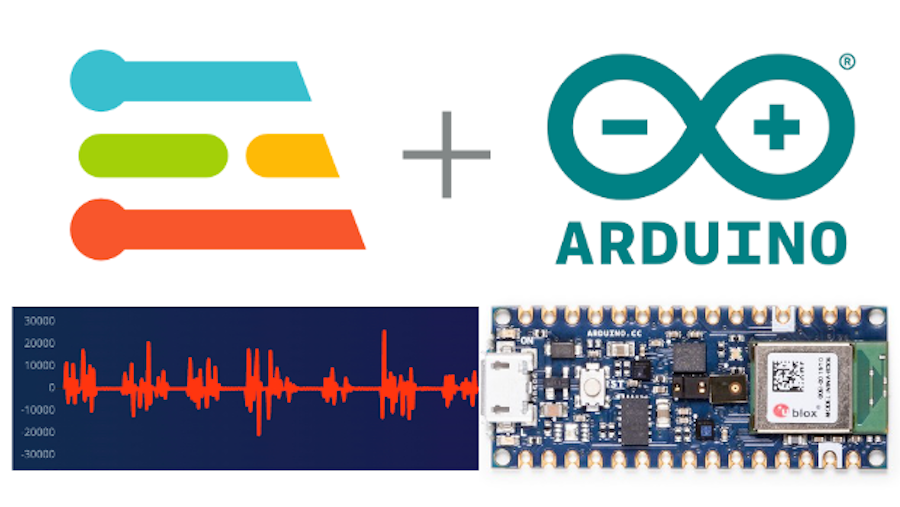

The trend of running ML on microcontrollers is called Embedded ML, or TinyML. Edge Impulse is a startup founded in 2019 by Zach Shelby and Jan Jongboom.

“Designed for software developers, engineers and domain experts to solve real problems using machine learning on edge devices without a PhD in machine learning.”

They have launched TinyML as a service, with open-source device SDKs, to bring machine learning to all embedded developers. It is freely available for the newest 32-bit Arduino boards, such as the Arduino Nano 33 BLE Sense. Edge Impulse makes it easy to collect real-time sensor data, build live signal-processing pipelines that take raw data all the way to neural networks, and test and deploy the result on any target device. Support for more devices and frameworks is planned for the future.
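
The "raw data to neural network" step usually starts with signal processing: the raw sensor stream is cut into overlapping windows, and each window is reduced to a few features that a small model can classify. The sketch below shows that generic idea in plain Python. It is not Edge Impulse's SDK or one of its processing blocks, and the window size, stride and chosen features (mean, RMS, peak-to-peak) are assumptions; on a microcontroller this step would typically run in C or C++.

```python
import math
from typing import List


def sliding_windows(signal: List[float], size: int, stride: int) -> List[List[float]]:
    """Cut a raw signal into (possibly overlapping) windows of `size` samples."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, stride)]


def extract_features(window: List[float]) -> List[float]:
    """Turn one window into a small feature vector: mean, RMS and peak-to-peak."""
    mean = sum(window) / len(window)
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    peak_to_peak = max(window) - min(window)
    return [mean, rms, peak_to_peak]


def to_feature_vectors(signal: List[float], size: int = 50, stride: int = 25) -> List[List[float]]:
    """Raw samples -> windows -> features, ready to feed a small classifier."""
    return [extract_features(w) for w in sliding_windows(signal, size, stride)]


if __name__ == "__main__":
    # Hypothetical accelerometer axis: a slow ramp repeating every 4 samples.
    samples = [float(i % 4) for i in range(100)]
    for features in to_feature_vectors(samples):
        print(features)
```

A 50% overlap (stride of half the window) means an event near a window boundary still appears whole in at least one window, at the cost of computing twice as many feature vectors.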

Wrap Up

Given the pay-as-you-go pricing of popular cloud services, edge computing can be reliable and cost-effective, since less data has to be sent to and processed in the cloud. However, setting up edge devices adds its own infrastructure costs. On security, some argue that edge computing distributes the risk, while others ask: what if an attacker hacks into the edge device?

Although some questions still need to be addressed, the industry is moving toward edge computing, and market demand for better embedded devices is increasing. Edge computing is best suited to applications that depend on short, predictable response times, and autonomous vehicles fall squarely into that category.