At Coolest Projects 2018, we showcased the Wia platform with a facial recognition Ferris wheel: if you're smiling, the wheel turns, and if you're not, it stops. Today we're going to talk about how we built this project, and maybe you'll be inspired to build something like it!

What we used

- Lego Ferris wheel kit

- Arduino MKR 1000

- L293D motor driver

- High torque DC motor

- Breadboard

- Raspberry Pi Zero W

- Raspberry Pi Camera Module (we used this one)

Getting Started

For those of you who don't have the time to build the entire Ferris wheel, here are getting-started tutorials on Wia for each of the components we used in this project.

- Arduino MKR 1000

- L293D motor driver & High torque DC motor

- Raspberry Pi Zero W

- Raspberry Pi Camera Module

The Lego Ferris wheel

For the Ferris wheel itself, we decided to go with this Lego kit. It took about 7 hours to build. The kit has an optional add-on motor, but we decided to create our own solution.

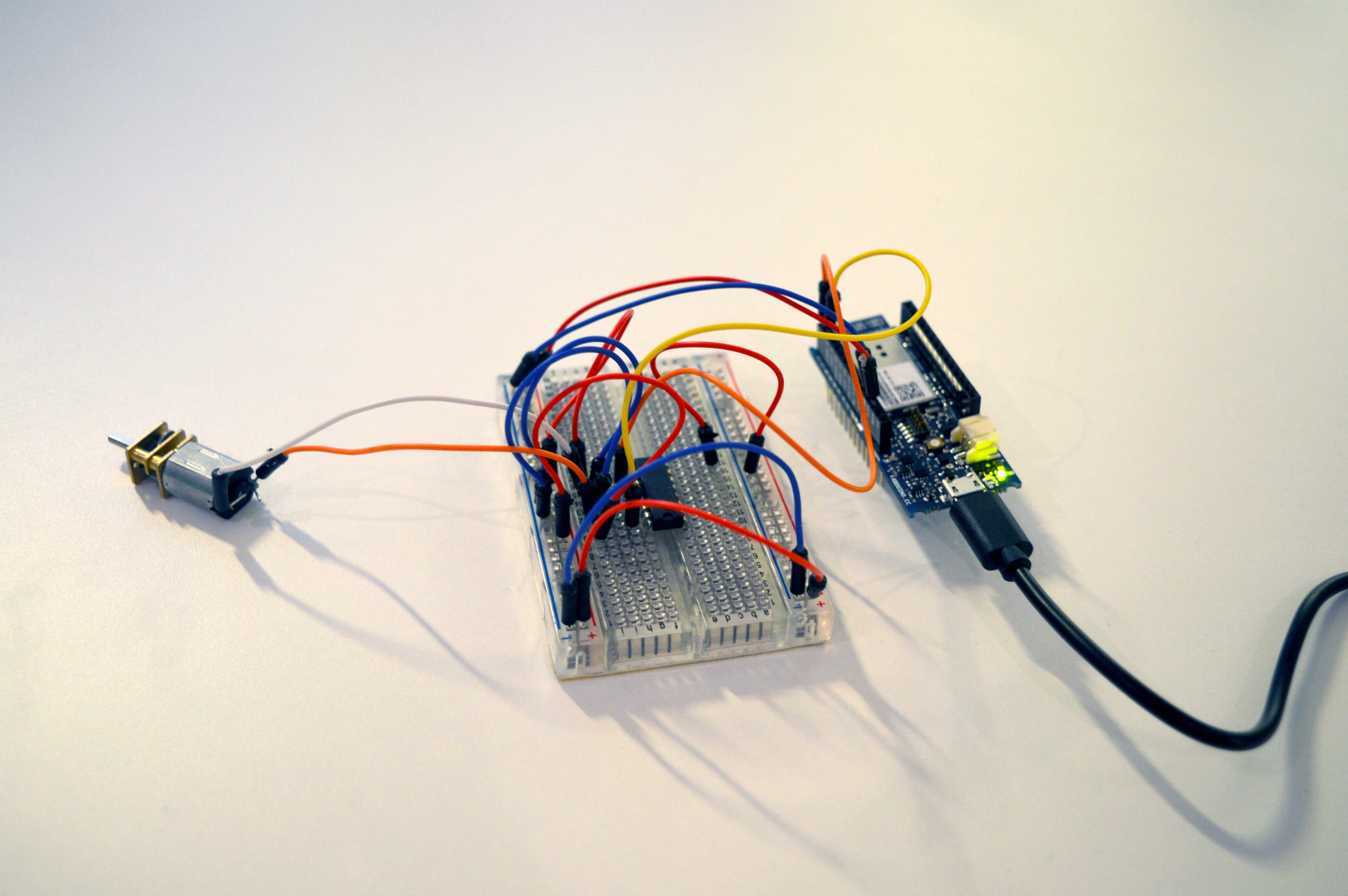

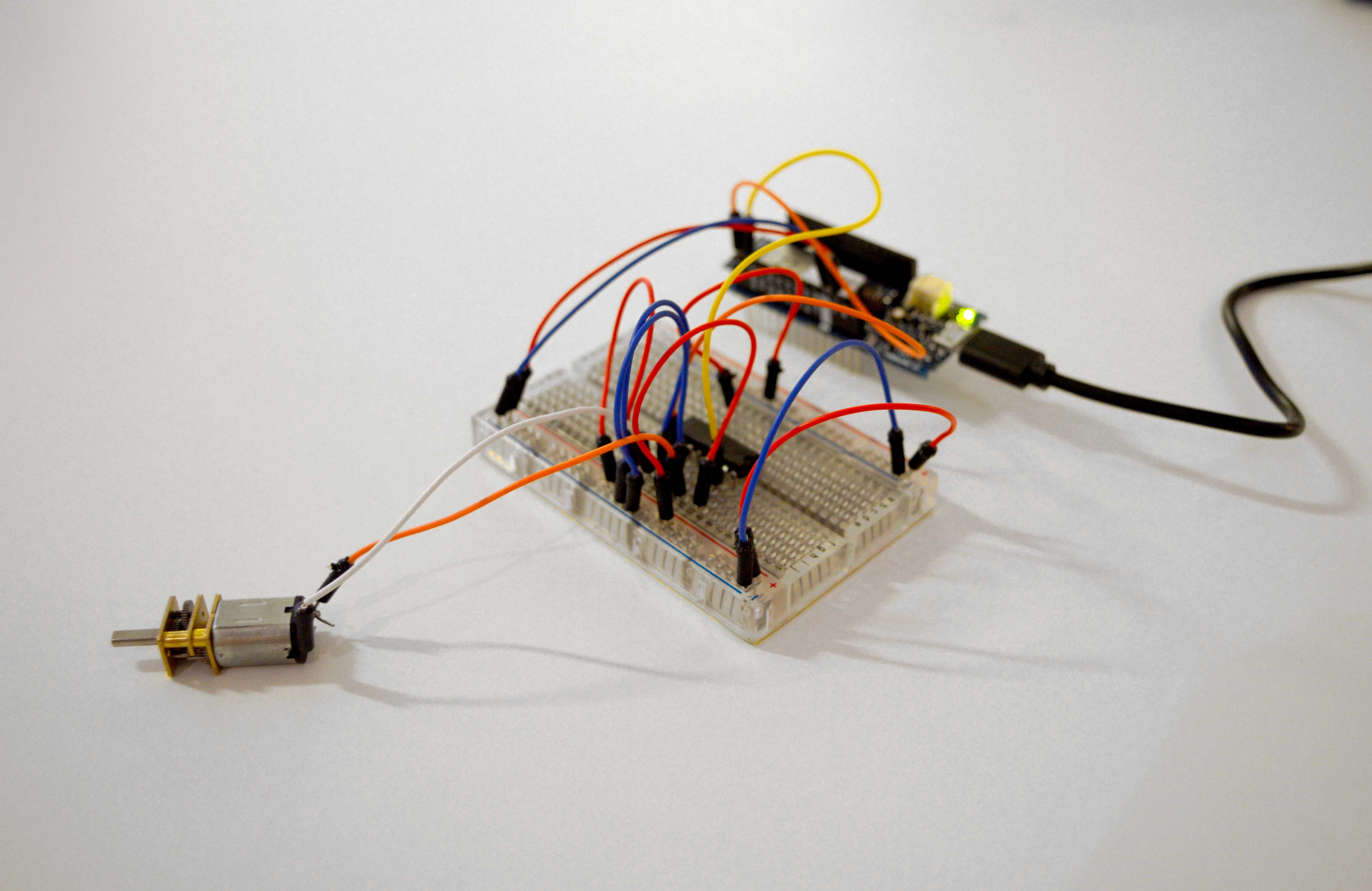

Driving the motor

We used a high torque DC motor to turn the wheel, and the L293D motor driver to drive the motor.

Click here to read in depth about how we set this up, along with a basic code snippet for making the motor turn. We'll build upon this code later in this article, when we add in MQTT functionality to listen for Commands.

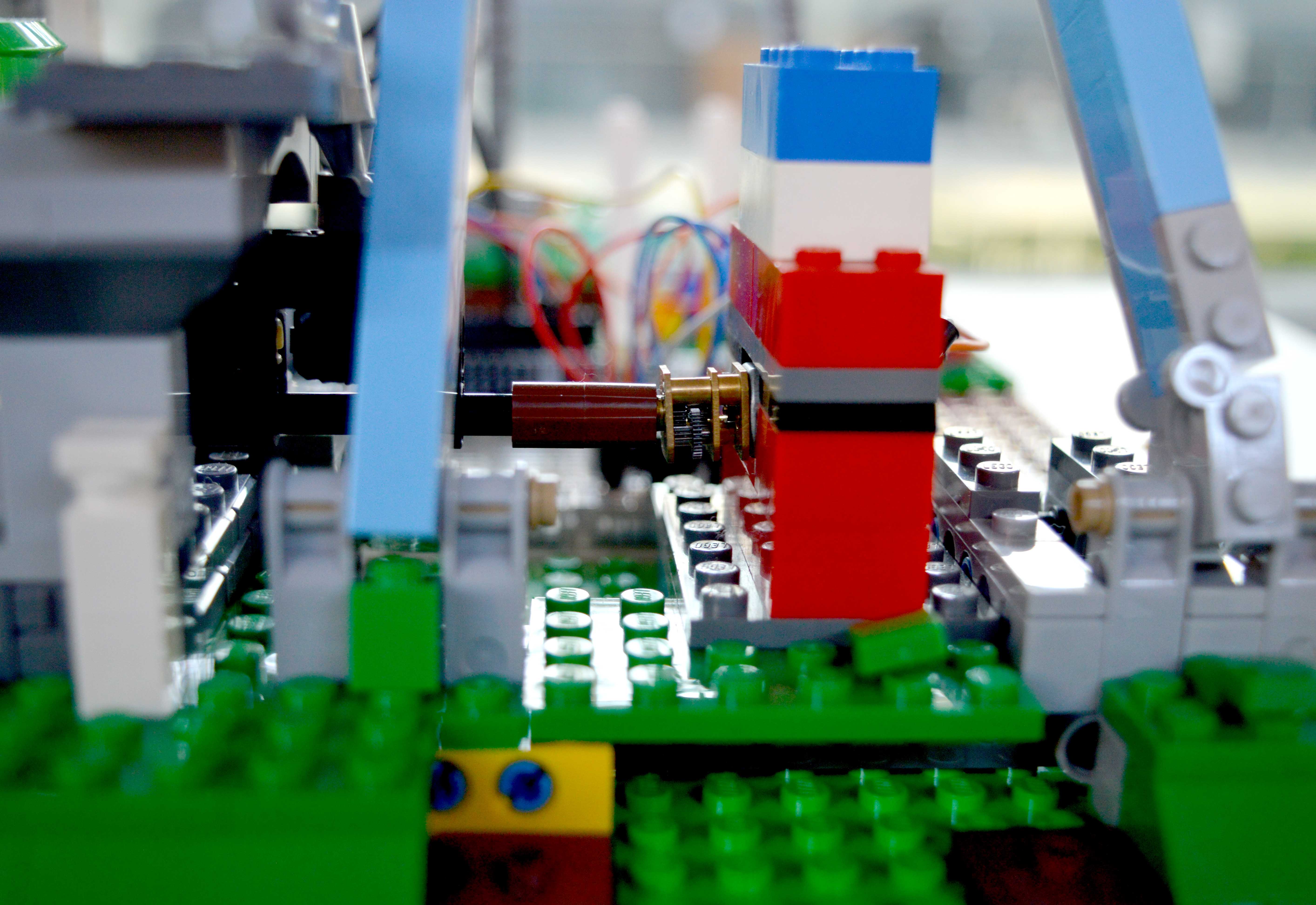

Attaching the motor to the wheel

The next step was to hook up the DC motor to the Ferris wheel mechanism and make it turn. This proved to be quite challenging, and required some dismantling of the lower part of the structure to make space for the components. We found that the motor's shaft fit almost perfectly into one of the Lego pieces. All we had to do was mount the motor in the correct place and make sure it wouldn't move around. So, we built a little Lego enclosure for the motor out of spare pieces, leaving space at the back for connecting the wires to the motor's terminals.

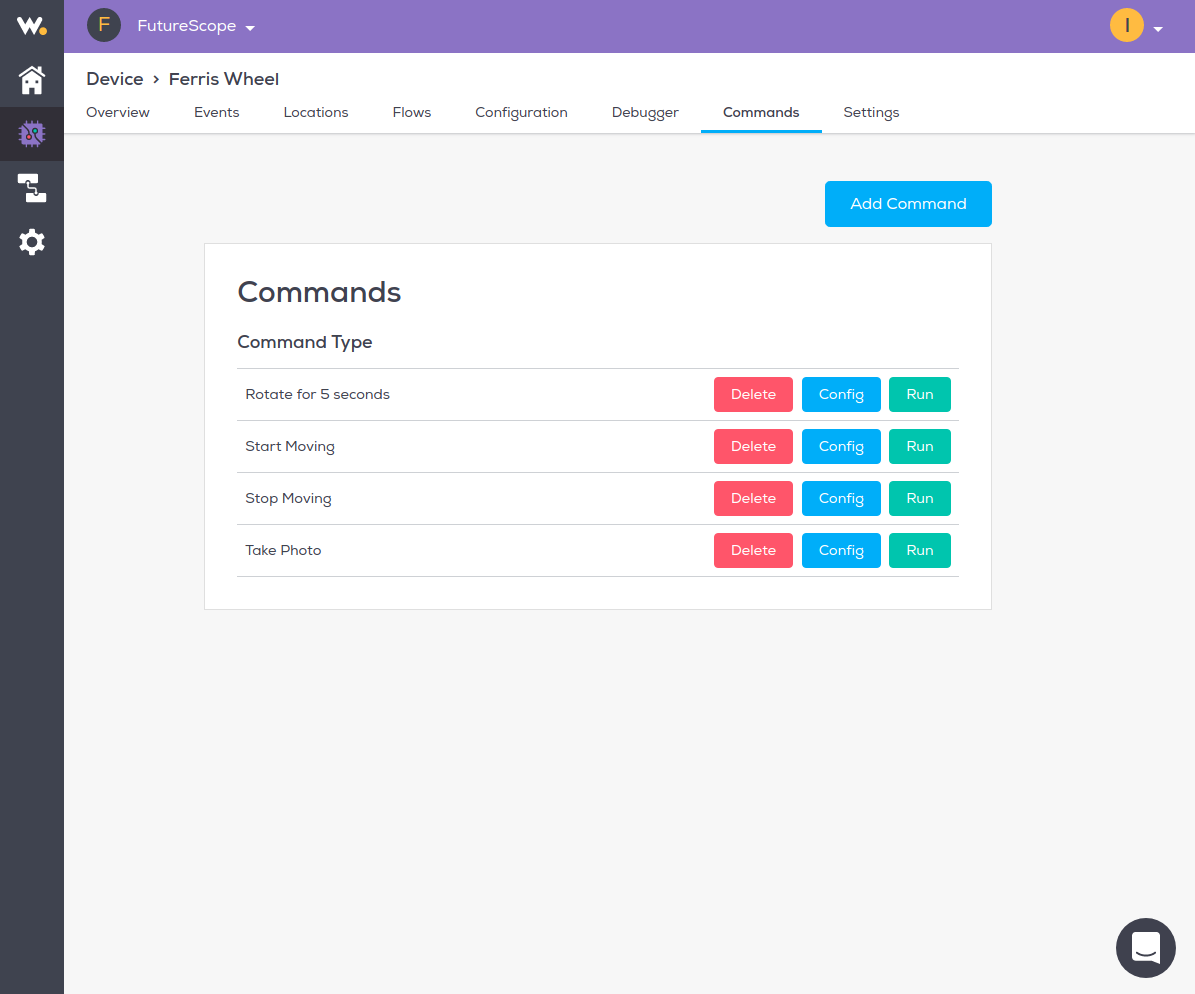

Listening for Commands

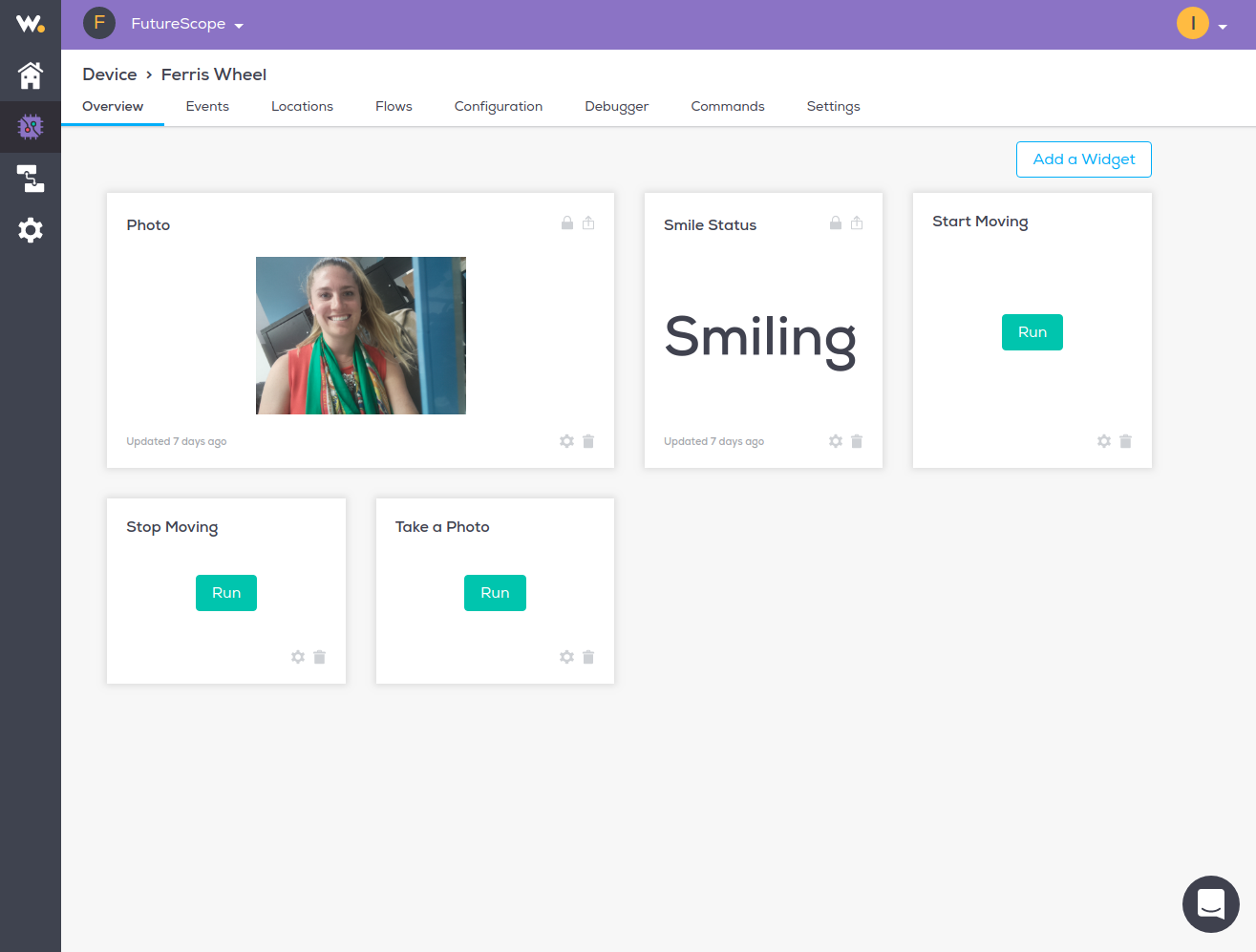

Next, we wanted to create some Commands for the Arduino to listen for, and rotate the motor accordingly. The first step was to create the Commands in the Wia dashboard. As you can see, we created some basic Commands for manually controlling the wheel, as well as a 'take photo' Command, which we'll get to later.

We then updated the Arduino code to listen for the Commands over MQTT. Here's the code:

    #include <WiFi101.h>
    #include <MQTT.h>

    const char WIFI_SSID[] = "wifi-ssid";     // WiFi SSID
    const char WIFI_PASS[] = "wifi-password"; // WiFi password

    // Get this from the Wia dashboard. It should start with `d_sk`.
    const char* device_secret_key = "device-secret-key";

    WiFiClient wifiClient;
    MQTTClient mqttClient;

    int status = WL_IDLE_STATUS;

    // Wia Cloud MQTT parameters
    char mqttCloudServer[] = "api.wia.io";
    int mqttCloudPort = 1883; // Default MQTT port
    // The device secret key is used as the MQTT username.
    const char* mqttCloudUsername = device_secret_key;
    char mqttCloudPassword[] = " ";

    const String deviceId = "device-id"; // Starts with dev_, found in the Wia dashboard

    // Topics, one per Command configured in the Wia dashboard
    String takePhotoCommandTopic = "devices/" + deviceId + "/commands/take-photo/run";
    String rotateForFiveCommandTopic = "devices/" + deviceId + "/commands/rotate-for-5-seconds/run";
    String startMovingCommandTopic = "devices/" + deviceId + "/commands/start-moving/run";
    String stopMovingCommandTopic = "devices/" + deviceId + "/commands/stop-moving/run";

    // L293D input pins
    const int motorPin1 = 7;
    const int motorPin2 = 6;

    void messageReceived(String &topic, String &payload) {
        Serial.println("incoming: " + topic + " - " + payload);

        if (topic.equals(rotateForFiveCommandTopic)) {
            Serial.println("Rotating for 5 seconds.....");
            // Start rotating
            digitalWrite(motorPin1, HIGH);
            digitalWrite(motorPin2, LOW);
            // Keep rotating for 5 seconds (this blocks the sketch)
            delay(5000);
            // Stop rotating
            digitalWrite(motorPin1, LOW);
            digitalWrite(motorPin2, LOW);
        } else if (topic.equals(startMovingCommandTopic)) {
            Serial.println("Start rotating.....");
            // Start rotating
            digitalWrite(motorPin1, HIGH);
            digitalWrite(motorPin2, LOW);
        } else if (topic.equals(stopMovingCommandTopic)) {
            Serial.println("Stop rotating.....");
            // Stop rotating
            digitalWrite(motorPin1, LOW);
            digitalWrite(motorPin2, LOW);
        } else {
            Serial.println("Unhandled topic");
        }
    }

    void connect() {
        // Check for the presence of the WiFi module:
        if (WiFi.status() == WL_NO_SHIELD) {
            Serial.println("WiFi shield not present");
            // Don't continue:
            while (true);
        }

        // Refresh the cached status so a dropped WiFi link is retried on reconnect
        status = WiFi.status();

        // Attempt to connect to the WiFi network:
        while (status != WL_CONNECTED) {
            Serial.print("Attempting to connect to SSID: ");
            Serial.println(WIFI_SSID);
            // Connect to WPA/WPA2 network. Change this line if using open or WEP network:
            status = WiFi.begin(WIFI_SSID, WIFI_PASS);
            // Wait 5 seconds for connection:
            delay(5000);
        }

        Serial.println("\nconnected!\n");
        Serial.print("\nIP address: ");
        Serial.println(WiFi.localIP());

        // Point the MQTT client at the Wia broker
        mqttClient.begin(mqttCloudServer, mqttCloudPort, wifiClient);

        Serial.println("start wia connect");
        Serial.println();

        while (!mqttClient.connect("wiatest", mqttCloudUsername, mqttCloudPassword)) {
            Serial.print("*");
            delay(500);
        }

        Serial.println("Connected to MQTT");

        mqttClient.onMessage(messageReceived);

        mqttClient.subscribe(takePhotoCommandTopic);
        mqttClient.subscribe(rotateForFiveCommandTopic);
        mqttClient.subscribe(startMovingCommandTopic);
        mqttClient.subscribe(stopMovingCommandTopic);
    }

    void setup() {
        Serial.begin(115200);

        pinMode(motorPin1, OUTPUT);
        pinMode(motorPin2, OUTPUT);

        // Make sure the motor is stopped at startup
        digitalWrite(motorPin1, LOW);
        digitalWrite(motorPin2, LOW);

        connect();
    }

    void loop() {
        mqttClient.loop();
        delay(1000);

        // Reconnect if the MQTT session has dropped
        if (!mqttClient.connected()) {
            connect();
        }
    }

After uploading that code to the Arduino, we could then simply click 'Run' on the Commands in the Wia dashboard, and the Ferris wheel would turn accordingly.
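
The four topics in the Arduino sketch all follow the same pattern: devices/, the device ID, /commands/, the Command slug, then /run. As a rough illustration of that pattern (not part of the project code), here is a small JavaScript sketch that builds a topic and pulls the slug back out of one. The helper names `commandTopic` and `slugFromTopic` and the device ID `dev_example` are made up for this example.

```javascript
// Sketch only: mirrors the topic strings hard-coded in the Arduino sketch.
// commandTopic and slugFromTopic are hypothetical helper names.
function commandTopic(deviceId, slug) {
  return 'devices/' + deviceId + '/commands/' + slug + '/run';
}

// Returns the Command slug for a matching topic, or null for anything else.
function slugFromTopic(topic) {
  var match = /^devices\/[^\/]+\/commands\/([^\/]+)\/run$/.exec(topic);
  return match ? match[1] : null;
}

var topic = commandTopic('dev_example', 'start-moving');
console.log(topic);
console.log(slugFromTopic(topic));
console.log(slugFromTopic('devices/dev_example/events/photo'));
```

Anything that doesn't match the pattern comes back as null, which corresponds to the "Unhandled topic" branch in the Arduino handler.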

The facial recognition camera

For the facial recognition camera, we used a Raspberry Pi Zero W with a Raspberry Pi camera module.

Read in depth about how we set this up here. This covers setting up the Raspberry Pi with the camera module, using Node.js to take a photo and post it as an Event to the Wia platform, and setting up a basic Flow to check if the subject of the photo is smiling or not.

We built upon this setup a bit more, and added the ability for the Raspberry Pi to listen for the 'take photo' Command. Here's the code:

    'use strict';

    var wia = require('wia')('device-secret-key');
    var fs = require('fs');
    var RaspiCam = require('raspicam');

    wia.events.publish({
        name: 'started'
    });

    // Set up the camera
    var camera = new RaspiCam({
        mode: 'photo',
        output: __dirname + '/photo.jpg',
        encoding: 'jpg'
    });

    // Listen for the "start" event triggered when the start method has been successfully initiated
    camera.on('start', function() {
        console.log('Starting to take photo.');
    });

    // Listen for the "read" event triggered when each new photo/video is saved
    camera.on('read', function(err, timestamp, filename) {
        if (err) {
            console.error('Could not read the new photo.', err);
            return;
        }
        console.log('New photo created.', timestamp, filename);

        // Publish the photo to Wia
        wia.events.publish({
            name: 'photo',
            file: fs.createReadStream(__dirname + '/' + filename)
        });
    });

    wia.stream.on('connect', function() {
        console.log('Connected to stream.');
        wia.events.publish({name: 'connected'});
    });

    // Subscribe to the 'take-photo' Command after a 12.5 second delay
    setTimeout(function() {
        wia.commands.subscribe({
            slug: 'take-photo'
        }, function(err, data) {
            wia.events.publish({name: 'gotCommand'});
            console.log('In command callback. Taking photo.');

            // Take a photo
            camera.start();
        });
    }, 12500);

    wia.stream.connect();
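
The script above waits a fixed 12.5 seconds before subscribing to the Command. One alternative worth trying is to subscribe from inside the connect handler instead, so the subscription happens as soon as the stream is up. The sketch below shows only that pattern and runs without a Raspberry Pi: `fakeStream` is a plain Node.js EventEmitter standing in for wia.stream, and `subscribeToCommand` is a made-up stand-in for wia.commands.subscribe. Whether the Wia SDK behaves well when subscribing at that point is an assumption we have not verified here.

```javascript
'use strict';

var EventEmitter = require('events');

// Stand-in for wia.stream so the sketch runs without a device or network.
var fakeStream = new EventEmitter();
var subscribed = [];

// Hypothetical stand-in for wia.commands.subscribe: it only records the slug.
function subscribeToCommand(slug) {
  subscribed.push(slug);
}

// Subscribe once the connection is reported, rather than after a fixed delay.
fakeStream.once('connect', function () {
  subscribeToCommand('take-photo');
});

// Nothing is subscribed until the connect event fires.
console.log(subscribed.length);

// Simulate the connection being established.
fakeStream.emit('connect');
console.log(subscribed);
```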

Building the Flow

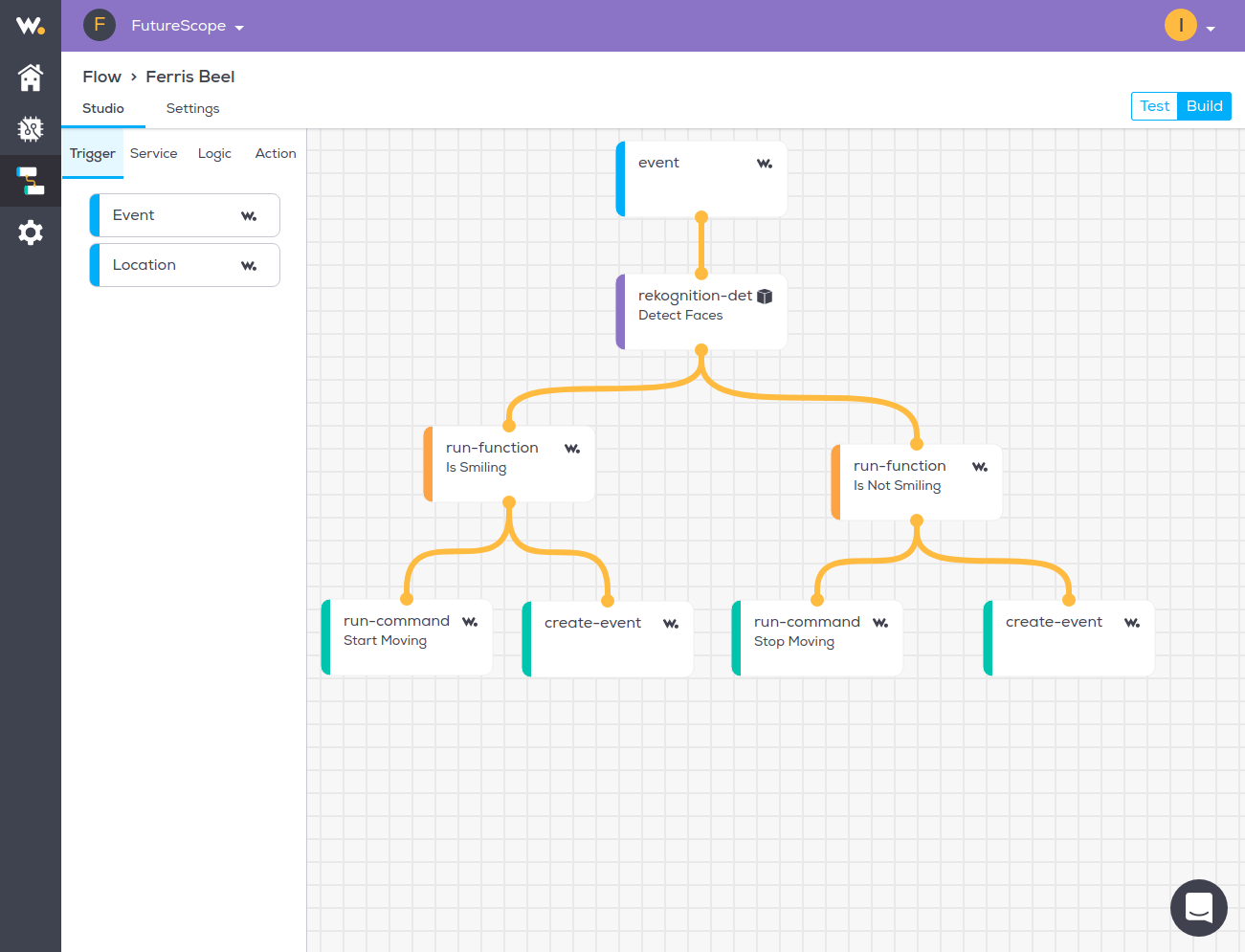

Our next step was to make the Raspberry Pi and Arduino work together over Wia, by creating a Flow.

In the Wia dashboard, we created a new Flow. The trigger is the photo Event created when the 'take photo' Command is run. This then goes through a 'Detect Faces' node, the output of which branches off into two logic nodes - one to output a string 'Smiling' if the subject is smiling, and one to output a string 'Not smiling' if the subject isn't smiling.

Here's the code for the 'smiling' logic node:

    if (input.body.faceDetails) {
        input.body.faceDetails.forEach(function(face) {
            if (face.smile.value == true) {
                output.process = true;
                output.body.data = "Smiling";
            } else {
                output.process = false;
            }
        });
    } else {
        output.process = false;
    }

...and here's the code for the 'not smiling' logic node:

    if (input.body.faceDetails) {
        input.body.faceDetails.forEach(function(face) {
            if (face.smile.value == false) {
                output.process = true;
                output.body.data = "Not smiling";
            } else {
                output.process = false;
            }
        });
    } else {
        output.process = false;
    }
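
To see how a logic node behaves before wiring it into a Flow, it can help to run the same code locally against a mock input. The sketch below wraps the 'smiling' node code in a function called `runSmilingNode` (a name made up for this example) and feeds it hand-written inputs. The input shape is taken from the node code above; the sample values are invented, and the starting `output` object is an assumption about what the Flow provides.

```javascript
// Local harness for the 'smiling' node logic. The body of the function is the
// node code from the article; the input and output objects are mocks.
function runSmilingNode(input) {
  var output = { process: false, body: {} };
  if (input.body.faceDetails) {
    input.body.faceDetails.forEach(function (face) {
      if (face.smile.value == true) {
        output.process = true;
        output.body.data = 'Smiling';
      } else {
        output.process = false;
      }
    });
  } else {
    output.process = false;
  }
  return output;
}

// Made-up sample inputs
var oneSmile = { body: { faceDetails: [{ smile: { value: true } }] } };
var mixed = { body: { faceDetails: [
  { smile: { value: true } },
  { smile: { value: false } }
] } };

console.log(runSmilingNode(oneSmile).process);     // true
console.log(runSmilingNode(mixed).process);        // false
console.log(runSmilingNode({ body: {} }).process); // false
```

Note the second case: because every face overwrites output.process, the last face in the list decides the result when several people are in the photo.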

If the subject is smiling, the 'start moving' Command is run, triggering the Arduino to start turning the motor. If the subject isn't smiling, the 'stop moving' Command is run, stopping the motor from turning.
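
The decision the Flow makes can be summarised in a few lines of JavaScript. This is a sketch of the idea rather than code from the Flow: `commandForFaces` is a hypothetical helper, and it assumes we want the wheel to turn if anyone in the photo is smiling, which is a slightly different rule from the per-face logic nodes. The slugs match the Command names used in the Arduino topics.

```javascript
// Hypothetical helper: picks the Command slug for a list of detected faces.
function commandForFaces(faceDetails) {
  if (!faceDetails || faceDetails.length === 0) {
    return null; // no face detected: run no Command, leave the wheel as it is
  }
  // Assumption: one smiling face is enough to start the wheel.
  var anySmiling = faceDetails.some(function (face) {
    return Boolean(face.smile) && face.smile.value === true;
  });
  return anySmiling ? 'start-moving' : 'stop-moving';
}

console.log(commandForFaces([{ smile: { value: true } }]));
console.log(commandForFaces([{ smile: { value: false } }]));
console.log(commandForFaces([]));
```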

Creating Widgets

You'll notice that we also have 'create event' nodes at the end of the Flow. This is where we use the output.body.data values from the logic nodes - to create Widgets!

Each time a photo is taken, a new Event is created with the data equal to either 'Smiling' or 'Not smiling'. Over on the Device overview page of the dashboard, we were able to create a text Widget that would display this text and auto-update every time a new photo is taken. We also set up handy Widgets for running the various Commands, as well as displaying the photo taken by the Raspberry Pi!
