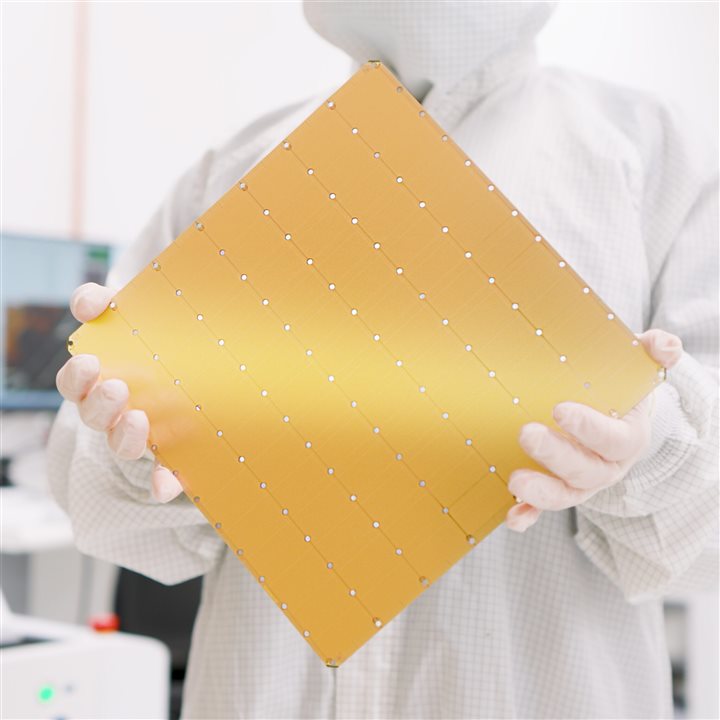

(Image Credit: Cerebras)

Cerebras recently developed an AI inference chip that it positions as a competitor to NVIDIA's DGX H100. The chip packs 44GB of on-chip memory, and by scaling across multiple systems, Cerebras says it can serve AI models with billions or even trillions of parameters. When a model exceeds the memory capacity of a single wafer, Cerebras splits it at layer boundaries and maps the resulting pieces onto several CS-3 systems. A single CS-3 system can handle a 20-billion-parameter model, while four systems can handle a 70-billion-parameter model.
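As a rough sanity check on those CS-3 figures, the sketch below assumes 16-bit (2-byte) weights, treats all 44GB of each system as available for weights, and uses hypothetical models whose weights are spread evenly across layers. It packs consecutive layers onto a system and starts a new system whenever the next layer would not fit, so splits only happen at layer boundaries. It ignores activations and KV cache, and the function names are hypothetical; this is a back-of-the-envelope illustration, not Cerebras's actual placement algorithm.

```python
import math

BYTES_PER_PARAM = 2   # assumption: 16-bit weights
CAPACITY_GB = 44.0    # assumption: all 44 GB of on-wafer memory holds weights

def weights_gb(params_billions: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM

def uniform_layers(params_billions: float, num_layers: int) -> list[float]:
    """Hypothetical model whose weights are split evenly across its layers."""
    return [weights_gb(params_billions) / num_layers] * num_layers

def partition_layers(layer_sizes_gb: list[float], capacity_gb: float) -> list[list[int]]:
    """Greedily assign consecutive layers to systems, splitting only between layers."""
    systems: list[list[int]] = [[]]
    used = 0.0
    for i, size in enumerate(layer_sizes_gb):
        if size > capacity_gb:
            raise ValueError(f"layer {i} ({size} GB) exceeds one system's capacity")
        if used + size > capacity_gb:
            systems.append([])  # start a new system at this layer boundary
            used = 0.0
        systems[-1].append(i)
        used += size
    return systems

small = partition_layers(uniform_layers(20, 40), CAPACITY_GB)  # 40 GB of weights
large = partition_layers(uniform_layers(70, 80), CAPACITY_GB)  # 140 GB of weights
print(len(small), len(large))
print([len(s) for s in large])
print(math.ceil(weights_gb(70) / CAPACITY_GB))  # lower bound on systems needed
```

Under these assumptions, a 20B model's 40GB of weights fits on one system, and a 70B model's 140GB needs four, which lines up with the numbers Cerebras quotes.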

According to Cerebras, its platform runs models with 16-bit weights, which preserve more precision than the 8-bit models some competitors use and avoid the performance loss that comes with 8-bit quantization. Cerebras claims its 16-bit models deliver a 5% performance improvement over 8-bit models on math, reasoning, and multi-turn conversation tasks, producing more reliable and precise results.
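To illustrate why fewer bits mean less precision, the sketch below rounds a hypothetical tensor of small, normally distributed weights to 16-bit and 8-bit formats and compares the worst-case rounding error. IEEE half precision (float16, via the `struct` format `'e'`) stands in for whatever 16-bit format Cerebras actually uses, and symmetric per-tensor int8 quantization stands in for competitors' 8-bit formats, which may differ (FP8, for example). Rounding error on a toy tensor says nothing directly about Cerebras's 5% benchmark claim.

```python
import random
import struct

def to_fp16(x: float) -> float:
    """Round a float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def int8_roundtrip(weights: list[float]) -> list[float]:
    """Symmetric per-tensor int8 quantization: the largest |w| maps to 127."""
    scale = max(abs(w) for w in weights) / 127
    return [max(-127, min(127, round(w / scale))) * scale for w in weights]

random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]  # hypothetical weight tensor

fp16_err = max(abs(w - to_fp16(w)) for w in weights)
int8_err = max(abs(w - q) for w, q in zip(weights, int8_roundtrip(weights)))
print(f"max rounding error  fp16: {fp16_err:.2e}  int8: {int8_err:.2e}")
```

With weights of this magnitude, the 8-bit grid is much coarser than the 16-bit one, so the int8 round trip loses noticeably more information per weight.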

Developers can integrate the Cerebras inference platform through its chat interface or its API, which follows OpenAI's Chat Completions format, so developers already familiar with that format can adopt it easily. One of the platform's standout features is running Llama 3.1 70B at 450 tokens per second, which Cerebras says makes it the only platform to reach such speeds with a model that large. That performance matters for real-time LLM applications and complex AI workflows, including scaffolding techniques that consume a large number of tokens.
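For readers who haven't used the Chat Completions format, the sketch below builds (but does not send) a request in that shape using only the Python standard library. The endpoint URL, the model ID `llama3.1-70b`, and the `YOUR_API_KEY` placeholder are assumptions for illustration; check Cerebras's own documentation for the actual values.

```python
import json
import urllib.request

# Assumptions: endpoint URL and model ID are illustrative, not confirmed by the article.
API_URL = "https://api.cerebras.ai/v1/chat/completions"
MODEL = "llama3.1-70b"

def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions-style POST request."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

req = build_request("Summarize wafer-scale inference in one sentence.", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

Sending it would be a call to `urllib.request.urlopen(req)` with a real key; in the Chat Completions format, the reply text sits at `choices[0]["message"]["content"]` in the JSON response.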

Cerebras is pushing for adoption by offering developers one million free tokens per day at launch. For larger deployments, the company says its pricing will undercut popular GPU cloud services. Cerebras launched the platform with support for the Llama 3.1 8B and 70B models, and it plans to add support for larger models such as Llama 3.1 405B and Mistral Large 2.
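To put the daily allowance in perspective, the arithmetic below combines it with the 450 tokens-per-second figure for Llama 3.1 70B. It assumes every counted token is generated at that output rate and that the allowance covers only those tokens, which may not match how Cerebras actually meters usage; the 10,000-token scaffolded task is a hypothetical workload.

```python
DAILY_FREE_TOKENS = 1_000_000  # launch allowance cited in the article
TOKENS_PER_SECOND = 450        # Cerebras's quoted Llama 3.1 70B speed
TOKENS_PER_TASK = 10_000       # hypothetical scaffolded, multi-step task

seconds_to_spend_budget = DAILY_FREE_TOKENS / TOKENS_PER_SECOND
tasks_per_day = DAILY_FREE_TOKENS // TOKENS_PER_TASK
seconds_per_task = TOKENS_PER_TASK / TOKENS_PER_SECOND

print(f"{seconds_to_spend_budget / 60:.1f} min of generation per day, "
      f"{tasks_per_day} tasks at {seconds_per_task:.1f} s each")
```

Under these assumptions, the free tier amounts to roughly 37 minutes of continuous generation, or about 100 such tasks at around 22 seconds each.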

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell