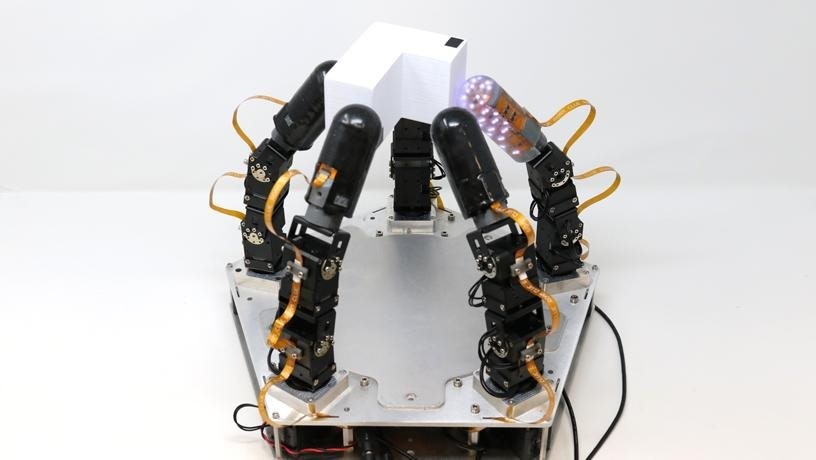

Columbia University's robotic hand can perform complex manipulation tasks. (Image Credit: Columbia University ROAM Lab)

Columbia University engineers developed a prototype robotic hand that manipulates objects without vision, even in the dark. The team's goal was for the hand to hold and reposition more complex objects in its grasp using touch sensing alone, without relying on support surfaces. This isn't easy to accomplish, because the fingers must be continually repositioned to keep the object stable. Touch sensing made that possible, and because the hand needs no visual feedback to perform its tasks, it can work in darkness.

"While our demonstration was on a proof-of-concept task, meant to illustrate the capabilities of the hand, we believe that this level of dexterity will open up entirely new applications for robotic manipulation in the real world," said Matei Ciocarlie, associate professor at Columbia. "Some of the more immediate uses might be in logistics and material handling, helping ease up supply chain problems like the ones that have plagued our economy in recent years, and in advanced manufacturing and assembly in factories."

The hand features five fingers with touch-sensing technology and 15 actuated joints. The engineers used deep reinforcement learning to test the hand's capabilities, allowing it to adapt, improve, and replicate tasks under varying constraints through autonomous learning and exploration.

The hand has five fingers with touch-sensing technology. (Image Credit: Columbia University ROAM Lab)

The motor learning algorithms took only tactile and proprioceptive data as input, with no vision. Thanks to physics simulators and highly parallel processors, the robotic hand completed a year's worth of practice in just a few hours. The researchers then applied this trained manipulation skill to the robotic hand, achieving the desired dexterity. Ciocarlie noted that "the directional goal for the field remains assistive robotics in the home, the ultimate proving ground for real dexterity. In this study, we've shown that robot hands can also be highly dexterous based on touch sensing alone. Once we also add visual feedback into the mix along with touch, we hope to be able to achieve even more dexterity and one day start approaching the replication of the human hand."

Next, the team wants to add semantic, abstract, and embodied intelligence to the robot hand's capabilities. Large language models, such as Google's PaLM or OpenAI's GPT-4, can provide the semantic intelligence.

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell