Introduction

It has taken me more hours than planned to figure out what’s going on inside the MAX25405 Firmware Framework. At long last I now understand what this framework is capable of and, more importantly, what it is not able to do.

The first thing, which has been highlighted already, is that the MAX25405 Firmware Framework is not the same version as found on the MAX32620FTHR board. This framework code is somewhat dated and I would see it as an early alpha version of the installed binary.

Secondly, the code should definitely be treated more as a framework or as guidance than as a complete library. As it stands, the framework, when compiled, cannot determine or calculate any of the gesture results described in the datasheet.

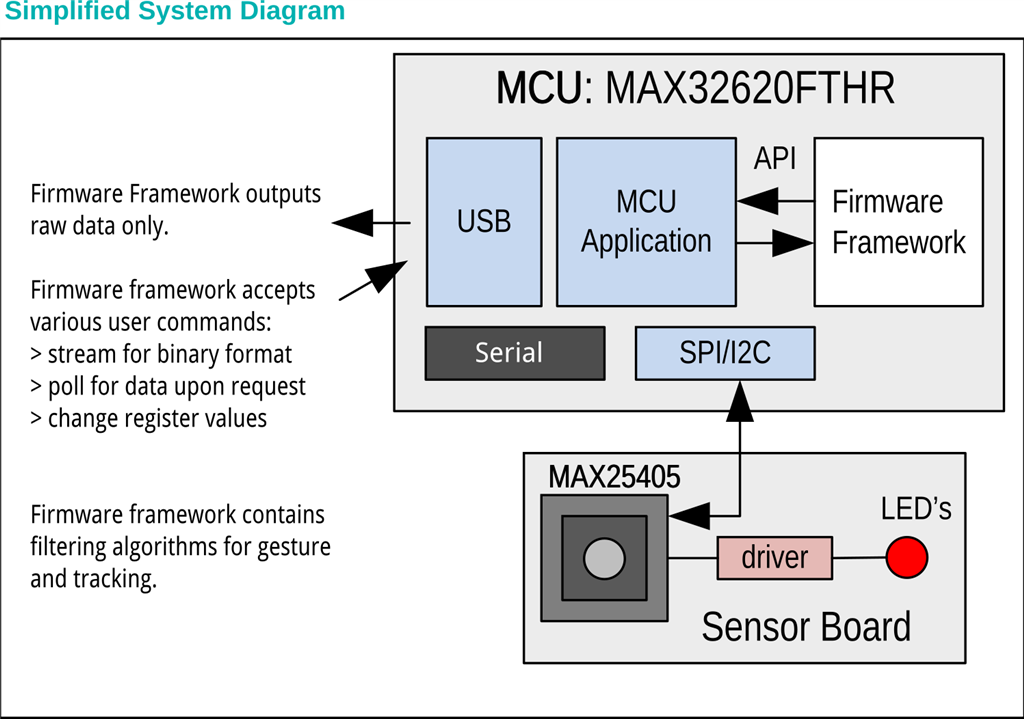

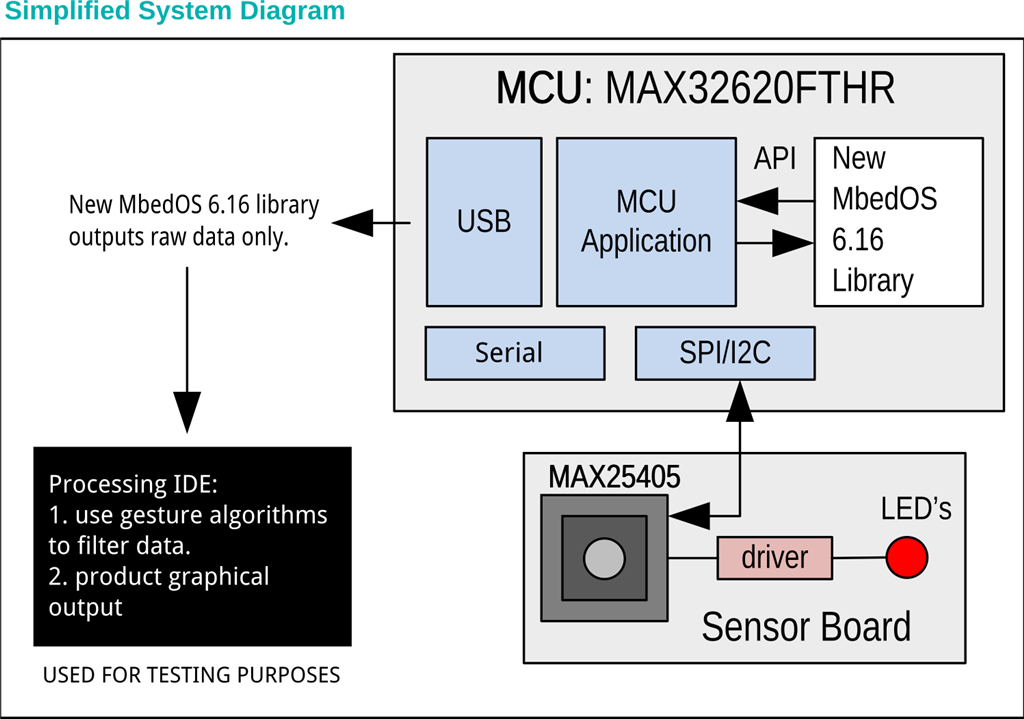

To help describe these two different setups I have taken the Simplified System Diagram provided in the datasheet (page 2) and modified it for each scenario.

So when you receive the Evaluation Kit, the MAX32620FTHR comes with embedded firmware that can identify different gestures, as described in the MAX25405 datasheet. This embedded firmware works with the Windows GUI software, and you can send it commands over USB Serial. You are unable to switch the serial output from USB Serial to the RX/TX pins.

The firmware framework, on the other hand, is a set of files that contains filtering algorithms and can read the SPI/I2C bus to extract raw data. It also responds to the defined user commands but does not provide a gesture result. The framework files can only be compiled using an old version of MbedOS together with the GCC compiler. You can also switch the serial output between USB Serial and the RX/TX pins (once a bug has been fixed).

As an alternative, I wrote some new code of my own using the latest MbedOS 6.16, which also extracts the raw values from the MAX25405 via either the SPI or I2C bus. (You are welcome to use the base framework as it stands, which has been uploaded to GitHub.)

So as it stands, the only way to have gestures identified is via the embedded firmware.

Well I’m not going to let this deter me. This is a design challenge after all.

In fact, struggling to get a desired result out of an unfamiliar library is quite a common problem, and this is a great example of why experimentation is absolutely necessary. There is limited value in pausing this code framework at runtime for debugging purposes when you have 60 pixels changing in real time every couple of milliseconds.

So what does one do?

Well at least there is software that provides raw pixel data. I took that as my starting point.

My next step was to understand how this data is analysed to derive a gesture result, based on the Firmware Framework code. I could clearly see that this framework included a range of filtering techniques, but what exactly were they doing, and could they really be used to differentiate one gesture from another?

I had to find out as I certainly did not want to get stuck down some rabbit hole.

I also wanted to know whether this methodology is actually the best approach to use to determine gesture results.

Making sense of the Gesture Algorithms

Formulating a revised plan

I started by breaking down the gesture library code into 3 steps, which I could replicate.

I could see that the first step taken was to smooth the data using a noise filtering algorithm (library function: noiseWindow3Filter).
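To illustrate that first step, here is a minimal standalone Java sketch of a three-frame window filter for a single pixel. It uses the same weighting as the Processing code later in this post (`WINDOW_FILTER_ALPHA = 0.5`). The class and method names are illustrative only and are not taken from the firmware framework.

```java
// Minimal sketch of a 3-frame window filter applied to one pixel.
// ALPHA mirrors WINDOW_FILTER_ALPHA from the Processing sketch in this post;
// the class and method names are hypothetical.
final class Window3FilterSketch {
    static final float ALPHA = 0.5f;

    // Blend the middle sample with the average of its two neighbours in time.
    static int filter(int prev, int mid, int next) {
        return (int) (ALPHA * mid + (1 - ALPHA) * (prev + next) / 2.0f);
    }

    public static void main(String[] args) {
        // A one-frame spike of 400 on a flat baseline of 100 is pulled down ...
        System.out.println(filter(100, 400, 100)); // prints 250
        // ... while a steady signal passes through unchanged.
        System.out.println(filter(100, 100, 100)); // prints 100
    }
}
```

Note that the output belongs to the middle frame, so this kind of filter delays the data by one frame.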

The second step subtracts the background values from the actual values to create what I call normalised values. I call them normalised because, when nothing is happening, every pixel ends up with a very similar value (i.e. very small variance).
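A minimal sketch of this background subtraction for one pixel might look like the following. It assumes the background is tracked with a slow exponential moving average, using the same `BACKGROUND_FILTER_ALPHA = 0.05` as the Processing code later in this post. The foreground filter there uses an alpha of 1.0, which passes the smoothed value straight through, so it is left out here. The class name and the `primed` flag are illustrative.

```java
// Minimal sketch: track a slow-moving background and subtract it.
// BACKGROUND_ALPHA mirrors BACKGROUND_FILTER_ALPHA from the Processing sketch;
// everything else (names, the primed flag) is hypothetical.
final class BackgroundSubtractionSketch {
    static final float BACKGROUND_ALPHA = 0.05f;

    private float background;
    private boolean primed = false;

    // Takes one smoothed pixel value and returns its normalised value.
    int normalise(int smoothed) {
        if (!primed) {
            background = smoothed; // seed the filter with the first sample
            primed = true;
        }
        // Exponential moving average: the background follows the input slowly.
        background = (1.0f - BACKGROUND_ALPHA) * background + BACKGROUND_ALPHA * smoothed;
        return (int) (smoothed - background);
    }
}
```

With an idle pixel sitting at 1000, the normalised value stays at 0. If the pixel then jumps to 2000, the first normalised value is roughly 950 and it decays on each following frame as the background catches up.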

The third step determines whether or not a gesture is in progress. For now, I am not sure whether this algorithm is complete. Following my tests (see later on), I had to modify the logic to make it work better. This step also calculates a centre of mass. I do not know what the purpose of this calculation is, as the current firmware framework code doesn’t use the value.
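The third step can be sketched in standalone Java as below. The gate combines the framework-style peak threshold with the extra max-minus-min spread check used in the Processing code later in this post. `MIN_SPREAD` is a hypothetical name for the literal 1000 used there, and returning `null` for an empty frame is a choice made for this sketch.

```java
// Minimal sketch of the "gesture in progress" gate and a centre-of-mass calculation.
// END_DETECTION_THRESHOLD matches the Processing sketch; MIN_SPREAD is a
// hypothetical name for the literal 1000 used there.
final class GestureGateSketch {
    static final int END_DETECTION_THRESHOLD = 50;
    static final int MIN_SPREAD = 1000;

    // True when the peak is above the noise floor and the frame has enough contrast.
    static boolean gestureInProgress(int[] normalised) {
        int max = Integer.MIN_VALUE;
        int min = Integer.MAX_VALUE;
        for (int v : normalised) {
            max = Math.max(max, v);
            min = Math.min(min, v);
        }
        return max >= END_DETECTION_THRESHOLD && (max - min) > MIN_SPREAD;
    }

    // Returns {x, y} in pixel units for a row-major frame, or null if the total mass is zero.
    static float[] centreOfMass(int[] pixels, int xres, int yres) {
        long xNumer = 0, yNumer = 0, mass = 0;
        for (int i = 0; i < xres * yres; i++) {
            xNumer += (long) (i % xres) * pixels[i]; // column index weighted by pixel value
            yNumer += (long) (i / xres) * pixels[i]; // row index weighted by pixel value
            mass += pixels[i];
        }
        if (mass == 0) {
            return null; // nothing to locate, avoid dividing by zero
        }
        return new float[] { (float) xNumer / mass, (float) yNumer / mass };
    }
}
```

For a 3x2 frame `{0, 0, 0, 0, 0, 1200}` the gate opens and the centre of mass lands on the bottom-right pixel at (2, 1). Halving the bright pixel to 600 keeps the peak above the threshold but fails the spread check, so no gesture is reported.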

I then had to decide on the best way to replicate these steps. The key was to keep things simple.

Using the Processing IDE

So, after a good bit of head scratching, I realised that the Processing IDE could help me with my experiments as I was very familiar with it and it is targeted at users wanting to develop visualisation projects.

Before jumping straight in, I wanted to prepare for this environment. I decided to slow things down a bit in case overloading the serial port with too much data caused things to crash.

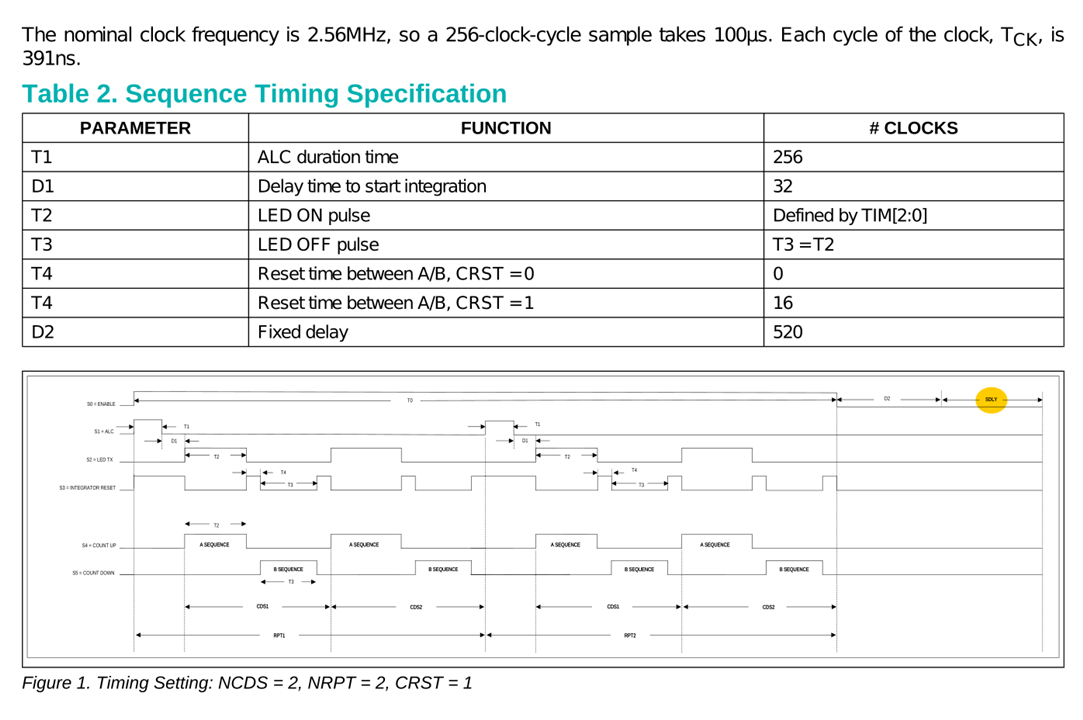

Thankfully, you can do this using one of the configuration registers for the MAX25405, which allows you to define an “End of Conversion Delay”. This can range from 0 delay up to a 1.6 second delay. I decided to use a 200ms delay (SDLY: 1000).
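To put a rough number on the serial load, here is a back-of-envelope calculation in Java. It rests on several assumptions that are not from the datasheet: each of the 60 values is sent as text with at most 7 characters including its comma, each line carries the 12-character suffix that the Processing code below strips off, and the link behaves like a plain 115200-baud UART with 10 bits on the wire per byte (8N1).

```java
// Back-of-envelope estimate of how long one frame occupies the serial link.
// All constants below are assumptions for illustration, see the lead-in.
final class SerialBudgetSketch {
    static final int PIXELS = 60;
    static final int MAX_CHARS_PER_VALUE = 7;    // e.g. "-32768" plus a comma
    static final int SUFFIX_CHARS = 12;          // checksum suffix and line ending
    static final int BITS_PER_BYTE_ON_WIRE = 10; // start bit + 8 data bits + stop bit

    static int worstCaseFrameBytes() {
        return PIXELS * MAX_CHARS_PER_VALUE + SUFFIX_CHARS;
    }

    static double frameTimeMs(int baud) {
        return worstCaseFrameBytes() * BITS_PER_BYTE_ON_WIRE * 1000.0 / baud;
    }

    public static void main(String[] args) {
        System.out.println(worstCaseFrameBytes()); // prints 432
        System.out.println(frameTimeMs(115200));   // prints 37.5
    }
}
```

Under these assumptions a worst-case frame takes about 37.5 ms to transmit, so a 200 ms delay between conversions should leave plenty of headroom.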

After some tests, this proved good enough for my purposes.

So now I just needed to create some Processing code to replicate the firmware framework gesture logic, with some added visual output to help me see what is going on... and I came up with this:

[Gallery: Visual Output using Processing IDE]

So now I was able to visualise not only the raw pixel data but also how the different algorithms manipulate the data in real time.

It turns out that the normalised values are really useful.

Hopefully this is captured in my video demos.

Code

/**

* Read Serial Output from MbedOS MAX25405 Gesture Sensor firmware on MAX32620FTHR

* Gesture sensing algorithm replicating Gesture Sensor Firmware Framework version 1.0

*

* Reads data from the serial port and produces graphical output on screen of each pixel value.

* Then applies smoothing and filtering of data and displays this output too to highlight differences

*

* Version 1.1: Added in Gesture in Progress monitoring

*

* Written by C. Gerrish 1 November 2022

*

Permission is hereby granted, free of charge, to any person obtaining a

copy of this software and associated documentation files (the "Software"),

to deal in the Software without restriction, including without limitation

the rights to use, copy, modify, merge, publish, distribute, sublicense,

and/or sell copies of the Software, and to permit persons to whom the

Software is furnished to do so, subject to the following conditions:

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

DEALINGS IN THE SOFTWARE.

**/

import processing.serial.*;

Serial myPort; // Create object from Serial class

final int lf = 10; // Linefeed in ASCII

final int cr = 13; // Carriage Return in ASCII

final static int SENSOR_XRES = 10;

final static int SENSOR_YRES = 6;

final static int NUMBER_SENSOR_PIXELS = 60;

final static float WINDOW_FILTER_ALPHA = 0.5;

final static float BACKGROUND_FILTER_ALPHA = 0.05; /*Changed from 0.10f for 400um device*/

final static float LOW_PASS_FILTER_ALPHA = 1.0;

// Interpolation used in gesture algorithm

final static int INTERP_FACTOR = 4;

final static int INTERP_XRES = ((SENSOR_XRES-1)*INTERP_FACTOR+1);

final static int INTERP_YRES = ((SENSOR_YRES-1)*INTERP_FACTOR+1);

final static int NUM_INTERP_PIXELS = (INTERP_XRES*INTERP_YRES);

final static int ZERO_CLAMP_THRESHOLD_FACTOR = 6;

final static int ZERO_CLAMP_THRESHOLD = 10;

final static int END_DETECTION_THRESHOLD = 50; /*Changed from 250 for 400um device*/

final static float DY_PIXEL_SCALE = 1.42857; /*10.0f/7.0f*/

static int[][] nwin = new int[3][NUMBER_SENSOR_PIXELS];

static int nwStart = 1;

static int[][] pvar = new int[2][NUMBER_SENSOR_PIXELS];

static int startvar = 0;

static float[] foreground_pixels = new float[NUMBER_SENSOR_PIXELS];

static float[] background_pixels = new float[NUMBER_SENSOR_PIXELS];

static int[] interp_pixels = new int[NUM_INTERP_PIXELS];

static String myString = null;

static int GestureInProgress = 0;

void setup()

{

size(700, 600);

// I know that the first port in the serial list on my mac

// is always my FTDI adaptor, so I open Serial.list()[0].

// On Windows machines, this generally opens COM1.

// Open whatever port is the one you're using.

try{

// Serial.list() is empty when no port is present, so index it inside the try block

String portName = Serial.list()[0];

myPort = new Serial(this, portName, 115200);

} catch(Exception e) {

println("Error Opening Serial Port");

while (true) {;;;}

}

imageMode(CENTER);

background(0); // Set background to black

}

void draw()

{

if (myPort != null) {

while (myPort.available() > 0) {

myString = myPort.readStringUntil(lf);

if (myString != null) {

// Only parse lines long enough to hold a full frame; the trailing checksum is stripped below

if (myString.length() > NUMBER_SENSOR_PIXELS) {

// Note that I had placed an additional checksum in my serial output for debugging purposes - hence 12 chars are removed.

// If using the Github files, please amend here as necessary.

myString = myString.substring(0, myString.length()-12);

// The last char should now be a comma

int[] GesturePixels = int(split(myString, ','));

// We process these raw pixels

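// Seed the filters from the first complete frame only, so a short first line cannot overrun GesturePixels

if (nwStart == 1 && GesturePixels.length == NUMBER_SENSOR_PIXELS+1) {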

for (int i = 0; i < NUMBER_SENSOR_PIXELS; i++) {

nwin[0][i] = GesturePixels[i];

nwin[1][i] = GesturePixels[i];

nwin[2][i] = GesturePixels[i];

foreground_pixels[i] = float(GesturePixels[i]); // clear the filter

background_pixels[i] = float(GesturePixels[i]); // clear the filter

}

nwStart = 0;

}

if (GesturePixels.length == NUMBER_SENSOR_PIXELS+1) {

if (GestureInProgress == 1) background(0,90,100);

else background(0); // Set background to black

/************** commented out raw display **********************

strokeWeight(6);

stroke(255); // Set a white color for points

for (int i = 0; i < NUMBER_SENSOR_PIXELS; i++) {

point(i*10+50, map(GesturePixels[i], -32768, 32767, 0, 600));

}

*************************************************************/

// Smooth out the values

int Raw_maxPixel = -99999;

for (int i = 0; i < NUMBER_SENSOR_PIXELS; i++) {

nwin[0][i] = nwin[1][i];

nwin[1][i] = nwin[2][i];

nwin[2][i] = GesturePixels[i];

strokeWeight(5);

stroke(100); // Now set a grey color for points

GesturePixels[i] = int(WINDOW_FILTER_ALPHA * float(nwin[1][i]) + (1-WINDOW_FILTER_ALPHA)*(float(nwin[0][i]) + float(nwin[2][i]))/2.0);

if (GesturePixels[i] > Raw_maxPixel) Raw_maxPixel = GesturePixels[i];

/****************** commented out smoothed display *******************

point(i*10+50, map(GesturePixels[i], -32768, 32767, 0, 600));

*******************************************************************/

}

// Add in some background/foreground filtering on the values

for (int i=0; i< NUMBER_SENSOR_PIXELS; i++) {

background_pixels[i] = (1.0 - BACKGROUND_FILTER_ALPHA) * background_pixels[i] + BACKGROUND_FILTER_ALPHA * GesturePixels[i];

}

for (int i=0; i< NUMBER_SENSOR_PIXELS; i++) {

foreground_pixels[i] = (1.0f - LOW_PASS_FILTER_ALPHA) * foreground_pixels[i] + LOW_PASS_FILTER_ALPHA * GesturePixels[i];

}

int Filtered_maxPixel = -99999;

int Filtered_minPixel = 99999;

for (int i=0; i< NUMBER_SENSOR_PIXELS; i++) {

GesturePixels[i] = int(foreground_pixels[i] - background_pixels[i]);

if (GesturePixels[i] > Filtered_maxPixel) Filtered_maxPixel = GesturePixels[i];

if (GesturePixels[i] < Filtered_minPixel) Filtered_minPixel = GesturePixels[i];

if (GestureInProgress == 1) {

if (startvar == 1) {

pvar[1][i] = (GesturePixels[i] - pvar[0][i]);

stroke(150); // Now set a grey color for points

strokeWeight(2);

line(i*10+50, height/2, i*10+50, height/2 + map(pvar[1][i], -32768, 32767, -height/2, height/2));

}

pvar[0][i] = GesturePixels[i];

}

// Colour the pixels in three bands of 20 (indices 0-19, 20-39, 40-59); the middle band is drawn slightly larger

if (i < 20) {

stroke(210, 52, 0);

strokeWeight(4);

}

else if (i >= 20 && i < 40) {

stroke(210, 210, 0);

strokeWeight(5);

}

else {

stroke(0, 210, 52);

strokeWeight(4);

}

point(i*10+50, map(GesturePixels[i], -32768, 32767, 0, 600));

}

if (GestureInProgress == 1 && startvar == 0) startvar = 1;

else if (GestureInProgress == 0 && startvar == 1) startvar = 0;

interpn(GesturePixels, interp_pixels, SENSOR_XRES, SENSOR_YRES, INTERP_FACTOR);

// Thresholding - zero out pixels below some percent of peak

int threshold = Filtered_maxPixel / ZERO_CLAMP_THRESHOLD_FACTOR;

for (int i = 0; i < NUM_INTERP_PIXELS; i++) {

if (interp_pixels[i] < threshold) {

interp_pixels[i] = 0;

}

}

// and Thresholding again - zero out below fixed threshold

threshold = ZERO_CLAMP_THRESHOLD;

for (int i = 0; i < NUM_INTERP_PIXELS; i++) {

if (interp_pixels[i] < threshold) {

interp_pixels[i] = 0;

}

}

// Center of mass

// Final part determines if a gesture is in progress or not

float[] cmx = {0.0};

float[] cmy = {0.0};

int[] totalmass = {0};

if (Filtered_maxPixel >= END_DETECTION_THRESHOLD && (Filtered_maxPixel-Filtered_minPixel) > 1000) {

calcCenterOfMass(interp_pixels, INTERP_XRES, INTERP_YRES, cmx, cmy, totalmass); // Only calculate COM if there is a pixel above the noise (avoid divide-by-zero)

cmx[0] = cmx[0]/INTERP_FACTOR;

cmy[0] = cmy[0]/INTERP_FACTOR * DY_PIXEL_SCALE; // scale y so it has same unit dimension as x

stroke(0, 102, 204);

strokeWeight(10);

point(width/2+(cmx[0]*10), height/2+(cmy[0]*10));

//println("CMX:"+nf(cmx[0])+ " CMY:"+nf(cmy[0]));

GestureInProgress = 1;

}

else GestureInProgress = 0;

}

}

}

}

}

}

void interpn(final int[] pixels, int[] interp_pixels, final int w, final int h, final int interpolation_factor)

{

int w2 = (w - 1) * interpolation_factor + 1;

int h2 = (h - 1) * interpolation_factor + 1;

int A, B, C, x, y;

float x_ratio = 1.0f / (float)interpolation_factor;

float y_ratio = 1.0f / (float)interpolation_factor;

// First stretch in x-direction, index through each pixel of destination array. Skip rows in destination array

for (int i = 0; i < h; i++) {

for (int j = 0; j < w2; j++) {

x = (int)(x_ratio * j); // x index of original frame

int index = i * w + x; // pixel index of original frame

if (x == w - 1) // last pixel on right edge of original frame

interp_pixels[i * w2 * interpolation_factor + j] = pixels[index]; // skip rows in dest array

else {

A = pixels[index];

B = pixels[index + 1];

float x_diff = (x_ratio * j) - x; // For 2x interpolation, will be 0, 1/2, 0, 1/2...

interp_pixels[i * w2 * interpolation_factor + j] = (int)(A + (B - A) * x_diff); // skip rows in dest array

}

}

}

// Then stretch in y-direction, index through each pixel of destination array

for (int i = 0; i < h2; i++) {

for (int j = 0; j < w2; j++) {

y = (int)(y_ratio * i); // y index of original frame

int index = y * w2 * interpolation_factor + j; // pixel index of frame

if (y == h - 1) // pixel on bottom of original frame

interp_pixels[i * w2 + j] = interp_pixels[index];

else {

A = interp_pixels[index];

C = interp_pixels[index + w2 * interpolation_factor];

float y_diff = (y_ratio * i) - y;

interp_pixels[i * w2 + j] = (int)(A + (C - A) * y_diff);

}

}

}

}

void calcCenterOfMass(final int[] pixels, final int xres, final int yres, float[] cmx, float[] cmy, int[] totalmass)

{

int cmx_numer=0, cmy_numer=0;

for (int i = 0; i < xres*yres; i++) {

cmx_numer += (i%xres)*pixels[i];

cmy_numer += (i/xres)*pixels[i];

totalmass[0] += pixels[i];

}

if (totalmass[0] == 0) {

totalmass[0] = 1; // avoid NaN

}

cmx[0] = (float)cmx_numer/(float)(totalmass[0]);

cmy[0] = (float)cmy_numer/(float)(totalmass[0]);

}
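The serial parsing at the top of `draw()` assumes every line holds 60 comma-separated values, a trailing comma and a 12-character suffix. As a minimal standalone Java sketch of that parsing step with stricter validation, the following rejects any line that does not fit this shape. The class name, the `suffixChars` parameter and the suffix text used in the usage note are hypothetical.

```java
// Minimal sketch of parsing one serial line into 60 pixel values.
// The line format follows the Processing sketch above; names are hypothetical.
final class FrameParserSketch {
    static final int NUMBER_SENSOR_PIXELS = 60;

    // Returns the pixel values, or null if the line is not a complete, well-formed frame.
    static int[] parseFrame(String line, int suffixChars) {
        if (line == null || line.length() <= suffixChars) {
            return null;
        }
        String body = line.substring(0, line.length() - suffixChars);
        // Limit -1 keeps the empty field after the trailing comma.
        String[] fields = body.split(",", -1);
        if (fields.length != NUMBER_SENSOR_PIXELS + 1) {
            return null;
        }
        int[] pixels = new int[NUMBER_SENSOR_PIXELS];
        try {
            for (int i = 0; i < NUMBER_SENSOR_PIXELS; i++) {
                pixels[i] = Integer.parseInt(fields[i].trim());
            }
        } catch (NumberFormatException e) {
            return null; // a corrupted value invalidates the whole frame
        }
        return pixels;
    }
}
```

Calling `parseFrame(line, 12)` on a line such as `"0,1,...,59,CHK:0000000\n"` returns the 60 values, while a truncated or corrupted line returns `null` so the caller can simply skip it.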

Demonstration

The first video just demonstrates the smoothing and filtering effects:

The second video demonstrates how the sketch detects whether a gesture is in progress or whether it is waiting for one. It also displays a centre of mass position relative to the centre of the screen.

Finally, the third video demonstrates highlighting the change in the normalised values as gestures occur. This certainly opens up a way forward.
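The change being highlighted is simply the per-pixel difference between two consecutive normalised frames, which the Processing code stores in `pvar`. A minimal standalone Java sketch of that idea follows. The helper that picks out the pixel with the largest change is an addition for illustration and is not part of the Processing code.

```java
// Minimal sketch: per-pixel change between consecutive normalised frames.
// Class and method names are hypothetical.
final class FrameDeltaSketch {
    // Difference between the current and the previous frame, pixel by pixel.
    static int[] delta(int[] previous, int[] current) {
        if (previous.length != current.length) {
            throw new IllegalArgumentException("frames must have the same length");
        }
        int[] d = new int[current.length];
        for (int i = 0; i < current.length; i++) {
            d[i] = current[i] - previous[i];
        }
        return d;
    }

    // Index of the pixel whose value changed the most, in either direction.
    static int indexOfLargestChange(int[] d) {
        int best = 0;
        for (int i = 1; i < d.length; i++) {
            if (Math.abs(d[i]) > Math.abs(d[best])) {
                best = i;
            }
        }
        return best;
    }
}
```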

Next Steps

I now feel I have the basics in place. My next step will be to work out my methodology to give me a consistent gesture result.

This is shouting “use Machine Learning”, so we will see if this is the simplest way forward. Before I jump on that bandwagon, though, I plan to spend a bit more time researching gesture recognition algorithms.

Addendum: Creating gesture heat maps using Processing

I decided to recreate the Windows GUI software's heat map display using Processing.

My display brightness mapping values differed from those used in the Windows GUI software, but the display output seems very similar.
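A brightness mapping of this kind can be as simple as a clamped linear scale from a pixel value to a 0-255 grey level, which is what `map()` and `constrain()` would do in Processing. The sketch below shows the idea in standalone Java. The range limits passed in are placeholders chosen for illustration, not the values used in either display.

```java
// Minimal sketch: clamped linear mapping from a pixel value to a 0-255 brightness.
// The lo/hi range is supplied by the caller; any values used with it are placeholders.
final class HeatMapSketch {
    static int brightness(int value, int lo, int hi) {
        if (hi <= lo) {
            throw new IllegalArgumentException("hi must be greater than lo");
        }
        int clamped = Math.max(lo, Math.min(hi, value)); // keep the value inside the range
        return (int) ((clamped - lo) * 255L / (hi - lo));
    }

    public static void main(String[] args) {
        System.out.println(brightness(1000, 0, 2000)); // prints 127
        System.out.println(brightness(5000, 0, 2000)); // prints 255
    }
}
```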

I also included the Centre of Mass (CoM) dot and showed a trace of the previous two positions. This seems quite useful for showing the direction of travel.

Hence, I am starting to see a possible solution for gesture detection by simply using the CoM trace direction and movements to determine gestures. I will be testing this theory over a couple of days.
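As a rough illustration of this idea, and not a tested method, a CoM trace could be turned into a direction by comparing its first and last points. The sketch below assumes screen-style coordinates (x grows to the right, y grows downward, as in Processing) and uses a hypothetical `minTravel` threshold to ignore small movements. How these directions relate to real hand movement depends on how the sensor is mounted.

```java
// Minimal sketch: classify the overall direction of a centre-of-mass trace.
// Assumes screen coordinates (y grows downward); minTravel is a hypothetical threshold.
final class ComDirectionSketch {
    static String direction(float[] xs, float[] ys, float minTravel) {
        if (xs.length != ys.length || xs.length < 2) {
            throw new IllegalArgumentException("need at least two matching points");
        }
        float dx = xs[xs.length - 1] - xs[0]; // net horizontal travel
        float dy = ys[ys.length - 1] - ys[0]; // net vertical travel
        if (Math.abs(dx) < minTravel && Math.abs(dy) < minTravel) {
            return "NONE";
        }
        if (Math.abs(dx) >= Math.abs(dy)) {
            return dx > 0 ? "RIGHT" : "LEFT";
        }
        return dy > 0 ? "DOWN" : "UP";
    }
}
```

With the current position plus the previous two, a trace such as x = 2, 4.5, 7 with y staying near 3 would be classed as `RIGHT`. Click and rotational gestures would need more than a net displacement to tell apart.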

The two videos use slightly different colour patterns but are otherwise very similar. Please note that the videos have no audio or description.

Hopefully you can recognise the different gestures used simply by looking at the different patterns generated in the display.

Basically, I started with a mix of horizontal and vertical gestures, then click gestures, then rotational gestures.
