Hello everyone!

As I said in my previous blog, a great opportunity arose for me (and, I think, for the other roadtesters as well) to work with an innovative, expensive technology. Until now I have known devices with buttons, and then came touch controls. What has appeared in recent years, the ability to give commands through hand gestures, is proof of the technological progress of our time, not to mention camera-based recognition technology, such as eye-blink tracking.

Giving commands through hand gestures is much more interactive than using a touchscreen, for example. Think of situations where our hands are dirty and we don't want to touch the screen, or where the control panel is out of reach. Perhaps the most popular use of gesture control is in a car, whether in the back seat or behind the wheel. While driving, you need to keep your eyes on the road, yet you may want to change the radio station, raise or lower the volume, or adjust the temperature. Normally you do this with the buttons on the infotainment system or on the steering wheel, but I think gestures could take over part of these functions. I don't have a car, but I'm fairly sure the steering wheel doesn't have buttons for absolutely every command, which is why manufacturers can add gesture control as an additional command function. A preference could be set so that the user controls only the air conditioning through gestures, for example, or there could be a media mode in which the driver knows that gesture control is also available.

Back to our kit. It is based on the MAX25405 from Analog Devices, a low-cost data-acquisition system for gesture and proximity sensing. Detection distance is improved by integrating a complete optical system consisting of a lens, an aperture, a visible-light filter, and a 6x10 photodetector array. How does it work? The proximity, hand-detection, and gesture-recognition functions are achieved by detecting the light reflected from a controlled IR-LED light source while rejecting ambient light. The integrated 6x10-element optical sensor array performs the light measurements. The light source is external, built with one or more FETs driven directly by the MAX25405. Its PWM duty cycle is programmable from 1/16 to 16/16. The LEDs are pulsed on one or more times in a programmable sequence, and this pulse sequence is repeated for each sample. A low-power, low-cost CPU, such as the MAX32630, is required to process the data from the sensor.
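To make "detecting the reflected light while rejecting ambient light" a bit more concrete, here is a minimal Python sketch of the general differential idea: take one frame with the IR LED pulsed on and one with it off, and subtract them pixel by pixel, so that light present in both (sun, room lamps) cancels out. This is only an illustration under my own assumptions; it is not the MAX25405's internal signal chain, and the function names are hypothetical. The second helper just converts the 1/16 to 16/16 PWM setting into a fraction.

```python
# Minimal sketch of ambient-light rejection by differential sampling
# (LED on minus LED off). Illustrative only: NOT the MAX25405's internal
# implementation; all names here are hypothetical.
from typing import List

Frame = List[List[int]]  # 6 rows x 10 columns of raw photodiode counts


def ambient_rejected(led_on: Frame, led_off: Frame) -> Frame:
    """Subtract the ambient-only frame from the LED-lit frame, pixel by pixel.

    Light present in both frames (sunlight, room lighting) cancels; only the
    reflection of the pulsed IR LED remains. Results can be negative when the
    ambient light changes between the two samples.
    """
    return [[on - off for on, off in zip(row_on, row_off)]
            for row_on, row_off in zip(led_on, led_off)]


def pwm_duty(steps: int) -> float:
    """Duty cycle as a fraction for a setting of 1..16 sixteenths."""
    if not 1 <= steps <= 16:
        raise ValueError("duty cycle setting must be between 1 and 16")
    return steps / 16
```

For example, with a uniform ambient level of 100 counts and a hand reflecting an extra 40 counts onto one pixel, only that 40 survives the subtraction.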

Next, I will try to discuss some performance indicators that I discovered while experimenting with this kit.

What gestures are recognized by the MAX25405?

The answer is in the previous blog, and it's quite simple: hand swipe left/right/up/down, finger/hand rotation CW and CCW, air click, proximity detection, and Linger-to-Click. To validate these actions, we can use the MaximGestureSensorEVKit application.

How is the "trigger element" located?

The triggering element is the object detected by the sensor within its field of view (FOV). The field of view is the maximum area the observer can cover, and in our case the observer is the sensor. A localization algorithm works on two axes, X and Y (relative to the Z axis through the center of the sensor), and returns the object's coordinates. With this localization system we can also tell whether the object is at some distance from the sensor.
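One common way to locate an object on a small pixel array is an intensity-weighted centroid ("center of mass"). The sketch below assumes that is roughly what the EVKit does; Analog Devices' actual localization algorithm may differ. The origin is placed at the center of the 6x10 array, matching the idea of coordinates relative to the sensor's central axis.

```python
# Minimal sketch of center-of-mass localization on a 6x10 pixel frame.
# Assumption: an intensity-weighted centroid; the real EVKit algorithm may differ.
from typing import List, Optional, Tuple


def center_of_mass(frame: List[List[float]]) -> Optional[Tuple[float, float]]:
    """Return (x, y) in pixel units, with (0, 0) at the array center.

    x runs along the 10 columns, y along the 6 rows. Negative pixel values
    are ignored so that noise below the baseline does not pull the centroid.
    Returns None when there is no positive signal (no object in the FOV).
    """
    rows, cols = len(frame), len(frame[0])
    total = sx = sy = 0.0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            w = max(value, 0.0)
            total += w
            sx += w * c
            sy += w * r
    if total == 0:
        return None
    # Shift so that the array center (the sensor's optical axis) is the origin.
    return sx / total - (cols - 1) / 2, sy / total - (rows - 1) / 2
```

Because every pixel contributes in proportion to its value, the centroid can sit between pixels, and the brightest pixel is not necessarily the one closest to it.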

So, what about distance?

Well, Analog Devices has come up with a sensor with quite good capabilities: lateral coverage of up to 40 cm (for left/right swipes) and also 40 cm vertically (for up/down swipes), at an angle of ±30 degrees. For rotation gestures, the radius of the circle we draw with our hand is at most 30 cm. That's enough; we don't want to do physical exercises behind the wheel of a car, for example. These limits represent an advance: compared to the MAX25205, the MAX25405 has a set of lenses that improve distance sensing through better image quality, leading to a higher true positive rate (TPR). The equation for TPR is shown below, where TP is the number of true positives (gestures performed and correctly detected) and FN is the number of false negatives (gestures performed but not detected).

TPR = TP / (total number of recorded gestures) = TP / (TP + FN)
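As a quick worked example of the formula, here is a tiny Python helper. The session numbers are hypothetical, not results from my tests: 40 recorded swipes, of which 39 were recognized and 1 was missed, give exactly the 97.5% figure mentioned below.

```python
def true_positive_rate(tp: int, fn: int) -> float:
    """TPR = TP / (TP + FN): fraction of performed gestures that were recognized."""
    recorded = tp + fn  # total number of recorded gestures
    if recorded == 0:
        raise ValueError("no gestures recorded")
    return tp / recorded


# Hypothetical session: 40 swipes performed, 39 recognized, 1 missed.
print(true_positive_rate(39, 1))  # 0.975
```

Note that TPR ignores false positives (gestures reported when none was made), so it only describes part of a sensor's accuracy.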

For the MAX25405, the manufacturer quotes a TPR of up to 97.5%.

Of course, each application has its suitable component, depending on what we want to achieve. In this case we are mostly concerned with the distance between the sensor and the triggering element, so we choose the components according to the result we want.

Other design aspects

In any environment, whether a car or a room, there are disruptive factors that affect how our system works. For an optical sensor, perhaps the biggest problem is ambient light, which can create shadows and false measurements, for example. Analog Devices came up with a way to compensate for this to some extent: an 875 nm optical long-pass filter can reject up to 68% of the sun's energy, and the system operates in the 940 nm dip of the solar irradiance curve. Another factor is ambient temperature, because the temperature of the human body, including the hand making the gestures, is also taken into account. At low temperatures, measurement data can be lost, which affects whether a gesture is executed successfully. For example, when we swipe left and only half, or less, of the measurements are valid, we have to keep swiping left until the sensor accepts the command. In a warmer environment, on the other hand, false measurements can appear again: these values can increase and be interpreted incorrectly, so the sensor reports an error and the command is not executed. Fortunately, the MAX25405 can operate from -40°C to +85°C, and I doubt we will ever use it somewhere that cold or hot. The sensor is also hermetically sealed and has an MSL1 (Moisture Sensitivity Level 1, unlimited floor life) rating, ensuring that no moisture gets into the sensor or its internal components. The result is a long sensor lifetime.

We must also mention the cost of this technology. Analog Devices sensors of this type are said to offer a fairly good price/performance ratio: the sensor can recognize the most common hand movements, and at the same time it is cheaper than a video camera, which has to process images and therefore needs more computing power.

I tried the MAX25405 under both lighting conditions: during the day and in the evening, in both cases at room temperature.

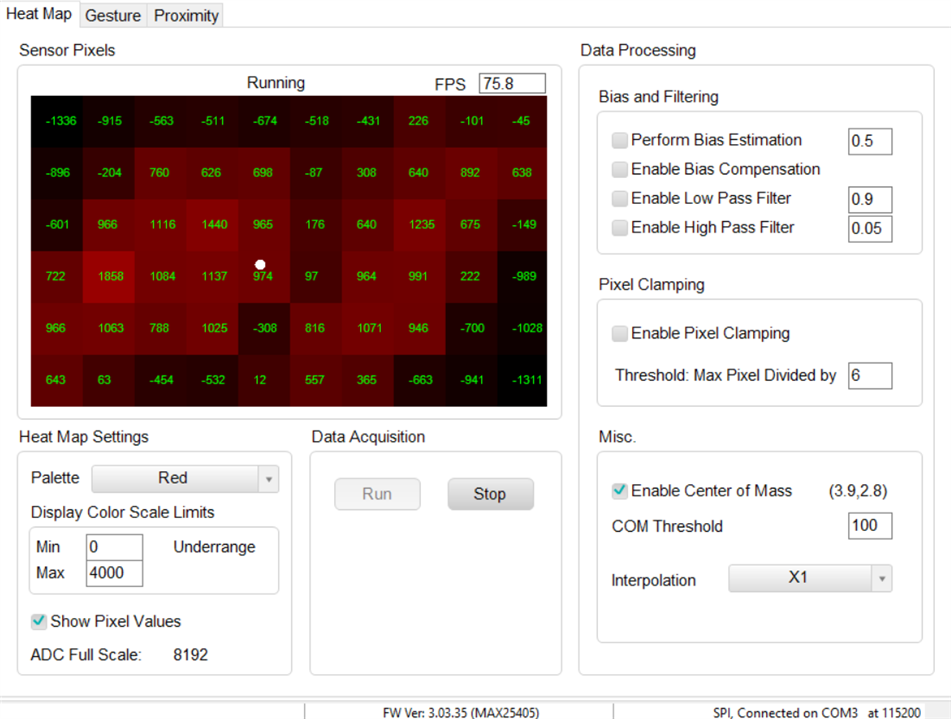

I also worked with the MaximGestureSensorEVKit program interface, and I would like to start with the Heat Map, because I think this window gives us the basic information we need. Out of curiosity I changed the palette colors, but I prefer red, probably because I associate it with warmth: warmer objects, such as the human body, emit noticeable radiation in the infrared spectrum, and in general this radiation depends on temperature.

I played a little with the settings, such as Center of Mass, which places a point on the pixel map (the 6x10 array of IR photodiodes) indicating the central point where the object, or rather our hand, is located. In the picture below, taken during the day, I held my hand over the center of the MAX25405 board at a distance of about 10 cm, and you can see that the pixels around the point do not necessarily have the largest values.

I would say that center-of-mass detection is quite stable.

Speaking of pixel values, below the map there are two fields, Min and Max. They need to be balanced so that no information is lost; you can think of them as a kind of sensitivity setting.
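To show why Min and Max need to be balanced, here is a minimal Python sketch of how display limits typically map pixel values onto a color palette. I am assuming the GUI scales linearly between Min and Max and saturates outside that range; the real EVKit implementation may differ, and `palette_index` is a hypothetical name.

```python
# Minimal sketch: map a pixel value to a palette index using Min/Max limits.
# Assumption: linear scaling with saturation; the EVKit GUI may differ.
def palette_index(value: float, vmin: float, vmax: float, levels: int = 256) -> int:
    if vmax <= vmin:
        raise ValueError("Max must be greater than Min")
    t = (value - vmin) / (vmax - vmin)
    t = min(max(t, 0.0), 1.0)  # values outside [Min, Max] saturate at the ends
    return round(t * (levels - 1))
```

If Max is set too low, all strong pixels saturate to the same color; if it is set too high, small differences between pixels collapse onto nearly the same color. Either way, detail is lost on the display.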

There is also Pixel Clamping: when it is enabled, negative pixel values are no longer shown, only positive ones. This function can help or hurt if it is not set properly. In the two pictures below, you can see how part of the information is lost when Pixel Clamping is activated.
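A minimal Python sketch of what clamping does to a frame, assuming it simply replaces negative (below-baseline) values with zero. It also counts how many pixels were altered, which is exactly the information that disappears from the display.

```python
# Minimal sketch of pixel clamping. Assumption: negative values are set to 0,
# which is how I understand the GUI's Pixel Clamping option.
from typing import List, Tuple


def clamp_pixels(frame: List[List[int]]) -> Tuple[List[List[int]], int]:
    """Return the clamped frame and how many pixels were changed."""
    clamped = [[max(v, 0) for v in row] for row in frame]
    lost = sum(1 for row in frame for v in row if v < 0)
    return clamped, lost
```

Negative values can themselves carry information (for example, a pixel that got darker than during calibration), so clamping makes the map cleaner at the cost of hiding them.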

The Gesture window holds the "main menu" of this kit: gesture recognition. I couldn't test under ideal conditions, but I can say that during the day the sensor recognized hand gestures up to a distance of about 40 cm. At night, or in a darker environment, I would say around 30 cm, but again, the sensor was not in the best position (it's hard to navigate on my PC while handling the sensor and taking pictures or videos with my phone).

I did a simple swipe test with an object during the day, and everything seems fine.

I also wanted to test speed. I don't think the sensor can detect a movement as fast as the "hello" wave of a hand, if you know what I mean. Even so, it performs very well, better than I expected.
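One way to think about swipes and speed: track the hand's center-of-mass position over consecutive frames, then look at the overall displacement. The Python sketch below is a simplified illustration under my own assumptions (positions in pixel units, a fixed frame rate `fps`, a hypothetical minimum travel of 3 pixels, x growing to the right and y growing downward); the real gesture algorithm runs on the host MCU and is more elaborate.

```python
# Minimal sketch: classify a swipe from a track of center-of-mass positions and
# estimate its speed. Hypothetical parameters; not the EVKit's algorithm.
import math
from typing import List, Optional, Tuple


def classify_swipe(track: List[Tuple[float, float]], fps: float,
                   min_travel: float = 3.0) -> Tuple[Optional[str], float]:
    """Return (direction or None, speed in pixels per second)."""
    if len(track) < 2:
        return None, 0.0  # hand seen in fewer than two frames: nothing to classify
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    travel = math.hypot(dx, dy)
    speed = travel * fps / (len(track) - 1)
    if travel < min_travel:
        return None, speed  # too little movement to count as a swipe
    if abs(dx) >= abs(dy):
        return ("right" if dx > 0 else "left"), speed
    return ("down" if dy > 0 else "up"), speed
```

This also suggests why a very fast wave is hard to catch: if the hand crosses the field of view within one or two frames, there are hardly any positions to build a track from.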

It behaves well, although it also depends on the surface: an object with a glossy surface might not be detected. But in the end, the goal is to detect hand movements. The air click is a bit more demanding; the hand must be at a distance of about 10 cm. If your movement is erratic, the chance of a mistake is quite high.

There is a little room for customization in the proximity area. There are 4 dials showing values from 0 to 100. Each value is a percentage of the maximum signal, in 1% increments, and 100% represents the highest signal level, i.e. the closest distance. This is useful for measuring the rough position of the hand, near or far. The MAX25205 supports a 1 x 3 (row x column) region layout, while the MAX25405 supports 2 x 3. It's best to start by calibrating the sensor: make sure there is no object in the sensor's field of view and click the Calibrate button. I prefer to stay with the 4 quadrants; somehow I associate them with the 4 OSRAM IR emitters on the board. I am attaching a video below.
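To illustrate the proximity readout, here is a Python sketch under stated assumptions: a calibration baseline captured with nothing in front of the sensor is subtracted, the 6x10 frame is split into a 2 x 3 grid of regions, and each region reports its peak signal as a whole-number percentage of a full-scale value. `full_scale`, the use of the peak (rather than the mean), and the region boundaries are my assumptions, not datasheet values.

```python
# Minimal sketch of a per-region proximity readout in 1% steps.
# Assumptions: baseline subtraction from a calibration frame, peak signal per
# region, and a hypothetical full_scale value.
from typing import List


def proximity_percent(frame: List[List[float]], baseline: List[List[float]],
                      full_scale: float, region_rows: int = 2,
                      region_cols: int = 3) -> List[List[int]]:
    rows, cols = len(frame), len(frame[0])
    result = []
    for rr in range(region_rows):
        r0, r1 = rr * rows // region_rows, (rr + 1) * rows // region_rows
        out_row = []
        for rc in range(region_cols):
            c0, c1 = rc * cols // region_cols, (rc + 1) * cols // region_cols
            # Peak baseline-corrected signal inside this region.
            peak = max(frame[r][c] - baseline[r][c]
                       for r in range(r0, r1) for c in range(c0, c1))
            pct = round(100 * peak / full_scale)
            out_row.append(min(max(pct, 0), 100))  # clip to 0..100 percent
        result.append(out_row)
    return result
```

The baseline subtraction is why calibrating with an empty field of view matters: anything present during calibration is treated as "zero signal" afterwards.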

I think switching between quadrants is also related to the viewing angle of the FOV, which is 30 degrees for the MAX25405.
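The viewing angle determines how wide an area the sensor covers at a given distance. Assuming a symmetric field of view of ±30 degrees (the figure quoted in the distance section), the covered width is 2 · d · tan(30°). This is simple geometry, not a datasheet formula; if the 30 degrees is the full angle rather than the half-angle, pass `half_angle_deg=15`.

```python
import math


def lateral_coverage_cm(distance_cm: float, half_angle_deg: float = 30.0) -> float:
    """Width covered at distance_cm for a symmetric FOV of ±half_angle_deg."""
    return 2 * distance_cm * math.tan(math.radians(half_angle_deg))


print(round(lateral_coverage_cm(10), 1))  # 11.5
print(round(lateral_coverage_cm(40), 1))  # 46.2
```

At the 10 cm I used for the center-of-mass test, the covered width is only about 11.5 cm, so a hand of ordinary size fills much of the field of view, and small hand movements shift it noticeably between quadrants.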

I enjoyed experimenting with this kit, and even though I am a beginner, I will try to build an interesting application, something within my programming and hardware knowledge. Of course, I have a lot of information to gather and put together, but maybe I can do something.

Good luck to all Roadtesters.

Thanks Element14Community!

Happy Holidays!