Overview

My goal is to build an ADAS and monitoring system for cars, serving as an advanced security and surveillance system when the car is parked, and a driver assistance system when the car is driven.

The core of the system will be one or more cameras mounted inside the vehicle, in addition to various sensors, like a GPS unit. A 4G data connection will enable streaming the video and location data in near real-time to a remote server.

To ensure continuous operation when the car is parked, the system will have its own power storage system.

The Kit

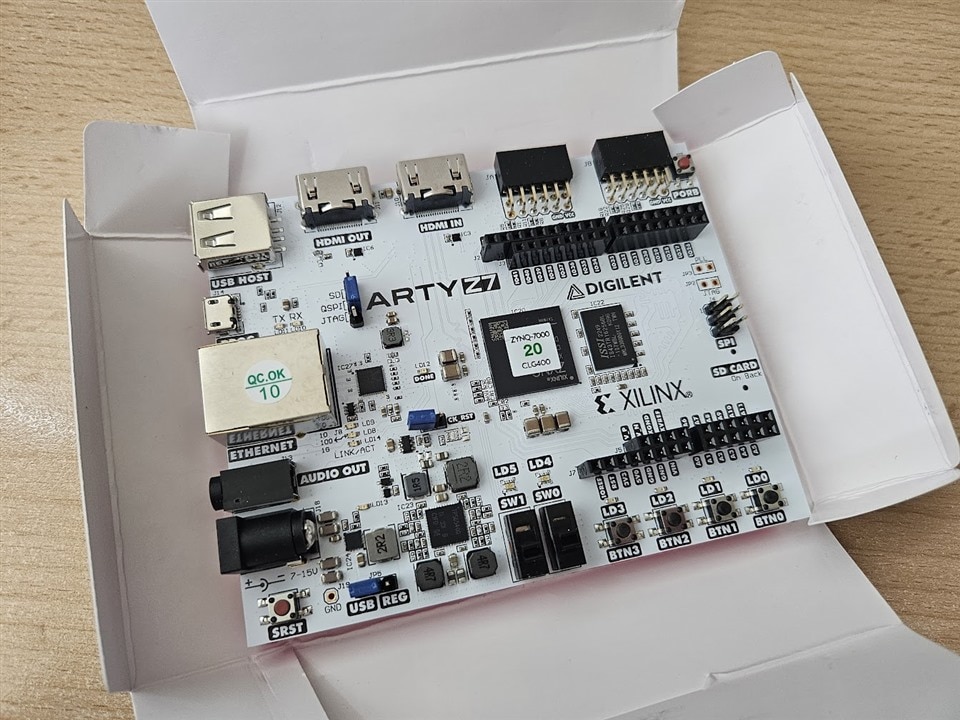

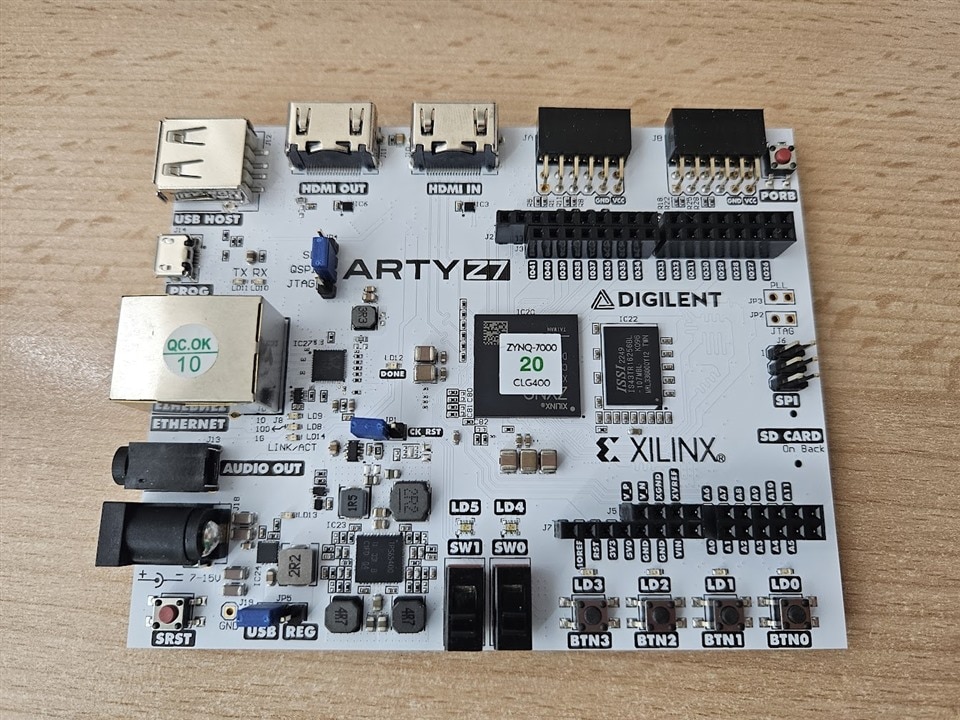

Arty-Z7

A development board built around a Zynq-7000 SoC, which combines a dual-core Cortex-A9 CPU with Artix-7 class FPGA fabric.

Pmod ALS

Ambient light sensor module for the Arty-Z7.

ZIQIAN HD 1080p Webcam

Not much to add here.

Project Plan

Vision-based systems

The camera will be mounted in the front of the car, acting both as a dashcam, and a surveillance camera when parked.

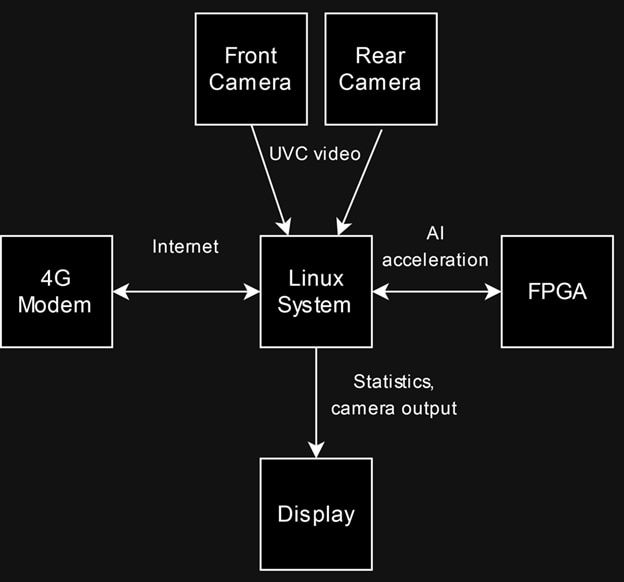

The goal is to run an object detection AI model on the FPGA part of the Arty-Z7, on the images captured by the camera. Thanks to the Cortex-A9 cores, it is possible to run Linux on the board to handle the UVC capture from the camera, pass the frames to the FPGA side for processing, and then read the results back into Linux.

The object detection model will be trained to recognize traffic signs, and used to provide ADAS capabilities to the driver, such as a speed limit detection and alerting system. Upon encountering a speed limit sign, the recognized limit can be compared to the speed measured by a GPS unit, and an alert provided to the driver in case of speeding.
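
The comparison itself is simple. Here is a minimal sketch of it, assuming the detector hands over the recognized limit in km/h and the GPS unit reports speed in m/s. The names `check_speed`, `SpeedAlert`, and the 3 km/h tolerance are placeholders for illustration, not part of the final design.

```python
from dataclasses import dataclass
from typing import Optional

MPS_TO_KMH = 3.6  # metres per second to kilometres per hour


@dataclass
class SpeedAlert:
    limit_kmh: float
    speed_kmh: float
    over_by_kmh: float


def check_speed(limit_kmh: Optional[float],
                gps_speed_mps: Optional[float],
                tolerance_kmh: float = 3.0) -> Optional[SpeedAlert]:
    """Return a SpeedAlert when the GPS speed exceeds the last recognized
    limit by more than the tolerance, otherwise None.

    The tolerance is an assumed value that absorbs GPS noise.
    """
    # No sign seen yet, or no GPS fix: nothing to compare.
    if limit_kmh is None or gps_speed_mps is None:
        return None
    speed_kmh = gps_speed_mps * MPS_TO_KMH
    over_by = speed_kmh - limit_kmh
    if over_by > tolerance_kmh:
        return SpeedAlert(limit_kmh, speed_kmh, over_by)
    return None
```

In use, the limit would be updated whenever a new sign is recognized, and `check_speed` called on every GPS update.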

Another planned feature is monitoring traffic lights. This can be utilized to provide alerts to the driver, for example when a traffic light transitions from red to green.
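
Per-frame detections tend to flicker, so the red-to-green alert probably needs some debouncing. The sketch below shows one possible approach, assuming the model outputs a label such as `"red"` or `"green"` (or `None`) for each frame. The class name `TrafficLightMonitor` and the `confirm_frames` threshold are hypothetical.

```python
from typing import Optional


class TrafficLightMonitor:
    """Tracks the traffic light state across frames and reports a
    confirmed red -> green transition."""

    def __init__(self, confirm_frames: int = 3):
        # Number of consecutive frames needed before a new state is trusted.
        self.confirm_frames = confirm_frames
        self.stable_state: Optional[str] = None
        self._candidate: Optional[str] = None
        self._count = 0

    def update(self, detected: Optional[str]) -> bool:
        """Feed one per-frame detection. Returns True only on the frame
        where the confirmed state changes from "red" to "green"."""
        # A missed detection or a repeat of the confirmed state resets
        # any pending candidate.
        if detected is None or detected == self.stable_state:
            self._candidate = None
            self._count = 0
            return False
        if detected == self._candidate:
            self._count += 1
        else:
            self._candidate = detected
            self._count = 1
        if self._count < self.confirm_frames:
            return False
        previous = self.stable_state
        self.stable_state = detected
        self._candidate = None
        self._count = 0
        return previous == "red" and detected == "green"
```

A single misclassified frame never changes the confirmed state, at the cost of delaying the alert by a few frames.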

When the car is parked, the camera will keep recording at a lower bitrate, to act as a surveillance device, in case the car gets damaged, or an attempted break-in happens.

Connectivity

The system will have its own 4G connection, to constantly stream the camera and sensor data to a remote server for storage and additional processing. This provides multiple benefits, such as not needing to put large amounts of storage in the car, minimizing power consumption of the system, and enabling the use of data processing techniques that are far too resource intensive to run on the low power budget of an in-car system.

Power storage and energy management

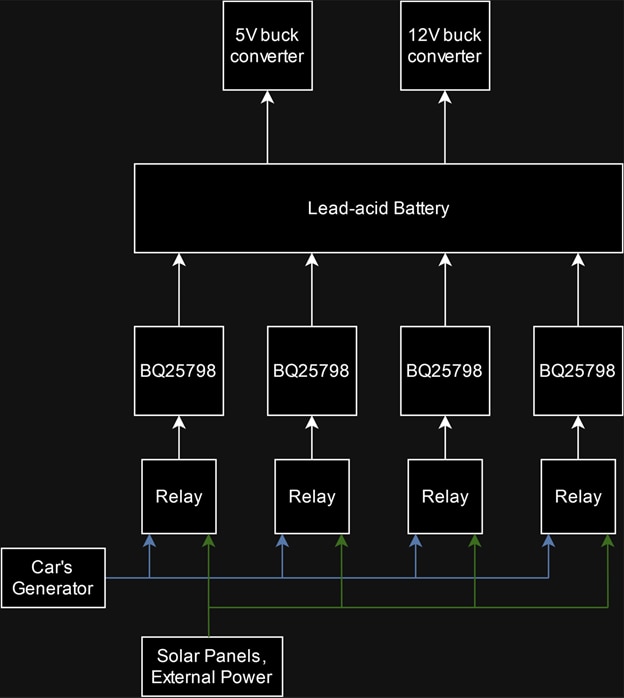

As the system is designed to operate even when the vehicle is parked, an energy storage system is required.

A large lead-acid battery will sit under the front passenger seat, charged from the vehicle’s alternator when driving. The option to use solar panels to charge when parked will also be available.

There will be an option to use a second lead-acid battery as well, to enable both hot-swap battery changes and battery capacity extension, in case the vehicle spends extended periods of time parked.

Timeline and planned blogs

To train an AI model for recognizing traffic signs and lights, I installed a Raspberry Pi HQ camera in my car a few weeks ago, along with a Pi 4, to capture a few hours of driving. From this footage, I’ve extracted about 5000 images and hand-labeled them to create a training dataset. For testing, I’ve used YOLOv8 as the object detection model, but it will most likely be infeasible to run on the FPGA, so an earlier version of YOLO will be used instead.

The next step is to build and test the battery charging and management system. This will be based on four BQ25798 charging ICs, providing up to 150W charging, and MPPT support for utilizing solar panels. The next blog will be about this system.

Next is figuring out how to use the Zynq-7020 to accelerate the AI model used for object detection. There are a few different possibilities, but I cannot yet evaluate them, as I do not have the kit yet. There is a decent chance that the dual Cortex-A9 CPUs running Linux won’t be fast enough to handle capturing images from the camera, communicating with the FPGA, dealing with the 4G modem, processing sensor and object recognition data, and streaming video over the network. In this case, a Raspberry Pi 4 will be used to offload some of the non-vision related tasks. This would also let me use the Raspberry Pi HQ camera as the front dashcam, and use the provided webcam for capturing video from the rear window, where video quality is less important.

With both video processing and powering the system figured out, the next blog will be about integrating all the different parts, and physically installing them in a car. By this point, I should be able to see any possible remaining challenges and issues, and have enough time left to fix or work around them.

With the electronics installed, the next blog will be about software. There are two main challenges here: streaming video to the remote server, and collecting, storing, and processing all the sensor data. For the streaming part, I’ll have to write a custom video streaming protocol and accompanying software for both the transmitter and receiver halves. For sensor data storage and processing, hopefully I can use some off-the-shelf software, like GPSD and InfluxDB for collecting, storing, and replicating data to the remote server.
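
As a rough idea of how the sensor side could fit together, the sketch below converts one gpsd `TPV` JSON report into a line of InfluxDB line protocol. It assumes the report carries the usual `time`, `lat`, `lon`, and `speed` fields. The function name `tpv_to_line`, the `gps` measurement, and the `vehicle` tag are made up for this example, and the tag value is assumed to contain no spaces or commas.

```python
import json
from datetime import datetime
from typing import Optional


def tpv_to_line(report_json: str,
                measurement: str = "gps",
                vehicle: str = "car1") -> Optional[str]:
    """Convert one gpsd TPV report into an InfluxDB line protocol string.

    Returns None for non-TPV reports, reports without a fix, or reports
    missing a timestamp or position/speed fields.
    """
    report = json.loads(report_json)
    # mode 0/1 means no fix; 2 is a 2D fix, 3 is a 3D fix.
    if report.get("class") != "TPV" or report.get("mode", 0) < 2:
        return None
    fields = {k: report[k] for k in ("lat", "lon", "speed") if k in report}
    if "time" not in report or not fields:
        return None
    # gpsd reports UTC time in ISO 8601 with a trailing "Z".
    dt = datetime.fromisoformat(report["time"].replace("Z", "+00:00"))
    # Line protocol timestamps default to nanosecond precision.
    ts_ns = int(dt.timestamp()) * 10**9 + dt.microsecond * 1000
    field_str = ",".join(f"{k}={float(v)}" for k, v in fields.items())
    return f"{measurement},vehicle={vehicle} {field_str} {ts_ns}"
```

Each returned line could then be batched and written to a local InfluxDB instance, leaving replication to the remote server as a separate step.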

The final blog will be an overview of all the systems created, and video demonstrations of all the features provided.
