#5 Eye On with a USB Camera in the PYNQ Virtual Environment


1 Image Processing and Video Capture with a USB Camera

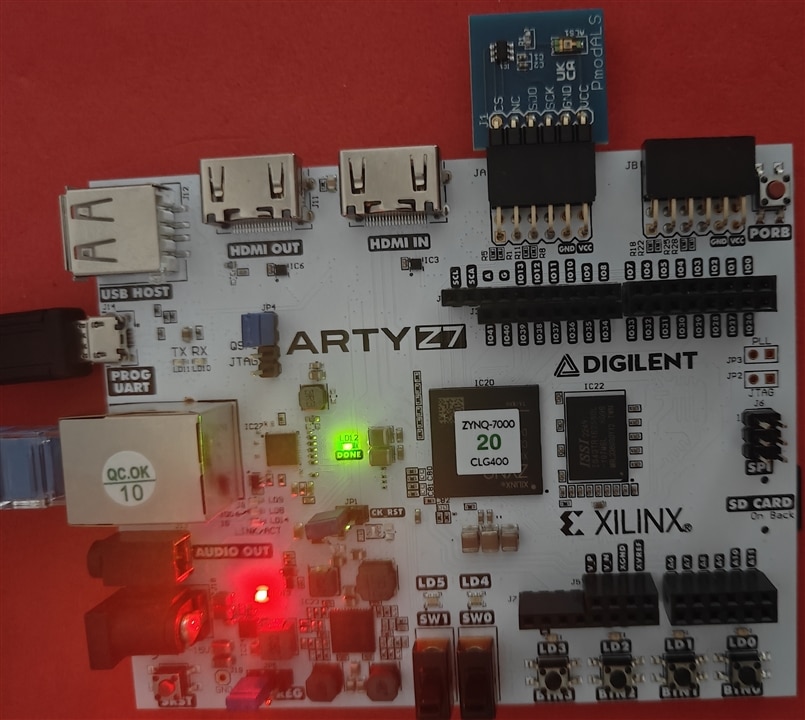

The Arty Z7 supports image capture and video processing in two ways: the PYNQ base overlay's HDMI video-in and HDMI video-out, or a USB camera connected to the board's USB port.

With video capture, the Arty Z7 gets an eye to detect things around it, and intelligence can be added on top.

2 Test the PYNQ I2C Overlay with the Pmod ALS Light Sensor

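Prepare the Digilent Pmod ALS light sensor and connect it to Pmod port A (silkscreen label JA).

Note that the notebook code below uses PYNQ's Grove_Light driver on Grove port G4 (PMOD_GROVE_G4), which reads a Grove light sensor through an I2C ADC. The Pmod ALS itself is an SPI device, and PYNQ provides a separate Pmod_ALS driver for it.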

Power up the Arty Z7, log in to the Jupyter interface, and open the Pmod light sensor notebook.

Download (program) the base overlay:

```python
from pynq.overlays.base import BaseOverlay

# Load the base bitstream into the programmable logic
base = BaseOverlay("base.bit")
```

Read a single luminance value:

```python
from pynq.lib.pmod import Grove_Light
from pynq.lib.pmod import PMOD_GROVE_G4

# Light sensor on Pmod port A, Grove connector G4
lgt = Grove_Light(base.PMODA, PMOD_GROVE_G4)
sensor_val = lgt.read()
print(sensor_val)
```

Plot the light intensity over time:

```python
import time
%matplotlib inline
import matplotlib.pyplot as plt

# Sample the sensor every 100 ms in the background
lgt.set_log_interval_ms(100)
lgt.start_log()
# Change the light falling on the sensor during these 10 seconds
time.sleep(10)
r_log = lgt.get_log()

plt.plot(range(len(r_log)), r_log, 'ro')
plt.title('Grove Light Plot')
min_r_log = min(r_log)
max_r_log = max(r_log)
plt.axis([0, len(r_log), min_r_log, max_r_log])
plt.show()
```

This demonstrates how a PYNQ overlay gets data from a peripheral.
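With a 100 ms interval over 10 seconds, the log holds about 10 / 0.1 = 100 samples, and the plot above uses the sample index as its x-axis. The sketch below, in plain Python, converts indices to seconds and summarizes a log, including a simple threshold check for the moments when the input was changed. The helper names and the readings are hypothetical stand-ins for `lgt.get_log()`; real values, and whether they rise or fall when the sensor is covered, depend on the sensor and driver.

```python
from statistics import mean


def log_times_s(n_samples, interval_ms):
    """Timestamp in seconds of each sample, assuming evenly spaced logging."""
    return [i * interval_ms / 1000 for i in range(n_samples)]


def summarize_log(r_log, threshold):
    """Return min, max, mean and the indices of readings below `threshold`."""
    below = [i for i, v in enumerate(r_log) if v < threshold]
    return {
        "min": min(r_log),
        "max": max(r_log),
        "mean": mean(r_log),
        "below_threshold": below,
    }


# Hypothetical readings standing in for lgt.get_log()
r_log = [410, 412, 409, 120, 118, 121, 405, 411]
times = log_times_s(len(r_log), 100)
stats = summarize_log(r_log, threshold=200)
print(times)
print(stats)
```

On the board, `plt.plot(log_times_s(len(r_log), 100), r_log, 'ro')` would give the same plot with a time axis in seconds.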

3 USB Camera for Video and Images

Connect the USB camera to the Arty Z7 board.

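In the notebook, the image is captured with a Linux command (the "!" prefix runs it as a shell command) and then processed with the Python Imaging Library (PIL):

```python
from PIL import Image as PIL_Image

orig_img_path = 'data/webcam.jpg'
# Grab one frame from the first video device and save it as a JPEG
!fswebcam --no-banner --save {orig_img_path} -d /dev/video0 2> /dev/null

img = PIL_Image.open(orig_img_path)
img  # the last expression in a cell is displayed by Jupyter
```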
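The "!" prefix only works inside Jupyter. In a plain Python script, the same capture could be run with `subprocess`, as sketched below. This assumes `fswebcam` is installed and the camera appears as `/dev/video0`, as in the notebook; the function names are hypothetical. Only the command builder runs here, so no camera is needed to try it.

```python
import shlex
import subprocess


def fswebcam_cmd(save_path, device="/dev/video0"):
    """Build the same fswebcam command the notebook runs with the "!" prefix."""
    return ["fswebcam", "--no-banner", "--save", save_path, "-d", device]


def capture(save_path, device="/dev/video0"):
    """Run fswebcam; stderr is discarded like '2> /dev/null'.

    Raises subprocess.CalledProcessError if fswebcam exits with an error.
    """
    subprocess.run(fswebcam_cmd(save_path, device), check=True,
                   stderr=subprocess.DEVNULL)
    return save_path


cmd = fswebcam_cmd("data/webcam.jpg")
print(shlex.join(cmd))
```

On the board, `img = PIL_Image.open(capture(orig_img_path))` would replace the two notebook lines. Passing a list instead of a shell string avoids quoting problems with file paths.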

Convert the captured image to black and white (grayscale):

```python
bw_img = img.convert("L")
bw_img
```
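Pillow documents that converting to mode "L" uses the ITU-R 601-2 luma transform, L = R·299/1000 + G·587/1000 + B·114/1000. The plain-Python sketch below applies that formula, rounded to the nearest integer, to a tiny hypothetical image stored as nested lists. Pillow's internal fixed-point arithmetic should agree closely but is not guaranteed to match bit for bit.

```python
def luma(r, g, b):
    """ITU-R 601-2 luma of one pixel, rounded to the nearest integer (0-255)."""
    return (r * 299 + g * 587 + b * 114 + 500) // 1000


def to_grayscale(rgb_rows):
    """Convert rows of (R, G, B) tuples to rows of 8-bit luma values."""
    return [[luma(*px) for px in row] for row in rgb_rows]


# Hypothetical 2x2 image: white, red / green, blue
tiny = [[(255, 255, 255), (255, 0, 0)],
        [(0, 255, 0), (0, 0, 255)]]
print(to_grayscale(tiny))  # [[255, 76], [150, 29]]
```

Green contributes most to perceived brightness, which is why pure green maps to a much lighter gray than pure blue.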

Rotate the image by 45 degrees, for example with `bw_img.rotate(45)` (PIL rotates counterclockwise).
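By default PIL's `rotate` keeps the original canvas size, so the corners of a 45° rotation are cut off. The sketch below computes the bounding box needed to hold the whole rotated image, w·|cos θ| + h·|sin θ| by w·|sin θ| + h·|cos θ|, which is roughly what `rotate(45, expand=True)` has to allocate. The helper name is hypothetical, and Pillow's own rounding may differ by a pixel.

```python
import math


def rotated_canvas_size(width, height, angle_deg):
    """Bounding-box size that fully contains a width x height image rotated by angle_deg."""
    a = math.radians(angle_deg)
    c, s = abs(math.cos(a)), abs(math.sin(a))
    # Round away floating-point noise (e.g. cos(90 deg) ~ 6e-17) before taking the ceiling
    new_w = math.ceil(round(width * c + height * s, 9))
    new_h = math.ceil(round(width * s + height * c, 9))
    return new_w, new_h


print(rotated_canvas_size(640, 480, 45))  # (792, 792)
```

A 640x480 frame rotated by 45° therefore needs a canvas of about 792x792 pixels to avoid cropping.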

This is how image capture works with a USB camera.

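If an HDMI camera is available, the base overlay's HDMI input offers another capture path:

```python
from pynq.overlays.base import BaseOverlay
from pynq.lib.video import *

base = BaseOverlay("base.bit")
hdmi_in = base.video.hdmi_in
hdmi_out = base.video.hdmi_out

# Configure the input from the detected signal and mirror its mode on the output
hdmi_in.configure()
hdmi_out.configure(hdmi_in.mode)
hdmi_in.start()
hdmi_out.start()
hdmi_in.tie(hdmi_out)  # pass frames straight through to HDMI out
```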
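One way to compare the two capture paths is the raw, uncompressed data rate of a video stream. The arithmetic below assumes 3 bytes per pixel for an RGB HDMI frame and 2 bytes per pixel for a YUYV webcam stream; these formats are illustrative, not what either device is guaranteed to use.

```python
def frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def data_rate_mbps(width, height, bytes_per_pixel, fps):
    """Raw (uncompressed) video data rate in megabits per second."""
    return frame_bytes(width, height, bytes_per_pixel) * fps * 8 / 1e6


print(frame_bytes(1280, 720, 3))          # one 720p RGB frame, in bytes
print(data_rate_mbps(1280, 720, 3, 60))   # 720p60 RGB
print(data_rate_mbps(640, 480, 2, 30))    # VGA YUYV at 30 fps
```

Raw 720p60 RGB (about 1327 Mbit/s) is far beyond USB 2.0's 480 Mbit/s signaling rate, while VGA at 30 fps (about 147 Mbit/s) fits. This is one reason the HDMI path, which streams frames through the programmable logic, suits higher resolutions.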

4 YOLO and Person Detection

YOLO (You Only Look Once) is a family of real-time object detection and image segmentation models. At the time of writing, the latest version is YOLO11, which builds on recent advances in deep learning and computer vision to improve both speed and accuracy. For edge intelligence, earlier versions are often more practical, and models such as YOLOv3 and YOLOv4 are still widely used. YOLOv3 is the third version of the YOLO object detection algorithm.
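"Looking once" means the network predicts every candidate box in a single forward pass. The YOLOv3 paper describes predictions at three scales (strides 32, 16 and 8) with three anchor boxes per grid cell, each carrying 4 box coordinates, 1 objectness score and one score per class. The arithmetic below works this out for the 80 COCO classes; the 416x416 input is a common choice, assumed here for illustration.

```python
def yolov3_output_shape(input_size=416, num_classes=80,
                        anchors_per_scale=3, strides=(32, 16, 8)):
    """Grid sizes, output channels per cell, and total predicted boxes for YOLOv3."""
    grids = [input_size // s for s in strides]
    # 4 box coordinates + 1 objectness score + one score per class, for each anchor
    channels = anchors_per_scale * (5 + num_classes)
    total_boxes = sum(anchors_per_scale * g * g for g in grids)
    return grids, channels, total_boxes


grids, channels, total = yolov3_output_shape()
print(grids, channels, total)  # [13, 26, 52] 255 10647
```

More than ten thousand candidate boxes per image is why post-processing (confidence filtering and non-maximum suppression) is an essential step after inference.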

After installing the `ultralytics` Python package (`pip install ultralytics`), the code is as simple as:

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv3n model
model = YOLO("yolov3n.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv3n model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```
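Ultralytics already performs non-maximum suppression (NMS), and its predict call can be limited to people with `classes=[0]`. When a compiled YOLOv3 runs on an accelerator such as the DPU, the raw outputs typically have to be post-processed by hand. The plain-Python sketch below shows that step for person detection: keep confident class-0 ("person" in the COCO label list) boxes, then apply greedy NMS based on intersection over union (IoU). The detections, thresholds and function names are hypothetical.

```python
PERSON = 0  # "person" is class 0 in the COCO label list


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detect_people(detections, conf_thresh=0.5, iou_thresh=0.45):
    """Keep confident person boxes, then apply greedy non-maximum suppression.

    detections: list of (box, score, class_id) tuples.
    """
    people = sorted((d for d in detections
                     if d[2] == PERSON and d[1] >= conf_thresh),
                    key=lambda d: d[1], reverse=True)
    kept = []
    for det in people:
        # Keep a box only if it does not overlap too much with a higher-scoring one
        if all(iou(det[0], k[0]) < iou_thresh for k in kept):
            kept.append(det)
    return kept


dets = [
    ((100, 100, 200, 300), 0.90, 0),  # person
    ((105, 110, 205, 305), 0.75, 0),  # duplicate box on the same person
    ((400, 120, 480, 310), 0.60, 0),  # a second person
    ((300, 200, 380, 260), 0.95, 2),  # a car (class 2), ignored
    ((600, 50, 650, 150), 0.30, 0),   # low-confidence person, dropped
]
for box, score, cls in detect_people(dets):
    print(box, score)
```

The duplicate box overlaps the first with an IoU of about 0.84, so NMS removes it and two people remain. With Ultralytics, the equivalent results are available from `results[0].boxes` (`xyxy`, `conf`, `cls`).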

PYNQ-DPU can run YOLOv3 from the Vitis AI Model Zoo: the model is compiled into the .xmodel file format, which is loaded onto the DPU for processing.
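The DPU executes INT8-quantized models, so float image data must be scaled into int8 before inference and the outputs scaled back afterwards. The sketch below assumes the power-of-two scheme used in the DPU-PYNQ examples, where a tensor's `fix_point` attribute gives the exponent (scale = 2**fix_point). The value 6 here is hypothetical; a real one would come from the compiled .xmodel.

```python
def quantize(x, fix_point):
    """Float to int8 with a power-of-two scale: q = round(x * 2**fix_point), clamped."""
    # Python's round() rounds halves to even; hardware rounding may differ
    q = round(x * (2 ** fix_point))
    return max(-128, min(127, q))


def dequantize(q, fix_point):
    """int8 back to float: x = q * 2**-fix_point."""
    return q * (2 ** -fix_point)


fix_point = 6  # hypothetical exponent, i.e. a step size of 1/64
print([quantize(v, fix_point) for v in (0.5, -1.0, 1.234, 3.0)])  # [32, -64, 79, 127]
print(dequantize(quantize(1.234, fix_point), fix_point))          # 1.234375
```

The example shows both costs of INT8: small rounding error (1.234 comes back as 1.234375) and saturation for values outside the representable range (3.0 clamps to 127).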

5 Summary

In summary, with a USB camera, the Arty Z7's eye is open for intelligent applications.