Project Overview

I like running, but I also get injuries from time to time. I attribute them to my bad running form. Unfortunately, I'm not alone. Many runners have the same challenge.

Key Decisions

1. Use the CPU instead of the FPGA for video processing. Reasoning: I didn't have HDMI cameras or a USB-to-HDMI converter. Moving a video stream from USB or the network to the FPGA requires CPU resources and is not a standard pattern for FPGA designs.

2. Use IP cameras instead of USB cameras. Reasoning: The Arty Z7 has only one USB port, and my project requires feeds from two cameras.

Arty Z7 - PYNQ - Setup and Update

Python is the most productive language for me for AI applications. PYNQ provides a Python runtime environment for several boards, including Arty Z7.

Enabling it on the Arty Z7 requires flashing the PYNQ image to an SD card.

After I flashed PYNQ, I created a network connection by connecting the board's Ethernet port to a network switch.

Then I played with the Jupyter notebooks provided as part of the PYNQ distribution. I was very interested in the USB webcam example, but it didn't work. The issue was a permissions problem for my USB webcam on Ubuntu. To fix it, I added my user to the video group:

sudo usermod -a -G video $LOGNAME

Then I created a udev rules file:

sudo vi /etc/udev/rules.d/10-webcam.rules

with the following content:

SUBSYSTEM=="video0", GROUP="video", MODE="0660"

And rebooted.

After the fix was in place and the board was restarted, the example started working well.

Since I selected PYNQ as my runtime environment, it needs to be secured. I started by trying to update it, but the board could not connect to the Internet because my switch only connected my PC and the board. So I configured network sharing of my PC's wireless LAN connection, which gave the board Internet access.

After the update, however, the board became much slower. I investigated and found that two processes caused the slowdown: one had high CPU usage, and the other was filling up the logs.

Model Selection

TensorFlow

The examples for the movenet_singlepose_lightning model were based on the TensorFlow framework, which was developed by Google. While I was able to deploy the TensorFlow Lite runtime on the Arty Z7, I was not successful in running it: I always got core dump errors. So I started looking for alternatives.

ONNX

ONNX is another ML framework. Its runtime, ONNX Runtime, is maintained by Microsoft and its partners. I was able to build it for the Arty Z7, although it was quite a slow and error-prone process. Fortunately, there was a movenet_singlepose_lightning model already converted into ONNX format, which saved me the time required for model conversion. I started testing the model. It was able to process images and find key points and their confidence scores, but it took ~1 second per frame.
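To illustrate what the key points and confidence scores look like, here is a minimal sketch of decoding the model output. It assumes the usual MoveNet single-pose output layout of shape [1, 1, 17, 3], with each keypoint stored as (y, x, score) in normalized coordinates. The function name `decode_keypoints` and the 0.3 score threshold are hypothetical choices for illustration, not taken from the project. It works on plain nested lists, so it can be tried without the runtime installed.

```python
# Keypoint order used by MoveNet (COCO 17-keypoint convention).
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def decode_keypoints(output, width, height, min_score=0.3):
    """Convert a [1, 1, 17, 3] MoveNet output into named pixel coordinates.

    Each entry is (y, x, score) in normalized [0, 1] coordinates.
    Keypoints scoring below min_score (an assumed threshold) are dropped.
    """
    keypoints = {}
    for name, (y, x, score) in zip(KEYPOINT_NAMES, output[0][0]):
        if score >= min_score:
            keypoints[name] = (round(x * width), round(y * height), score)
    return keypoints
```

The returned dictionary maps a keypoint name to an (x, y, score) tuple in pixels, which is a convenient shape for drawing overlays or storing per-frame records.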

UPDATE (19/11/2024):

I was able to build onnxruntime with xnnpack and its Python wrapper directly on Arty Z7. It requires a fast SD card, a lot of swap space (~32GB), and a lot of time (a few days).

The first step is to remove the following package, because onnxruntime uses a different version of it.

sudo apt remove libeigen3-dev

After cloning the project, you can try to build it. Here is my build command:

./build.sh --config Release --parallel --skip_submodule_sync --skip_tests --use_xnnpack --build_wheel --cmake_extra_defines CMAKE_CXX_FLAGS="-Wno-dev -Wall -Wextra -O3 -g3 -mfloat-abi=hard -mfpu=neon-vfpv3 -mcpu=cortex-a9 -mfloat-abi=hard" CMAKE_C_FLAGS="-Wno-dev -Wall -Wextra -O3 -g3 -mfloat-abi=hard -mfpu=neon-vfpv3 -mcpu=cortex-a9 -mfloat-abi=hard"

Video Streaming with OpenCV and FFmpeg

It is important to capture the runner's form from both side and rear perspectives, which requires two cameras. I decided to use a pair of IP cameras I already had. I connected to them from OpenCV and developed a prototype on my PC, expecting the code to work on the Arty Z7 as well. However, RTSP H264 video streaming through OpenCV was not reliable at all on the SBC. So I decided to use FFmpeg to stream from the cameras and pipe raw frames directly. I also used it for decoding H264 and for rotating, resizing, and cropping images. It worked, with one challenge: after ~180 frames FFmpeg needs to be restarted, and the restart takes ~2-3 seconds. FFmpeg has a lot of parameters, and I hope to find the right set of them so it can receive streams without restarts.
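The approach above can be sketched roughly as follows. This is not my exact code: the 192x192 frame size, the specific filter chain, and the function names are assumptions for illustration, and a crop filter could be appended to the `-vf` chain in the same way. The idea is that FFmpeg decodes the RTSP stream and writes fixed-size raw BGR frames to stdout, and Python reads exactly one frame's worth of bytes at a time, restarting FFmpeg whenever the output ends.

```python
import subprocess

WIDTH, HEIGHT = 192, 192          # assumed output size, not from the project
FRAME_BYTES = WIDTH * HEIGHT * 3  # bgr24 uses 3 bytes per pixel


def ffmpeg_command(rtsp_url, width=WIDTH, height=HEIGHT):
    """Build an FFmpeg command that decodes RTSP and writes raw BGR frames to stdout."""
    return [
        "ffmpeg", "-loglevel", "error",
        "-rtsp_transport", "tcp",                      # request RTSP over TCP
        "-i", rtsp_url,
        "-vf", f"transpose=1,scale={width}:{height}",  # rotate 90 degrees clockwise, then resize
        "-an",                                         # drop audio
        "-f", "rawvideo", "-pix_fmt", "bgr24",
        "pipe:1",
    ]


def read_frames(stream, frame_bytes=FRAME_BYTES):
    """Yield complete raw frames from a byte stream; stop on EOF or a short read."""
    while True:
        chunk = stream.read(frame_bytes)
        if len(chunk) < frame_bytes:
            return
        yield chunk


def stream_forever(rtsp_url, handle_frame):
    """Restart FFmpeg whenever its output ends, mirroring the restart workaround."""
    while True:
        proc = subprocess.Popen(ffmpeg_command(rtsp_url), stdout=subprocess.PIPE)
        try:
            for frame in read_frames(proc.stdout):
                handle_frame(frame)
        finally:
            proc.kill()
            proc.wait()
```

Each yielded frame is a `bytes` object of length `FRAME_BYTES`. With NumPy available it can be viewed as an image via `numpy.frombuffer(frame, dtype=numpy.uint8).reshape(HEIGHT, WIDTH, 3)`.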

Video Output

Not all video formats are supported by modern browsers, and encoding into some of them is very resource-intensive. After some research and experimentation, I chose the following:

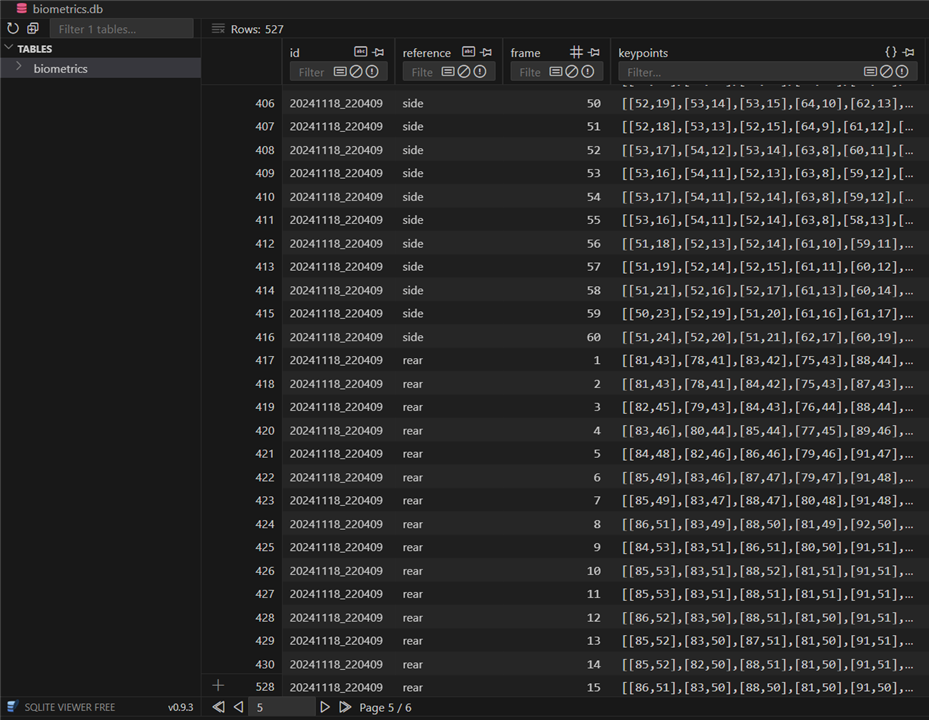

Data Capture

I decided to use an SQLite 3 database to record the keypoint data for each frame produced by the model. This makes it possible to run different biomechanics assessment algorithms on the data later and relate their results to the videos.
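A minimal sketch of such a store is shown below. The table layout, column names, and helper functions are hypothetical and only illustrate one way to key the data by camera and frame; the actual schema may differ.

```python
import sqlite3

# Hypothetical schema: one row per keypoint per frame per camera.
SCHEMA = """
CREATE TABLE IF NOT EXISTS keypoints (
    camera TEXT    NOT NULL,   -- e.g. 'side' or 'rear'
    frame  INTEGER NOT NULL,   -- frame index within the video
    name   TEXT    NOT NULL,   -- keypoint name, e.g. 'left_knee'
    x      REAL    NOT NULL,
    y      REAL    NOT NULL,
    score  REAL    NOT NULL,
    PRIMARY KEY (camera, frame, name)
)
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_frame(conn, camera, frame, keypoints):
    """Store one frame; keypoints maps name -> (x, y, score)."""
    rows = [(camera, frame, name, x, y, s) for name, (x, y, s) in keypoints.items()]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO keypoints VALUES (?, ?, ?, ?, ?, ?)", rows
        )


def load_track(conn, camera, name):
    """Return [(frame, x, y, score), ...] for one keypoint, ordered by frame."""
    cur = conn.execute(
        "SELECT frame, x, y, score FROM keypoints "
        "WHERE camera = ? AND name = ? ORDER BY frame",
        (camera, name),
    )
    return cur.fetchall()
```

Keeping the frame index in the key is what lets an assessment result be traced back to the matching moment in the video.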

Demo

I did a short run and created a short video to capture my running form and related metrics.

One video was captured from a side view and another from the rear.

To-Do List

I still have a long list of remaining tasks. Here are some of them:

Calculate key runner metrics from the keypoint data set.

Complete integration with Tornado HTTP Server provided on PYNQ so it can show runner analytics on a smart TV during the run.

Create a stand for cameras.

Publish the project on GitHub.
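As an illustration of the first item, one metric that could be derived from the keypoints is a joint angle, for example the knee angle formed by the hip, knee, and ankle. This is only a sketch under that assumption; the choice of metric and the function name `joint_angle` are hypothetical, and the actual metrics are still to be decided.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by points a-b-c.

    Each point is an (x, y) pair. Returns None if a or c coincides with b.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return None
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
```

Calling it with the hip, knee, and ankle positions from a side-view frame would give the knee angle for that frame; a fully straight leg gives 180 degrees.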

Conclusion

Despite numerous challenges with software components, I was quite happy to get to this point. The project proved that even on the armv7l architecture, it is possible to run complex computer vision models in near real-time. While I haven't been able to complete all my objectives, I've built a solid foundation for further improvements.

I would like to thank the AMD team and Element 14 for selecting my project proposal for this design challenge. The challenge pushed my curiosity and allowed me to explore new areas of sports biomechanics, computer vision, the ONNX framework, and the ML ecosystem. I would also like to thank all members of the community who have worked on this challenge or shared their feedback. And a special thanks to Amazon Q which helped me with some Python coding.
