Vitis AI

Vitis AI is an interesting project from AMD for developing AI inference solutions. There are several versions of Vitis AI; unlike my previous post, which used the PYNQ Computer Vision environment, this time I use the Vitis AI 2.0 image available here. Before running AI/ML models on the board, it is recommended to update the PMIC devices: depending on the model, power consumption can increase enough to make the board reboot. Following section 14 of the manual, you can use a terminal to detect the current firmware of the PMICs:

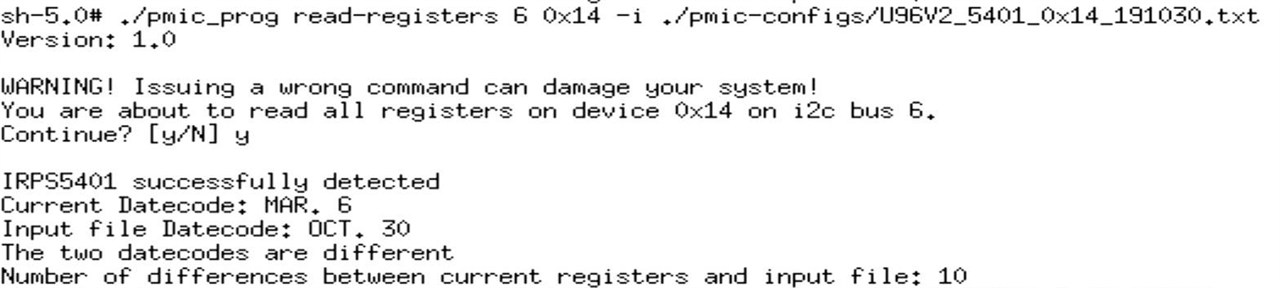

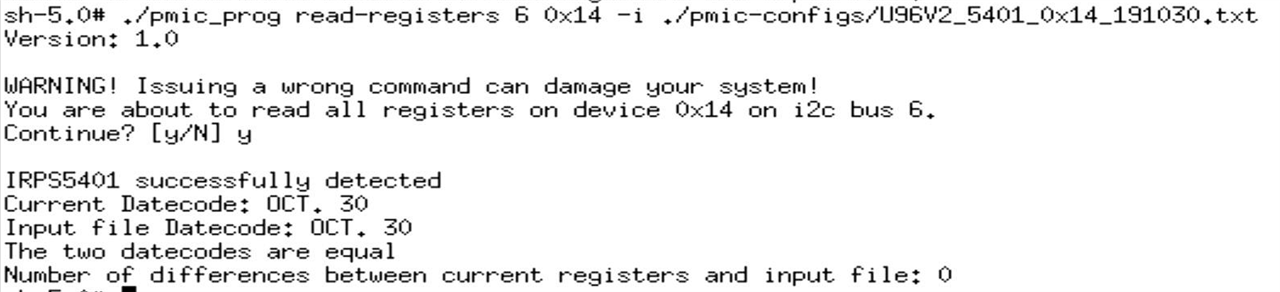

You MUST perform both updates in the same session, or you risk damaging the board. To verify that the PMICs are updated, run the read-registers command and check the date reported by the device.

Read the manual's instructions for the PMIC register update carefully to avoid damaging your board.

The main advantages of Vitis AI are the Deep Learning Processor Unit (DPU), a processor optimized for AI inference, and the high-level abstraction it provides for developing computer vision systems on this architecture.

Camera support

Despite the effectiveness of Vitis AI, I ran into some issues with my cameras. One of the devices supported by the Ultra96-V2 is the Intel RealSense platform. Integrating the camera is very slow if it is not already built into your image; building it from the librealsense repository took a long time. The incompatibility between Vitis AI and librealsense comes from xinerama-dev, which conflicts with the xorg version shipped in the default Vitis images. PYNQ does not have this incompatibility, but integrating the camera on a pre-built image still requires a long compilation time. The RealSense platform projects an infrared (IR) pattern onto the scene and uses it to estimate depth. You can take a dark shot with another camera to see the IR pattern, as follows:
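As I understand the D400 family, the projected IR pattern adds texture so that two IR imagers can match the same point in both views, and depth then follows from stereo triangulation, Z = f·B/d. The sketch below shows only that arithmetic for a rectified stereo pair; it is not librealsense code, and the focal length and baseline are hypothetical values (the baseline is roughly that of a D415), so read the real ones from your camera's calibration.

```python
# Hypothetical parameters for illustration only; use your camera's calibration.
FOCAL_PX = 640.0    # focal length in pixels (assumed)
BASELINE_M = 0.055  # distance between the two IR imagers in metres (assumed, ~D415)


def disparity_to_depth_m(disparity_px, focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Depth of a matched point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means no match (or a point at infinity).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m, disparity_error_px=0.1,
                  focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Approximate depth uncertainty: differentiating Z = f*B/d gives
    |dZ| = Z**2 / (f*B) * |dd|, so the error grows with the square of depth."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    for d in (40.0, 20.0, 10.0):
        z = disparity_to_depth_m(d)
        print(f"disparity {d:5.1f} px -> depth {z:.2f} m (+/- {depth_error_m(z) * 1000:.1f} mm)")
```

The quadratic error term is why structured-light or active-stereo cameras like this work best at short range.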

Above you can see a video testing the D415 depth camera at 2 FPS. It can be configured for faster acquisition and processing. However, I was not able to run the RealSense platform under Vitis AI, and my configurable camera was not directly supported by the platform. This limited the cameras I could use and ruled out my macroscopy setup. The main project is to embed seed characterization on the board, but that will have to wait for now.

Real-Time

The Ultra96 has dedicated hardware to accelerate the processing of images and other kinds of data. For image processing and deep learning inference, the platform runs inference fast enough for real-time use. One application is pose estimation: from an image, we can superimpose a 'skeleton' that shows the position of the body.
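A pose estimation model typically outputs a set of keypoints (joint position plus confidence), and the 'skeleton' is just a list of joint pairs drawn as line segments. The sketch below assumes COCO-style joint names and a hypothetical limb list; the actual keypoint set and order depend on the pose model you deploy on the DPU.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Keypoint = Tuple[float, float, float]  # (x, y, confidence)

# Hypothetical limb list using COCO-like joint names (an assumption).
LIMBS = [
    ("left_shoulder", "right_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
    ("left_shoulder", "left_hip"),
    ("right_shoulder", "right_hip"),
    ("left_hip", "right_hip"),
    ("left_hip", "left_knee"),
    ("left_knee", "left_ankle"),
    ("right_hip", "right_knee"),
    ("right_knee", "right_ankle"),
]


def skeleton_segments(keypoints: Dict[str, Keypoint],
                      min_score: float = 0.3) -> List[Tuple[Point, Point]]:
    """Return (start, end) pixel pairs for every limb whose two joints
    were detected with at least `min_score` confidence."""
    segments = []
    for a, b in LIMBS:
        ka, kb = keypoints.get(a), keypoints.get(b)
        if ka is None or kb is None:
            continue  # joint not detected at all
        if ka[2] < min_score or kb[2] < min_score:
            continue  # skip low-confidence joints to avoid stray lines
        segments.append(((ka[0], ka[1]), (kb[0], kb[1])))
    return segments
```

Each returned segment can then be drawn over the frame, for example with OpenCV's `cv2.line` after converting the points to integers. Filtering by confidence keeps occluded joints from producing misleading limbs.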

Two details did not convince me: the screen flickers while the video is processed, and the windows cannot be resized. Despite these inconveniences, we can accelerate a wide range of inference models using the DPU. In addition, you can reconfigure your models with the Vitis AI framework to target other DPUs or to change the models' input and output sizes.

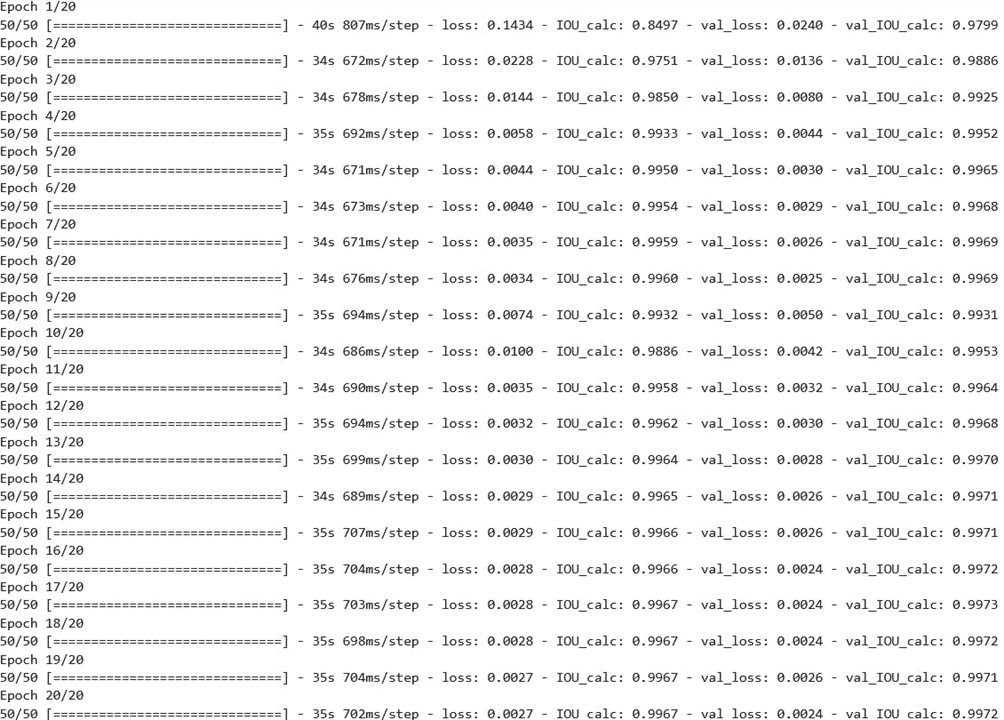

Remember that to speed up training and quantization of the models, you need an NVIDIA GPU. Below is the training log of a U-Net model that I used to segment seeds in another project. This time, I was working on embedding that processing in a small-form-factor platform.
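Quantization maps the float weights and activations of a trained model to 8-bit integers so the DPU can run them. As I understand it, the DPU uses power-of-two scales, where each tensor carries a fixed-point position and its scale is 2**fix_point. The sketch below shows only that arithmetic; the `fix_point` parameter name mirrors the tensor attribute seen in Vitis AI examples, but treat it as an assumption and read the actual value from your compiled model.

```python
from typing import List


def quantize_int8(values: List[float], fix_point: int) -> List[int]:
    """Power-of-two symmetric quantization to int8 (sketch, assumed scheme)."""
    scale = 2 ** fix_point
    out = []
    for v in values:
        q = round(v * scale)  # Python rounds halves to even; hardware may differ
        out.append(max(-128, min(127, q)))  # saturate to the int8 range
    return out


def dequantize_int8(qvalues: List[int], fix_point: int) -> List[float]:
    """Map int8 codes back to floats with the same power-of-two scale."""
    scale = 2 ** fix_point
    return [q / scale for q in qvalues]


if __name__ == "__main__":
    x = [0.5, -1.0, 0.3, 10.0]
    q = quantize_int8(x, fix_point=6)  # scale = 64
    print(q, dequantize_int8(q, fix_point=6))
```

With `fix_point=6`, values are representable in steps of 1/64 up to 127/64, so 10.0 saturates; choosing the fixed-point position per tensor is what the quantizer's calibration step is for.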

I obtained results like the following, extracting features from the seeds and characterizing them one by one to build an inventory that helps secure a high-quality harvest.
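Once a segmentation model such as U-Net produces a binary mask, characterizing seeds one by one means splitting the mask into individual objects and measuring each. The sketch below uses 4-connected component labeling with a breadth-first flood fill; the features (area, bounding box, centroid) and the `min_area` filter are hypothetical examples, not the features used in the original project.

```python
from collections import deque
from typing import Dict, List


def characterize_seeds(mask: List[List[int]], min_area: int = 1) -> List[Dict[str, object]]:
    """Label 4-connected foreground regions of a binary mask and return
    simple per-seed features, in row-major order of first pixel found."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    seeds = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects every pixel of this seed.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) < min_area:
                continue  # drop specks that are likely segmentation noise
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            seeds.append({
                "area_px": len(pixels),
                "bbox": (min(ys), min(xs), max(ys), max(xs)),  # (top, left, bottom, right)
                "centroid": (sum(ys) / len(pixels), sum(xs) / len(pixels)),
            })
    return seeds
```

Areas in pixels can be converted to physical units once the camera's scale (for example, mm per pixel) is calibrated.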