I described the development and verification of an Edge Impulse model for audio classification in two earlier posts.

The next step is to deploy the model to the Nicla Vision board.

Model Deployment

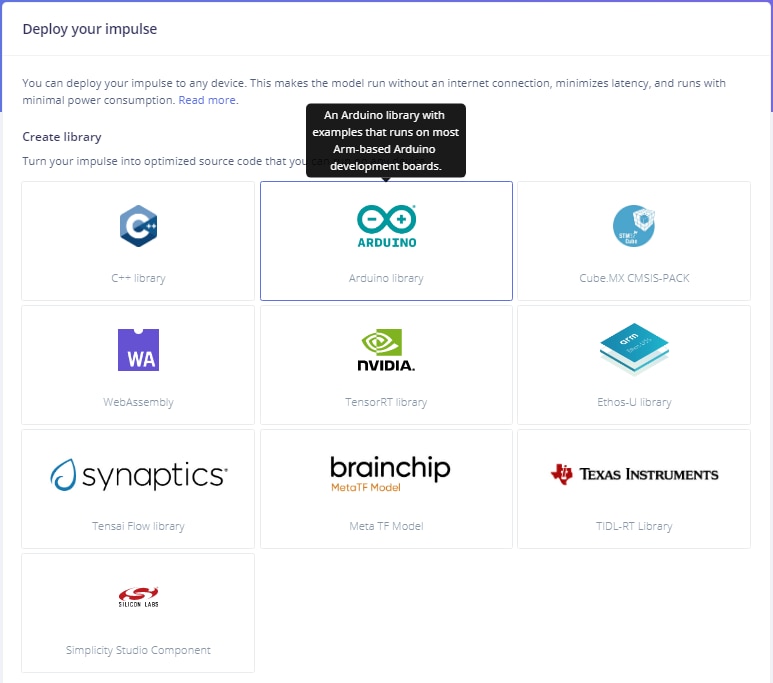

Build Arduino library

To integrate the impulse with other code, I will deploy it as a library. I will be using the Arduino IDE with the MKR WAN 1310 and Nicla Vision boards, so I will build an Arduino library.

You just need to select the Arduino library option and press the Build button.

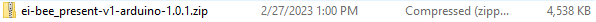

When the build finishes, the library is packaged as a .ZIP file that is ready to load into the Arduino IDE.

If you examine the library file structure, you will see that it has examples included for supported boards. The model parameters are located under the src directory.

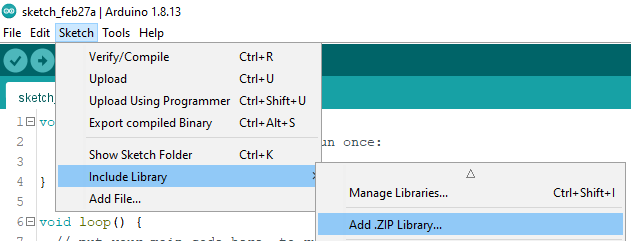

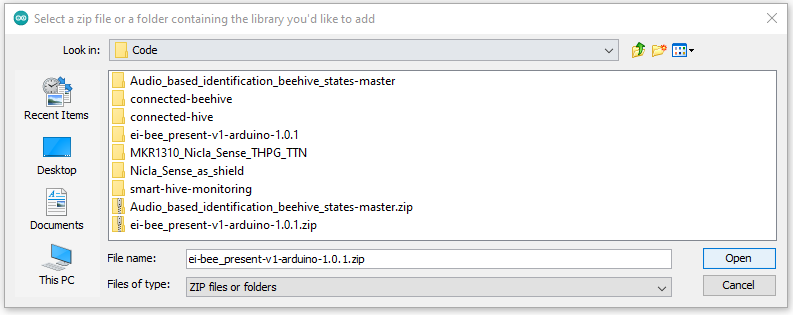

Then you just need to add the library to the IDE (Sketch > Include Library > Add .ZIP Library...):

Test library on Nicla Vision

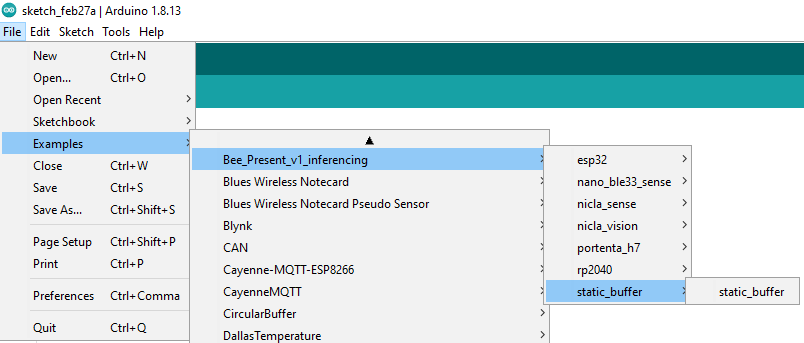

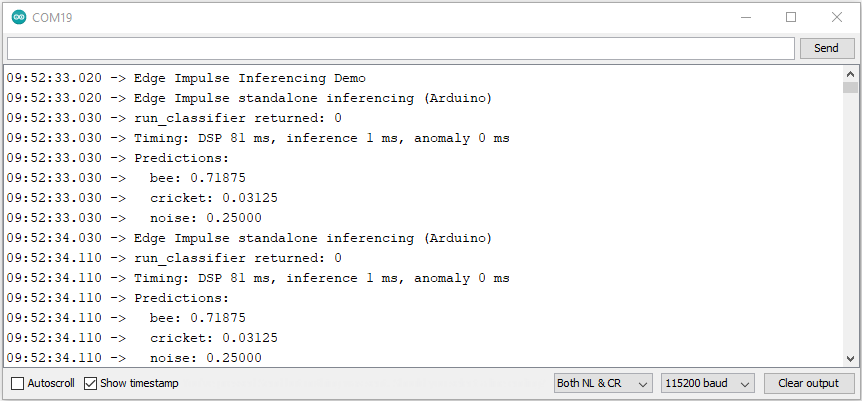

The recommended method to test the model operation is to use the Static Buffer example from the library.

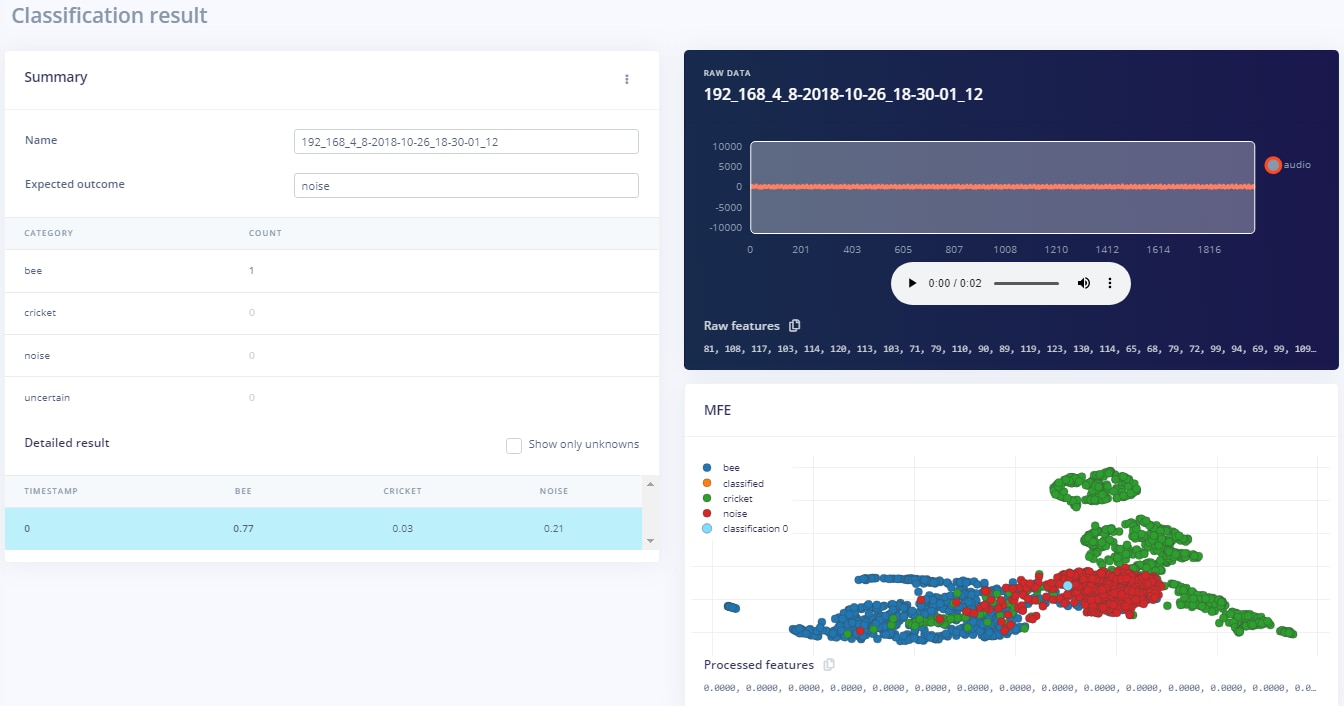

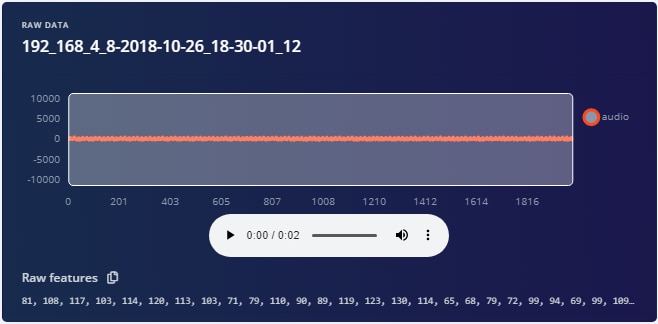

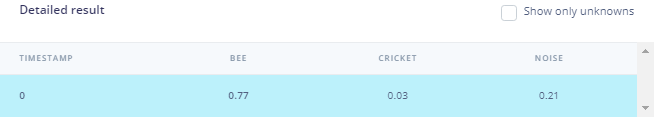

Using Live Classification on the project dashboard, select and classify one of the test files.

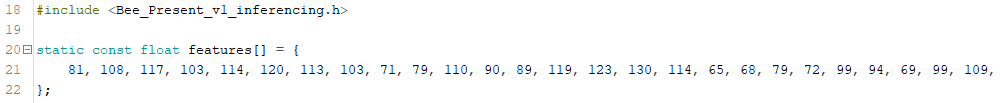

Copy the Raw features from that file and paste them into the features[] array in the static_buffer program. Then compile the program and upload it to the Nicla Vision.

Run the program and compare the output with the Live Classification result.

The result is almost, but not quite, what I expected: the numbers don't quite match. I'm not sure whether this is due to some processing limitation on the Nicla Vision.

| Class | Live Classification | Nicla Vision |
| --- | --- | --- |
| bee | 0.77 | 0.72 |
| cricket | 0.03 | 0.03 |
| noise | 0.21 | 0.25 |

This probably warrants further investigation, but I'm going to consider it good enough for now.
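One way to make "good enough" concrete is to check that both runs pick the same class and that no score differs by more than some tolerance. The following is a minimal sketch in plain C++ using the scores from the table above. The function names and the tolerance are hypothetical choices for illustration, not part of the Edge Impulse library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Index of the highest score, i.e. the predicted class.
size_t top_class(const float *scores, size_t count) {
    assert(count > 0);
    size_t best = 0;
    for (size_t i = 1; i < count; ++i) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return best;
}

// Largest per-class absolute difference between two score lists.
float max_abs_diff(const float *a, const float *b, size_t count) {
    float worst = 0.0f;
    for (size_t i = 0; i < count; ++i) {
        float d = std::fabs(a[i] - b[i]);
        if (d > worst) {
            worst = d;
        }
    }
    return worst;
}

// Scores from the table, in the order bee, cricket, noise.
static const float kLive[]  = { 0.77f, 0.03f, 0.21f };
static const float kNicla[] = { 0.72f, 0.03f, 0.25f };

// Agreement means: same predicted class and every score within `tolerance`
// (a hypothetical threshold chosen by the reader).
bool results_agree(float tolerance) {
    return top_class(kLive, 3) == top_class(kNicla, 3)
        && max_abs_diff(kLive, kNicla, 3) <= tolerance;
}
```

For these numbers both runs predict bee and the largest gap is about 0.05 (the bee score), so `results_agree(0.1f)` holds while `results_agree(0.01f)` does not.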

The next step is to create the program to do live inferencing on the Nicla Vision and interface it to the MKR WAN 1310.