PYNQ-Z2: Embedded Vision Workshop Series with Adam Taylor


TL;DR - How many waves can you add to WaveDrom? Benchmark it using Taylor waves!

Grab a cup of tea; the purpose of this blog post is to put a smile on your face.

The rabbit hole

This post is a tale that ends in Taylor waves... a very specific, beautiful kind of waves...

Let me tell you how I got sucked into the rabbit hole.

You've got mail

A few weeks ago I got triggered by an email I received. Not the common spam that gets sorted into your spam folder, or that you instantly delete when it slips past the spam rules. No, this one was different...

The subject created sparkles in my eyes, I dropped everything and started reading.

It was element14 who had sent me this delightful mail. Very soon an online course would start, taught by Adam Taylor.

There was no doubt, I was going to follow that course, no matter what. Even if they locked me in my house for several months... wait... that wouldn't be bad at all... Great!

The course was going to be in element14 style: on24.com for the webinar, a great slide deck, a superb speaker, and all your questions answered.

You might be wondering what it was that got my interest. Well, Adam Taylor was going to teach us how to use the PYNQ framework.

And this PYNQ framework is a big thing: it enables you to quickly write up some code in Python. Once you get your stuff working, you dive in to find the sweet spots for performance gains.

These performance gains are of course implemented in hardware, we are talking FPGA here...

And when you have finished your overlay, which is a bitstream, you can have Python instruct the PYNQ board to load your overlay. It is even possible to create partially reconfigurable overlays, but that is another rabbit hole...
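As a rough sketch of what "have Python load your overlay" can look like: the `pynq` package provides an `Overlay` class that takes the name of a bitstream file. The helper name `load_overlay` and the file name `base.bit` are my own assumptions for illustration. The import is guarded so the snippet also runs on a machine without a PYNQ image, where it simply returns `None`.

```python
# Sketch only: load_overlay is a hypothetical helper and
# base.bit is an assumed bitstream name.
try:
    from pynq import Overlay  # only available on a PYNQ image
except ImportError:
    Overlay = None


def load_overlay(bitfile="base.bit"):
    """Download the bitstream to the programmable logic and return the overlay."""
    if Overlay is None:
        print("pynq is not installed, skipping overlay download")
        return None
    return Overlay(bitfile)


overlay = load_overlay()
```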

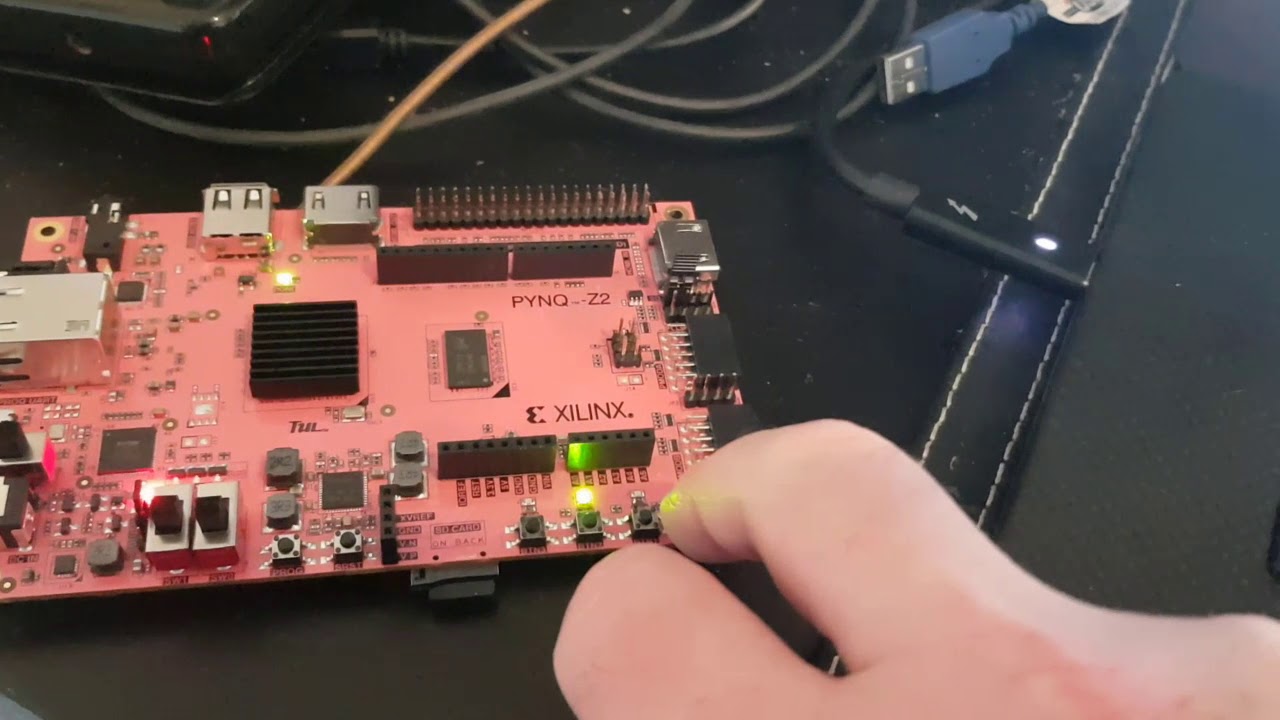

I gathered all the material required to follow the course: a PYNQ-Z2 board, an SD card and an HDMI camera.

The PYNQ-Z2 is built around a Zynq-7020 device by Xilinx. It essentially has two parts: the programmable logic (PL, which is the FPGA) and a processing system (PS, a dual-core ARM Cortex-A9).

The board has lots of interfaces (DDR, USB, UART, Ethernet, CAN, SPI, I2C).

Webinars

In total there will be three sessions to follow.

We start by exploring the PYNQ framework and basics of overlays.

Session 1

After downloading an image, writing it to an SD card and booting the board, I got to see Jupyter Lab in Chrome and I could start coding.

During the webinar Adam told us about the board and how to program it.

First we did a small tutorial about reading buttons and having a logic function light up some LEDs accordingly. Next we learnt about using overlays and got to see what such a PYNQ board is capable of. There is quite a big community creating overlays which you can use in your own projects. Neural network inference on such a board can run 10x to 100x faster than in software.

WaveDrom

Another interesting thing I learned about was WaveDrom. This is a package which can be used on the PYNQ to display waveforms for your simulations. Nice!

After the webinar I started looking up the capabilities of this WaveDrom package and decided to experiment with it to see how many waveforms it can handle.

And it should be no surprise to you, what is the best use case for creating lots of waves? Right, Taylor waves!

Now, don't be fooled, this is for the brave at heart!

I suppose you have the board and the PYNQ framework running...

Let's get started, open up your browser and go to PYNQ:9090/lab

Some installation required

First you should open up a terminal and install scikit-learn:

sudo pip3 install --upgrade cython
sudo pip3 install scikit-learn

This will take over an hour to install... just let it spin for a while.

Don't give up yet, we're just starting our journey...

On to the code (all code is attached as a zip file at the bottom of this blog post)

Next create a new notebook and import the following packages:

from pynq.lib.logictools import Waveform
import numpy as np
import matplotlib.pyplot as plt
import cv2
from sklearn.cluster import MiniBatchKMeans
from datetime import datetime

Now we need some functions to normalize and denormalize the Taylor data:

def normalize(x):
    """
    Normalize a list of sample data to the range of 0 to 1
    : x: List of data, e.g. an image of shape (64, 64, 3)
    : return: Numpy array of normalized data
    """
    return np.array((x - np.min(x)) / (np.max(x) - np.min(x)))

def denormalize(x, max_value):
    """
    Scale normalized data from the range of 0 to 1 up to the range of 0 to max_value
    : x: Numpy array of normalized data
    : max_value: Upper bound of the output range
    : return: Numpy array of scaled data
    """
    return np.array(max_value * x)
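To see what these two helpers do to the pixel values, here is a small worked example in plain Python, without NumPy. The list-based function names and the four sample values are made up for illustration; the idea is the same min-max scaling followed by stretching to the range 0 to 7, which is the number of wave symbols used further down.

```python
def normalize_list(values):
    """Min-max scale a flat list of numbers into the range 0..1.

    Assumes the list holds at least two different values,
    otherwise the division below would be by zero.
    """
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def denormalize_list(values, max_value):
    """Stretch values from the range 0..1 to the range 0..max_value."""
    return [max_value * v for v in values]


pixels = [0, 51, 102, 255]  # made-up 8-bit channel values
scaled = denormalize_list(normalize_list(pixels), 7)
levels = [int(round(v)) for v in scaled]
print(levels)  # prints [0, 1, 3, 7]
```

Each level then selects one of the eight wave symbols.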

At this point I was getting stressed... As every well-educated engineer knows, Taylor waves need something pretty amazing to start from.

We are using an FPGA, and those are good at handling images, so let's start with an image. We can continue with video afterwards.

#Load image

im = cv2.imread('images/taylor.jpg')

#Resize image

resized = cv2.resize(im, (64,64), interpolation = cv2.INTER_AREA)

Since I did not know if this would work I thought it would be best to throw in some color quantization, that looks fancy and we surely must show off the capabilities of the board!

# Following code to do some color quantisation is by Adrian Rosebrock

# https://www.pyimagesearch.com/2014/07/07/color-quantization-opencv-using-k-means-clustering/

# load the image and grab its width and height

image = resized

(h, w) = image.shape[:2]

# convert the image from the BGR color space (cv2.imread returns BGR,
# not RGB) to the L*a*b* color space -- since we will be clustering
# using k-means which is based on the euclidean distance, we'll use
# the L*a*b* color space where the euclidean distance implies
# perceptual meaning
image = cv2.cvtColor(image, cv2.COLOR_BGR2LAB)

# reshape the image into a feature vector so that k-means

# can be applied

image = image.reshape((image.shape[0] * image.shape[1], 3))

# apply k-means using the specified number of clusters and

# then create the quantized image based on the predictions

clt = MiniBatchKMeans(n_clusters = 16)

labels = clt.fit_predict(image)

quant = clt.cluster_centers_.astype("uint8")[labels]

# reshape the feature vectors to images

quant = quant.reshape((h, w, 3))

image = image.reshape((h, w, 3))

# convert from L*a*b* to RGB

quant = cv2.cvtColor(quant, cv2.COLOR_LAB2RGB)

image = cv2.cvtColor(image, cv2.COLOR_LAB2RGB)
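To get a feel for what the clustering step does, here is a minimal pure-Python sketch of plain k-means (Lloyd's algorithm) on a one-dimensional list of grey values. This is not how `MiniBatchKMeans` works internally (it processes mini-batches, and in the code above it clusters three L*a*b* channels); the function name `kmeans_1d`, the evenly spread starting centers and the sample values are my own assumptions.

```python
def kmeans_1d(values, k, iterations=10):
    """Plain Lloyd's k-means on a list of numbers (needs k >= 2).

    Returns (centers, labels), where labels[i] is the index of the
    center that values[i] was assigned to.
    """
    lo, hi = min(values), max(values)
    # Assumed initialisation: spread the k centers evenly between min and max.
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value goes to its nearest center.
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        # Update step: each center moves to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:  # keep the old center if a cluster ends up empty
                centers[c] = sum(members) / len(members)
    return centers, labels


grey = [10, 12, 14, 200, 202, 204]  # made-up pixel values
centers, labels = kmeans_1d(grey, k=2)
quantised = [centers[lab] for lab in labels]
print(centers)    # prints [12.0, 202.0]
print(quantised)  # prints [12.0, 12.0, 12.0, 202.0, 202.0, 202.0]
```

Replacing every value by its cluster center is the same trick as `clt.cluster_centers_[labels]` above, just with 2 clusters instead of 16.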

Let's see what the Taylor data looks like:

#Show the image

plt.imshow(quant)

plt.title('quant')

plt.show()

The real stuff

And now the most important part of our code. This is where the real work happens, and we'll set up a timer to see how long it takes to display these waves:

#Now let's create some Taylor waves!

quant=denormalize(normalize(quant),7)

switcher={

0:'x',

1:'2',

2:'=',

3:'1',

4:'u',

5:'z',

6:'0',

7:'d',

}

taylorlogic = { "signal" : ["Taylor"] }
print(taylorlogic)
# Walk over all 64 rows and 64 columns of the resized image
for y in range(64):
    linestr = ""
    for x in range(64):
        # Map the first colour channel (scaled to 0..7) to a wave symbol
        linestr = linestr + switcher.get(int(round(quant[y,x][0],0)),'d')
    taylorlogic['signal'].append({ "name": y, "wave": linestr, "data": "" })
print(taylorlogic)

start = datetime.now()

taylorwave = Waveform(taylorlogic)

taylorwave.display()

end = datetime.now()

micros = int((end - start).total_seconds() * 1000000)

print("Displaying the Taylor waves took {} microseconds".format(micros))
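If you want to try the wave-building step without a board, here is a small stand-alone sketch. It mirrors the dictionary structure used above and prints it as JSON instead of handing it to `Waveform`. The function name `rows_to_wavedrom` and the tiny 2x4 test "image" are my own inventions; the symbol table is the same as the switcher dictionary.

```python
import json

# Same level-to-symbol mapping as the switcher dictionary in the post.
SYMBOLS = {0: 'x', 1: '2', 2: '=', 3: '1', 4: 'u', 5: 'z', 6: '0', 7: 'd'}


def rows_to_wavedrom(rows, title="Taylor"):
    """Turn a 2D list of levels (0..7) into a WaveDrom-style dictionary."""
    signal = [title]
    for y, row in enumerate(rows):
        # One symbol per pixel; unknown levels fall back to 'd'.
        wave = "".join(SYMBOLS.get(level, 'd') for level in row)
        signal.append({"name": str(y), "wave": wave, "data": ""})
    return {"signal": signal}


tiny = [[0, 1, 2, 3],
        [4, 5, 6, 7]]  # made-up 2x4 "image"
print(json.dumps(rows_to_wavedrom(tiny), indent=2))
```

Using `"".join(...)` avoids growing the string one character at a time, although for 64 symbols per row the difference is negligible.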

Just show me what Taylor waves are

And this is what the Taylor waves look like:

Now, I must admit I was a bit disappointed too. After looking through my code I found the bug.

And now the beauty

Apply these changes:

#Load image

#im = cv2.imread('images/taylor.jpg')

im = cv2.imread('images/swift2.jpg')

Displaying the Taylor waves took 13,378,224 microseconds

So, now you know what Taylor waves are! Beautiful, aren't they?

Final thoughts

I was surprised that displaying these 64 waveforms of 64 clock cycles took so long to render...
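To put that number in perspective, here is the arithmetic spelled out, taking the figures from this post at face value (13,378,224 microseconds for 64 waveforms of 64 clock cycles each). The variable names are just for illustration.

```python
total_us = 13_378_224      # measured value from this post, in microseconds
waveforms = 64
cycles_per_waveform = 64

total_s = total_us / 1_000_000
per_waveform_ms = total_us / waveforms / 1000
per_cycle_ms = total_us / (waveforms * cycles_per_waveform) / 1000

print(f"total: {total_s:.2f} s")                          # total: 13.38 s
print(f"per waveform: {per_waveform_ms:.1f} ms")          # per waveform: 209.0 ms
print(f"per clock cycle drawn: {per_cycle_ms:.2f} ms")    # per clock cycle drawn: 3.27 ms
```

So that is roughly a fifth of a second per waveform, or a bit over 3 ms for every single symbol drawn.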

The waveform is actually an SVG.

I suppose the SVG is created in JavaScript, and that this takes a long time.

So while it is very interesting to see some output of your design while you are programming, it probably will not be as powerful as an Analog Discovery's output.

Some more profiling needs to be done to see which parts of this code could be optimized.
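A first step towards that profiling could be to time each stage separately instead of the whole thing at once. Below is a minimal sketch using `time.perf_counter` and a small context manager. The helper name `timed` and the two stand-in workloads are my own assumptions; on the board you would wrap the real steps, for example building the dictionary and calling `display()`.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def timed(label):
    """Record the wall-clock duration of the enclosed block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


# Stand-in workloads (hypothetical), replace them with the real steps.
with timed("build wave strings"):
    waves = ["".join("01"[(x + y) % 2] for x in range(64)) for y in range(64)]

with timed("join into one blob"):
    blob = "\n".join(waves)

# Slowest stage first.
for label, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label}: {seconds * 1e6:.0f} microseconds")
```

Note that this only measures the Python side. If the SVG really is rendered in the browser, that part would have to be measured there.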

Well, this ends my write-up for the first PYNQ Workshop. I hope you enjoyed reading it!

While this was as much fooling around as it was an experiment with WaveDrom, I am looking forward to the next PYNQ Workshop, where we will learn how to create our own overlays.

That way I hope to be able to speed up some parts of this silly code.

I will be using WaveDrom again in the future.

If you enjoyed reading my post, please like it!

To be honest, you don't need the color quantization to do any of this, I just had so much fun with it that I left it in...

Thanks to element14 and adamtaylorcengfiet for this fantastic webinar!

Kind regards,

Yuri
