Introduction:

In this blog, I will try to implement OCR (Optical Character Recognition) using pytesseract package (python package to access the Tesseract OCR) and EAST Text Detector on the Zero2W board.

Python-tesseract is a wrapper for Google's Tesseract OCR Engine. It can read all image types supported by the Pillow and Leptonica imaging libraries, including jpeg, png, gif, bmp, tiff, and others.

OpenCV’s EAST text detector is a deep learning model, based on a novel architecture and training pattern. It is capable of (1) running at near real-time at 13 FPS on 720p images and (2) obtains state-of-the-art text detection accuracy.

Setup:

First, update and upgrade the board and install pip:

$ sudo apt update

$ sudo apt full-upgrade

$ sudo apt install python3-pip

To implement the Tesseract-OCR, we will need the following packages:

- Pillow

pip install Pillow

- Tesseract OCR Engine

sudo apt install tesseract-ocr -y

- Pytesseract (Python wrapper for Tesseract OCR)

pip install pytesseract

For East Text Detector, we will need following packages:

- Opencv:

pip install opencv-python

- Imutils:

pip install imutils

- Download the frozen_east_text_detection.pb dataset and paste it in the working directory.

After installing the packages, we can now start working on our code.

OCR using Pytesseract:

Code:

The code for OCR is given below:

from PIL import Image, ImageOps

import pytesseract

import argparse

import os

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required=True, help="path to input image to be OCR'd")

args = vars(ap.parse_args())

#Open the test image

image = Image.open(args["image"])

#Convert the image to grayscale

gray = ImageOps.grayscale(image)

#Temporarily save the grayscale file

filename = "{}.png".format(os.getpid())

gray.save(filename)

#Open the grayscale file and run OCR on it

text = pytesseract.image_to_string(Image.open(filename))

os.remove(filename)

print(text)

To start the OCR, run the following command:

python pytesseract.py --image path/to/image.jpg

The path to the test image is passed through the '--image' argument.

This image is then opened by the 'Image.open()' function.

Then the image is converted to grayscale and temporarily saved on the disk.

The grayscale image is then opened and fed to the 'image_to_string' converter of the pytesseract.

Testing:

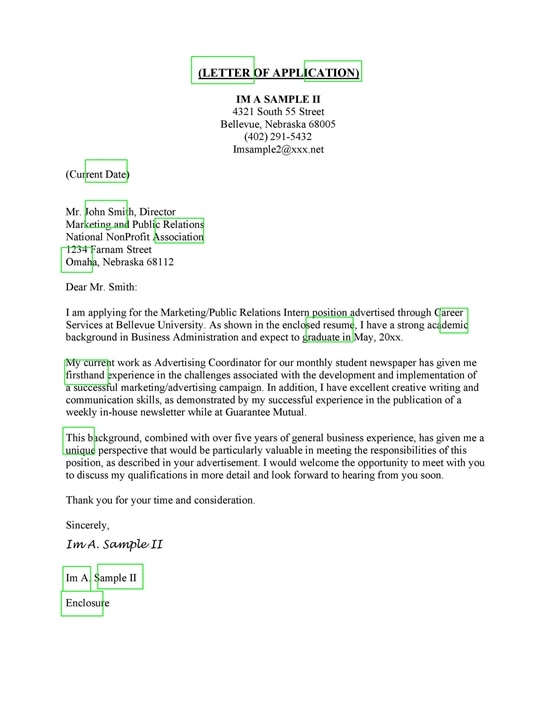

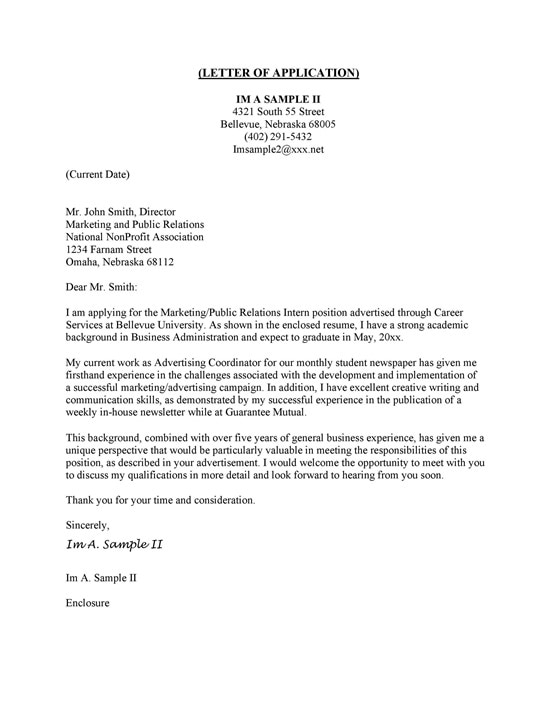

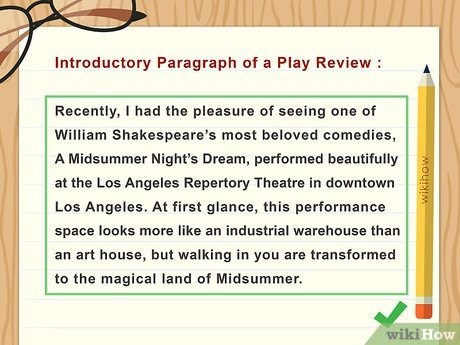

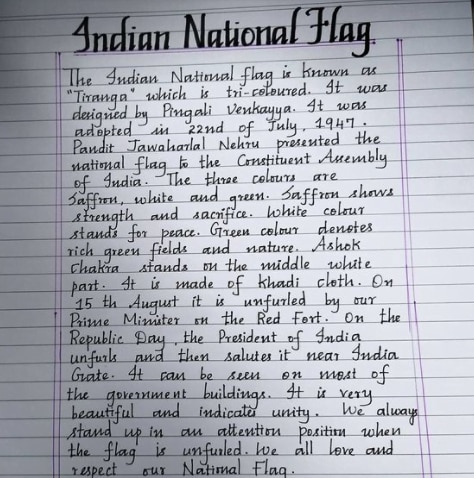

I will be feeding different text images to the OCR engine and comparing the text output.

|

Took 1.07 seconds |

|

Took 2.55 seconds |

|

Took 16.84 seconds |

|

Took 26.2 seconds |

|

Took 3.98 seconds |

It can be seen that the device is able to recognize printed text efficiently and in reasonable time. It is not that good with recognizing handwritten texts and texts containing some sort of images and natural scenes. But this isn't a limitation of the Zero2W instead it is a limitation of the OCR software - tesseract. However, it can be trained to recognize handwritten texts.

The time taken for OCR depends upon the amount of text (number of words) present in the image.

OCR using EAST Text Detector:

Code:

# import the necessary packages

from imutils.object_detection import non_max_suppression

import numpy as np

import argparse

import time

import cv2

# construct the argument parser and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", type=str,

help="path to input image")

ap.add_argument("-east", "--east", type=str,

help="path to input EAST text detector")

ap.add_argument("-c", "--min-confidence", type=float, default=0.5,

help="minimum probability required to inspect a region")

ap.add_argument("-w", "--width", type=int, default=320,

help="resized image width (should be multiple of 32)")

ap.add_argument("-e", "--height", type=int, default=320,

help="resized image height (should be multiple of 32)")

args = vars(ap.parse_args())

# load the input image and grab the image dimensions

image = cv2.imread(args["image"])

orig = image.copy()

(H, W) = image.shape[:2]

# set the new width and height and then determine the ratio in change

# for both the width and height

(newW, newH) = (args["width"], args["height"])

rW = W / float(newW)

rH = H / float(newH)

# resize the image and grab the new image dimensions

image = cv2.resize(image, (newW, newH))

(H, W) = image.shape[:2]

# define the two output layer names for the EAST detector model that

# we are interested -- the first is the output probabilities and the

# second can be used to derive the bounding box coordinates of text

layerNames = [

"feature_fusion/Conv_7/Sigmoid",

"feature_fusion/concat_3"]

# load the pre-trained EAST text detector

print("[INFO] loading EAST text detector...")

net = cv2.dnn.readNet(args["east"])

# construct a blob from the image and then perform a forward pass of

# the model to obtain the two output layer sets

blob = cv2.dnn.blobFromImage(image, 1.0, (W, H),

(123.68, 116.78, 103.94), swapRB=True, crop=False)

start = time.time()

net.setInput(blob)

(scores, geometry) = net.forward(layerNames)

end = time.time()

# show timing information on text prediction

print("[INFO] text detection took {:.6f} seconds".format(end - start))

# grab the number of rows and columns from the scores volume, then

# initialize our set of bounding box rectangles and corresponding

# confidence scores

(numRows, numCols) = scores.shape[2:4]

rects = []

confidences = []

# loop over the number of rows

for y in range(0, numRows):

# extract the scores (probabilities), followed by the geometrical

# data used to derive potential bounding box coordinates that

# surround text

scoresData = scores[0, 0, y]

xData0 = geometry[0, 0, y]

xData1 = geometry[0, 1, y]

xData2 = geometry[0, 2, y]

xData3 = geometry[0, 3, y]

anglesData = geometry[0, 4, y]

# loop over the number of columns

for x in range(0, numCols):

# if our score does not have sufficient probability, ignore it

if scoresData[x] < args["min_confidence"]:

continue

# compute the offset factor as our resulting feature maps will

# be 4x smaller than the input image

(offsetX, offsetY) = (x * 4.0, y * 4.0)

# extract the rotation angle for the prediction and then

# compute the sin and cosine

angle = anglesData[x]

cos = np.cos(angle)

sin = np.sin(angle)

# use the geometry volume to derive the width and height of

# the bounding box

h = xData0[x] + xData2[x]

w = xData1[x] + xData3[x]

# compute both the starting and ending (x, y)-coordinates for

# the text prediction bounding box

endX = int(offsetX + (cos * xData1[x]) + (sin * xData2[x]))

endY = int(offsetY - (sin * xData1[x]) + (cos * xData2[x]))

startX = int(endX - w)

startY = int(endY - h)

# add the bounding box coordinates and probability score to

# our respective lists

rects.append((startX, startY, endX, endY))

confidences.append(scoresData[x])

# apply non-maxima suppression to suppress weak, overlapping bounding

# boxes

boxes = non_max_suppression(np.array(rects), probs=confidences)

# loop over the bounding boxes

for (startX, startY, endX, endY) in boxes:

# scale the bounding box coordinates based on the respective

# ratios

startX = int(startX * rW)

startY = int(startY * rH)

endX = int(endX * rW)

endY = int(endY * rH)

# draw the bounding box on the image

cv2.rectangle(orig, (startX, startY), (endX, endY), (0, 255, 0), 2)

# show the output image

cv2.imwrite("eastoutput.jpg", orig)

To start OCR, run the following command in the terminal:

python eastdetector.py --image path/to/image.jpg --east path/to/frozen_east_text_detection.pb

Testing:

|

Time Taken: 3.59 sec Time Taken: 3.59 sec |

|

Time Taken: 3.5 sec |

|

Time Taken: 3.47 sec |

|

Time Taken: 3.57 sec |

|

Time Taken: 3.78 sec |

|

Time Taken: 3.50 sec |

It can be seen that the EAST Detector was able to detect text in images 1, 2, 4 and 6 with good accuracy. But in 3rd and 5th, image it couldn't perform good. It seems that the EAST Detector is very good at detecting texts from natural scenes where there is sufficient distance between words. It can't detect those texts which have very less spacing between its words. Moreover, it can be seen that the time taken for OCR is almost same in each image.

Conclusion:

The Zero2W board can be used for OCR for detecting printed texts on clear backgrounds using the Tesseract OCR. Thus, it can be used as document reader and image to text converter in practical life. Tesseract OCR is poor at recognizing text from natural scenes.

To recognize text from natural scenes, we can use the EAST Text Detector. It is more efficient and accurate as compared to the Tesseract OCR.

Zero2W takes around 3 sec to recognize texts from natural scenes using EAST Detector. Its reasonable.

Whereas if we use the Tesseract OCR, the time taken to run OCR depends upon the number of words in the image. Zero2W can be used with it, if the time taken is within some reasonable limit (in my case it is around 16 sec).