RoadTest: AMD Xilinx Kria KV260 Vision AI Starter Kit

Author: skruglewicz

Creation date:

Evaluation Type: Development Boards & Tools

Did you receive all parts the manufacturer stated would be included in the package?: True

What other parts do you consider comparable to this product?: the “Lattice MACHXO3L Starter Kit” and “OrangeCrab Dev BD”

What were the biggest problems encountered?: No major problems

Detailed Review:

The AMD XILINX Kria KV260 Vision AI Starter Kit is a platform for developing advanced vision applications, equipped with a non-production version of the production K26 SOM. It comes with an evaluation carrier card optimized for vision applications, featuring multi-camera support and Raspberry Pi connectors. Developers of all types can get applications up and running in under an hour, with no FPGA experience needed. The kit is designed to simplify hardware and software development requirements, making it the fastest and easiest platform for application development with the goal of volume deployment on Kria K26 SOMs. The kit includes the development platform, accessory pack, and a 13MP autofocus RGB camera module.

This is my review of the first starter kit in the AMD XILINX Kria portfolio, the KV260 Vision AI Starter Kit. This kit is advertised as “an out-of-the-box platform for advanced vision applications”. According to the marketing material, “it allows users to target the starter kit as soon as it’s out of the box, with NO installation or FPGA knowledge required”, a capability that I will try to prove in this review. The kit extends a line of devices that started with FPGAs and SoCs in 1984, followed by Accelerator Cards in 2018, Versal ACAP in 2019, and now System-on-Modules in 2021.

A little background on myself: I am a retired Senior Software Engineer with a Bachelor of Science degree in Computer Science from Boston University. I graduated from BU in 1980 and worked as a Software Engineer from then until I retired in 2018. I have limited knowledge of FPGA design, but I have participated in RoadTests and Design Challenges sponsored by various vendors on element14, where I have acquired a tremendous amount of knowledge in embedded systems. I reviewed an FPGA starter kit by Lattice for the element14 Summer of FPGAs event in the summer and fall of 2021 and wrote a blog about it. Now I have been given the opportunity to try an AMD XILINX product through a RoadTest.

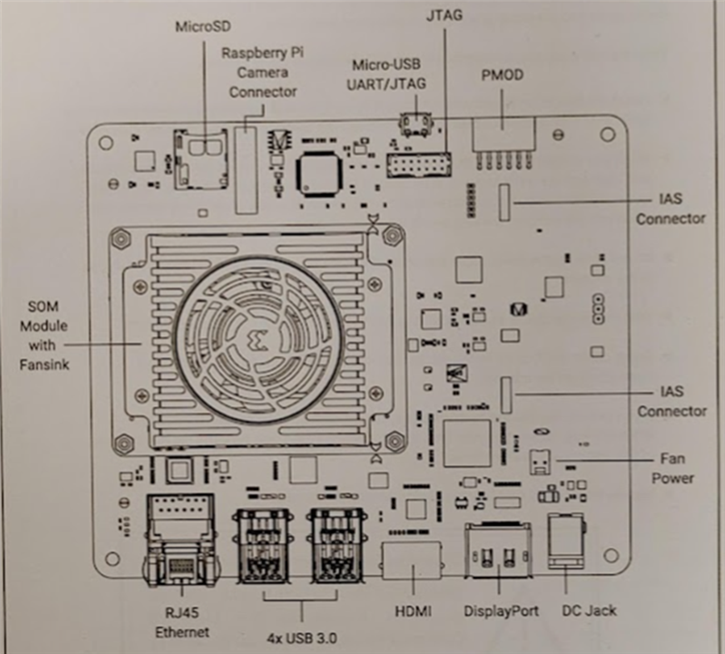

At the heart of the kit is the AMD Kria K26 SOM (System-on-Module). The SOM integrates a custom-built AMD Xilinx Zynq UltraScale+ MPSoC and provides an embedded processing system with tightly integrated programmable logic and a rich set of configurable I/O capabilities. Also on the SOM are DDR memory, nonvolatile storage devices, and a security module. An aluminum heat spreader and a 12V fan, mounted on the heat sink, are attached on top of the SOM.

The SOM is attached to a carrier card for development and deployment purposes, and the carrier card communicates with the SOM. This carrier card is built by AMD, but users can design their own carrier card for a specific application by following the guidelines for communicating with the SOM. The carrier card PCB contains the connectors needed to work with the SOM interfaces.

My package arrived on February 6th, 2023 at 1:30 PM, and now the fun begins. I am very excited to finally get to review an AMD product. I have heard so many good things about their product line in this community. I have watched several webinars on the AMD line of products and most recently, a two-day webinar on the Kria KV260 Vision AI Starter Kit.

I can’t wait to crack it open but first I need to prepare an unboxing video.

3 Goals of the RoadTest

There are 3 goals given on the landing page for this RoadTest that I have tried to cover in this review.

The next 3 sections describe how I met these goals.

I actually went overtime on this, so you don’t have to watch the whole thing if you don’t want to. It’s my first attempt at a video production for a RoadTest. I used the free version of KAPWING (kapwing.com) to edit my videos, and I have only scratched the surface of what this excellent online media editor can do.

Here you go:

More professional and to the point. It describes how quick and easy it is to launch the Smart Camera accelerated application, with NO FPGA experience or tools required.

After unboxing the kit as described in the video above, I went to the small pamphlet included in the kit.

Using the highlighted link, https://www.xilinx.com/KV260-Start, or scanning the UPC code, brought me to the page https://www.xilinx.com/products/som/kria/kv260-vision-starter-kit/kv260-getting-started/getting-started.html

Now, I will describe my experience following the instructions on this page:

The first thing I did was upgrade my laptop to Ubuntu 22.04, as I anticipated using it to test the kit. However, it turns out that I did not need to upgrade, because the Kria KV260 runs its own copy of Ubuntu 22.04 from the microSD card inserted in the kit. To access the OS, you can either use a serial terminal connection from your PC or attach a mouse, keyboard, and a second HDMI monitor to take advantage of the GNOME Desktop and use the kit as a standalone Linux workstation, which is pretty cool!

The introduction posted an important update that was required, followed by boot firmware updates. I noted these instructions but did not do the upgrade, which may have been the reason why I had some trouble with my Ubuntu test.

UPDATE 01/21/2024 -- In answer to the above questions, I did some more research and created a blog in the FPGA group to clear this up.

In that post I go through the steps for updating the boot firmware of a Kria SOM and describe how I updated the boot firmware on my KV260 starter kit. In case you are unaware, Ubuntu 22.04 is NOT compatible with the boot firmware that comes installed on the KV260 SOM.

This demo offers the following:

Now onto actually performing the demo described in the Out-of-the-Box with the Kria KV260: Up and Running in Under an Hour Video presented above. From the startup page I went to the Get Started with Ubuntu link and followed all the steps outlined below.

Success

Success, but by now it has been over an hour and I have not run the examples yet. So the claims in the marketing material are untrue for a novice user like me. However, I was able to use the kit as a standalone GNOME Desktop workstation by connecting a display, keyboard, and mouse. To take advantage of the GNOME Desktop, use the "Instructions for GNOME Desktop" section below, which requires a keyboard, mouse, and monitor to be connected.

Kria SOM has official Ubuntu support with a certified Ubuntu image. Currently, there is one application (NLP-smartvision) ported for out-of-box support in the Ubuntu image. Kria SOM also has PYNQ support, bringing Python productivity to the embedded platform. The example requires extra hardware (a microphone, which did not come with the kit), so I could not complete it.

This has apparently changed since I started my review, as I was able to find a link to a camera application. The Quick Start page, Setting up the Board and Application deployment, outlines the process of setting up the board and deploying the application. The introduction section states, "This document shows how to set up the board and run the smartvision application. This guide and its prebuilt smartvision firmware are targeted for Ubuntu 22.04 and XILINX 2022.1 toolchain. The previous version of this application (targeted for Petalinux on XILINX 2021.1 toolchain) is still available online." I intended to try this new smartcam on Ubuntu, but unfortunately ran out of time.

By the time you get to this section, you have successfully completed the Ubuntu flow. I read through this section, reviewed the links, and noted pages of interest that I will come back to. I have listed them here. After that, I decided to try PetaLinux instead of Ubuntu.

For more information on developing with Ubuntu, refer to the Ubuntu wiki pages: Getting Started with Ubuntu and Tips and Tricks

To continue developing with Kria, the following resources are recommended:

There are several Linux OS options available for Getting Started with the KV260.

When evaluating products for an element14 RoadTest, I typically experiment with the example programs available for the kit to gain knowledge of the toolchains. The examples help an intermediate embedded developer like me evaluate how the product is used. A well-written example is worth its weight in gold. This section contains the example from the website that I chose to experiment with.

I was not able to get the examples working under Ubuntu so I tried to use PetaLinux instead.

I read through the following:

• K26 Wiki: for directly using PetaLinux BSPs for application development and deployment, rather than Ubuntu. The latest PetaLinux BSPs are available here.

• Github.io page: further technical documentation is available here.

The Starter Kit has a primary and secondary boot device, isolating the boot firmware from the run-time OS and application. This allows you to focus on developing and updating your application code within the application image on the secondary boot device, without having to touch the boot firmware. The primary boot device is a QSPI memory located on the SOM, which is pre-programmed (pre-loaded QSPI image) at the factory. The secondary boot device is a microSD card interface on the carrier card.

For setting up the microSD card, you’ll need to download the latest SD card image and then write it using an Image Flashing tool.

The following are the key connections for the AMD Kria KV260 Vision AI Starter Kit:

Instructions for Windows

Baud rate = 115200

Data bits = 8

Stop bits = 1

Flow control = None

Parity = None
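The settings above are the common "115200 8N1" configuration. As a minimal sketch, they could be captured in Python as shown here. The keyword names follow pyserial's `serial.Serial` constructor, which is my own assumption since the guide only asks for a terminal program, and the class name `SerialSettings` is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SerialSettings:
    """UART settings listed in the getting-started instructions."""
    baud_rate: int = 115200
    data_bits: int = 8
    stop_bits: int = 1
    parity: str = "N"            # "N" means no parity
    flow_control: bool = False   # no hardware or software flow control

    def shorthand(self) -> str:
        # Conventional notation: <baud> <data bits><parity><stop bits>
        return f"{self.baud_rate} {self.data_bits}{self.parity}{self.stop_bits}"

    def pyserial_kwargs(self) -> dict:
        # Keyword names as used by pyserial's serial.Serial (an assumed tool,
        # not something the getting-started page prescribes).
        return {
            "baudrate": self.baud_rate,
            "bytesize": self.data_bits,
            "parity": self.parity,
            "stopbits": self.stop_bits,
            "xonxoff": self.flow_control,
            "rtscts": self.flow_control,
        }


settings = SerialSettings()
print(settings.shorthand())
```

With pyserial installed, something like `serial.Serial("COM4", timeout=1, **settings.pyserial_kwargs())` should open the port; `COM4` is a placeholder for whatever port Windows assigns to the kit.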

QUESTION on PetaLinux startup

The following text comes up when booting the system, just before the login prompt. What does this do? Something about JupyterLab?

    [I 2018-03-09 04:35:06.108 ServerApp] jupyterlab | extension was successfully linked.
    [I 2018-03-09 04:35:06.320 LabApp] JupyterLab extension loaded from /usr/lib/python3.8/site-packages/jupyterlab
    [I 2018-03-09 04:35:06.321 LabApp] JupyterLab application directory is /usr/share/jupyter/lab
    [I 2018-03-09 04:35:06.343 ServerApp] jupyterlab | extension was successfully loaded.
    [I 2018-03-09 04:35:06.344 ServerApp] Serving notebooks from local directory: /home/petalinux/notebooks
    [I 2018-03-09 04:35:06.345 ServerApp] Jupyter Server 1.2.1 is running at:
    [I 2018-03-09 04:35:06.345 ServerApp] http://192.168.1.7:8888/lab?token=004023867f176c7682afc4f125b411c9ac07cd9646bbc911
    [I 2018-03-09 04:35:06.345 ServerApp] or http://127.0.0.1:8888/lab?token=004023867f176c7682afc4f125b411c9ac07cd9646bbc911
    [I 2018-03-09 04:35:06.345 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
    [C 2018-03-09 04:35:06.368 ServerApp] To access the server, open this file in a browser: file:///home/petalinux/.local/share/jupyter/runtime/jpserver-992-open.html Or copy and paste one of these URLs: http://192.168.1.7:8888/lab?token=004023867f176c7682afc4f125b411c9ac07cd9646bbc911
    [W 2023-02-27 16:02:07.002 LabApp] Could not determine jupyterlab build status without nodejs

It comes up at this point because not all of the software has loaded yet. Try the first link after the camera app is running.

The following link is run from the browser on the PC

http://192.168.1.7:8888/lab?token=eadda69f8091d4d7d74589f3403f09b0f86858fc160be051

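This second link does not work from the PC, because 127.0.0.1 is the loopback address and always points at the machine the browser is running on:

http://127.0.0.1:8888/lab?token=eadda69f8091d4d7d74589f3403f09b0f86858fc160be051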
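To make the difference between the two links concrete, here is a small sketch that pulls the JupyterLab URLs out of the boot log and keeps only the one a browser on the PC can reach. The helper names `jupyter_urls` and `reachable_from_pc` are my own, and the code assumes the log prints numeric IP addresses, as it did on my kit.

```python
import ipaddress
import re
from urllib.parse import urlsplit

# Two lines copied from the boot log shown earlier.
BOOT_LOG = """\
[I 2018-03-09 04:35:06.345 ServerApp] http://192.168.1.7:8888/lab?token=004023867f176c7682afc4f125b411c9ac07cd9646bbc911
[I 2018-03-09 04:35:06.345 ServerApp]  or http://127.0.0.1:8888/lab?token=004023867f176c7682afc4f125b411c9ac07cd9646bbc911
"""


def jupyter_urls(log_text: str) -> list:
    """Return every JupyterLab URL in the log that carries a login token."""
    return re.findall(r"http://\S+\?token=[0-9a-f]+", log_text)


def reachable_from_pc(url: str) -> bool:
    """A loopback address only works in a browser running on the kit itself."""
    host = urlsplit(url).hostname
    # Assumes a numeric IP; a host name would raise ValueError here.
    return not ipaddress.ip_address(host).is_loopback


remote_urls = [u for u in jupyter_urls(BOOT_LOG) if reachable_from_pc(u)]
print(remote_urls[0])
```

The token changes on every boot, so the URL has to be copied from the current boot log each time.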

This completes Step 3 and I am seeing the prompt.

The Kria KV260 Vision AI Starter Kit introduces the concept of accelerated applications. These are pre-built applications for AI and SW developers, giving them a head start in developing their target end applications. You can quickly run one of the accelerated apps and even customize it with different AI models or sensors. Follow the steps on this page to try the “Smart Camera accelerated app”.

The KV260 Vision AI Starter Kit has the ability to load and run applications that configure the hardware to implement a variety of functions. The utility “xmutil” is used to load the various applications. Only one accelerated application can be loaded at a given time. We will first load an application that programs the SOM to behave as a smart camera, with the ability to process a 1080p video stream from either a MIPI or USB camera, and display it over HDMI or DisplayPort, or stream it over wired Ethernet. To display video streamed over Ethernet you will need a suitable viewer, for example ffplay or vlc.

You have already made the connections needed to launch this application in Step 3. Now you will:

AMD provides package feeds in run-time package management (rpm) format so that users can dynamically load AMD accelerated applications on top of a running Linux starter image, using the dnf package manager command “dnf install”. AMD also provides the xmutil utility (xmutil getpkgs) to search the package feed and query the available accelerated application package groups for the Vision AI Starter Kit. Run the following commands in your PetaLinux command window. Commands are given below:
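As a rough sketch of that step, the two commands can be written down as data before typing them into the serial console. The script only builds and prints the command lines; it does not run anything. The package group name `packagegroup-kv260-smartcam` and the use of `sudo` are my assumptions, so check them against what `xmutil getpkgs` reports on your own kit.

```python
import shlex

# Assumed package group name for the Smart Camera app; confirm it against
# the output of "xmutil getpkgs" on your own kit.
SMARTCAM_PACKAGE = "packagegroup-kv260-smartcam"


def install_commands(package: str) -> list:
    """Build (but do not run) the commands that query the feed and install a package."""
    return [
        ["sudo", "xmutil", "getpkgs"],         # list the available app package groups
        ["sudo", "dnf", "install", package],   # install the chosen package group
    ]


for cmd in install_commands(SMARTCAM_PACKAGE):
    print(shlex.join(cmd))
```

Keeping each command as a list of arguments means the same data could later be handed to `subprocess.run` on the kit itself without any shell quoting problems.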

Prior to executing the newly installed application available at /opt/xilinx/bin, the FPGA firmware (PL bitstream + device tree overlay + PL drivers) needs to be loaded using xmutil utility commands. Follow the steps to load Smart Camera accelerated application firmware on the Vision AI Starter Kit.
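Because only one accelerated application can be loaded at a time, the order of the xmutil calls matters. The sketch below plans that order without running anything. The `loadapp` and `unloadapp` subcommands are what I recall from the xmutil help, while the app names `kv260-smartcam` and `kv260-dp` and the function `load_sequence` are assumptions for illustration; `xmutil listapps` should show the real names on your kit.

```python
from typing import Optional


def load_sequence(target_app: str, loaded_app: Optional[str] = None) -> list:
    """Plan the xmutil calls needed to make target_app the active firmware.

    Only one accelerated application can be loaded at a time, so anything
    already loaded has to be unloaded first.
    """
    if loaded_app == target_app:
        return []  # already active, nothing to do
    steps = []
    if loaded_app is not None:
        steps.append(["sudo", "xmutil", "unloadapp"])
    steps.append(["sudo", "xmutil", "loadapp", target_app])
    return steps


# Both app names are assumed; "xmutil listapps" shows what is actually installed.
for step in load_sequence("kv260-smartcam", loaded_app="kv260-dp"):
    print(" ".join(step))
```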

Now comes the big moment… Place the AR1335 camera module so that it points at the user's face and run the Smart Camera accelerated app.

If you would like to try the GitHub doc for PetaLinux, use this link for the camera app. It points to the 2021.1 release: https://xilinx.github.io/kria-appsdocs/kv260/2021.1/build/html/docs/smartcamera/smartcamera_landing.html

Quick Start Setting up the Board and Application deployment

You can register an account and you will be able to download software

This site has forums, a knowledge base, blogs, and an advanced search.

There are example applications on the Xilinx GitHub docs page. There are 3 releases of the Boot Firmware: 2022.1, 2021.1, and 2020.2.

As you can see from the chart above, PetaLinux is supported on all 3, but Ubuntu is only supported on 2, as seen below.

What I found, at the time of this writing, is that the navigation menu on the right-hand side is kind of tricky. The pages that load have similar content, but the supported firmware version is not apparent from the page content. The only way to actually tell which firmware version an example page belongs to is to look at the address bar, because the version number is in the URL. Here the 2022 page is on the left and the 2021 page is on the right.

If you look real hard under the navigation bar between the logo and the search bar you will see the version.

There are other release context links at the bottom of the navigation bar that you can press to toggle between releases, but you cannot get back to the 2022 version context!

The first time you navigate from the SOM Landing page navigation button, you are placed in version 2022 context as shown below….

This makes it very confusing when trying to follow an example for Ubuntu or PetaLinux. I just wanted to point this out, because I was lost running the examples between Ubuntu and PetaLinux, I needed to go back to the landing page before I realized what was going on with the context links! A big waste of time, I’d say.
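Since the release is only reliably visible in the URL, a tiny helper can do the checking. This is a minimal sketch that assumes the release always appears as its own path segment in the form `YYYY.N`, which is what I saw in the one docs URL quoted in this review; the function name `docs_release` is hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlsplit


def docs_release(url: str) -> Optional[str]:
    """Return the release segment (for example "2021.1") from a docs URL path, if any."""
    for segment in urlsplit(url).path.split("/"):
        if re.fullmatch(r"20\d{2}\.\d", segment):
            return segment
    return None


url = ("https://xilinx.github.io/kria-appsdocs/kv260/2021.1/build/html/"
       "docs/smartcamera/smartcamera_landing.html")
print(docs_release(url))
```

A URL without a release segment returns `None`, which is a hint that you are on a landing page rather than inside a specific release context.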

I was able to meet all 3 goals that I set out to accomplish with this review.

My out-of-box experience of the product was very rewarding. I found it very easy to get the kit up and running and perform some interesting Vision AI examples. IoT is wicked hard and the concept of FPGA can be really daunting to a beginner. Even though I have some previous knowledge of the concept, using the SOM made it more transparent for me than other FPGA kits I have evaluated.

I rated the product based on my beginner status of using FPGA tech. I’m an independent Maker and evaluating Embedded development kits is a hobby of mine. I do not have huge funds to invest in Kits and I’m fortunate to have been given the opportunity to evaluate embedded MCU evaluation kits from many companies.

I never give a perfect score but this kit ranked very high compared to other kits that I have evaluated.

The Kit was a pleasure to work with. Quite frankly, it performed better than my initial expectations.

The illustrations in the documentation made it real easy to design with. Even though I did not do any real deep design work for this review, I was able to get the out-of-the-box experience working with only a couple of hitches.

From what I saw at the operational level, the application examples were excellent. I scored this based on the navigation context links in the doc pages, which I found to be confusing.

The documentation made it easy to use. At points the documentation was a bit hard to navigate, due to links that were leading to the wrong information or sometimes leading to the wrong supported versions of the OS.

There is an abundance of support materials, and sometimes duplicate information is presented in several different places.

I think that the price for the kit is warranted, but it probably puts the kit out of reach for me and other independent makers. The price is worth the money to someone using the SOM and designing carrier cards for it.

I have only scratched the surface of the capabilities of the Kit. I will keep coming back to this review to refresh my knowledge and continue to experiment with this Vision AI starter kit. Some of the things that I did not have time for, but will try to come back to, are:

Roadtest supplied links:

• Product Brief

• Datasheet

• User Guide

• Getting Started Guide

• Github Applications

• Kria SOM Github

• Avnet Page Brief

• Getting Started Video

Vision-based Applications with Kria Online Workshop

This was an extensive two-day workshop on the Kria KV260 that I attended before receiving the kit. It is very detailed and takes a deep dive into using the kit and the examples.

Session 1: https://attendee.gotowebinar.com/recording/5123003334544520619

Session 2: https://attendee.gotowebinar.com/recording/8740415281647336878
