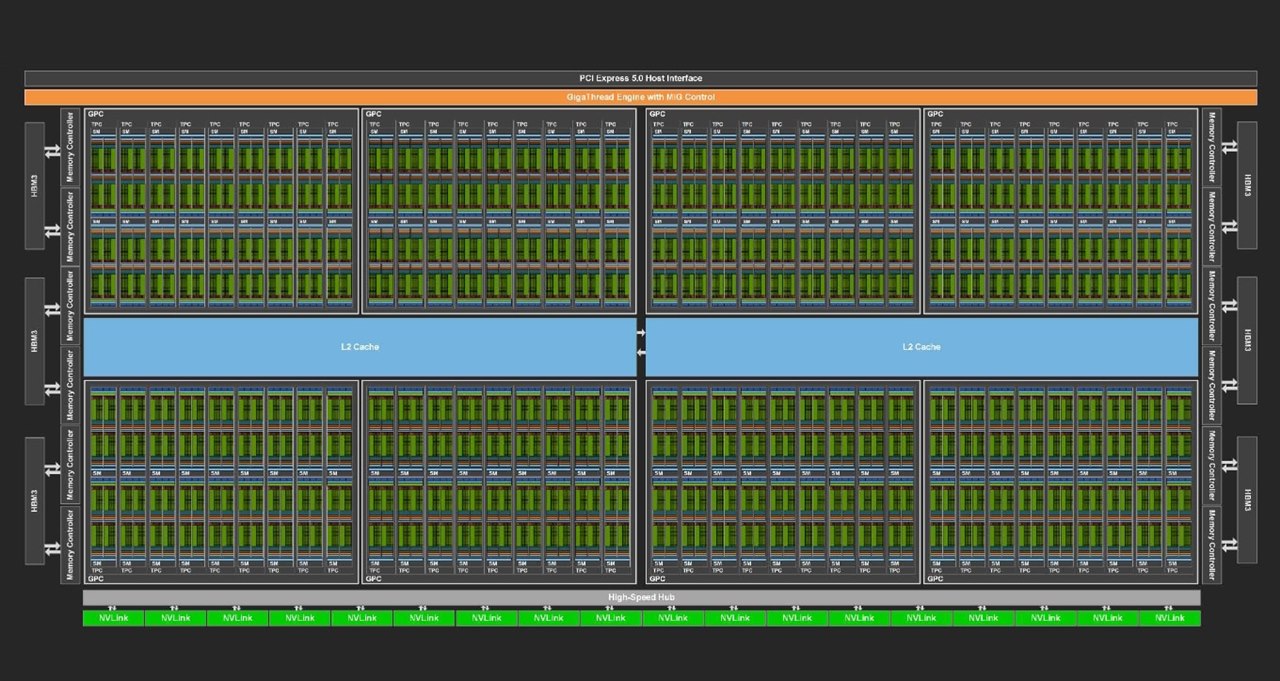

The specialized language model boasts 43 billion parameters and is aimed at bolstering chip design productivity. (Image credit: Nvidia)

Nvidia recently unveiled ChipNeMo, a specialized language model designed to boost chip design productivity. The model has 43 billion parameters and aims to streamline aspects of chip design by answering questions, condensing bug reports and writing scripts for EDA (Electronic Design Automation) tools. “The goal here is to make our designers more productive,” stated Bill Dally, Nvidia’s chief scientist, in a recent interview. “If we even got a couple percent improvement in productivity, this would be worth it. And our goals are actually to do quite a bit better than that.”

ChipNeMo was trained on a dataset drawn from Nvidia’s internal code and text libraries, which include architecture and design models. That approach gives ChipNeMo a refined understanding of Nvidia’s chip designs and architectures. The platform can answer general questions on chip design processes, summarize bug reports and write scripts for interfacing with CAD tools. Those tools can run simulations and benchmarks to verify performance and stability.

ChipNeMo offers an extensive knowledge base and can run on a single Nvidia A100 GPU, making it an efficient platform for developers designing the latest chips. That is especially useful for newcomers, who can use it to find correct information quickly. That being said, generative AI has been in the news lately for producing false or misleading answers, even on widely known topics, so these models can’t be assumed accurate in every situation. To avoid those issues, Nvidia is employing a RAG (Retrieval Augmented Generation) technique to improve accuracy in the design process. RAG works by referencing databases of source documents to ground the platform’s outputs, the idea being that if the information it produces is inaccurate, the fault should lie with the source documents themselves.
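For readers curious how that grounding step works in principle, here is a minimal sketch of the retrieval half of RAG. It is not Nvidia’s implementation, whose details aren’t described here. The sample documents, the word-overlap scoring and the function names (`retrieve`, `build_prompt`) are all assumptions made for illustration; production systems typically use learned embeddings and a vector index rather than simple word matching.

```python
import re
from collections import Counter

# Hypothetical stand-in corpus; ChipNeMo's real source documents are internal.
DOCUMENTS = [
    "Timing closure: rerun static timing analysis after every placement change.",
    "Bug triage: summarize the failing test, the affected block, and the owner.",
    "Simulation scripts live next to the testbench and take a seed argument.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, doc):
    """Count how many query words also appear in the document."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(query, docs, k=1):
    """Return the k documents that share the most words with the query."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]

def build_prompt(query, docs, k=1):
    """Prepend the retrieved documents so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

if __name__ == "__main__":
    print(build_prompt("How do I rerun static timing analysis?", DOCUMENTS))
```

The prompt that reaches the language model carries the retrieved text along with the question, which is why the quality of the answer depends so heavily on the quality of the source documents.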

As a result, ChipNeMo is strictly tailored to Nvidia’s GPUs, architectures and internal processes. It’s also not slated for commercial release, but it’s an interesting approach to leveraging AI for designing next-gen chips.

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell