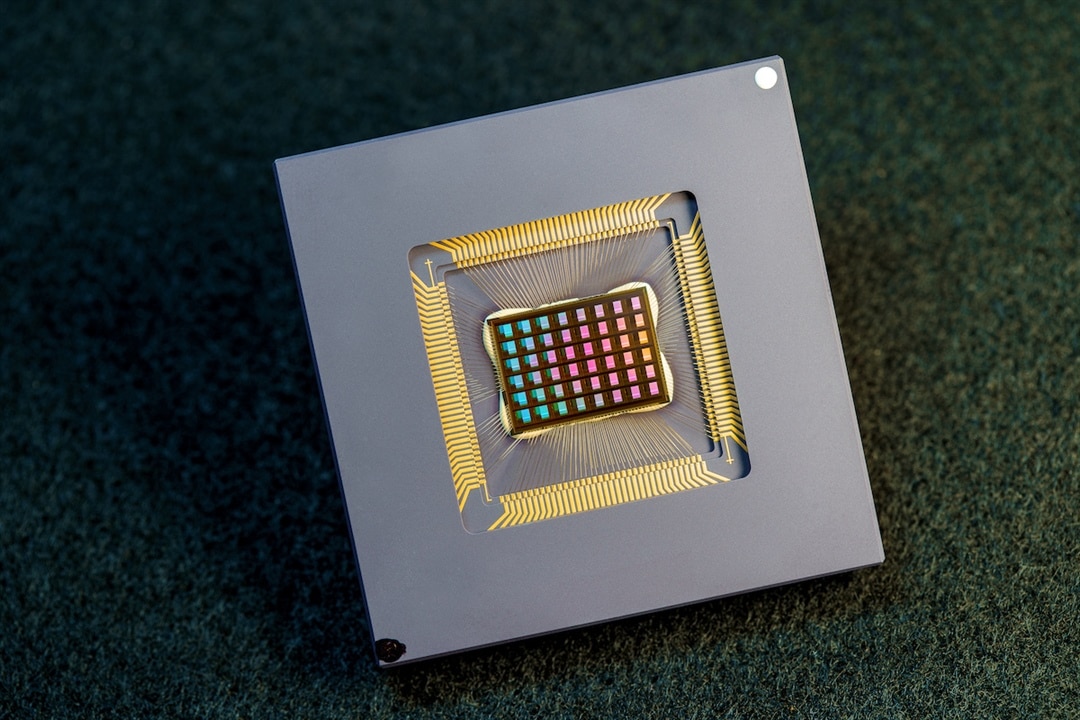

The NeuRRAM chip is more energy-efficient than state-of-the-art chips. It’s also more versatile and as accurate as digital chips. (Image Credit: UC San Diego)

Researchers recently released a roadmap for scaling up neuromorphic computing so it can compete with modern computing methods. The roadmap offers a practical look at how to approach the human brain’s cognitive capacity with a comparable form factor and power consumption. Neuromorphic computing has a host of applications, such as AI, AR/VR, wearables, scientific computing, smart cities, smart farming, and more. Neuromorphic chips have the potential to exceed the performance, energy efficiency, and space efficiency of traditional computers, which would make them especially useful for AI, health care, and robotics.

Neuromorphic computing is a technology that replicates the structure and functionality of the human brain and nervous system. Both software and hardware are designed specifically to simulate neural and synaptic structures, an approach that can be more efficient than traditional computing.

These systems rely on artificial neurons and synapses for processing data. With millions of artificial neurons processing data simultaneously, they can handle many tasks in parallel. Each artificial neuron integrates memory and computation (an approach called in-memory computing), so the system doesn’t need separate storage and processing units. This parallelism makes neuromorphic systems well suited to tasks such as anomaly detection and pattern recognition.
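To make the idea of a neuron that holds its own state more concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. The article does not say which neuron model the roadmap or the NeuRRAM chip uses, so the model choice, the `LIFNeuron` class, and the `threshold` and `leak` values are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Hypothetical leaky integrate-and-fire neuron (illustrative only)."""

    threshold: float = 1.0  # potential at which the neuron fires (assumed value)
    leak: float = 0.9       # fraction of potential kept each step (assumed value)
    potential: float = 0.0  # state lives inside the neuron, not in separate memory

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Decay the stored potential, then add the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[float]) -> list[bool]:
    """Feed a sequence of inputs to one neuron and record its spikes."""
    return [neuron.step(current) for current in inputs]


spikes = run(LIFNeuron(), [0.4, 0.4, 0.4, 0.0, 0.9])
print(spikes)
```

The neuron only produces output when its accumulated input crosses the threshold, and the value it computes on is the same value it stores. That is a loose software analogy for in-memory computing, not a description of how any particular chip is built.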

“Neuromorphic computing is particularly relevant today, when we are witnessing the untenable scaling of power- and resource-hungry AI systems,” said Gert Cauwenberghs, a Distinguished Professor in the UC San Diego Shu Chien-Gene Lay Department of Bioengineering and one of the paper’s co-authors.

The researchers proposed optimizing several features, including sparsity, a defining feature of the human brain. The brain forms a large number of neural connections before selectively pruning most of them. As a result, spatial efficiency is optimized while “retaining information at high fidelity.” Emulating this feature could lead to more energy-efficient and compact neuromorphic systems.
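In software, a rough analogue of this grow-then-prune behavior is magnitude pruning, where the weakest connections in a weight matrix are set to zero. The sketch below is only an illustration of that general technique, not the method the roadmap proposes; the `prune_weights` and `sparsity` helpers, the sample weights, and the `keep_fraction` value are hypothetical.

```python
def prune_weights(weights: list[list[float]], keep_fraction: float) -> list[list[float]]:
    """Zero out all but the largest-magnitude weights (illustrative helper).

    Ties at the cutoff are all kept, so slightly more than
    keep_fraction of the weights can survive.
    """
    if not 0.0 <= keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be between 0 and 1")
    magnitudes = sorted((abs(w) for row in weights for w in row), reverse=True)
    keep = int(len(magnitudes) * keep_fraction)
    if keep == 0:
        return [[0.0 for _ in row] for row in weights]
    cutoff = magnitudes[keep - 1]  # smallest magnitude that survives
    return [[w if abs(w) >= cutoff else 0.0 for w in row] for row in weights]


def sparsity(weights: list[list[float]]) -> float:
    """Fraction of connections that are exactly zero."""
    flat = [w for row in weights for w in row]
    return sum(1 for w in flat if w == 0.0) / len(flat)


# Hypothetical synaptic weights between two groups of neurons.
dense = [
    [0.9, -0.05, 0.1, 0.01],
    [0.02, -0.7, 0.03, -0.2],
]
pruned = prune_weights(dense, keep_fraction=0.25)
print(pruned)
print(sparsity(pruned))
```

Here the two strongest connections survive and the rest are removed, so fewer values need to be stored and processed. How well a pruned network retains information depends on the network and the task, which this toy example does not measure.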

“The expandable scalability and superior efficiency derive from massive parallelism and hierarchical structure in neural representation, combining dense local synaptic connectivity within neurosynaptic cores modeled after the brain's gray matter with sparse global connectivity in neural communication across cores modeling the brain's white matter, facilitated through high-bandwidth reconfigurable interconnects on-chip and hierarchically structured interconnects across chips,” said Cauwenberghs.

“This publication shows tremendous potential toward the use of neuromorphic computing at scale for real-life applications. At the San Diego Supercomputer Center, we bring new computing architectures to the national user community, and this collaborative work paves the path for bringing a neuromorphic resource for the national user community,” said Amitava Majumdar, director of the division of Data-Enabled Scientific Computing at SDSC on the UC San Diego campus, and one of the paper’s co-authors.

Additionally, the researchers propose stronger collaborations within academia and between academia and industry. They also emphasize making the field more accessible by developing a wider array of user-friendly programming languages. The researchers think this can improve collaboration across disciplines and industries.

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell