Hi everyone,

I'm seeing strange behavior during an intercomparison of several calibrated RTD temperature probes. Here's the context:

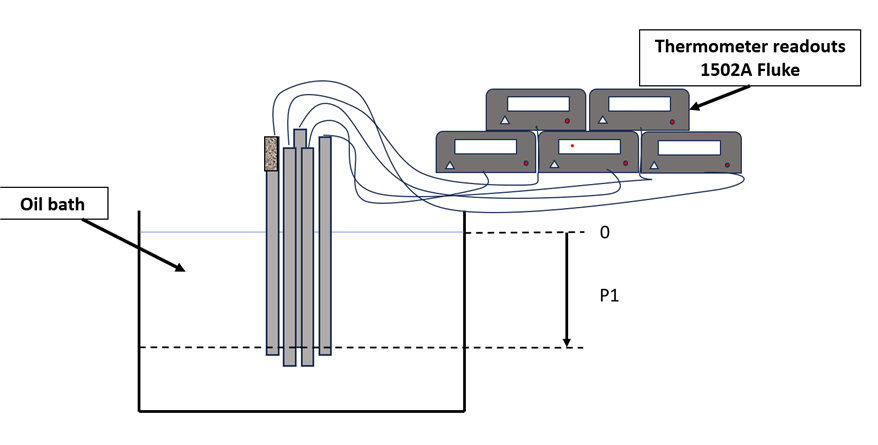

- I'm using 5 RTD sensors, one of which is a Hart Scientific 5615.

- Each sensor is connected to its own readout instrument.

- Measurements were performed in both an oil bath and a dry air oven to rule out medium-related effects.

- All sensors were allowed to stabilize for over 20 minutes at each temperature point.

Observed behavior

- From 0 °C to 121 °C, all sensors agree very closely, with negligible deviations.

- Below 0 °C, the 5615 deviates from the others by approximately 0.2 °C. The MPE is ±0.03 °C, so yes, this level of precision is required.

- Once the temperature is raised again, the 5615 returns to normal behavior.

- The issue is repeatable, regardless of medium, measurement point, or configuration.

Hypotheses already ruled out

- Response time: ruled out (long stabilization time).

- Material or construction differences: all sensors have the same build and sheath. The only difference is that this sensor is a different model, but it was calibrated by the same lab, so that shouldn't be a problem.

- Measurement point: tested at various positions multiple times.

- Measurement medium: tested in both oil and air, with the same result.

- Readout device: each sensor has its own dedicated instrument.

Possible causes under consideration

- Incorrect application of ITS-90 or Callendar–Van Dusen coefficients in the readout?

- Localized calibration error at low temperatures: is that possible? Could the sensor be linear at "high" temperatures and non-linear at "lower" ones?

- Firmware or electronic behavior specific to the readout used with the 5615?

- Interpolation or coefficient misapplication in the readout or calibration file?
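To make the coefficient hypothesis testable, here is a minimal sketch of a check that could be run independently of the readout: convert raw resistance to temperature with the Callendar–Van Dusen equation, once with the sub-zero C term and once without it. The coefficients and `R0` below are the nominal IEC 60751 values and are only an assumption; the values from the 5615's calibration certificate would have to be substituted. The function names are hypothetical, and this does not cover an ITS-90 deviation-function calibration.

```python
import math

# ASSUMPTION: nominal IEC 60751 coefficients, not the 5615's certificate values.
R0 = 100.0        # resistance at 0 °C, ohms
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12    # applies only below 0 °C


def cvd_resistance(t_c, r0=R0, a=A, b=B, c=C):
    """Callendar-Van Dusen resistance in ohms for a temperature in °C."""
    ratio = 1.0 + a * t_c + b * t_c ** 2
    if t_c < 0:
        ratio += c * (t_c - 100.0) * t_c ** 3
    return r0 * ratio


def cvd_temperature(r_ohm, r0=R0, a=A, b=B, c=C, use_c=True):
    """Invert the CVD equation; use_c=False mimics a readout that drops C."""
    # Closed-form solution of the quadratic (exact at and above 0 °C).
    t = (-a + math.sqrt(a * a - 4.0 * b * (1.0 - r_ohm / r0))) / (2.0 * b)
    if t >= 0 or not use_c:
        return t
    # Below 0 °C, refine with Newton's method on the full quartic.
    for _ in range(20):
        f = r0 * (1.0 + a * t + b * t * t + c * (t - 100.0) * t ** 3) - r_ohm
        df = r0 * (a + 2.0 * b * t + c * (4.0 * t ** 3 - 300.0 * t ** 2))
        step = f / df
        t -= step
        if abs(step) < 1e-9:
            break
    return t


def c_term_error(t_c):
    """Temperature error (°C) if the readout ignores the C term at t_c."""
    r = cvd_resistance(t_c)
    return cvd_temperature(r, use_c=False) - t_c


if __name__ == "__main__":
    for t in (-40.0, -20.0, 0.0, 50.0):
        print(f"{t:7.1f} °C  error without C term: {c_term_error(t):+.4f} °C")
```

Comparing this number with the observed 0.2 °C offset at the same set point indicates whether a dropped C term is large enough to matter. Feeding the raw resistance shown by the 5615's readout into `cvd_temperature` with the certificate coefficients would also show whether the readout's own conversion matches.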

Has anyone experienced a similar issue with the 5615 or other high-precision RTDs?

Any suggestions for further testing or troubleshooting would be greatly appreciated.

Thanks in advance!
