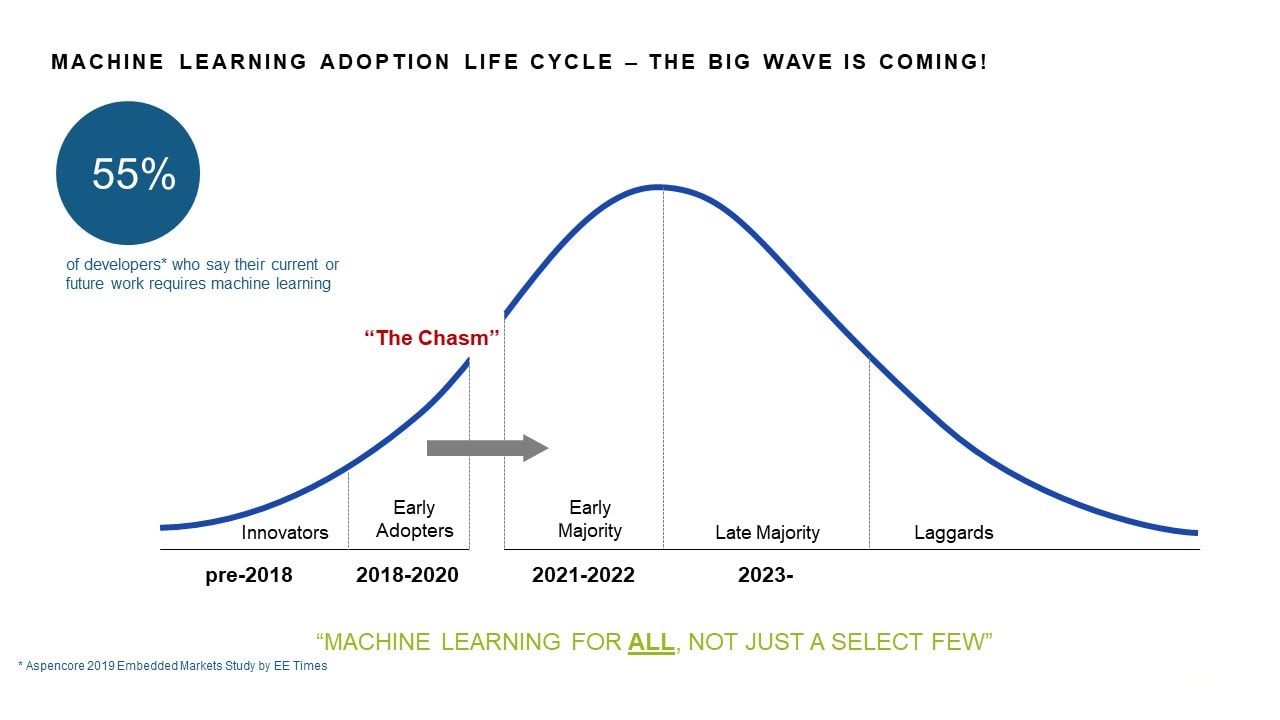

As with many complex new technologies, machine learning is following an adoption life cycle in which ‘early adopters’ have been using it for the last few years; they are, so to speak, ahead of the curve. Starting in 2021, however, adoption will shift toward the ‘early majority’ (Figure 1). Until now, NXP’s eIQ machine learning (ML) software development environment has successfully supported the early adopters, but as we cross ‘The Chasm’, ML support must become more comprehensive and easier to use. I’ll explain.

What Is an Early Adopter?

Early adopters of machine learning go back more than just a few years: people (and companies) have been working on this technology for decades. However, the move to machine learning at the edge is relatively recent, and its requirements are different. The tools are different. System resources are more constrained. The applications must be more responsive. The early adopters have had to figure out the entire process, from model training to deployment of the inference engine that runs the model, as well as every other aspect of system integration (e.g., the video pipeline from image capture to output of the inference result).

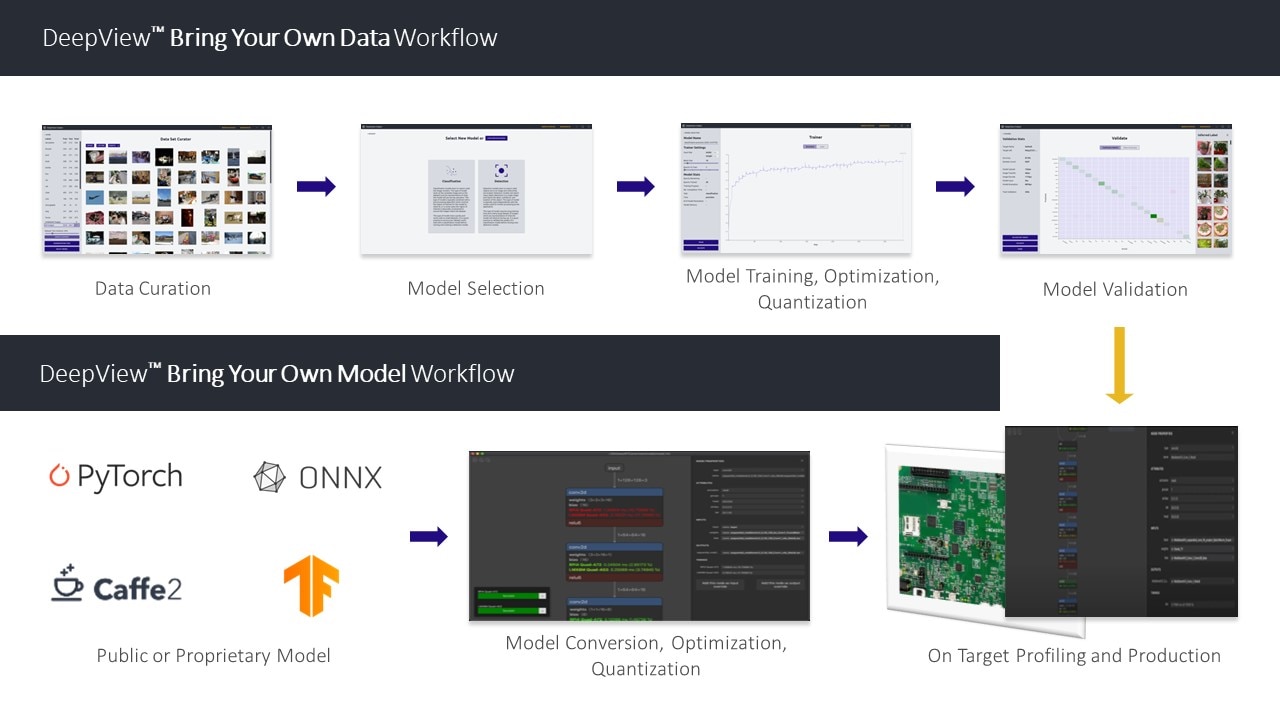

In the development process, once a model is trained, optimized and quantized, the next phase is deploying that model on the device so that it can perform inference. NXP provides eIQ to support this process, combining the software components, application examples, user guides, etc. that allow advanced developers to deploy a variety of open source inference engines on our devices. Today, eIQ supports inference with TensorFlow Lite, Arm NN, ONNX Runtime and the Glow neural network compiler. To use eIQ today, the developer follows a process we call ‘bring your own model’ (BYOM): build your trained models using public or private cloud-based tools, then bring them into the eIQ software environment and plug them into the appropriate inference engine.
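For the TensorFlow Lite path, ‘plugging in’ a model from Python typically looks like the sketch below. It uses the standard TensorFlow Lite `Interpreter` calls (`allocate_tensors`, `get_input_details`, `set_tensor`, `invoke`, `get_output_details`, `get_tensor`); the helper names `load_interpreter` and `run_inference` are hypothetical, and the sketch assumes a single-input, single-output model. It is not eIQ-specific code, and hardware acceleration (for example through a TensorFlow Lite delegate) is deliberately left out.

```python
def load_interpreter(model_path, num_threads=None):
    """Create a TensorFlow Lite interpreter and allocate its tensors.

    Prefers the lightweight tflite_runtime package and falls back to the
    full TensorFlow package if it is not installed.
    """
    try:
        from tflite_runtime.interpreter import Interpreter
    except ImportError:
        import tensorflow as tf
        Interpreter = tf.lite.Interpreter
    interpreter = Interpreter(model_path=model_path, num_threads=num_threads)
    interpreter.allocate_tensors()  # must happen before set_tensor()/invoke()
    return interpreter


def run_inference(interpreter, input_data):
    """Feed one input tensor, run the model, and return the first output.

    Assumption: a single-input, single-output model. input_data must match
    the model's input shape and dtype (often int8/uint8 for quantized models).
    """
    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]
    interpreter.set_tensor(input_index, input_data)
    interpreter.invoke()
    return interpreter.get_tensor(output_index)
```

A call would look like `run_inference(load_interpreter("model.tflite"), frame)`, where `model.tflite` and `frame` (a NumPy array shaped and typed like the model input) are placeholders. Allocating tensors once at load time keeps the per-frame path to a set/invoke/get sequence.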

Machine Learning for All

The 2019 Embedded Markets Study by EE Times found that 55% of developers said their current or future projects require machine learning (I believe this number has gone up since then). To cross the chasm and bring machine learning to the majority of developers, ML support must become more comprehensive and easier to use. More comprehensive support means that NXP must provide an end-to-end workflow that lets developers bring in their training data, select the right model for their application, train, optimize and quantize that model, profile it on the target device, and then move to production.

What counts as easy to use is a matter of perspective, but for majority adoption it means that NXP must provide a simplified yet optimized user interface. In principle, this would yield a machine learning development environment that hides most of the details: with a few clicks, the user imports their training data and deploys the model on the target device.

Crossing the Chasm with Au-Zone Technologies

To make this a reality and cross the adoption chasm, NXP has made an investment in Canada-based Au-Zone Technologies, establishing an exclusive strategic partnership to extend eIQ with easy-to-use ML tools and to broaden its offering of silicon-optimized inference engines for edge ML. Au-Zone’s DeepView ML Tool Suite will augment eIQ with an intuitive graphical user interface (GUI) and workflow, enabling developers of all experience levels (e.g., embedded developers, data scientists, ML experts) to import datasets and/or models and rapidly train and deploy neural network (NN) models and ML workloads across the NXP edge processing portfolio (Figure 2).

The DeepView tool suite includes a dataset workspace that gives developers a way to capture and annotate images for model training and validation. You start by drawing a box around each object of interest and labeling it (paid and free standalone annotation tools are also available online), and from there you can build machine learning datasets and train models. The workspace also supports dataset augmentation, letting developers quickly vary image parameters to reduce over-fitting and make the model more robust to dynamic real-world environments. Augmentation functions include, for example, image rotation, blurring and color conversion. Remember, the NN model only ‘sees’ numbers, so even a small change to an input image, such as a rotation or a shift in lighting, produces a very different set of input values. The more such variations you provide on the original training data, the more accurate your model is likely to be on real-world inputs.
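The DeepView workspace does this through its GUI. The pure-Python sketch below is not DeepView’s API (`Box`, `hflip`, `adjust_brightness`, `to_grayscale` and `augment` are hypothetical names); it only illustrates two points from the paragraph above: a geometric augmentation such as a horizontal flip must move the annotated boxes too, while photometric ones such as brightness or color conversion change the numbers the network sees but leave the boxes untouched. Images are assumed to be lists of rows of (R, G, B) tuples, and rotation and blur are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Labeled bounding box in pixel coordinates; x_max and y_max are exclusive."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def hflip(image, boxes):
    """Mirror the image left-to-right and move each box to match."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    moved = [Box(b.label, width - b.x_max, b.y_min, width - b.x_min, b.y_max) for b in boxes]
    return flipped, moved


def adjust_brightness(image, factor):
    """Scale every channel by factor, clamped to the 0..255 range."""
    return [[tuple(min(255, max(0, round(c * factor))) for c in px) for px in row] for row in image]


def to_grayscale(image):
    """Convert to luma (ITU-R BT.601 weights), kept as three equal channels."""
    out = []
    for row in image:
        gray_row = []
        for r, g, b in row:
            y = round(0.299 * r + 0.587 * g + 0.114 * b)
            gray_row.append((y, y, y))
        out.append(gray_row)
    return out


def augment(image, boxes):
    """Return the original sample plus flipped, brightness and color variants."""
    samples = [(image, boxes), hflip(image, boxes)]
    for factor in (0.7, 1.3):
        samples.append((adjust_brightness(image, factor), boxes))  # boxes unchanged
    samples.append((to_grayscale(image), boxes))  # boxes unchanged
    return samples
```

Each annotated image thus yields five training samples here; a real pipeline would typically apply random combinations of such transforms on the fly rather than storing every variant.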

The DeepViewML trainer lets developers select hyperparameters and click train. In most cases you won’t be training your model from scratch, so the trainer supports transfer learning (typically retraining only the final layers of a pretrained model on your own data). The DeepView ML tool suite also includes a model optimizer that helps you fine-tune your model for the desired target platform and inference engine. The optimizer performs automatic graph-level optimizations, such as pruning, fusing and folding layers, to reduce complexity and improve performance with no loss of precision. Optimizations that do trade off accuracy, namely quantization, layer replacement and weight rounding, are configurable by the developer. You can validate these lossy optimizations and compare them with the validator tool to fully understand their impact (e.g., model size versus accuracy).
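Quantization is the most common of the lossy optimizations mentioned above. As an illustration of the size-versus-accuracy tradeoff the validator is meant to expose, the sketch below applies affine 8-bit quantization, real ≈ scale × (q − zero_point), the general scheme described in TensorFlow Lite’s quantization specification. It is not DeepView’s implementation; `quantize`, `dequantize` and `size_vs_accuracy` are hypothetical names, and it uses a single per-tensor scale for simplicity.

```python
from array import array


def quantize(values, num_bits=8):
    """Affine per-tensor quantization: real ≈ scale * (q - zero_point)."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(0.0, min(values)), max(0.0, max(values))
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid division by zero for all-zero input
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map integer codes back to approximate real values."""
    return [scale * (x - zero_point) for x in q]


def size_vs_accuracy(values):
    """Return (float32 bytes, int8 bytes, max abs error, scale) for one tensor."""
    q, scale, zero_point = quantize(values)
    restored = dequantize(q, scale, zero_point)
    fp32 = array("f", values)
    int8 = array("b", q)  # raises OverflowError if any code falls outside int8
    max_err = max(abs(a - b) for a, b in zip(values, restored))
    return fp32.itemsize * len(fp32), int8.itemsize * len(int8), max_err, scale
```

Storage drops by 4× (4 bytes to 1 per value), while each value’s rounding error stays within half a quantization step (scale / 2). Production toolchains commonly refine this, for example with per-channel scales for weights, which is why measuring accuracy on a validation set is still necessary.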

Through this strategic partnership, we’ll also add Au-Zone’s DeepView run-time inference engine to the eIQ family, complementing the open source inference technologies already deployed. A key feature of the DeepView engine is that it optimizes system memory usage and data movement specifically for each NXP SoC architecture. Users will then have an additional choice of inference engine, and the profiling tool will let them quickly compare performance and memory footprint across the various runtime options.
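The idea behind comparing runtimes can be illustrated with a small, hypothetical latency harness (`profile` and `compare` are illustrative names, not the eIQ profiling tool’s API). Each runtime under test is wrapped in a zero-argument callable that performs one inference; warm-up runs are discarded because the first invocations often include one-time setup such as memory allocation or graph compilation. The sketch measures latency only: the memory footprint of a native inference engine would need platform tools rather than Python-level instrumentation.

```python
import statistics
import time
from typing import Callable, Dict, List, Tuple


def profile(run: Callable[[], object], warmup: int = 3, iterations: int = 20) -> Dict[str, float]:
    """Time repeated calls to run() and summarize latency in milliseconds."""
    for _ in range(warmup):
        run()  # discarded: first calls may include one-time setup cost
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
        "max_ms": max(samples),
    }


def compare(runtimes: Dict[str, Callable[[], object]], **kwargs) -> List[Tuple[str, Dict[str, float]]]:
    """Profile each named runtime and return results sorted fastest first."""
    results = {name: profile(run, **kwargs) for name, run in runtimes.items()}
    return sorted(results.items(), key=lambda item: item[1]["median_ms"])
```

A comparison might look like `compare({"engine_a": lambda: interp_a.invoke(), "engine_b": lambda: interp_b.invoke()})`, where `interp_a` and `interp_b` are placeholders for two already-loaded runtimes fed the same input. Reporting the median rather than the mean keeps occasional scheduler hiccups from skewing the ranking.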

If you haven’t already joined the machine learning movement, I hope you’ll cross the chasm soon and take advantage of the comprehensive eIQ ML software development environment, complete with the DeepViewML Tool Suite and the DeepView run-time inference engine, available in Q1 2021.