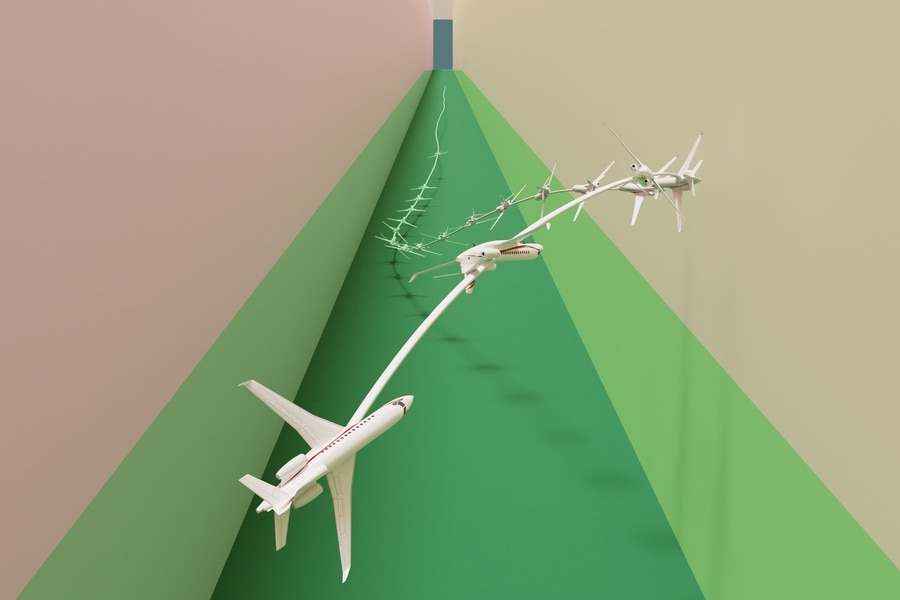

MIT has developed a new technique for autonomously flying jet aircraft using deep reinforcement learning. (Image Credit: MIT)

MIT researchers recently created a machine-learning technique that solves complex stabilize-avoid problems with more stability and safety than other methods. In a stabilize-avoid problem, an autonomous agent must reach a goal region and stay stable there while avoiding obstacles along the way. In simulation, the approach flew a jet through a narrow corridor without crashing.
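To make the stabilize-avoid requirement concrete, here is a minimal Python sketch that checks a finite trajectory against it. The 2-D state, the circular goal and obstacle, the `hold_steps` window, and the function names are all hypothetical choices for illustration; this is not code from the MIT work, which deals with much higher-dimensional dynamics.

```python
import math
from typing import Callable, Sequence, Tuple

State = Tuple[float, float]  # hypothetical 2-D position (x, y)


def satisfies_stabilize_avoid(
    trajectory: Sequence[State],
    in_goal: Callable[[State], bool],
    in_obstacle: Callable[[State], bool],
    hold_steps: int = 10,
) -> bool:
    """Check a finite trajectory against a stabilize-avoid specification.

    Avoid: no state ever lies inside an obstacle.
    Stabilize: the final `hold_steps` states all lie inside the goal region,
    a finite-horizon stand-in for "reach the goal and stay there".
    """
    if hold_steps <= 0:
        raise ValueError("hold_steps must be positive")
    if any(in_obstacle(s) for s in trajectory):
        return False
    if len(trajectory) < hold_steps:
        return False
    return all(in_goal(s) for s in trajectory[-hold_steps:])


# Hypothetical scene: a circular goal at (5, 0) and a circular obstacle in the way.
def in_goal(s: State) -> bool:
    return math.hypot(s[0] - 5.0, s[1]) < 0.5


def in_obstacle(s: State) -> bool:
    return math.hypot(s[0] - 2.5, s[1]) < 1.0


hover = [(5.0, 0.0)] * 10  # remain at the goal for 10 steps
straight = [(0.5 * i, 0.0) for i in range(11)] + hover
swerve = [(0.5 * i, 2.0 * math.sin(math.pi * i / 10)) for i in range(11)] + hover

print(satisfies_stabilize_avoid(straight, in_goal, in_obstacle))  # False: clips the obstacle
print(satisfies_stabilize_avoid(swerve, in_goal, in_obstacle))    # True
```

Both paths end by hovering at the goal, so the only difference is the avoid condition: the straight line passes through the obstacle, while the arc swerves over it.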

"This has been a longstanding, challenging problem. A lot of people have looked at it but didn't know how to handle such high-dimensional and complex dynamics," says Chuchu Fan, the Wilson Assistant Professor of Aeronautics and Astronautics, a member of the Laboratory for Information and Decision Systems (LIDS), and senior author of a new paper on this technique.

Many existing methods tackle complex stabilize-avoid problems by simplifying the system so it can be solved with straightforward math, but the simplified results often don't hold up against real-world dynamics. More effective approaches use reinforcement learning, in which an agent learns by trial and error and receives a reward for behavior that brings it closer to its goal during training. However, a stabilize-avoid problem really has two goals, staying stable and avoiding obstacles, and a single reward has to trade one off against the other. So the team split the problem into two steps.
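A toy Python sketch of why folding both goals into one hand-tuned reward is awkward: each step earns the negative distance to the goal, minus a penalty whenever the agent is inside an obstacle. The `collision_weight` parameter, the positions, and both paths are hypothetical; this illustrates generic reward shaping, not the reward used by the MIT team.

```python
import math
from typing import List, Tuple

State = Tuple[float, float]  # hypothetical 2-D position (x, y)

GOAL: State = (5.0, 0.0)      # hypothetical goal position
OBSTACLE: State = (2.5, 0.0)  # hypothetical obstacle center
OBSTACLE_RADIUS = 1.0


def step_reward(s: State, collision_weight: float) -> float:
    """Hand-shaped reward: the closer to the goal, the better; collisions cost extra."""
    dist_to_goal = math.hypot(s[0] - GOAL[0], s[1] - GOAL[1])
    collided = math.hypot(s[0] - OBSTACLE[0], s[1] - OBSTACLE[1]) < OBSTACLE_RADIUS
    return -dist_to_goal - (collision_weight if collided else 0.0)


def episode_return(path: List[State], collision_weight: float) -> float:
    """Undiscounted sum of per-step rewards along a path."""
    return sum(step_reward(s, collision_weight) for s in path)


# Two candidate paths with the same number of steps:
# a straight line through the obstacle, and an arc that swerves over it.
shortcut = [(0.5 * i, 0.0) for i in range(11)]
detour = [(0.5 * i, 2.0 * math.sin(math.pi * i / 10)) for i in range(11)]

for w in (0.5, 10.0):
    winner = "shortcut" if episode_return(shortcut, w) > episode_return(detour, w) else "detour"
    print(f"collision_weight={w}: higher return for the {winner}")
```

With a small penalty, the straight path through the obstacle earns the higher return; with a large one, the detour wins. The right weight depends on the scene, which is one reason a formulation that treats obstacle avoidance as a hard constraint is attractive.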

The first step recasts the stabilize-avoid problem as a constrained optimization problem. Solving this optimization lets the agent reach and stabilize at its goal, meaning it remains within a specific region, while the constraints keep it from colliding with obstacles. In the second step, the researchers reformulated the constrained optimization problem into its epigraph form and solved it with a deep reinforcement learning algorithm. The epigraph form lets the team sidestep problems that other reinforcement learning techniques run into.
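The epigraph trick itself is a standard optimization identity. In generic notation (not the paper's), `minimize f(x) subject to g(x) <= 0` has the same optimal value as the smallest `z` for which some `x` satisfies `max(f(x) - z, g(x)) <= 0`. The minimal Python sketch below, assuming a hypothetical one-dimensional cost `f`, constraint `g`, and candidate grid `XS`, does the inner minimization by brute force and the outer search over `z` by bisection. Roughly speaking, the MIT approach handles the inner problem with deep reinforcement learning over controllers instead, so treat this only as an illustration of the reformulation.

```python
# Toy constrained problem (hypothetical): minimize f(x) subject to g(x) <= 0,
# searched over a fixed grid of candidate x values.
XS = [i / 1000 for i in range(-3000, 3001)]  # grid on [-3, 3]


def f(x: float) -> float:
    """Cost to minimize: prefer x close to 2."""
    return (x - 2.0) ** 2


def g(x: float) -> float:
    """Constraint g(x) <= 0, i.e. x must not exceed 1."""
    return x - 1.0


def inner_value(z: float) -> float:
    """V(z) = min over x of max(f(x) - z, g(x)).

    V(z) <= 0 exactly when some feasible x has cost f(x) <= z.
    """
    return min(max(f(x) - z, g(x)) for x in XS)


def solve_epigraph(lo: float, hi: float, iters: int = 60) -> float:
    """Bisect for the smallest z with V(z) <= 0.

    Assumes V(lo) > 0 and V(hi) <= 0. V is nonincreasing in z,
    so the z values satisfying V(z) <= 0 form an interval [z*, inf).
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if inner_value(mid) <= 0.0:
            hi = mid  # mid is achievable: the optimum is at or below it
        else:
            lo = mid  # mid is not achievable: the optimum is above it
    return hi


direct = min(f(x) for x in XS if g(x) <= 0.0)
z_star = solve_epigraph(lo=0.0, hi=20.0)
print(f"direct: {direct:.6f}  epigraph: {z_star:.6f}")
```

Both searches land on the same optimal cost of 1.0 (at x = 1). The design choice worth noting is that the epigraph form turns "optimize cost while respecting a constraint" into a sequence of yes/no feasibility checks on a single combined quantity, which is what makes a one-dimensional search over `z` sufficient.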

"But deep reinforcement learning isn't designed to solve the epigraph form of an optimization problem, so we couldn't just plug it into our problem. We had to derive the mathematical expressions that work for our system. Once we had those new derivations, we combined them with some existing engineering tricks used by other methods," says lead author Oswin So.

The team then designed control experiments with different initial conditions. In some simulations, the agent had to reach and stay within a goal region while swerving dramatically to avoid colliding with obstacles. Of the methods the team compared, their approach was the only one capable of stabilizing every trajectory while maintaining safety. They also applied the method to a simulated jet aircraft in a scenario similar to one in the movie Top Gun: Maverick. The jet maintained a low altitude, flew through a narrow corridor, and stabilized to a target close to the ground.

The team believes this technique could serve as a building block for controllers of highly dynamic robots, giving them the safety and stability that autonomous delivery drones require. It could even help the driver of a vehicle that has skidded off the road return to a stable trajectory.

Speaking of autonomous driving, Tesla vehicles have been involved in 736 accidents while drivers were using the Autopilot feature since 2019. In the first quarter of 2023 alone, Tesla vehicles were involved in 125 accidents. Worse, 17 of those accidents were fatal, 11 of them since May 2022. Although the crash data doesn't show how many accidents Autopilot helped avoid, it still raises valid concerns about autonomous driving. The rise in accidents has also prompted investigations into the technology in Tesla vehicles, which no longer use radar and instead rely on camera-based vision processing to map their surroundings.

"We believe that a goal we should strive for as a field is to give reinforcement learning the safety and stability guarantees that we will need to provide us with assurance when we deploy these controllers on mission-critical systems. We think this is a promising first step toward achieving that goal," says lead author Oswin So, a graduate student.

The team wants to improve the method so it can account for uncertainty when solving the optimization. They also plan to investigate how the algorithm performs when deployed on hardware, since they expect mismatches between the model and real-world dynamics.

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell