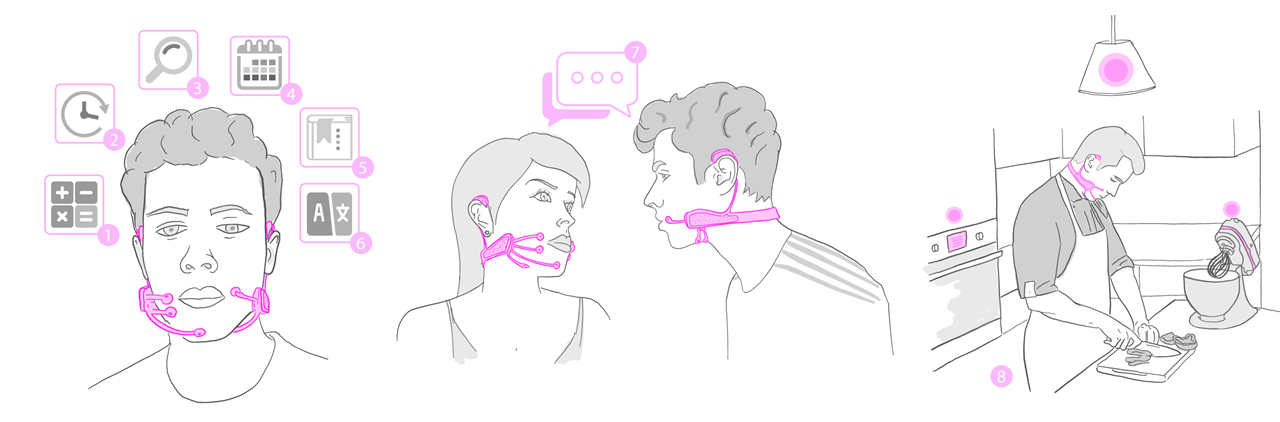

Arnav Kapur's AlterEgo device enables people to communicate without speaking. (Image Credit: MIT Media Lab)

MIT graduate student Arnav Kapur developed AlterEgo, an AI-powered headset that picks up the words a wearer says silently to themselves, allowing them to communicate with machines without speaking. The device is particularly suited to people diagnosed with diseases such as amyotrophic lateral sclerosis and multiple sclerosis.

Kapur first revealed his AlterEgo device in 2018 as a prototype. By wearing it, users can communicate with AI assistants, people, services, and machines through their internal articulation of words and ideas. The device also keeps the exchange private, since nothing is spoken aloud or visible to bystanders.

The non-invasive, wearable, peripheral neural interface picks up the wearer's silent speech and returns audio feedback through bone conduction, which sets it apart from other devices. This allows a user to communicate in their natural language with AI or machines without saying anything or making external movements. "The feedback to the user is given through audio, via bone conduction, without disrupting the user's usual auditory perception and making the interface closed-loop. This enables a human-computer interaction that is subjectively experienced as completely internal to the human user—like speaking to one's self," according to MIT.

Users can use their thoughts to control appliances or even communicate with others. (Image Credit: Arnav Kapur, Neo Mohsenvand/MIT)

It features a new peripheral myoneural interface designed for silent speech input that reads endogenous electrical signals from the surface of the wearer's face and neck. A modular neural network-based pipeline then processes these electrophysiological signals to detect and recognize the silently spoken words. The device also includes a smart system that processes the user's commands and queries and produces responses. Lastly, the bone conduction output sends audio back to the wearer, such as a response or a confirmation of a command. MIT trained AlterEgo with user-specific data and says it achieves an accuracy of over 90% on an application-specific vocabulary.
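The stages described above (signal capture, word recognition, command handling, and a response returned to the wearer) can be illustrated with a toy sketch. This is not MIT's implementation: the signals are synthetic noise, a simple nearest-centroid classifier stands in for the neural network, and every name here (`rms_features`, `train_centroids`, `classify`, `handle_command`, `respond`, `WORD_GAINS`) is hypothetical. It only shows how user-specific training data and a small, application-specific vocabulary fit together.

```python
import math
import random


def rms_features(window):
    """Reduce a window of multichannel samples to one RMS value per channel."""
    channels = list(zip(*window))
    return [math.sqrt(sum(x * x for x in ch) / len(ch)) for ch in channels]


def train_centroids(examples):
    """Average each word's feature vectors (stand-in for user-specific training)."""
    centroids = {}
    for word, vectors in examples.items():
        centroids[word] = [sum(col) / len(vectors) for col in zip(*vectors)]
    return centroids


def classify(features, centroids):
    """Return the vocabulary word whose centroid is closest to the features."""
    return min(centroids, key=lambda w: math.dist(features, centroids[w]))


def handle_command(word):
    """Map a recognized word to the text that would be returned as audio."""
    responses = {
        "lights": "Turning on the lights",
        "time": "It is ten o'clock",
    }
    return responses.get(word, "Command not recognized")


def respond(window, centroids):
    """Full loop: features -> recognized word -> response for the wearer."""
    return handle_command(classify(rms_features(window), centroids))


def synthetic_window(gains, rng, n_samples=200):
    """Fake recording: Gaussian noise with a different amplitude per channel."""
    return [[rng.gauss(0, g) for g in gains] for _ in range(n_samples)]


# Assumed per-word channel amplitudes, invented purely for this demo.
WORD_GAINS = {"lights": [1.0, 0.2, 0.2], "time": [0.2, 1.0, 0.6]}

rng = random.Random(0)
training = {
    word: [rms_features(synthetic_window(gains, rng)) for _ in range(10)]
    for word, gains in WORD_GAINS.items()
}
centroids = train_centroids(training)

print(respond(synthetic_window(WORD_GAINS["lights"], rng), centroids))
```

Keeping the vocabulary small and training on one user's recordings is what makes such a simple classifier workable in this sketch, which loosely mirrors why the reported accuracy is tied to an application-specific vocabulary and user-specific data.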

AlterEgo can work as a handy wearable for people whose speech is impaired by conditions such as amyotrophic lateral sclerosis and multiple sclerosis, helping them communicate their thoughts and needs without relying on vocal speech. MIT also says it has applications beyond that, envisioning a future in which "the Internet and AI would weave into our daily life as a 'second self' and augment our cognition and abilities."

The device also has telecommunications applications, allowing wearers to "communicate with the ease and bandwidth of vocal speech with the addition of the fidelity and privacy that silent speech provides." Users can even make it work like a digital memory, recording streams of information and retrieving them later through the system. For example, people with memory issues could silently instruct the system to provide certain information, like an acquaintance's name or the answer to a question.

What's even neater is that it can be used to control IoT devices or various appliances without physical action. Additionally, connecting AlterEgo to a Bluetooth speaker would enable a user to think of a phrase that gets translated into another language for multi-language conversations.

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell