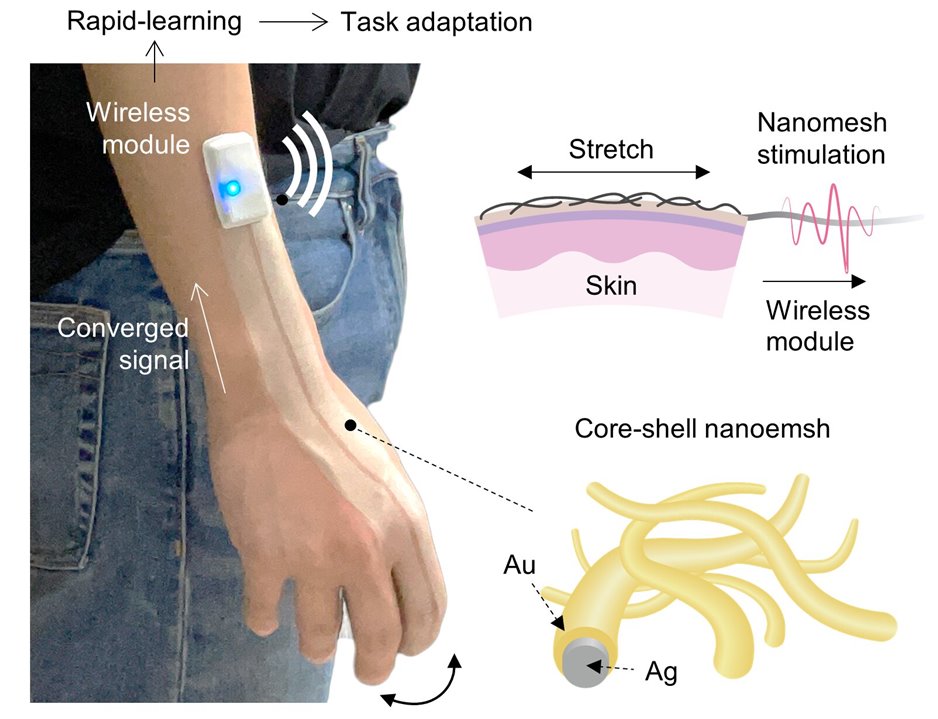

The spray-on mesh can be paired with a Bluetooth module that transmits signal changes. (Image Credit: Kyun Kyu "Richard" Kim, Bao Group, Stanford University)

Stanford University researchers have developed a biocompatible material that is sprayed onto the back of the hand and uses AI to work out which tasks the wearer is performing from their hand gestures and motions. The mesh contains a tiny electrical network that keeps sensing as the skin stretches and bends. According to the team, this smart skin could find applications in sports, gaming, telemedicine, and robotics.

The electrically sensitive mesh network is embedded in polyurethane, a durable, stretchable material commonly used in skateboard wheels. It consists of millions of gold-coated silver nanowires that stay in contact with one another, forming electrical pathways. The biocompatible, breathable, and electrically active mesh stays on the skin until it is washed off with soap and water, and it conforms to the wrinkles and folds of the fingers. The mesh can also be equipped with a Bluetooth module to transmit signal changes wirelessly.

"As the fingers bend and twist, the nanowires in the mesh get squeezed together and stretched apart, changing the electrical conductivity of the mesh. These changes can be measured and analyzed to tell us precisely how a hand or a finger or a joint is moving," explained Zhenan Bao, the K.K. Lee Professor of Chemical Engineering and senior author of the study.
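Bao's description resembles how any resistive strain sensor is read out: a change in conductivity only means something relative to the resting state. A minimal sketch, assuming the readout electronics report the mesh's resistance in ohms and that the first few samples are taken with the hand at rest, normalizes each reading as the relative change (R - R0) / R0. The function name, baseline length, and sample values are hypothetical and not taken from the study.

```python
from statistics import mean


def relative_change(samples, baseline_count=5):
    """Return (R - R0) / R0 for every resistance sample.

    R0 is the mean of the first `baseline_count` samples, which this
    sketch assumes were recorded while the hand was at rest.
    """
    if baseline_count < 1 or len(samples) < baseline_count:
        raise ValueError("need at least baseline_count samples")
    r0 = mean(samples[:baseline_count])
    return [(r - r0) / r0 for r in samples]


# Hypothetical readings in ohms: rest, a finger bend (resistance is assumed
# to rise here; the real direction depends on the mesh), then rest again.
readings = [100.0, 100.0, 100.0, 100.0, 100.0, 112.0, 125.0, 110.0, 100.0]
print([round(x, 2) for x in relative_change(readings)])
# → [0.0, 0.0, 0.0, 0.0, 0.0, 0.12, 0.25, 0.1, 0.0]
```

Normalizing against a baseline makes signals from different wearers, or from different spray applications, comparable before any pattern recognition is attempted.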

Because the mesh is sprayed directly onto the skin, it needs no supporting substrate. Without a substrate, unwanted motion artifacts are avoided, and a single conductive mesh trace can produce multi-joint data for each finger. Besides fitting any hand size or shape, the sprayable device could also be applied to the face to capture emotional cues. This may lead to new approaches to computer animation, or to avatar-led virtual meetings with precise hand gestures and facial expressions.

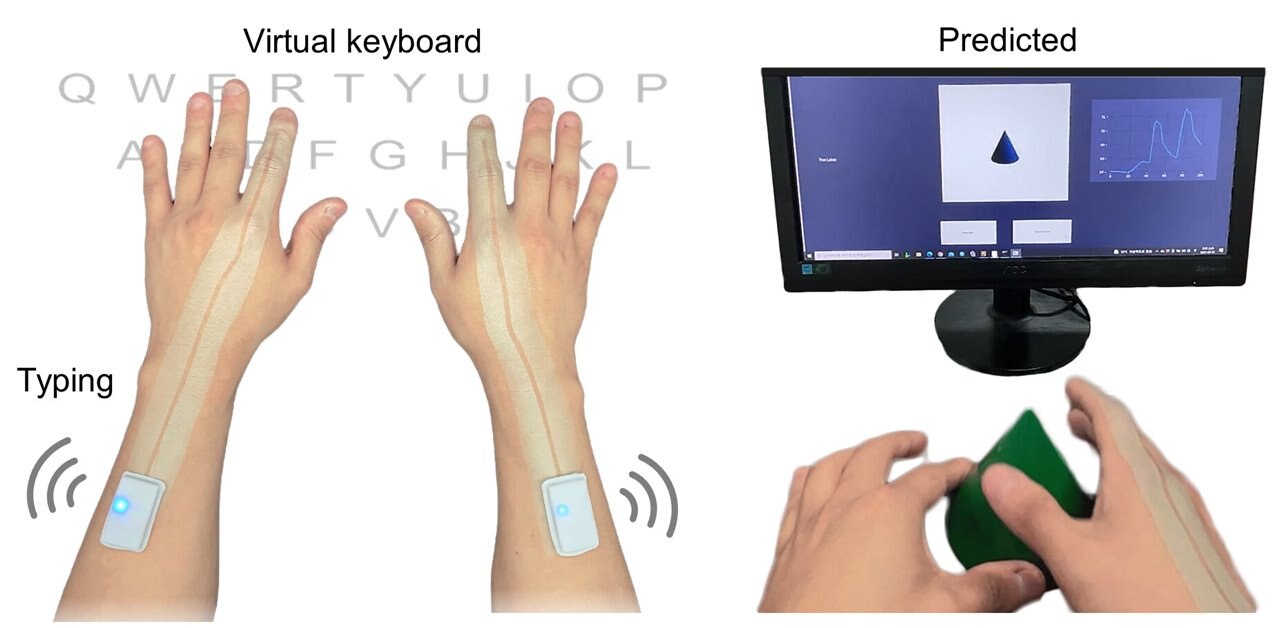

The spray-on mesh recognizes typing and simple objects held by the wearer. (Image Credit: Kyun Kyu "Richard" Kim, Bao Group, Stanford University)

A computer then tracks the patterns of conductivity change and maps them to physical tasks and gestures. For example, when the wearer types an X on a keyboard, the algorithm learns to recognize that task from the resulting pattern of conductivity changes. Once the algorithm is trained, the physical keyboard is no longer needed. The same concept could be applied to recognize sign language, or to identify objects by tracing their exterior surfaces with the hand.
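Before a pattern can be mapped to a task, the continuous signal has to be cut into comparable chunks. The sketch below shows one common way to do that and is only an assumption about the general approach, not the team's pipeline: it splits a multichannel stream into fixed-length, overlapping windows and summarizes each channel by its mean and peak-to-peak range. The window size, step, and the two features are illustrative choices, and the function name is hypothetical.

```python
from typing import List, Sequence


def window_features(
    stream: Sequence[Sequence[float]], size: int, step: int
) -> List[List[float]]:
    """Slice a multichannel stream into windows and summarize each one.

    `stream` is a list of samples; each sample holds one value per channel.
    Each window becomes [mean_ch0, range_ch0, mean_ch1, range_ch1, ...].
    Trailing samples that do not fill a whole window are ignored.
    """
    if size < 1 or step < 1:
        raise ValueError("size and step must be positive")
    features = []
    for start in range(0, len(stream) - size + 1, step):
        window = stream[start:start + size]
        vec = []
        for ch in range(len(window[0])):
            values = [sample[ch] for sample in window]
            vec.append(sum(values) / size)         # average signal level
            vec.append(max(values) - min(values))  # peak-to-peak swing
        features.append(vec)
    return features


# Two hypothetical channels of relative-change data, six samples long.
stream = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.05), (0.1, 0.1), (0.0, 0.05), (0.0, 0.0)]
for vec in window_features(stream, size=4, step=2):
    print(vec)
```

Each resulting vector is a fixed-length fingerprint of a short stretch of motion, which is the kind of input a classifier can then associate with a label such as "typed X".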

"We brought the aspects of human learning that rapidly adapt to tasks with only a handful of trials known as 'meta-learning.' This allows the device to rapidly recognize arbitrary new hand tasks and users with a few quick trials," said Kyun Kyu "Richard" Kim, a postdoctoral scholar in Bao's lab and first author of the study.

"Moreover, it's a surprisingly simple approach to this complex challenge that means we can achieve faster computational processing time with less data because our nanomesh captures subtle details in its signals," Kim added.
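Kim's description names the goal of meta-learning, adapting to a new task from a handful of trials, but not the exact model. One widely used few-shot technique, shown here purely as an illustration and not as the team's algorithm, is prototype-based classification: average the feature vectors from a few labeled trials of each task into a prototype, then assign a new recording to the task whose prototype is nearest. In a full meta-learning system the feature extractor is itself trained so that these distances are meaningful; this sketch assumes the feature vectors are already given, and the task names and numbers are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def build_prototypes(support: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the few labeled feature vectors ("shots") for each task."""
    prototypes = {}
    for label, shots in support.items():
        dim = len(shots[0])
        prototypes[label] = [sum(s[i] for s in shots) / len(shots) for i in range(dim)]
    return prototypes


def classify(query: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Return the task label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Three hypothetical trials per task, each reduced to a 2-D feature vector.
support = {
    "type_x": [[0.9, 0.1], [1.0, 0.2], [1.1, 0.0]],
    "grip_cup": [[0.1, 0.8], [0.2, 1.0], [0.0, 0.9]],
}
protos = build_prototypes(support)
print(classify([0.95, 0.15], protos))  # → type_x
```

In this sketch, adding a new task only requires a few more labeled trials and one more averaged prototype, with no retraining, which loosely mirrors the "few quick trials" property Kim describes.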

The team's prototype can recognize simple objects by touch. It can also perform predictive two-handed typing on an invisible keyboard: in one demonstration, the algorithm typed "No legacy is so rich as honesty" from William Shakespeare and "I am the master of my fate, I am the captain of my soul" from William Ernest Henley's poem "Invictus."

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell