If you have a question about the Experimenting with Sensor Fusion competition, you can ask it here.

I think this is a very exciting and interesting "experimenting with" topic!

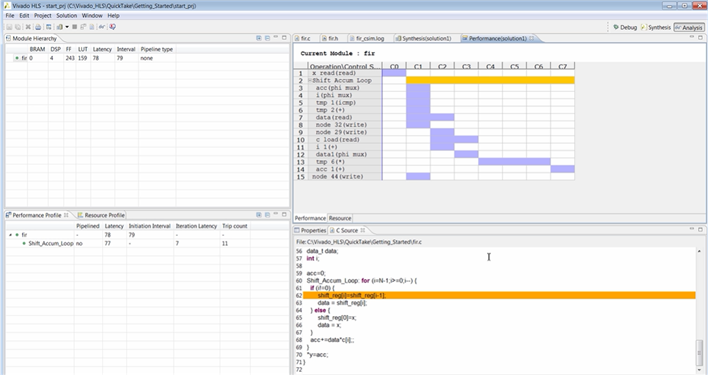

What personally attracts me to the event is the chance to experiment with Xilinx's High-Level Synthesis tools: https://www.xilinx.com/video/hardware/getting-started-vivado-high-level-synthesis.html

What deters me from applying is the amount of work it would take me personally to get the FPGA board and sensors set up to the point where I could even start experimenting with HLS.

General Questions:

Are there sample projects that an experimenter can build on?

Perhaps an E14 FPGA expert can be coerced to participate as a Mentor and develop a basic starting point project with a swag kit :)

If you are intending to apply, do you intend to implement the signal processing routines in hardware or on a soft-core processor?

I would like to apply, but I am still analyzing what I could realistically do in two months. I am very worried by the instability of the element14 website on weekends, which is when I can spend some time on it. For the last three weekends I have tried to work on a blog, but I finally had to give up.

The application I am working on is water leak monitoring: it merges the changes detected by processing images of mechanical water meters with data from an IMU attached to the pipe.

My idea would be to first make a bare metal prototype using a soft-core processor, preferably MicroBlaze, so as not to include another development environment like Keil in the equation.

I would then go on to accelerate some part of the image processing using Vitis HLS.

The application I'm thinking of would gain a lot from using openCV, either from Vitis HLS or perhaps going to Petalinux, although it's not strictly necessary. I have had bad experiences with Petalinux this year because my internet connection is very bad and the generation of images almost always fails.

I haven't found examples of openCV using the SP701 or with the Spartan-7 FPGA so I'm still in doubt.

The last phase would be to use TinyML to build a model that can predict leaks based on that information.

WOW! Your projects are always so interesting and ambitious!

Re editing: I draft my content in WordPress, then just copy and paste the blog preview into the editor. That works well for text and images; afterwards I add any e14-specific items. The thought of losing blog text scares me too, so I draft in WordPress.

I have not thought of a project yet as I don't know what is realistically possible.

Thanks for sharing your ideas on this topic, it gives me some sense of what is possible!

Thanks for the comment about WordPress. Do you use an online service or have you installed a local instance? Until now I had tried editing the document in MS Word, Word 365, and Google Docs, but with all of them I had problems with image resolution or with tables when copying.

I like that blogs can use all the widgets that element14 offers, such as links to products, the source code widget, and internal links to other pages. It's not easy for me to write blogs, first because of the language and second because I find it hard to express myself, so I often rearrange the paragraphs or leave them overnight to see if they still read well the next day :P Seeing the text already in element14 helps me review it.

I had the same frustrating issues with MS Word: resizing the images, and then having them not copy-paste into the editor. I manage the WordPress installation on a LAMP server I rent, but I believe a free account on wordpress.com would likely behave the same. I'm not suggesting it's perfect, but I prefer it over the E14 editor :) Then I just select all of the blog preview and copy-paste it into the e14 editor.

As you suggest there is still some time needed to add the e14 final touches like product links and source code highlighting.

Your blogs are always wonderfully written and formatted and interesting to read, keep it up:)

using openCV, either from Vitis HLS or perhaps going to Petalinux, although it's not strictly necessary. I have had bad experiences with Petalinux this year because my internet connection is very bad and the generation of images almost always fails.

I haven't found examples of openCV using the SP701 or with the Spartan-7 FPGA so I'm still in doubt.

I have the openCV hardware accelerators working on a Zynq. In that case, the openCV (the HW accelerated library is actually called Vitis Vision) calls are implemented inside the FPGA (hardware) instead of in the ARM processor (software).

This comes with caveats: you have to select which functions to implement. There's no room for a rich set.

It only makes sense for processes that

One example is the resize function. On a Zynq, the run time went from 1 s when the resize function was implemented in software (similar to Pi or BB speed) to 200 ms when the openCV resize function was implemented in fabric, roughly a 5x speedup.

I don't think Vitis Vision works on Spartan-7:

Vitis Vision library is designed to work with Zynq, Zynq Ultrascale+, VCK190, and Alveo FPGAs. The library has been verified on zcu102, zcu104, vck190, U50, and U200 boards.

I think the issue is that the usual Vitis acceleration flow requires a Linux kernel, which on a Zynq can run on the hard ARM processors. On a Spartan, one can in theory run Linux on a MicroBlaze, but the MicroBlaze is not ARM-based, so I would think many of the libraries needed to build the Xilinx XRT would have to be compiled for MicroBlaze, and so on. If Xilinx is not supporting the Vitis Vision library on the Spartan, then doing all of that yourself is a lot of work.

On the other hand, IPs compiled using HLS should still work, as those do not require XRT. You also have all the video IPs that Xilinx makes available for free in Vivado, which enable building fairly complex video designs (from personal experience). I have been very tempted to participate in this contest, since I have done a fair bit of work with video processing on Xilinx FPGAs (mostly on Zynq). However, I am already busy with a roadtest, and I'm not sure what other commitments the next 2 months will bring.
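
As a rough illustration of the kind of function that could be compiled into a standalone HLS IP for the image-processing step discussed above, here is a minimal sketch of a frame-difference kernel in plain C++. Everything in it is an assumption made for illustration: the function name `count_changed_pixels`, the 64x48 frame size, and the threshold parameter do not come from anyone's actual project. It is written with fixed-size arrays and one simple loop, which is the style HLS tools generally handle well, but it has not been run through Vitis HLS.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical frame size, chosen only to keep the example small.
constexpr std::size_t kWidth = 64;
constexpr std::size_t kHeight = 48;
constexpr std::size_t kPixels = kWidth * kHeight;

// Counts the pixels whose absolute difference between two grayscale frames
// exceeds `threshold`. No dynamic memory and no library calls are used, so
// the function does not depend on Linux or XRT.
std::uint32_t count_changed_pixels(const std::uint8_t prev[kPixels],
                                   const std::uint8_t curr[kPixels],
                                   std::uint8_t threshold) {
    std::uint32_t changed = 0;
    for (std::size_t i = 0; i < kPixels; ++i) {
        // In Vitis HLS, a "#pragma HLS PIPELINE II=1" directive would
        // typically be placed here (assumption: not verified on hardware).
        const int diff = static_cast<int>(curr[i]) - static_cast<int>(prev[i]);
        const int magnitude = diff < 0 ? -diff : diff;
        if (magnitude > threshold) {
            ++changed;
        }
    }
    return changed;
}
```

On a MicroBlaze bare-metal prototype the same function could first be called as ordinary software, and only later be moved into fabric if it turns out to be the bottleneck.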