I finally reached the finish line of the Save The Bees challenge. I assembled all the building blocks and got a good run on the bench.

1. Initial Bench Testing Report of ZinnBee Project

I assembled everything and reviewed my previous code. The system has two parts: the MKR1310 + Nicla Vision as the transmitter, and the MKR1300 + OLED + SD card as the receiver, which is almost the same as the system frame introduced in ZinnBee Blog#2.

I successfully integrated the Nicla Vision module with the MKR1310 via I2C, and I was able to add the LoRaWAN function to the MKR1310 without issues. Fig 1 presents the whole ZinnBee bench testing setup.

Fig 1. ZinnBee Bench Testing Setup

However, an unexpected issue with the MKR IoT Carrier module prevented me from using its temperature sensor for environmental monitoring.

I had a priority list for the ZinnBee project:

1st. Edge Impulse® ML on the Nicla Vision module.

2nd. LoRaWAN communication between the MKR1310 and the MKR1300.

3rd. Non-critical tasks: temperature monitoring, OLED display and SD logger.

The overall testing video is available at the link.

The Lego object IDs sent by the transmitter mean:

ASCII code 48 (character '0'): big dinosaur

ASCII code 49 (character '1'): boy

ASCII code 50 (character '2'): mice

ASCII code 51 (character '3'): small dinosaur
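Because each ID travels as a single ASCII digit byte, decoding it on the receiving side is just a lookup on the byte value. The MKR boards run Arduino firmware that is not shown in this post, so the Python sketch below only illustrates the mapping; the names `LEGO_LABELS` and `decode_lego_id` are hypothetical.

```python
# Hypothetical decoder for the one-byte Lego ID (illustration only; the real
# receiver is Arduino firmware on the MKR boards).
LEGO_LABELS = {
    ord("0"): "big dinosaur",    # ASCII 48
    ord("1"): "boy",             # ASCII 49
    ord("2"): "mice",            # ASCII 50
    ord("3"): "small dinosaur",  # ASCII 51
}


def decode_lego_id(payload: bytes) -> str:
    """Map a single ASCII digit byte to its Lego object label."""
    if len(payload) != 1:
        raise ValueError("expected exactly one ID byte")
    # Indexing a bytes object yields the integer byte value, e.g. b'0'[0] == 48
    return LEGO_LABELS.get(payload[0], "unknown")


print(decode_lego_id(b"1"))
```

Sending one printable digit keeps the message as short as possible and makes it easy to read in a serial monitor.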

The LoRaWAN receiver, the MKR1300, took quite a long time to update the message from the MKR1310 transmitter, as the timestamps on the OLED in the video show. It took almost 30 seconds to receive one LoRaWAN packet, with an RSSI of -22 dBm indoors.

Here is the source code for the Nicla Vision module:
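The post does not establish where the 30-second delay comes from. One thing that can be checked on paper is how long a single packet occupies the air and what minimum send interval a regional duty-cycle limit would impose. The sketch below uses the LoRa time-on-air formula from Semtech's SX127x datasheets. The spreading factor, 125 kHz bandwidth, 4/5 coding rate, 8-symbol preamble, the 14-byte payload (assumed: 1 application byte plus 13 bytes of LoRaWAN MAC overhead) and the 1% duty cycle (typical of EU868 sub-bands) are all assumptions, because the radio settings and region are not stated here.

```python
import math


def lora_time_on_air_ms(payload_bytes, sf=7, bw_hz=125_000, cr=1,
                        preamble_symbols=8, explicit_header=True,
                        crc_on=True, low_dr_optimize=None):
    """Time on air of one LoRa packet in milliseconds (Semtech SX127x formula).

    cr is 1..4 for coding rates 4/5..4/8. All defaults are assumptions.
    """
    t_sym_ms = (2 ** sf) / bw_hz * 1000.0
    if low_dr_optimize is None:
        # Low data rate optimization is normally enabled when a symbol exceeds 16 ms
        low_dr_optimize = t_sym_ms > 16.0
    de = 1 if low_dr_optimize else 0
    ih = 0 if explicit_header else 1
    crc = 1 if crc_on else 0

    t_preamble_ms = (preamble_symbols + 4.25) * t_sym_ms
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * crc - 20 * ih
    payload_symbols = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (cr + 4), 0)
    return t_preamble_ms + payload_symbols * t_sym_ms


def min_interval_s(time_on_air_ms, duty_cycle=0.01):
    """Shortest allowed gap between packet starts under a duty-cycle limit."""
    return time_on_air_ms / duty_cycle / 1000.0


for sf in (7, 10, 12):
    toa = lora_time_on_air_ms(14, sf=sf)
    print("SF%d: %.1f ms on air, >= %.1f s between packets at 1%%"
          % (sf, toa, min_interval_s(toa)))
```

Under these assumptions one packet takes about 46 ms at SF7 and about 1.16 s at SF12, so a 1% duty cycle would allow roughly one packet every 4.6 s or 116 s. Comparing such figures with the observed gap may help separate radio limits from delays in the firmware.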

# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((120, 120)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

from machine import I2C

def Lego_ID(i):

if i == 0:

print ("It is a big dinaosaur!")

elif i == 1:

print ("It is a boy!")

elif i == 2:

print ("It is a mice!")

else:

print ("It is a small dinaosaur!")

def Lego_ID2MKR1310(i):

if i == 0:

return b'0'

elif i == 1:

return b'1'

elif i == 2:

return b'2'

else:

return b'3'

net = None

labels = None

bigDinosaur_classify_data = 1.0 # item 0

boy_classify_data = 1.0 # item 1

mice_classify_data = 1.0 # item 2

tinyDinosaur_classify_data = 1.0 # item 3

boy_search = 0.0

try:

# Load built in model

labels, net = tf.load_builtin_model('trained')

except Exception as e:

raise Exception(e)

# I2C

i2c = I2C(1,freq=100000)

print(i2c)

#print(i2c.scan())

#address =i2c.scan()[0]

address = 14

print(address)

data = i2c.readfrom(address, 7)

print(data)

data = 1

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

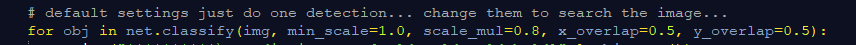

# default settings just do one detection... change them to search the image...

for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

boy_search = 0.0

boy_id = 0

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

if boy_search <= predictions_list[i][1]:

boy_search = predictions_list[i][1]

boy_id = i

# print("boy_search = %f" % (predictions_list[i][1]))

else:

boy_search = boy_search

boy_id = boy_id

Lego_ID(boy_id)

print(clock.fps(), "fps")

i2c.writeto(address,Lego_ID2MKR1310(boy_id))
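The inner loop that picks `boy_id` is an argmax over the confidence scores. A more compact form is sketched below; `best_prediction` is a hypothetical helper, not part of the original script. For non-negative scores it keeps the original tie-breaking (the later index wins on equal scores, as with the `<=` comparison). MicroPython's `max()` is expected to accept a `key` argument as well, but treat that as an assumption for the Nicla Vision firmware.

```python
def best_prediction(predictions_list):
    """Return (index, label, score) for the highest score in a list of
    (label, score) tuples. Equal scores resolve to the later index."""
    if not predictions_list:
        raise ValueError("predictions_list is empty")
    # Sorting key (score, index) makes the later index win a tie
    best_i = max(range(len(predictions_list)),
                 key=lambda i: (predictions_list[i][1], i))
    label, score = predictions_list[best_i]
    return best_i, label, score


# Example with made-up labels and scores
preds = [("big dinosaur", 0.05), ("boy", 0.80), ("mice", 0.10), ("small dinosaur", 0.05)]
print(best_prediction(preds))
```

Returning the score alongside the index also makes it easy to log or threshold the confidence later.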

Conclusion: ZinnBee is able to identify 4 Lego objects and transfer their IDs over LoRaWAN on the bench. The overall performance is good enough for a proof-of-concept experiment for now.

2. Summary of ZinnBee Project

The ZinnBee project explored the Arduino Nicla Vision module, the MKR1310/1300 for LoRaWAN applications, and the Edge Impulse® ML process. Most of the design challenges lay outside my background, which meant I had to learn almost everything to get the building blocks up and running, for example the LoRaWAN module and the Edge Impulse® ML process. From what I learned over the past 4-5 months, LoRaWAN is a good communication channel for the Save The Bees application. However, camera-based ML applications will need a lot of work to improve their performance and reliability.

Note: I will update this blog by the end of the week, for example by uploading my source code to GitHub and perhaps adding more thoughts on ML topics.

Follow-up discussion on the ML topic:

Fig 2 shows the Edge Impulse classification performance on paper:

Fig 2. Edge Impulse Training Model Performance

In the ZinnBee bench experiment with a limited data set (40 images per object), the Nicla Vision camera detected the correct object as long as the captured image matched the data set. One thing I am not quite clear about is how much estimation margin Edge Impulse ML can tolerate. I know there are many factors I can play with, such as the scaling and overlap arguments (min_scale, scale_mul, x_overlap, y_overlap) of the net.classify() call in the source code above.

It looks like the Save The Bees project has raised more questions than answers about ML algorithms.
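To get a feel for what those arguments do, here is a rough Python sketch of a multi-scale sliding window, written from the OpenMV documentation's description of `min_scale`, `scale_mul`, `x_overlap` and `y_overlap`. It is an approximation, not OpenMV's actual implementation, and the function name `sliding_windows` is hypothetical. With the settings used in the ZinnBee script (`min_scale=1.0`), it produces a single window covering the whole 120x120 frame, which is consistent with the script's comment that the default settings do just one detection.

```python
def sliding_windows(roi_w, roi_h, min_scale=1.0, scale_mul=0.8,
                    x_overlap=0.5, y_overlap=0.5):
    """Return (x, y, w, h) windows that would be classified.

    Assumed behaviour: start at scale 1.0, multiply by scale_mul while the
    scale stays >= min_scale, and slide each window size across the ROI with
    the given fractional overlap.
    """
    if not 0.0 < min_scale <= 1.0:
        raise ValueError("min_scale must be in (0, 1]")
    if not 0.0 < scale_mul < 1.0:
        raise ValueError("scale_mul must be in (0, 1)")
    windows = []
    scale = 1.0
    while scale >= min_scale:
        w = int(roi_w * scale)
        h = int(roi_h * scale)
        # Step by the non-overlapping part of the window, at least 1 pixel
        x_step = max(1, int(w * (1.0 - x_overlap)))
        y_step = max(1, int(h * (1.0 - y_overlap)))
        for y in range(0, roi_h - h + 1, y_step):
            for x in range(0, roi_w - w + 1, x_step):
                windows.append((x, y, w, h))
        scale *= scale_mul
    return windows


print(len(sliding_windows(120, 120)))                 # ZinnBee settings
print(len(sliding_windows(120, 120, min_scale=0.5)))  # also search smaller objects
```

Lowering `min_scale` adds smaller windows (here scales of roughly 1.0, 0.8, 0.64 and 0.51), so the network gets more chances to find an object that fills only part of the frame, at the cost of more inferences per snapshot and a lower frame rate.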

ZinnBee Blog#01: Introduction of the project to Save the Bees

ZinnBee Blog#02: Initial Project Structure

ZinnBee Blog#03: LoRa WAN Communication Framework

ZinnBee Blog#04: First Impression of Arduino Nicla Vision Machine Learning Process

ZinnBee Blog#05: I2C Bus Between Arduino Nicla Vision Module and MKR1310