The new machine-learning technique can improve the controllability of robots, such as drones. (Image Credit: NoName_13/pixabay)

MIT and Stanford University researchers created a new machine-learning technique that lets robots be controlled more effectively and efficiently in dynamic environments where conditions change rapidly. The method builds a specific control-theory structure into the learning process, so the resulting model is well suited to controlling complex dynamics, such as the effect of wind on a flying vehicle’s trajectory. It could help autonomous vehicles travel on slippery roads without skidding, allow a drone to closely follow a downhill skier despite strong winds, and enable a free-flying robot to tow objects in space.

“The focus of our work is to learn intrinsic structure in the dynamics of the system that can be leveraged to design more effective, stabilizing controllers,” says Navid Azizan, the Esther and Harold E. Edgerton Assistant Professor in the MIT Department of Mechanical Engineering and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS). “By jointly learning the system’s dynamics and these unique control-oriented structures from data, we’re able to naturally create controllers that function much more effectively in the real world.”

The team’s approach extracts an effective controller directly from the learned model, rather than learning the controller in a separate, additional step as many other machine-learning techniques do. The method also needs less data to learn an effective controller, which may help the system reach good performance faster in rapidly changing environments.

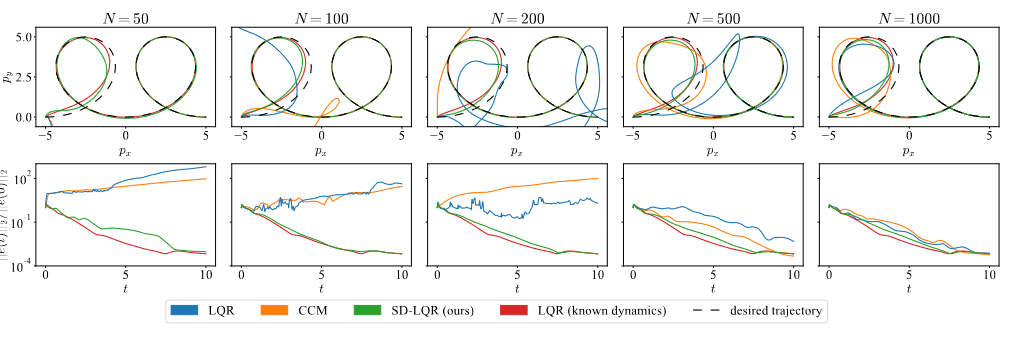

This figure shows the results of the team’s technique on a double loop-the-loop trajectory. (Image Credit: MIT/Stanford University)

“We found that beyond learning the dynamics, it’s also essential to learn the control-oriented structure that supports effective controller design. Our approach of learning state-dependent coefficient factorizations of the dynamics has outperformed the baselines in terms of data efficiency and tracking capability, proving to be successful in efficiently and effectively controlling the system’s trajectory,” Azizan says.
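To make the idea of a state-dependent coefficient factorization more concrete, here is a minimal Python sketch. It is not the researchers' code, and nothing in it is learned from data: the pendulum dynamics, the function names, and the cost weights `q1`, `q2`, and `r` are all assumptions chosen for illustration. The sketch rewrites the nonlinear dynamics f(x) as A(x)·x and then computes a feedback gain from A(x) at every state. It does so with a state-dependent Riccati equation, a classical control technique used here as a stand-in for how a controller can be read off from such a structure.

```python
import math


def sinc(theta):
    """Return sin(theta)/theta, using the limit value 1 at theta = 0."""
    return 1.0 if abs(theta) < 1e-8 else math.sin(theta) / theta


def dynamics(x, u):
    """Hypothetical pendulum with unit constants: theta'' = -sin(theta) + u."""
    theta, omega = x
    return (omega, -math.sin(theta) + u)


def sdc_matrix(x):
    """State-dependent matrix A(x) such that dynamics(x, 0) equals A(x) x."""
    theta, _ = x
    return ((0.0, 1.0), (-sinc(theta), 0.0))


def sdre_gain(x, q1=1.0, q2=1.0, r=1.0):
    """Closed-form Riccati gain for A(x) = [[0, 1], [a, 0]] and B = [0, 1]^T.

    Assumes the state cost is diag(q1, q2) and the input cost is r.
    """
    a = sdc_matrix(x)[1][0]
    k1 = a + math.sqrt(a * a + q1 / r)
    k2 = math.sqrt(2.0 * k1 + q2 / r)
    return (k1, k2)


def simulate(x0, dt=0.01, steps=2000):
    """Forward-Euler rollout under the state-dependent feedback u = -K(x) x."""
    x = x0
    for _ in range(steps):
        k1, k2 = sdre_gain(x)
        u = -(k1 * x[0] + k2 * x[1])
        dx = dynamics(x, u)
        x = (x[0] + dt * dx[0], x[1] + dt * dx[1])
    return x


if __name__ == "__main__":
    # Start far from the rest position and print the final (theta, omega).
    print(simulate((2.0, 0.0)))
```

In this toy example A(x) is written by hand. In a learning-based setting, A(x) would instead be fit from measured trajectories, and the same gain computation could then be applied to the learned matrix.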

In tests, the researchers found that their controller tracked the desired trajectories closely and outperformed baseline methods. Its performance came close to that of a ground-truth controller. “By making simpler assumptions, we got something that actually worked better than other complicated baseline approaches,” says lead author Spencer M. Richards, a graduate student at Stanford University. The approach also proved data-efficient, performing at a high level with little data. In one case, it needed only 100 data points to model a highly dynamic rotor-driven vehicle. By contrast, the performance of techniques with multiple learned components suffered more when they were trained on smaller datasets.

With that efficiency, a drone or robot could learn quickly in a rapidly changing environment. The method could also be applied to other dynamical systems, such as robotic arms and free-flying spacecraft in low-gravity environments. Next, the researchers want to create more interpretable models that can identify specific information about a dynamical system, potentially resulting in higher-performance controllers.

“Despite its ubiquity and importance, nonlinear feedback control remains an art, making it especially suitable for data-driven and learning-based methods. This paper makes a significant contribution to this area by proposing a method that jointly learns system dynamics, a controller, and control-oriented structure,” says Nikolai Matni, an assistant professor in the Department of Electrical and Systems Engineering at the University of Pennsylvania, who was not involved with this work. “What I found particularly exciting and compelling was the integration of these components into a joint learning algorithm, such that control-oriented structure acts as an inductive bias in the learning process. The result is a data-efficient learning process that outputs dynamic models that enjoy intrinsic structure that enables effective, stable, and robust control. While the technical contributions of the paper are excellent themselves, it is this conceptual contribution that I view as most exciting and significant.”

Have a story tip? Message me at: http://twitter.com/Cabe_Atwell