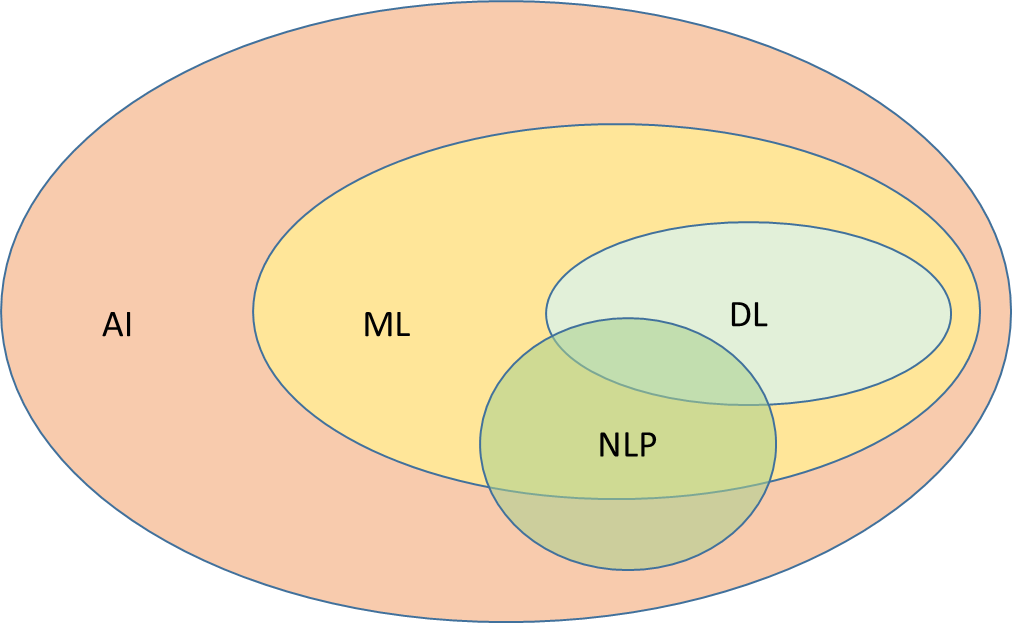

Natural language processing (NLP) is an interdisciplinary field that makes use of computers to comprehend or process human (or natural) languages. NLP uses many artificial intelligence (AI) techniques, including several types of machine learning (ML). Automatic speech recognition (ASR) is an application of NLP that also uses several ML techniques.

NLP Techniques

NLP techniques are based on six basic principles. Let's discuss them in this section.

- Stemming: This family of algorithms strips common prefixes and suffixes from a word to reduce it to its stem (root form). The resulting stem is not always a valid dictionary word.

- Lemmatization: These algorithms overcome flaws inherent in stemming. Lemmatization incorporates grammar and linguistic knowledge to reduce a word to its lemma (its dictionary base form), leading to more accurate results.

- Keyword extraction: Also known as keyword analysis or keyword detection, this is an NLP text analysis technique for automatically extracting the most frequent and most relevant words and expressions from a body of text.

- Named Entity Recognition (NER): NER is a fundamental NLP technique that identifies and extracts entities, such as names, dates, and places, from a text body.

- Topic Modelling: A topic model can be built with several algorithms. Latent Dirichlet Allocation (LDA) is the most common method. With LDA, the text is broken into words, each document is treated as a mixture of topics, and each topic is inferred as a group of words that tend to occur together.

- Sentiment Analysis: This method's core function is to extract the sentiment behind a body of text by analyzing the words it contains. Through this analysis, the text can be classified as having negative, positive, or neutral sentiment.
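Several of the techniques listed above can be illustrated with a few lines of plain Python. The sketch below is a toy, not a production approach: the suffix list, stopword set, and sentiment lexicons are hypothetical and far too small for real text, and the function names are chosen here for illustration only.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny resources (assumptions for illustration).
SUFFIXES = ("ing", "ed", "es", "s")
STOPWORDS = {"the", "a", "is", "and", "of", "to", "was", "it", "in"}
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "poor", "hate"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def stem(word):
    """Crude stemming: strip the first matching suffix, keeping at least 3 letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def keywords(text, top_n=3):
    """Keyword extraction by frequency of stems, ignoring stopwords."""
    stems = [stem(t) for t in tokenize(text) if t not in STOPWORDS]
    return [word for word, _ in Counter(stems).most_common(top_n)]


def sentiment(text):
    """Lexicon-based sentiment: count positive words minus negative words."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(stem("jumping"), stem("chased"))
print(keywords("The dog chased the cats and the cat chased dogs"))
print(sentiment("I love this great phone"))
```

Note that this stemmer turns "chased" into "chas", which is not a real word. That kind of flaw is what lemmatization addresses by consulting linguistic knowledge instead of stripping characters.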

NLP Building Blocks: Word Embeddings, RNN, and LSTM

By combining and varying neural network architectures, advanced NLP algorithms can be created. Machine learning-based NLP algorithms (and most AI) are built on directed acyclic graph (DAG) networks, which comprise artificial neurons arranged in connected layers that feed forward into the output layer. These models are loosely inspired by how neuroscientists think our brains work.

Word Embeddings

Neural networks operate on numerical vectors, but words are not numerical. Word embeddings are a set of language modeling techniques that convert vocabulary words into numerical vectors. This type of processing might comb through thousands of words, identifying words that often appear close together and assigning them similar numerical vectors.
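As a rough illustration of the idea, the sketch below builds word vectors from simple co-occurrence counts and compares them with cosine similarity. This is an assumption-laden stand-in: real embedding methods learn dense vectors from large corpora, and the four-sentence corpus, the window size, and the function names here are hypothetical.

```python
import math


def cooccurrence_vectors(sentences, window=2):
    """Represent each word by counts of the words seen within `window` positions."""
    vocab = sorted({word for sentence in sentences for word in sentence})
    index = {word: i for i, word in enumerate(vocab)}
    vectors = {word: [0.0] * len(vocab) for word in vocab}
    for sentence in sentences:
        for i, word in enumerate(sentence):
            start = max(0, i - window)
            end = min(len(sentence), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][index[sentence[j]]] += 1.0
    return vectors


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Toy corpus (hypothetical): "cat" and "dog" appear in similar contexts.
corpus = [
    "the cat drinks milk".split(),
    "the dog drinks water".split(),
    "the cat chases the dog".split(),
    "stocks fell on the market".split(),
]
vecs = cooccurrence_vectors(corpus)

print(cosine(vecs["cat"], vecs["dog"]))
print(cosine(vecs["cat"], vecs["market"]))
```

With this corpus, "cat" ends up closer to "dog" than to "market", because the first two share more neighboring words.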

Recurrent Neural Network (RNN)

A Recurrent Neural Network (RNN) takes past data into consideration. In an RNN, the output is looped back into the input, so the network can take past states into account. In NLP, an RNN is useful for determining context. For example, the sentence “Bill thought the dog was huge, but it was not that big” contains the words “huge” and “big”, which might lead a computer to think that the dog was very large. An RNN can pick up on the words “but” and “not”, which reverse that meaning, and use them to better determine the meaning of the sentence.
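The feedback loop can be sketched with a single scalar hidden state. This is a minimal Elman-style recurrence, assuming hand-picked weights (`w_x`, `w_h`, `b` are illustrative values, not trained parameters) and numbers standing in for word vectors.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(inputs, w_x=1.0, w_h=0.5, b=0.0):
    """Feed inputs one at a time, looping each hidden state back into the next step."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# The second input is zero, yet the second state is not:
# it still carries information from the first input.
print(run_rnn([1.0, 0.0]))
```

Because each state depends on the previous one, the inputs must be processed in order, one step at a time.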

Long Short-Term Memory (LSTM)

Not every word matters equally when an RNN processes a sentence. To discard information that is unlikely to be important, a Long Short-Term Memory (LSTM) network is employed. An LSTM cell has three gates: the forget gate, the input (update) gate, and the output gate, each with a distinct purpose. These gates work in conjunction to determine which information in the cell state should be kept, updated, or forgotten.
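A single step of a standard LSTM cell can be sketched with scalars. In the usual formulation, the three controls are the forget, input (update), and output gates. The parameter dictionary `p` and its key names are hypothetical, and the values used below are chosen by hand to make the gating visible rather than learned.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step; p holds weights (w*, u*) and biases (b*) per gate."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input (update) gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    c = f * c_prev + i * g   # keep part of the old cell state, add part of the new
    h = o * math.tanh(c)     # expose part of the cell state as the hidden state
    return h, c


# Hand-picked parameters (assumptions): all weights 1, biases set per scenario.
base = {k: 1.0 for k in ("wf", "uf", "wi", "ui", "wg", "ug", "wo", "uo")}
keep = dict(base, bf=100.0, bi=-100.0, bg=0.0, bo=0.0)     # remember, ignore input
forget = dict(base, bf=-100.0, bi=-100.0, bg=0.0, bo=0.0)  # forget, ignore input

print(lstm_step(0.5, 0.0, 2.0, keep))
print(lstm_step(0.5, 0.0, 2.0, forget))
```

With the forget gate saturated open, the previous cell state passes through almost unchanged. With it saturated closed, the cell state is wiped.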

Language Understanding: Transformer and BERT

Notable breakthroughs in NLP architecture led to the Transformer, an architecture enabling new NLP models. Rather than relying on recurrence, as in the RNN, or convolution, as in the CNN (Convolutional Neural Network), the Transformer is built on attention mechanisms. The Transformer model has the advantage of being non-sequential, meaning the input sequence does not need to be processed one element at a time in order.

This innovation allows Transformers to be parallelized and scaled more than preceding NLP models. Considerable research is being dedicated to Transformer models because of their superior speed and performance relative to traditional models, which translates into new use cases in organizations. The original Transformer and BERT papers describe these models in detail.
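The non-sequential property comes from attention, which lets every position look at every other position in one pass. The sketch below is a simplified scaled dot-product self-attention in plain Python. As an assumption made for brevity, the input vectors serve directly as queries, keys, and values, whereas a real Transformer applies learned projections and uses multiple heads.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Each output is a weighted average of all inputs; weights come from dot products."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:  # each position is independent, so this loop could run in parallel
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        )
    return outputs


# Two toy 2-dimensional "token" vectors (hypothetical values).
print(self_attention([[1.0, 0.0], [0.0, 1.0]]))
```

Unlike the recurrent loop in an RNN, no iteration here depends on the result of a previous one, which is what makes the computation easy to parallelize.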

Google AI Language researchers introduced and subsequently open-sourced Bidirectional Encoder Representations from Transformers (BERT) in 2018. The BERT model's key innovation is applying bidirectional training of the Transformer to language modeling.

In contrast to directional models, which read text input sequentially (left to right or right to left), the Transformer encoder reads the whole input at once, so each word is interpreted using the context on both sides. The BERT results show that a bidirectionally trained language model has a deeper sense of language context and flow than single-direction language models. BERT model variants are highly successful in various NLP tasks, including document classification, textual entailment, and sentiment analysis.
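The difference between the two reading strategies can be shown by listing which positions each token is allowed to see. This is only a schematic of the visibility pattern, not BERT's actual code, and the function name and example sentence are chosen here for illustration.

```python
def visible_positions(seq_len, bidirectional):
    """For each position, return the list of positions it may use as context."""
    if bidirectional:
        # Every token sees the whole sequence, left and right.
        return [list(range(seq_len)) for _ in range(seq_len)]
    # Left-to-right model: each token sees only itself and earlier tokens.
    return [list(range(i + 1)) for i in range(seq_len)]


tokens = ["the", "dog", "was", "not", "big"]
for name, flag in (("left-to-right", False), ("bidirectional", True)):
    context = visible_positions(len(tokens), flag)[1]  # context for "dog"
    print(name, [tokens[i] for i in context])
```

In the left-to-right case, the representation of "dog" cannot take "not big" into account, while in the bidirectional case it can.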

NLP Applications

Typical NLP applications include speech recognition, understanding spoken language, and dialogue systems.

Fighting Online Bullying and Hate Speech: Facebook trained a BERT-based model on multiple languages simultaneously. The model builds a statistical picture of what bullying or hate speech looks like in several languages. Facebook employs automatic content monitoring tools for numerous languages. Its model, RoBERTa, a variant of BERT, enables the company to automatically block unwanted content and reportedly reduce such incidents by 70%.

NLP-Powered Chatbots: Using NLP allows chatbots to understand a wide range of questions. Because humans do not adhere to formulas or keywords when chatting, NLP algorithms are used to decipher what a user is asking. AI-powered chatbots are widely employed and are becoming more useful as the technology advances.