Introduction

This blog post provides details on how to perform stereo calibration for the Ultra96-V2 development board with Dual-Camera Mezzanine.

Getting Started with the pre-built image

If you want to re-build the Vivado 2021.2 and PetaLinux 2021.2 projects yourself, refer to the following blog post:

If you want to start from a pre-built image, use the 2021.2 design that is augmented with Vitis-AI 2.0, available in the following project:

Cloning the dualcam python examples repository

With the SD card image programmed on hardware, and the Ultra96-V2 configured for Wi-Fi access, clone the following repository:

$ git clone -b 2021.2 https://github.com/AlbertaBeef/avnet_dualcam_python_examples

$ cd avnet_dualcam_python_examples

Stereo Calibration Theory

This tutorial will not cover the theory behind stereo calibration, as this topic is well documented online.

For more details on camera calibration and stereo calibration, refer to the following articles from LearnOpenCV:

- https://learnopencv.com/camera-calibration-using-opencv/

- https://learnopencv.com/introduction-to-epipolar-geometry-and-stereo-vision/

- https://learnopencv.com/making-a-low-cost-stereo-camera-using-opencv/

In summary, the goal of the calibration process is to understand the mathematical model between 3D coordinates in the real-world, and 2D coordinates in captured images.
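As a rough illustration of that 3D-to-2D model, the sketch below projects a point expressed in camera coordinates onto the image plane with an ideal pinhole model. The function name `project_point` and the intrinsic values are assumptions chosen for this example (round numbers for a 1280x800 sensor), and lens distortion is deliberately ignored.

```python
def project_point(point_3d, fx, fy, cx, cy):
    """Project a 3D point (camera coordinates) to pixel coordinates.

    Ideal pinhole model: lens distortion is ignored.
    """
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Illustrative intrinsics (assumed values, not taken from a real calibration).
FX, FY, CX, CY = 860.0, 860.0, 640.0, 400.0

# A point on the optical axis projects to the optical center.
print(project_point((0.0, 0.0, 100.0), FX, FY, CX, CY))

# A point 10 units to the right at depth 100 shifts by fx * 10 / 100 pixels.
print(project_point((10.0, 0.0, 100.0), FX, FY, CX, CY))
```

Calibration estimates the real values of fx, fy, cx and cy (and the distortion terms left out here) from the captured chart images.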

Camera calibration allows removal of undesirable effects such as lens distortion:

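For stereo calibration, the two cameras are further calibrated relative to each other, so that their images can be rectified according to the epipolar geometry of the pair.

After rectification, each point in one image appears on the same horizontal line in the other image. This reduces the search for matching points to a single row, and makes the depth estimation problem mathematically feasible.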
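For an ideal rectified pair, the depth of a point follows directly from its horizontal shift between the two images. The symbols below are notation introduced here for illustration (f: focal length in pixels, B: baseline between the two cameras, d: disparity in pixels, Z: depth in the same unit as B), and the relation assumes a distortion-free pinhole model.

```latex
Z = \frac{f \, B}{d}, \qquad d = u_{\text{left}} - u_{\text{right}}
```

Since d appears in the denominator, distant objects produce small disparities, so a small rectification error has a large effect on their estimated depth.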

Most calibration algorithms make use of a chessboard image, since its geometry is known in the 3D real world and its corners are easy to detect in captured images.

My favorite calibration procedure is the one presented by Luxonis for their stereo OAK-D camera.

Luxonis make use of a chessboard augmented with ArUco markers, which makes the calibration chart detection faster and more efficient.

They also provide a guided sequence of 13 poses, which makes the entire calibration more user friendly.

This tutorial is inspired by the above existing calibration techniques.

Printing the Calibration Chart

As mentioned before, we will be using a chessboard image augmented with ArUco markers, also called the ChArUco chart.

Print the following chart on a sheet of paper:

Next, fix the chart on a rigid surface in order to eliminate all warps in the chart. This is important, as imperfections will affect the calibration accuracy.

Measure the real size of the chessboard squares, and ArUco markers.

For the example images captured in the repo, the measurements were:

- square size = 21.5mm

- marker size = 16.5mm

Performing Stereo Calibration

The stereo calibration procedure is performed by the following script:

The calibration script guides the user to capture 13 pairs of images (called left_p[0-12].png and right_p[0-12].png, respectively), which have the calibration chart placed in the following general orientation:

For each capture, it is important to have the calibration chart facing the dual camera mezzanine, in the approximate position illustrated, and visible in both left and right images.

Examples of the captured image pairs can be viewed in the following directories:

- https://github.com/AlbertaBeef/avnet_dualcam_python_examples/tree/2021.2/stereo_data/left

- https://github.com/AlbertaBeef/avnet_dualcam_python_examples/tree/2021.2/stereo_data/right

The calibration output corresponding to these images is provided in the following file:

This file contains the following calibrated data:

- Camera Calibration data (for each camera)
  - Lens Distortion Coefficients
    - D1/D2 (radial and tangential distortion)
  - K1/K2 (focal lengths fx and fy, optical center)
  - Extrinsic Matrices (real world to camera)
    - R1/R2 (rotation & translation matrix)
  - Projection Matrices
    - P1/P2
- Stereo Calibration data
  - Rotation Matrix
    - R
  - Translation Matrix
    - T
  - Essential Matrix
    - E = [T]x × R, where [T]x is the skew-symmetric (cross-product) matrix of T
  - Fundamental Matrix
    - F = inv(K2)^T × E × inv(K1), where ^T denotes the transpose
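The essential and fundamental matrix relations listed above can be checked numerically. The sketch below is only an illustration in plain Python, not the calibration script's implementation: the helper names (`skew`, `essential`, `fundamental`, and so on) are made up for this example, the R, T, M1 and M2 values are copied from the sample output shown later in this post, and it assumes the OpenCV convention x2 = R·x1 + T with zero-skew camera matrices.

```python
def skew(t):
    """Return the 3x3 cross-product (skew-symmetric) matrix [t]x."""
    tx, ty, tz = t
    return [[0.0, -tz, ty],
            [tz, 0.0, -tx],
            [-ty, tx, 0.0]]


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, v):
    """Multiply a matrix by a vector."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def inv_camera_matrix(k):
    """Invert a zero-skew camera matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]."""
    fx, fy, cx, cy = k[0][0], k[1][1], k[0][2], k[1][2]
    return [[1.0 / fx, 0.0, -cx / fx],
            [0.0, 1.0 / fy, -cy / fy],
            [0.0, 0.0, 1.0]]


def essential(r, t):
    """E = [T]x R."""
    return matmul(skew(t), r)


def fundamental(e, k1, k2):
    """F = inv(K2)^T E inv(K1)."""
    return matmul(matmul(transpose(inv_camera_matrix(k2)), e),
                  inv_camera_matrix(k1))


def to_pixel(k, point):
    """Project a 3D point to homogeneous pixel coordinates [u, v, 1]."""
    x, y, z = matvec(k, point)
    return [x / z, y / z, 1.0]


# Values copied from the sample calibration output in this post.
R = [[0.99997, -0.000781, 0.007665],
     [0.000703, 0.999948, 0.010127],
     [-0.007672, -0.010122, 0.999919]]
T = [-4.934046, -0.006215, 0.06571]
K1 = [[863.30426, 0.0, 645.5743], [0.0, 863.8515, 399.63293], [0.0, 0.0, 1.0]]
K2 = [[862.2782, 0.0, 649.1176], [0.0, 862.3503, 398.21323], [0.0, 0.0, 1.0]]

E = essential(R, T)
F = fundamental(E, K1, K2)

# Sanity check: a 3D point seen by both cameras must satisfy p2^T F p1 = 0.
point_left = [10.0, -5.0, 100.0]
point_right = [a + b for a, b in zip(matvec(R, point_left), T)]
p1 = to_pixel(K1, point_left)
p2 = to_pixel(K2, point_right)
residual = sum(p2[i] * matvec(F, p1)[i] for i in range(3))
print(f"epipolar residual: {residual:.2e}")
```

The residual is zero up to floating-point rounding, which is the epipolar constraint that both matrices encode.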

To run the calibration procedure, invoke the script as follows:

$ python3 avnet_dualcam_ar0144_stereo_calibrate.py --squareSizeCm 2.15 --markerSizeCm 1.65 --invertHorizontal

The provided arguments are defined as follows:

- squareSizeCm : chessboard square size (defined previously as 21.5mm, provided in cm)

- markerSizeCm : marker size (defined previously as 16.5mm, provided in cm)

- invertHorizontal : invert images horizontally to facilitate placement of calibration chart
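Since the chart is measured in millimetres but the script expects centimetres, a small helper can avoid conversion mistakes. This is a minimal sketch: the function names `mm_to_cm` and `build_calibrate_command` are hypothetical, while the script name and its three arguments are the ones shown above.

```python
import shlex


def mm_to_cm(value_mm):
    """Convert millimetres to centimetres."""
    return value_mm / 10.0


def build_calibrate_command(square_mm, marker_mm, invert_horizontal=True):
    """Assemble the calibration command line from measurements in mm."""
    args = [
        "python3",
        "avnet_dualcam_ar0144_stereo_calibrate.py",
        "--squareSizeCm", f"{mm_to_cm(square_mm):g}",
        "--markerSizeCm", f"{mm_to_cm(marker_mm):g}",
    ]
    if invert_horizontal:
        args.append("--invertHorizontal")
    return shlex.join(args)


# Measurements of the printed chart, in millimetres.
print(build_calibrate_command(21.5, 16.5))
```

With the measurements used in this post, the printed command matches the invocation shown above.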

The calibration procedure consists of two modes, which are both executed by default:

- capture, where 13 pairs of images of size 1280x800 will be captured and saved in the following directories:

- ./stereo_data/left

- ./stereo_data/right

- process, where the stereo calibration will be performed, and results saved in the following file:

- ./stereo_data/calib/dualcam_stereo.yml

The first image displayed will be an informative message indicating which keys to press during the capture mode.

Next, the capture process will query for 13 poses, as illustrated in this video:

For each capture, it is important to have the calibration chart facing the dual camera mezzanine, in the approximate position illustrated, and entirely visible in both left and right images.

Once the capture mode is finished, the process mode will automatically start.

Note that it is possible to execute the capture and process modes separately using the following arguments:

- --mode capture : only execute capture (image capture)

- --mode process : only execute process (calibration)
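When running the process mode on its own, it can help to first confirm that every pose has both a left and a right image. The sketch below is an assumption-laden helper, not part of the repository: the function names are hypothetical, the directory layout is the ./stereo_data/left and ./stereo_data/right structure described above, and the file name pattern accepts both left_p0.png and left_p0_0.png style names.

```python
import re
from pathlib import Path

# Matches names such as left_p3.png or right_p12_12.png.
POSE_RE = re.compile(r"^(left|right)_p(\d+)")


def pose_indices(directory, side):
    """Return the set of pose numbers found for one side ('left' or 'right')."""
    indices = set()
    for path in Path(directory).glob(f"{side}_p*.png"):
        match = POSE_RE.match(path.name)
        if match and match.group(1) == side:
            indices.add(int(match.group(2)))
    return indices


def missing_pairs(data_dir="./stereo_data", num_poses=13):
    """List the pose numbers that lack a left image, a right image, or both."""
    left = pose_indices(Path(data_dir) / "left", "left")
    right = pose_indices(Path(data_dir) / "right", "right")
    return [i for i in range(num_poses) if i not in left or i not in right]


missing = missing_pairs()
if missing:
    print("Incomplete poses:", missing)
else:
    print("All 13 image pairs are present.")
```

If any pose is reported as incomplete, re-running the capture mode is likely the simplest fix.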

Here is an example output of a complete capture + process session.

$ python3 avnet_dualcam_ar0144_stereo_calibrate.py --squareSizeCm 2.15 --markerSizeCm 1.65 --invertHorizontal

[DualCam] Looking for devices corresponding to AP1302
/dev/video2
/dev/media1
ap1302.0-003c

[DualCam] Detected SYZYGY dualcam (sensors placed left-right on board)

[DualCam] Initializing capture pipeline for ar0144_dual 1280 800
media-ctl -d /dev/media1 -V "'ap1302.0-003c':2 [fmt:UYVY8_1X16/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0000000.mipi_csi2_rx_subsystem':0 [fmt:UYVY8_1X16/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0000000.mipi_csi2_rx_subsystem':1 [fmt:UYVY8_1X16/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0010000.v_proc_ss':0 [fmt:UYVY8_1X16/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0010000.v_proc_ss':1 [fmt:RBG24/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0040000.v_proc_ss':0 [fmt:RBG24/2560x800 field:none]"
media-ctl -d /dev/media1 -V "'b0040000.v_proc_ss':1 [fmt:RBG24/2560x800 field:none]"

[DualCam] Disabling Auto White Balance
v4l2-ctl --set-ctrl white_balance_auto_preset=0 -d /dev/video2

[DualCam] Opening cv2.VideoCapture for 2560 800

Starting image capture. Press the [ESC] key to abort.
Will take 13 total images, 1 per each polygon.
800 1280
Capturing ------------------------
Markers count ... 44
py: Saved image as: left_p0_0.png
Capturing ------------------------
Markers count ... 44
py: Saved image as: right_p0_0.png
Images captured --> 0
Capturing ------------------------
Markers count ... 34
py: Saved image as: left_p1_1.png
Capturing ------------------------
Markers count ... 39
py: Saved image as: right_p1_1.png
Images captured --> 1
Capturing ------------------------
Markers count ... 42
py: Saved image as: left_p2_2.png
Capturing ------------------------
Markers count ... 42
py: Saved image as: right_p2_2.png
Images captured --> 2
Capturing ------------------------
Markers count ... 43
py: Saved image as: left_p3_3.png
Capturing ------------------------
Markers count ... 40
py: Saved image as: right_p3_3.png
Images captured --> 3
Capturing ------------------------
Markers count ... 42
py: Saved image as: left_p4_4.png
Capturing ------------------------
Markers count ... 37
py: Saved image as: right_p4_4.png
Images captured --> 4
Capturing ------------------------
Markers count ... 40
py: Saved image as: left_p5_5.png
Capturing ------------------------
Markers count ... 44
py: Saved image as: right_p5_5.png
Images captured --> 5
Capturing ------------------------
Markers count ... 26
py: Saved image as: left_p6_6.png
Capturing ------------------------
Markers count ... 38
py: Saved image as: right_p6_6.png
Images captured --> 6
Capturing ------------------------
Markers count ... 42
py: Saved image as: left_p7_7.png
Capturing ------------------------
Markers count ... 39
py: Saved image as: right_p7_7.png
Images captured --> 7
Capturing ------------------------
Markers count ... 44
py: Saved image as: left_p8_8.png
Capturing ------------------------
Markers count ... 39
py: Saved image as: right_p8_8.png
Images captured --> 8
Capturing ------------------------
Markers count ... 44
py: Saved image as: left_p9_9.png
Capturing ------------------------
Markers count ... 44
py: Saved image as: right_p9_9.png
Images captured --> 9
Capturing ------------------------
Markers count ... 43
py: Saved image as: left_p10_10.png
Capturing ------------------------
Markers count ... 41
py: Saved image as: right_p10_10.png
Images captured --> 10
Capturing ------------------------
Markers count ... 41
py: Saved image as: left_p11_11.png
Capturing ------------------------
Markers count ... 31
py: Saved image as: right_p11_11.png
Images captured --> 11
Capturing ------------------------
Markers count ... 35
py: Saved image as: left_p12_12.png
Capturing ------------------------
Markers count ... 37
py: Saved image as: right_p12_12.png
Images captured --> 12

Starting image processing
~~~~~~~~~~~ POSE ESTIMATION LEFT CAMERA ~~~~~~~~~~~~~
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p0_0.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p10_10.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p11_11.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p12_12.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p1_1.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p2_2.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p3_3.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p4_4.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p5_5.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p6_6.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p7_7.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p8_8.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/left/left_p9_9.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p0_0.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p10_10.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p11_11.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p12_12.png
43 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p1_1.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p2_2.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p3_3.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p4_4.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p5_5.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p6_6.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p7_7.png
43 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p8_8.png
44 number of Markers corners detected in the above image
=> Processing image /home/root/avnet_dualcam_python_examples/stereo_data/right/right_p9_9.png
44 number of Markers corners detected in the above image
CAMERA CALIBRATION
(1280, 800)
Camera Matrix initialization.............
[[857.1668 0. 643.9126 ]
 [ 0. 856.0823 387.56018]
 [ 0. 0. 1. ]]
CAMERA CALIBRATION
(1280, 800)
Camera Matrix initialization.............
[[857.1668 0. 643.9126 ]
 [ 0. 856.0823 387.56018]
 [ 0. 0. 1. ]]
~~~~~~~~~~~~~RMS error of left~~~~~~~~~~~~~~
0.27127840713819595
0.2636582695039592
[[863.66644698 0. 644.45998875]
 [ 0. 862.57372353 398.32571049]
 [ 0. 0. 1. ]]
[[862.49062625 0. 651.33360285]
 [ 0. 861.39939047 398.1687972 ]
 [ 0. 0. 1. ]]
[[1.78593490e+01]
 [6.22164495e+01]
 [1.55524760e-04]
 [1.48234433e-03]
 [4.77799154e+01]
 [1.80181985e+01]
 [6.11228981e+01]
 [4.99820866e+01]
 [0.00000000e+00]
 [0.00000000e+00]
 [0.00000000e+00]
 [0.00000000e+00]
 [0.00000000e+00]
 [0.00000000e+00]]
[[ 1.34431671e+01]
 [-6.64473329e+00]
 [ 4.67727647e-04]
 [ 2.80569675e-03]
 [ 6.94820433e+02]
 [ 1.36278105e+01]
 [-7.97379100e+00]
 [ 6.98101470e+02]
 [ 0.00000000e+00]
 [ 0.00000000e+00]
 [ 0.00000000e+00]
 [ 0.00000000e+00]
 [ 0.00000000e+00]
 [ 0.00000000e+00]]
allIds_l
13
13
allIds_l
allIds_r
~~~~~~~~~~~~~RMS error of L-R~~~~~~~~~~~~~~
0.2567820984546291
~~~~~ Starting Stereo Calibration ~~~~~

R1 (left)
[[ 0.999984 0.000614 -0.005638]
 [-0.000585 0.999987 0.005064]
 [ 0.005641 -0.005061 0.999971]]

R2 (right)
[[ 0.999911 0.00126 -0.013316]
 [-0.001327 0.999986 -0.005054]
 [ 0.01331 0.005071 0.999899]]

M1 (left)
[[863.30426 0. 645.5743 ]
 [ 0. 863.8515 399.63293]
 [ 0. 0. 1. ]]

M2 (right)
[[862.2782 0. 649.1176 ]
 [ 0. 862.3503 398.21323]
 [ 0. 0. 1. ]]

R
[[ 0.99997 -0.000781 0.007665]
 [ 0.000703 0.999948 0.010127]
 [-0.007672 -0.010122 0.999919]]

T
[[-4.934046]
 [-0.006215]
 [ 0.06571 ]]

M3 (rgb)
[[0. 0. 0.]
 [0. 0. 0.]
 [0. 0. 0.]]
\R (rgb)
[[0. 0. 0.]
 [0. 0. 0.]
 [0. 0. 0.]]

T (rgb)
[0. 0. 0.]
Took 17 seconds to run image processing.
Mesh path
/home/root/avnet_dualcam_python_examples/depthai_helpers
shape of maps
(800, 1280)
(800, 1280)
(800, 1280)
(800, 1280)
<-----------------Epipolar error of LEFT-right camera---------------->
HU IHER
Printing p1 and p2
[[863.100906 0. 663.396759 0. ]
 [ 0. 863.100906 399.381893 0. ]
 [ 0. 0. 1. 0. ]]
[[ 863.100906 0. 663.396759 -4258.960496]
 [ 0. 863.100906 399.381893 0. ]
 [ 0. 0. 1. 0. ]]
Image name right_p0_0.png
Average Epipolar Error per image on host in right_p0_0.png : 0.07706821986607143
Image name right_p10_10.png
Average Epipolar Error per image on host in right_p10_10.png : 0.05926799774169922
Image name right_p11_11.png
Average Epipolar Error per image on host in right_p11_11.png : 0.05467376708984375
Image name right_p12_12.png
Average Epipolar Error per image on host in right_p12_12.png : 0.08408007902257583
Image name right_p1_1.png
Average Epipolar Error per image on host in right_p1_1.png : 0.07137843540736608
Image name right_p2_2.png
Average Epipolar Error per image on host in right_p2_2.png : 0.06840798514229911
Image name right_p3_3.png
Average Epipolar Error per image on host in right_p3_3.png : 0.0678647722516741
Image name right_p4_4.png
Average Epipolar Error per image on host in right_p4_4.png : 0.06874891008649553
Image name right_p5_5.png
Average Epipolar Error per image on host in right_p5_5.png : 0.05979178292410714
Image name right_p6_6.png
Average Epipolar Error per image on host in right_p6_6.png : 0.07102007184709822
Image name right_p7_7.png
Average Epipolar Error per image on host in right_p7_7.png : 0.10439994118430397
Image name right_p8_8.png
Average Epipolar Error per image on host in right_p8_8.png : 0.06121695382254464
Image name right_p9_9.png
Average Epipolar Error per image on host in right_p9_9.png : 0.08073861258370536
Average Epipolar Error: 0.07134149122344359
Displaying Stereo Pair for visual inspection. Press the [ESC] key to exit.
py: DONE.

After calibration, the epipolar error between left/right image pairs will be reported, along with supporting images for visualization:

NOTE : a large screen is required to view the full image (2x1280 x 800)

If successful, the calibration process will display a green window:

Otherwise, a red window with an error message will be displayed.
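If the console output of the process mode has been saved to a file, the per-image epipolar errors can be summarized to spot the weakest capture. The sketch below is an illustration only: the function names are hypothetical, the regular expression is written against the "Average Epipolar Error per image on host in ..." lines shown in the sample output, and the three sample lines are copied from that output.

```python
import re

# Matches lines such as:
# Average Epipolar Error per image on host in right_p7_7.png : 0.1043...
ERROR_RE = re.compile(
    r"Average Epipolar Error per image on host in (\S+\.png) : ([0-9.]+)"
)


def parse_epipolar_errors(log_text):
    """Return a {image name: epipolar error} dictionary from the log text."""
    return {name: float(value) for name, value in ERROR_RE.findall(log_text)}


def summarize(errors):
    """Return (mean error, name of the image with the largest error)."""
    worst = max(errors, key=errors.get)
    mean = sum(errors.values()) / len(errors)
    return mean, worst


SAMPLE_LOG = """\
Average Epipolar Error per image on host in right_p6_6.png : 0.07102007184709822
Average Epipolar Error per image on host in right_p7_7.png : 0.10439994118430397
Average Epipolar Error per image on host in right_p8_8.png : 0.06121695382254464
"""

errors = parse_epipolar_errors(SAMPLE_LOG)
mean_error, worst_image = summarize(errors)
print(f"mean error: {mean_error:.4f}, worst image: {worst_image}")
```

An image whose error stands out from the others is a reasonable candidate for re-capture before repeating the process mode.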

Performing Stereo Depth Estimation

Once calibrated, the results can be tested with the following software-based depth estimation script:

$ python3 avnet_dualcam_ar0144_stereo_depth.py --calibration_file ./stereo_data/calib/dualcam_stereo.yml

This will display a collage of [left + depth + right] images:

Since the depth estimation is performed in software, it will be slow.

To speed things up, specify a smaller input image size:

$ python3 avnet_dualcam_ar0144_stereo_depth.py --calibration_file ./stereo_data/calib/dualcam_stereo.yml --width 640 --height 480
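To relate disparity values to physical distance, the baseline can be recovered from the rectified projection matrix and combined with the focal length. This is a hedged sketch rather than the depth script's implementation: the function names are hypothetical, the P2 values are copied from the sample calibration output in this post, it assumes the usual OpenCV layout in which P2[0][3] = -fx × baseline for a horizontal pair, and it assumes distances are in centimetres because the chart sizes were given in centimetres.

```python
def baseline_from_projection(p2):
    """Recover the baseline from the rectified right projection matrix.

    Assumes p2[0][3] = -fx * baseline (horizontal stereo pair).
    """
    fx = p2[0][0]
    return -p2[0][3] / fx


def depth_from_disparity(disparity_px, focal_px, baseline):
    """Depth Z = f * B / d for a rectified pair (same unit as the baseline)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline / disparity_px


# P2 copied from the sample calibration output (full 1280x800 resolution).
P2 = [
    [863.100906, 0.0, 663.396759, -4258.960496],
    [0.0, 863.100906, 399.381893, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

baseline_cm = baseline_from_projection(P2)
print(f"baseline: {baseline_cm:.2f} cm")

for disparity in (16, 32, 64):
    depth_cm = depth_from_disparity(disparity, P2[0][0], baseline_cm)
    print(f"disparity {disparity:3d} px -> depth {depth_cm:6.1f} cm")
```

The recovered baseline of roughly 4.93 cm agrees with the magnitude of the translation T reported in the same output. Halving the disparity doubles the estimated depth, which is why distant objects are resolved more coarsely.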

Where to Buy the Hardware

The following links describe the hardware requirements needed to run this tutorial.

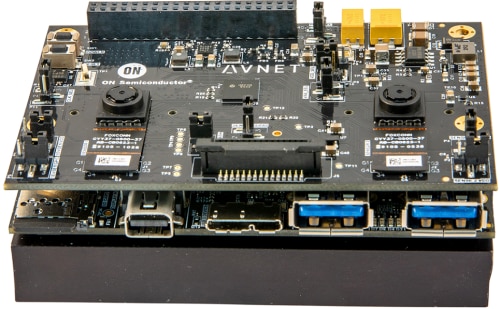

- Ultra96-V2 (or Ultra96-V2 I-grade)

- Ultra96-V2 4A Power Supply

- Ultra96-V2 JTAG/UART Adapter

- 96Boards onsemi Dual Camera Mezzanine

Going Further

A Vitis-AI 2.0 design has been built on top of this 2021.2 design, and is documented as a hackster.io project.

http://avnet.me/vitis-ai-2.0-dualcam

This hackster.io project includes the following additional content:

- instructions for building Vitis platform and Vitis-AI design

- pre-built SD card image for Vitis-AI enabled design

- Python scripts for stereo processing (anaglyph, stereo neural inference)

Conclusion

I hope this tutorial will help you get started quickly with the Ultra96-V2 and Dual-Camera Mezzanine, and inspire you to create innovative stereo applications.