Introduction

This project is part 4 of a 5-part series of projects, in which we progressively create AI-enabled platforms for the following Tria development boards:

- ZUBoard

- Ultra96-V2

- UltraZed-7EV

These projects can be rebuilt using the source code on github.com:

The following series of Hackster projects describes how the above GitHub repository was created, and serves as its documentation:

- Part 1 : Tria Vitis Platforms — Building the Foundational Designs

- Part 2 : Tria Vitis Platforms — Creating a Common Platform

- Part 3 : Tria Vitis Platforms — Adding support for Vitis-AI

- Part 4 : Tria Vitis Platforms — Adding support for Hailo-8

- Part 5 : Tria Vitis Platforms — Adding support for ROS2

The motivation of this series of projects is to enable users to create their own custom AI applications.

Introduction Part IV

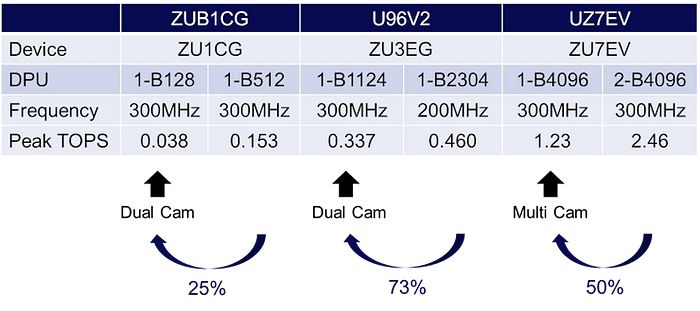

In the previous projects (Part 2, Part 3), we created Vitis overlays that were augmented with the PL-based DPU engine. We saw that the DPU is a scalable engine that adapts to the available PL resources, but that the smaller configurations come at the expense of reduced performance, as shown in the following table:

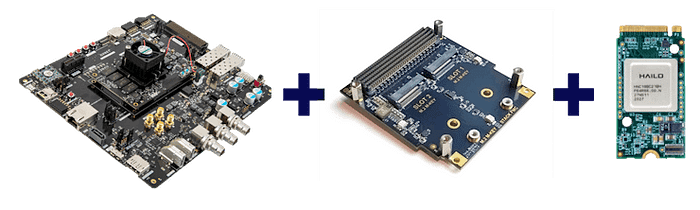

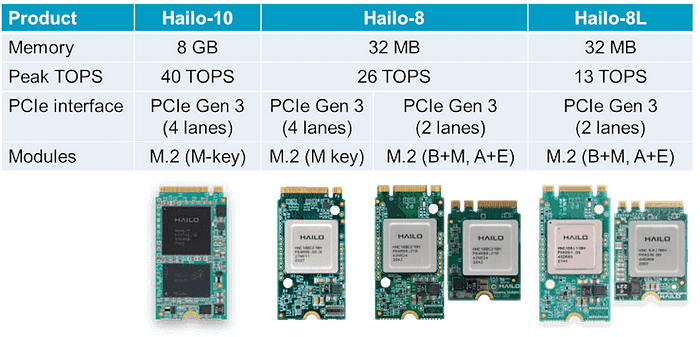

In this project, we add an AI engine as an external device: the Hailo-8 acceleration module. It offers a constant 26 TOPS (peak) of performance, regardless of which logic resources are available in the PL.

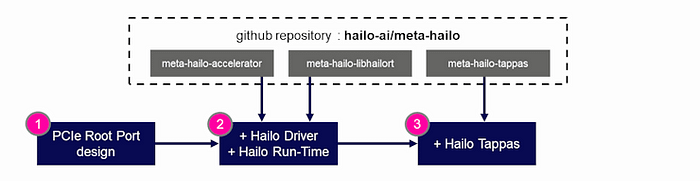

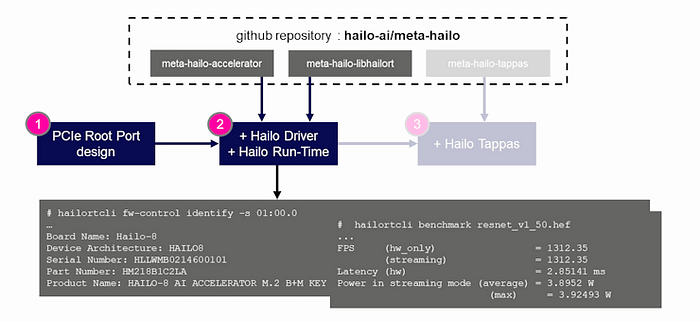

To integrate the Hailo embedded driver, run-time, and TAPPAS, I have broken the work down into the following three milestones:

- Milestone 1 — Hailo-8 detected on PCI express bus

- Milestone 2 — Hailo-8 detected by driver and runtime

- Milestone 3 — Hailo-8 working with TAPPAS

Milestone 1 — Hailo-8 detected on PCI express bus

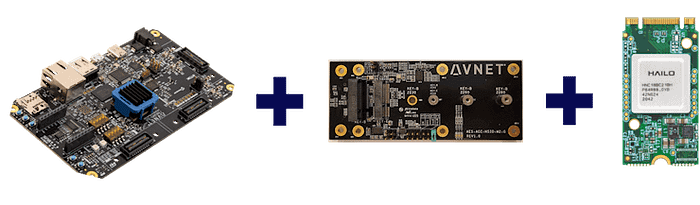

In order to achieve the first milestone, we need to attach the Hailo-8 acceleration module(s) to our hardware targets, using the PCIe enabled designs (apps):

- ZUBoard : tria-zub1cg-base, tria-zub1cg-dualcam

- UltraZed-EV : tria-uz7ev-nvme

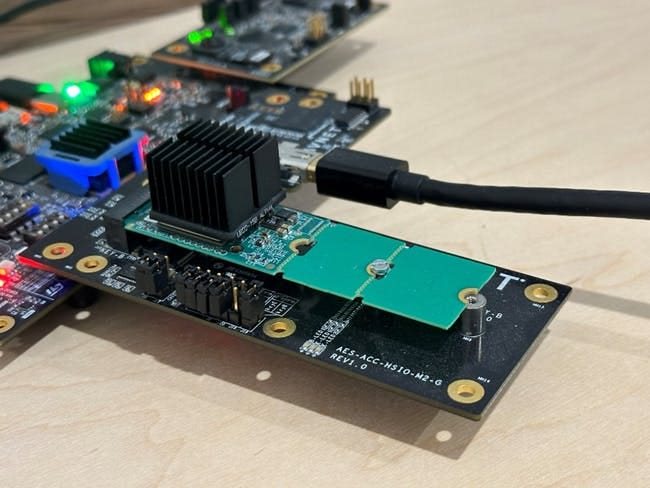

For the ZUBoard, we will be using the M.2 HSIO to attach the Hailo-8 B+M Key module:

For the UltraZed-EV, we will be using the Opsero M.2 Stack FMC to attach the Hailo-8 M-Key module:

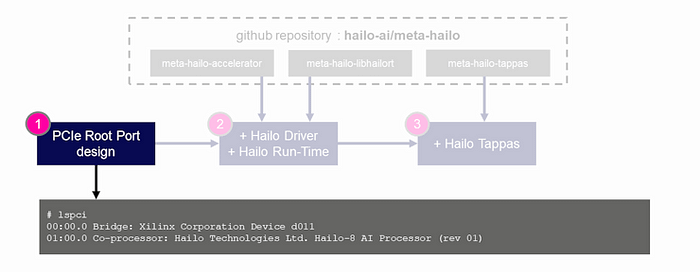

After booting our target hardware, we load our PCIe-enabled designs and query the PCIe bus with the “lspci” utility.

On the UltraZed-EV

root@uz7ev-evcc-2023-2:~# xmutil loadapp tria-uz7ev-nvme

...

tria-uz7ev-nvme: loaded to slot 0

root@uz7ev-evcc-2023-2:~# lspci

0000:00:00.0 PCI bridge: Xilinx Corporation Device d021

0001:00:00.0 PCI bridge: Xilinx Corporation Device 9132

0001:01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

On the ZUBoard

root@zub1cg-sbc-2023-2:~# xmutil loadapp tria-zub1cg-dualcam

...

tria-zub1cg-dualcam: loaded to slot 0

root@zub1cg-sbc-2023-2:~# lspci

00:00.0 Bridge: Xilinx Corporation Device d011

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)
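The Milestone 1 check can also be scripted. The following is a minimal sketch, assuming it is fed the text printed by “lspci”; the helper name `find_hailo_devices` is hypothetical and not part of any Hailo or Xilinx tooling.

```python
import re

# Matches both "01:00.0 ..." and domain-prefixed "0001:01:00.0 ..." addresses.
LSPCI_LINE = re.compile(
    r"^((?:[0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+([^:]+):\s+(.*)$"
)


def find_hailo_devices(lspci_text):
    """Return (address, description) pairs for lines that mention Hailo."""
    found = []
    for line in lspci_text.splitlines():
        match = LSPCI_LINE.match(line.strip())
        if match and "hailo" in match.group(3).lower():
            found.append((match.group(1), match.group(3)))
    return found


# Sample text copied from the ZUBoard output above.
sample = """\
00:00.0 Bridge: Xilinx Corporation Device d011
01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)
"""

for address, description in find_hailo_devices(sample):
    print(address, "->", description)
```

On the target, the same function could be given the output of `subprocess.run(["lspci"], capture_output=True, text=True).stdout` instead of the embedded sample.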

Use the “lspci -vv” variant of the command to list the details of the Hailo-8 AI Processor.

root@zub1cg-sbc-2023-2:~# lspci -vv

00:00.0 Bridge: Xilinx Corporation Device d011

...

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

Subsystem: Hailo Technologies Ltd. Hailo-8 AI Processor

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 111

Region 0: Memory at 600000000 (64-bit, prefetchable) [size=16K]

Region 2: Memory at 600008000 (64-bit, prefetchable) [size=4K]

Region 4: Memory at 600004000 (64-bit, prefetchable) [size=16K]

Capabilities: [80] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s <64ns, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0.000W

DevCtl: CorrErr- NonFatalErr- FatalErr- UnsupReq-

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <1us, L1 <2us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 5GT/s (downgraded), Width x2 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Not Supported, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete- EqualizationPhase1-

EqualizationPhase2- EqualizationPhase3- LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [e0] MSI: Enable- Count=1/1 Maskable- 64bit+

Address: 0000000000000000 Data: 0000

Capabilities: [f8] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot+,D3cold-)

Status: D3 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [100 v1] Vendor Specific Information: ID=1556 Rev=1 Len=008 <?>

Capabilities: [108 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [110 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=10us PortTPowerOnTime=10us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [128 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 0

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [200 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0
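The “LnkCap” and “LnkSta” lines above are worth a closer look: the module is capable of 8GT/s x4, but the link on the ZUBoard was negotiated at 5GT/s x2. The sketch below estimates the raw one-direction bandwidth for both cases. It assumes the standard PCIe line encodings (8b/10b at 2.5 and 5 GT/s, 128b/130b at 8 GT/s) and ignores packet overhead; the function names are hypothetical.

```python
import re

# Line-code efficiency per PCIe generation (payload bits / transmitted bits).
ENCODING = {2.5: 8 / 10, 5.0: 8 / 10, 8.0: 128 / 130}


def parse_link(line):
    """Extract (speed in GT/s, lane count) from an lspci LnkCap/LnkSta line."""
    match = re.search(r"Speed ([\d.]+)GT/s.*?Width x(\d+)", line)
    if match is None:
        raise ValueError("no link speed/width found in: " + line)
    return float(match.group(1)), int(match.group(2))


def raw_gbytes_per_s(speed_gt, lanes):
    """Raw one-direction bandwidth in GB/s, before packet overhead."""
    return speed_gt * ENCODING[speed_gt] * lanes / 8


cap = parse_link("LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <1us, L1 <2us")
sta = parse_link("LnkSta: Speed 5GT/s (downgraded), Width x2 (downgraded)")

print("capable   : %.2f GB/s" % raw_gbytes_per_s(*cap))
print("negotiated: %.2f GB/s" % raw_gbytes_per_s(*sta))
```

These are upper bounds on the link itself, not a prediction of inference throughput.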

We are doing great!

Before we dive into the remaining two milestones, it is important to get an overview of the Hailo AI SW Suite, and determine which version we will be integrating.

Hailo AI Software Suite Overview

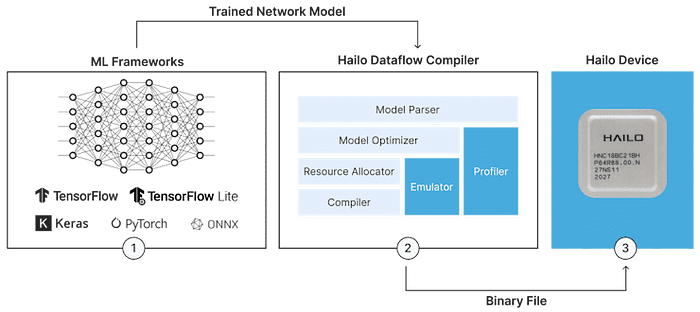

Hailo’s AI Software Suite allows users to deploy models to the Hailo AI accelerators.

In addition to the Hailo AI accelerator devices, Hailo offers a scalable range of PCIe Gen 3.0 compatible M.2 AI accelerator modules:

This project will only cover the following Hailo AI acceleration modules:

- Hailo-8 : M.2 M Key (PCIe Gen 3.0, 4 lanes), 26 TOPS

- Hailo-8 : M.2 B+M Key (PCIe Gen 3.0, 2 lanes), 26 TOPS

The Hailo AI Software Suite supports the following frameworks:

- TensorFlow Lite

- ONNX

Hailo chose TensorFlow Lite, not because of its popular use for “reduced set of instructions and quantized” models, but rather because it is a “more stable” exportable format that also supports full floating-point models.

Other frameworks are indirectly supported by exporting to the TF-Lite or ONNX formats.

The deployment involves the following tasks:

- Model Parsing

- Model Optimization & Resource Allocation

- Model Compilation

The Model Parsing task translates models from industry-standard frameworks to the Hailo Archive (HAR) format. It allows the user to identify layers, or sequences of layers, in the model that are not supported by the compiler. This step is crucial when training our own custom model, since we can adapt the model architecture to use supported layers prior to training, thus saving hours (or days) in our deployment flow.

The Model Optimization and Resource Allocation tasks convert the model to an internal representation using state-of-the-art quantization, then allocate this internal representation to the available resources in the Hailo AI accelerator. To perform this analysis and conversion, a subset of the training dataset is required as calibration data, typically on the order of several thousand samples.

The Model Compilation task converts the quantized model to micro-code that can be run on the Hailo AI acceleration device.

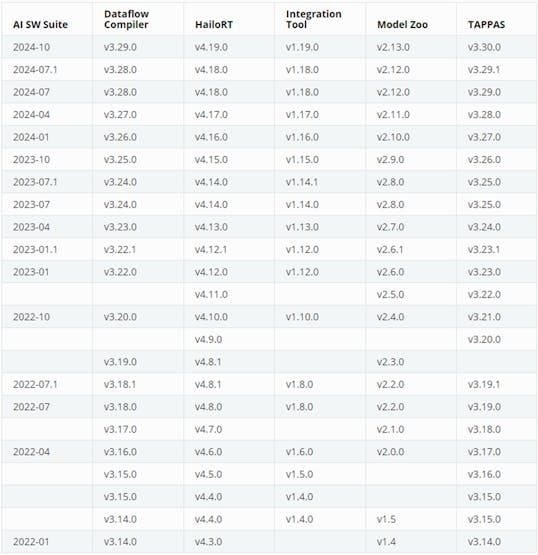

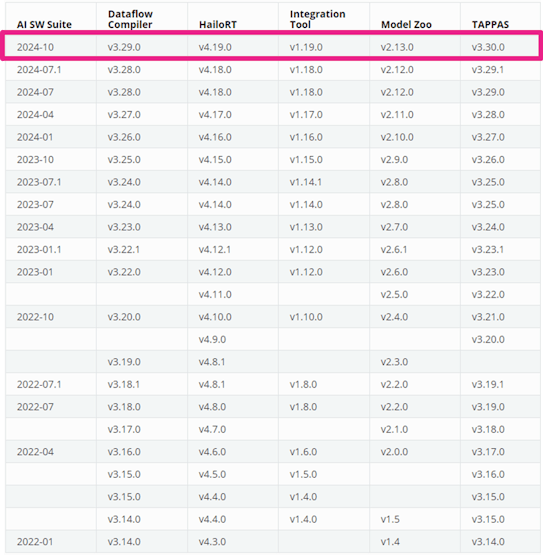

Current versions of the Hailo AI SW Suite are listed in the following table, which can be found on the Hailo Developer Zone:

Choosing a Hailo AI SW Suite Version

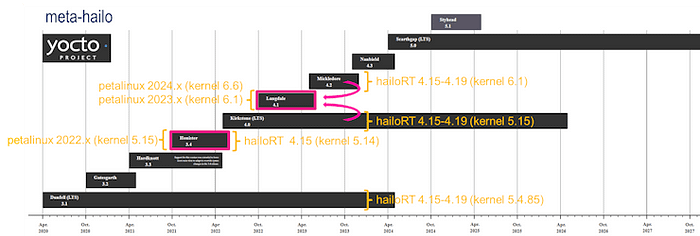

In a previous project, I integrated the Hailo embedded stack in a 2022.2 petalinux project using the meta-hailo yocto recipes.

In that version, the integration of the Hailo embedded software went really well, since the yocto versions of Petalinux 2022.2 and HailoRT 4.15 were aligned:

- Petalinux 2022.2 : yocto = honister, kernel = 5.15

- HailoRT 4.15 : yocto = honister, kernel = 5.14

With Petalinux 2023.2, however, we do not have an alignment of the yocto versions with HailoRT 4.19:

- Petalinux 2023.2 : yocto = langdale, kernel = 6.1

- HailoRT 4.19 : yocto = kirkstone or mickledore, kernel = 6.1
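The alignment check described above can be written down in a few lines. This is only an illustrative sketch: the release names come from the text above, the chronological order (honister, kirkstone, langdale, mickledore) is the published Yocto release order, and the function names are hypothetical.

```python
# Yocto release codenames in chronological order (3.4, 4.0, 4.1, 4.2).
YOCTO_ORDER = ["honister", "kirkstone", "langdale", "mickledore"]

# Values taken from the text above.
PETALINUX_YOCTO = {"2022.2": "honister", "2023.2": "langdale"}
HAILORT_YOCTO = {"4.15": {"honister"}, "4.19": {"kirkstone", "mickledore"}}


def is_aligned(petalinux, hailort):
    """True when the PetaLinux Yocto release is one the HailoRT recipes target."""
    return PETALINUX_YOCTO[petalinux] in HAILORT_YOCTO[hailort]


def nearest_releases(petalinux, hailort):
    """Recipe branches sorted by distance (in releases) from the PetaLinux one."""
    base = YOCTO_ORDER.index(PETALINUX_YOCTO[petalinux])
    return sorted(
        HAILORT_YOCTO[hailort],
        key=lambda name: (abs(YOCTO_ORDER.index(name) - base), name),
    )


print(is_aligned("2022.2", "4.15"))
print(is_aligned("2023.2", "4.19"))
print(nearest_releases("2023.2", "4.19"))
```

Because langdale sits exactly between kirkstone and mickledore, both branches are equally close, which is why the recipes need a small compatibility change rather than a drop-in match.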

The following yocto release diagram illustrates this alignment and mis-alignment for various versions of Petalinux versus HailoRT :

For the reasons above, I have decided to attempt to integrate the latest 2024-10 version of Hailo AI SW Suite, which includes HailoRT 4.19:

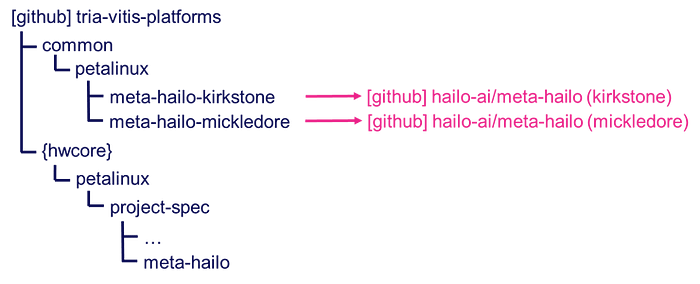

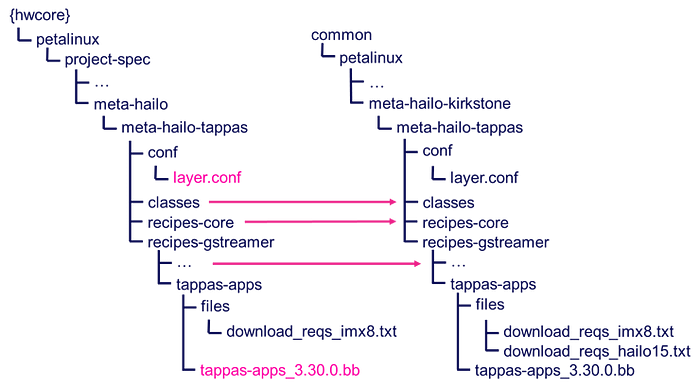

The following two branches (mickledore, kirkstone) of the meta-hailo recipes were integrated into our repository as git submodules in the “common” directory:

Milestone 2 — Hailo-8 detected by driver and runtime

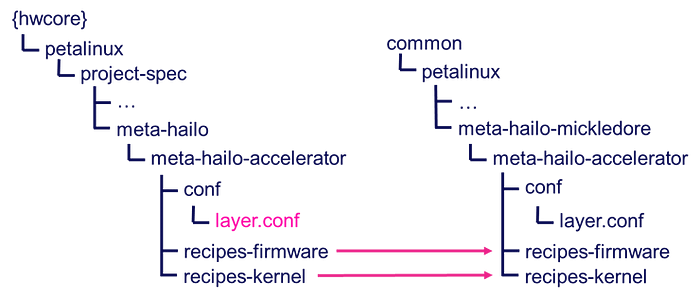

In order to integrate the Hailo driver and run-time, I chose to use the latest “mickledore” version of the yocto recipes.

For the Hailo driver and firmware, I created symbolic links to the content, and added a modified version of the layer.conf file to add support for the “langdale” yocto version:

project-spec/meta-hailo/meta-hailo-accelerator/conf/layer.conf

...

LAYERDEPENDS_meta-hailo-accelerator = "core"

LAYERSERIES_COMPAT_meta-hailo-accelerator = "mickledore langdale"

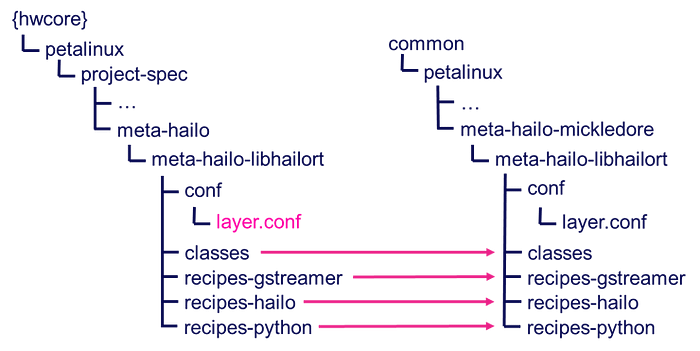

Similarly, for the Hailo run-time APIs, I created symbolic links to the content, and added a modified version of the layer.conf file to add support for the “langdale” yocto version:

project-spec/meta-hailo/meta-hailo-libhailort/conf/layer.conf

...

LAYERDEPENDS_meta-hailo-libhailort = "core"

LAYERSERIES_COMPAT_meta-hailo-libhailort = "mickledore langdale"
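The layer.conf change shown above amounts to appending “langdale” to the LAYERSERIES_COMPAT value. A minimal sketch of that edit follows, assuming the upstream file lists only “mickledore” (an assumption, since the original file is not reproduced here); the helper name `add_series` is hypothetical.

```python
import re


def add_series(conf_text, series):
    """Append `series` to every LAYERSERIES_COMPAT_* value that lacks it."""

    def patch(match):
        names = match.group(2).split()
        if series not in names:
            names.append(series)
        return '%s"%s"' % (match.group(1), " ".join(names))

    pattern = re.compile(r'^(LAYERSERIES_COMPAT_\S+\s*=\s*)"([^"]*)"', re.MULTILINE)
    return pattern.sub(patch, conf_text)


# Assumed upstream content, for illustration only.
upstream = (
    'LAYERDEPENDS_meta-hailo-libhailort = "core"\n'
    'LAYERSERIES_COMPAT_meta-hailo-libhailort = "mickledore"\n'
)
print(add_series(upstream, "langdale"))
```

The function leaves other variables untouched and is safe to run twice, since a series that is already listed is not added again.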

With these recipes in place, we can integrate them into our petalinux project by adding the following lines to our user-rootfsconfig file:

project-spec/meta-user/conf/user-rootfsconfig

...

CONFIG_hailo-pci

CONFIG_hailo-firmware

CONFIG_hailortcli

CONFIG_libhailort

CONFIG_pyhailort

CONFIG_libgsthailo

With these packages enabled, we can rebuild our petalinux project, boot our new images on the hardware target, and test the driver and run-time APIs.

During linux boot, you will notice new kernel messages specific to the Hailo-8 driver:

root@zub1cg-sbc-2023-2:~# dmesg | grep hailo

[ 8.162743] hailo 0000:01:00.0: Probing: Allocate memory for device extension, 11632

[ 8.162801] hailo 0000:01:00.0: enabling device (0000 -> 0002)

[ 8.162813] hailo 0000:01:00.0: Probing: Device enabled

[ 8.162875] hailo 0000:01:00.0: Probing: mapped bar 0 - 00000000321ef596 16384

[ 8.162889] hailo 0000:01:00.0: Probing: mapped bar 2 - 00000000465a8748 4096

[ 8.162904] hailo 0000:01:00.0: Probing: mapped bar 4 - 000000007855d716 16384

[ 8.162921] hailo 0000:01:00.0: Probing: Setting max_desc_page_size to 4096, (page_size=4096)

[ 8.162965] hailo 0000:01:00.0: Probing: Enabled 64 bit dma

[ 8.162973] hailo 0000:01:00.0: Probing: Using userspace allocated vdma buffers

[ 8.162981] hailo 0000:01:00.0: Disabling ASPM L0s

[ 8.162997] hailo 0000:01:00.0: Successfully disabled ASPM L0s

[ 8.163204] hailo 0000:01:00.0: Writing file hailo/hailo8_fw.bin

[ 8.248885] hailo 0000:01:00.0: File hailo/hailo8_fw.bin written successfully

[ 8.248920] hailo 0000:01:00.0: Writing file hailo/hailo8_board_cfg.bin

[ 8.250291] Failed to write file hailo/hailo8_board_cfg.bin

[ 8.250305] hailo 0000:01:00.0: File hailo/hailo8_board_cfg.bin written successfully

[ 8.250312] hailo 0000:01:00.0: Writing file hailo/hailo8_fw_cfg.bin

[ 8.250353] Failed to write file hailo/hailo8_fw_cfg.bin

[ 8.250358] hailo 0000:01:00.0: File hailo/hailo8_fw_cfg.bin written successfully

[ 8.348872] hailo 0000:01:00.0: Firmware loaded successfully

[ 8.401807] hailo 0000:01:00.0: Probing: Added board 1e60-2864, /dev/hailo0

root@zub1cg-sbc-2023-2:~#
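The two messages that matter most in the log above are “Firmware loaded successfully” and “Added board ..., /dev/hailo0”. As a rough sketch, assuming the message wording stays as shown for this driver version, they can be picked out automatically; the function name `summarize_probe` is hypothetical.

```python
import re


def summarize_probe(dmesg_text):
    """Collect firmware status and device nodes from hailo driver messages."""
    summary = {"firmware_loaded": False, "device_nodes": []}
    for line in dmesg_text.splitlines():
        if "hailo" not in line:
            continue
        if "Firmware loaded successfully" in line:
            summary["firmware_loaded"] = True
        node = re.search(r"Added board \S+, (/dev/hailo\d+)", line)
        if node:
            summary["device_nodes"].append(node.group(1))
    return summary


# A few lines copied from the boot log above.
sample = """\
[ 8.163204] hailo 0000:01:00.0: Writing file hailo/hailo8_fw.bin
[ 8.348872] hailo 0000:01:00.0: Firmware loaded successfully
[ 8.401807] hailo 0000:01:00.0: Probing: Added board 1e60-2864, /dev/hailo0
"""
print(summarize_probe(sample))
```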

The presence of the Hailo-8 driver can be confirmed with the “lsmod” command.

root@zub1cg-sbc-2023-2:~# lsmod

Module Size Used by

...

hailo_pci 77824 0

...

Also, the “lspci” command will output information regarding the PCI devices, and their kernel drivers:

root@zub1cg-sbc-2023-2:~# lspci

00:00.0 Bridge: Xilinx Corporation Device d011

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

root@zub1cg-sbc-2023-2:~# lspci -k

00:00.0 Bridge: Xilinx Corporation Device d011

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

Subsystem: Hailo Technologies Ltd. Hailo-8 AI Processor

Kernel driver in use: hailo

Kernel modules: hailo_pci

root@zub1cg-sbc-2023-2:~# lspci -v

00:00.0 Bridge: Xilinx Corporation Device d011

...

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

Subsystem: Hailo Technologies Ltd. Hailo-8 AI Processor

Flags: bus master, fast devsel, latency 0, IRQ 61

Memory at 600000000 (64-bit, prefetchable) [size=16K]

Memory at 600008000 (64-bit, prefetchable) [size=4K]

Memory at 600004000 (64-bit, prefetchable) [size=16K]

Capabilities: [80] Express Endpoint, MSI 00

Capabilities: [e0] MSI: Enable- Count=1/1 Maskable- 64bit+

Capabilities: [f8] Power Management version 3

Capabilities: [100] Vendor Specific Information: ID=1556 Rev=1 Len=008 <?>

Capabilities: [108] Latency Tolerance Reporting

Capabilities: [110] L1 PM Substates

Capabilities: [128] Alternative Routing-ID Interpretation (ARI)

Capabilities: [200] Advanced Error Reporting

Capabilities: [300] Secondary PCI Express

Kernel driver in use: hailo

Kernel modules: hailo_pci

root@zub1cg-sbc-2023-2:~# lspci -vv

00:00.0 Bridge: Xilinx Corporation Device d011

...

01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

Subsystem: Hailo Technologies Ltd. Hailo-8 AI Processor

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 61

Region 0: Memory at 600000000 (64-bit, prefetchable) [size=16K]

Region 2: Memory at 600008000 (64-bit, prefetchable) [size=4K]

Region 4: Memory at 600004000 (64-bit, prefetchable) [size=16K]

Capabilities: [80] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s <64ns, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr- NonFatalErr- FatalErr- UnsupReq-

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <1us, L1 <2us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 5GT/s (downgraded), Width x2 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Not Supported, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- 10BitTagReq- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete- EqualizationPhase1-

EqualizationPhase2- EqualizationPhase3- LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [e0] MSI: Enable- Count=1/1 Maskable- 64bit+

Address: 0000000000000000 Data: 0000

Capabilities: [f8] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot+,D3cold-)

Status: D3 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [100 v1] Vendor Specific Information: ID=1556 Rev=1 Len=008 <?>

Capabilities: [108 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [110 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=10us PortTPowerOnTime=10us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [128 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 0

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [200 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Kernel driver in use: hailo

Kernel modules: hailo_pci

We can verify the Hailo-8 run-time with the “hailortcli” command, as shown below:

root@zub1cg-sbc-2023-2:~# hailortcli fw-control identify

Executing on device: 0000:01:00.0

Identifying board

Control Protocol Version: 2

Firmware Version: 4.19.0 (release,app,extended context switch buffer)

Logger Version: 0

Board Name: Hailo-8

Device Architecture: HAILO8

Serial Number: HLLWMB0214600101

Part Number: HM218B1C2LA

Product Name: HAILO-8 AI ACCELERATOR M.2 B+M KEY MODULE

We have successfully detected the Hailo-8 AI Accelerator M.2 B+M Key module!
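For scripted checks, the “Key: value” lines printed by “hailortcli fw-control identify” are easy to turn into a dictionary. The sketch below works on text copied from the output above; `parse_identify` is a hypothetical helper, not part of HailoRT.

```python
def parse_identify(text):
    """Turn 'Key: value' lines from the identify output into a dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            info[key.strip()] = value.strip()
    return info


# Lines copied from the identify output above.
sample = """\
Executing on device: 0000:01:00.0
Identifying board
Control Protocol Version: 2
Firmware Version: 4.19.0 (release,app,extended context switch buffer)
Board Name: Hailo-8
Device Architecture: HAILO8
Product Name: HAILO-8 AI ACCELERATOR M.2 B+M KEY MODULE
"""

info = parse_identify(sample)
firmware = info["Firmware Version"].split()[0]
print(info["Device Architecture"], firmware)
```

The extracted firmware version is a convenient value to compare against the HailoRT version that was integrated, 4.19 in this project.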

We can already run some benchmarks with the run-time.

First, download a pre-compiled model from the Hailo model zoo:

If your embedded hardware is connected to the internet, this can be done directly on the embedded platform with the “wget” utility:

wget https://hailo-tappas.s3.eu-west-2.amazonaws.com/v3.30/general/hefs/resnet_v1_50.hef

Then run some benchmarks with the “hailortcli” utility:

root@zub1cg-sbc-2023-2:~# hailortcli benchmark resnet_v1_50.hef

Starting Measurements...

Measuring FPS in HW-only mode

Network resnet_v1_50/resnet_v1_50: 100% | 20573 | FPS: 1370.97 | ETA: 00:00:00

Measuring FPS (and Power on supported platforms) in streaming mode

[HailoRT] [warning] Using the overcurrent protection dvm for power measurement will disable the overcurrent protection.

If only taking one measurement, the protection will resume automatically.

If doing continuous measurement, to enable overcurrent protection again you have to stop the power measurement on this dvm.

Network resnet_v1_50/resnet_v1_50: 100% | 20365 | FPS: 1357.09 | ETA: 00:00:00

Measuring HW Latency

Network resnet_v1_50/resnet_v1_50: 100% | 4559 | HW Latency: 3.09 ms | ETA: 00:00:00

=======

Summary

=======

FPS (hw_only) = 1370.99

(streaming) = 1357.1

Latency (hw) = 3.09044 ms

Device 0000:01:00.0:

Power in streaming mode (average) = 4.02865 W

(max) = 4.06264 W
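A few derived figures help put the benchmark summary above in context. The arithmetic below uses only the numbers from that summary; the “frames in flight” estimate is throughput multiplied by latency, which is a rough approximation since the two were measured in separate passes.

```python
# Numbers copied from the benchmark summary above.
fps_hw_only = 1370.99
fps_streaming = 1357.1
latency_ms = 3.09044
power_avg_w = 4.02865

# Average time between two results when the pipeline is full.
frame_period_ms = 1000.0 / fps_hw_only

# Throughput times latency: roughly how many frames are in flight at once.
frames_in_flight = fps_hw_only * latency_ms / 1000.0

# Efficiency of the accelerator module, in frames per second per watt.
fps_per_watt = fps_streaming / power_avg_w

print("frame period    : %.3f ms" % frame_period_ms)
print("frames in flight: %.2f" % frames_in_flight)
print("efficiency      : %.1f FPS/W" % fps_per_watt)
```

The frame period is much shorter than the hardware latency, which suggests that several frames are processed concurrently inside the device.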

The easiest way to validate the “pyhailort” package is to import “hailo_platform” within python, as shown below:

python3

>>> import hailo_platform

>>> print(hailo_platform.__version__)

4.19.0

>>> exit()
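The same check can be made non-interactive, for example in a boot-time test script. This is a small sketch using only the Python standard library; it assumes, as in the session above, that the package exposes `__version__`, and it returns `None` rather than raising when the package is missing.

```python
import importlib
import importlib.util


def hailo_platform_version():
    """Return the installed hailo_platform version, or None if it is absent."""
    if importlib.util.find_spec("hailo_platform") is None:
        return None
    module = importlib.import_module("hailo_platform")
    return getattr(module, "__version__", "unknown")


version = hailo_platform_version()
print("hailo_platform:", version if version else "not installed")
```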

Another (longer) way to validate the “pyhailort” package is to install and run the “blaze_app_python” application which provides Hailo-8 accelerated versions of the mediapipe models:

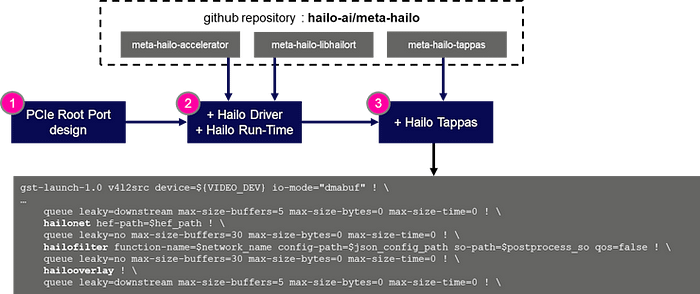

Milestone 3 — Hailo-8 working with TAPPAS

In order to integrate the Hailo TAPPAS, I chose to use the previous “kirkstone” version of the yocto recipes, since the “mickledore” branch did not contain these recipes.

For the TAPPAS, I created symbolic links to the content, and added a modified version of the layer.conf file to add support for the “langdale” yocto version:

project-spec/meta-hailo/meta-hailo-tappas/conf/layer.conf

...

LAYERDEPENDS_meta-hailo-tappas = "core meta-hailo-libhailort"

LAYERSERIES_COMPAT_meta-hailo-tappas = "kirkstone langdale"

One of the included recipes, “tappas-apps”, attempts to discover whether we are targeting the Hailo-8 or Hailo-15 device. Since this has been implemented for an IMX8 target, this discovery will not work for our targets. We need to modify the following recipe to properly handle this:

project-spec/meta-hailo/meta-hailo-tappas/recipes-gstreamer/tappas-apps/tappas-apps_3.30.0.bb

...

IMX8_DIR = "${APPS_DIR_PREFIX}/h8/gstreamer/imx8/"

#HAILO15_DIR = "${APPS_DIR_PREFIX}/h15/gstreamer/"

REQS_PATH = "${FILE_DIRNAME}/files/"

REQS_IMX8_FILE = "${REQS_PATH}download_reqs_imx8.txt"

#REQS_HAILO15_FILE = "${REQS_PATH}download_reqs_hailo15.txt"

REQS_FILE = ""

ARM_APPS_DIR = ""

python () {

    #if 'imx8' in d.getVar('MACHINE'):

    d.setVar('REQS_FILE', d.getVar('REQS_IMX8_FILE'))

    d.setVar('ARM_APPS_DIR', d.getVar('IMX8_DIR'))

    #else:

    #    d.setVar('REQS_FILE', d.getVar('REQS_HAILO15_FILE'))

    #    d.setVar('ARM_APPS_DIR', d.getVar('HAILO15_DIR'))

    #    d.appendVar('DEPENDS', " libmedialib-api xtensor")

}

#IS_H15 = "${@ 'true' if 'hailo15' in d.getVar('MACHINE') else 'false'}"

IS_H15 = "false"

...
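To see why the unmodified recipe picks the wrong application set, the selection logic can be mimicked in plain Python. This is a simplified stand-in for the BitBake code above, not the recipe itself; both MACHINE values are illustrative, and “zynqmp-generic” in particular is a hypothetical name for our targets.

```python
def select_apps_dir(machine, force_hailo8=False):
    """Mimic the recipe's MACHINE test; returns which app set gets chosen."""
    if force_hailo8 or "imx8" in machine:
        return "h8/gstreamer/imx8/"
    return "h15/gstreamer/"


# Illustrative MACHINE values; "zynqmp-generic" is a hypothetical example.
for machine in ("imx8mq-evk", "zynqmp-generic"):
    print(machine, "->", select_apps_dir(machine))

# Equivalent of the modified recipe: always take the Hailo-8 branch.
print("patched ->", select_apps_dir("zynqmp-generic", force_hailo8=True))
```

Any MACHINE name that does not contain “imx8” falls through to the Hailo-15 branch, which is why the modified recipe hard-codes the Hailo-8 choice.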

With these recipes in place, we can integrate them into our petalinux project by adding the following lines to our user-rootfsconfig file:

project-spec/meta-user/conf/user-rootfsconfig

...

CONFIG_libgsthailotools

CONFIG_hailo-post-processes

CONFIG_tappas-apps

With these packages enabled, we can rebuild our petalinux project, boot our new images on the hardware target, and test the TAPPAS.

The presence of the gstreamer plug-ins from Hailo can be validated as follows:

root@zub1cg-sbc-2023-2:~# gst-inspect-1.0 hailotools

Plugin Details:

Name hailotools

Description hailo tools plugin

Filename /usr/lib/gstreamer-1.0/libgsthailotools.so

Version 3.30.0

License unknown

Source module gst-hailo-tools

Binary package gst-hailo-tools

Origin URL https://hailo.ai/

hailoaggregator: hailoaggregator - Cascading

hailocounter: hailocounter - postprocessing element

hailocropper: hailocropper

hailoexportfile: hailoexportfile - export element

hailoexportzmq: hailoexportzmq - export element

hailofilter: hailofilter - postprocessing element

hailogallery: Hailo gallery element

hailograytonv12: hailograytonv12 - postprocessing element

hailoimportzmq: hailoimportzmq - import element

hailomuxer: Muxer pipeline merging

hailonv12togray: hailonv12togray - postprocessing element

hailonvalve: HailoNValve element

hailooverlay: hailooverlay - overlay element

hailoroundrobin: Input Round Robin element

hailostreamrouter: Hailo Stream Router

hailotileaggregator: hailotileaggregator

hailotilecropper: hailotilecropper - Tiling

hailotracker: Hailo object tracking element

18 features:

+-- 18 elements

root@zub1cg-sbc-2023-2:~# gst-inspect-1.0 hailo

Plugin Details:

Name hailo

Description hailo gstreamer plugin

Filename /usr/lib/gstreamer-1.0/libgsthailo.so

Version 1.0

License unknown

Source module hailo

Binary package GStreamer

Origin URL http://gstreamer.net/

hailodevicestats: hailodevicestats element

hailonet: hailonet element

synchailonet: sync hailonet element

3 features:

+-- 3 elements

root@zub1cg-sbc-2023-2:~#
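Before building a pipeline, it can be useful to confirm that the elements it needs are present. The sketch below extracts element names from “gst-inspect-1.0” output such as the listings above; `list_elements` is a hypothetical helper, and the `required` set is only an example.

```python
def list_elements(gst_inspect_text):
    """Return element names from the 'name: description' lines of the listing."""
    elements = []
    for line in gst_inspect_text.splitlines():
        name, sep, _ = line.strip().partition(": ")
        if sep and name.isalnum() and name.islower():
            elements.append(name)
    return elements


# Lines copied from the "gst-inspect-1.0 hailo" output above.
sample = """\
Plugin Details:
Name hailo
Filename /usr/lib/gstreamer-1.0/libgsthailo.so
Origin URL http://gstreamer.net/
hailodevicestats: hailodevicestats element
hailonet: hailonet element
synchailonet: sync hailonet element
3 features:
+-- 3 elements
"""

required = {"hailonet"}
missing = required - set(list_elements(sample))
print("missing elements:", sorted(missing))
```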

We may want to revisit the examples created in the home “apps” directory, to modify them for our targeted Zynq UltraScale+ device.

Known Issues

The current version of this project has the following known issues:

- The examples in the “apps” directory need to be modified to run on the Zynq-UltraScale+ targets.

Conclusion

I hope this tutorial helped you understand how to add Hailo-8 functionality to your custom platform.

If you would like to have the pre-built SD card image for this project, please let me know in the comments below.

Revision History

2023/11/18 — Preliminary Version