I have trained weights and biases for the different layers of a CNN. I converted them to 16-bit fixed-point binary format and saved them as .txt files, one set per convolutional layer. Since the data files are too large to fit in BRAM (on-chip memory), I need to store them in the external PS-side DDR4 memory.
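
For context, a conversion of this kind can be sketched roughly as below. This is only an illustration: the Q8.8 split (8 fractional bits), the saturation behaviour, and the names `to_fixed16` and `write_mem_file` are assumptions made for the example, not necessarily the exact format or script used for the attached files.

```python
def to_fixed16(value, frac_bits=8):
    """Convert a float to a 16-bit two's-complement binary string.

    frac_bits=8 (Q8.8) is an assumed format for illustration only.
    """
    scaled = int(round(value * (1 << frac_bits)))
    # Saturate to the signed 16-bit range instead of wrapping around.
    scaled = max(-32768, min(32767, scaled))
    # Mask to 16 bits so negative values appear in two's complement.
    return format(scaled & 0xFFFF, "016b")


def write_mem_file(path, values, frac_bits=8):
    """Write one 16-bit binary word per line (hypothetical helper)."""
    with open(path, "w") as f:
        for v in values:
            f.write(to_fixed16(v, frac_bits) + "\n")


if __name__ == "__main__":
    for w in (1.0, -1.0, 0.5):
        print(w, to_fixed16(w))
```

One binary word per line is a layout that `$readmemb()` can parse, since it reads whitespace-separated binary values.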

However, I don't understand how to store this data in DDR4 memory for simulation. The Verilog system task $readmemb() reports an out-of-memory error in Vivado when loading even a single file. I think this is because it only targets BRAM, which is too small in capacity.

Please guide me on how to approach the simulation, and then real-time inference, once the equivalent CNN is implemented in Verilog HDL. Is my approach correct, or should I approach it another way?

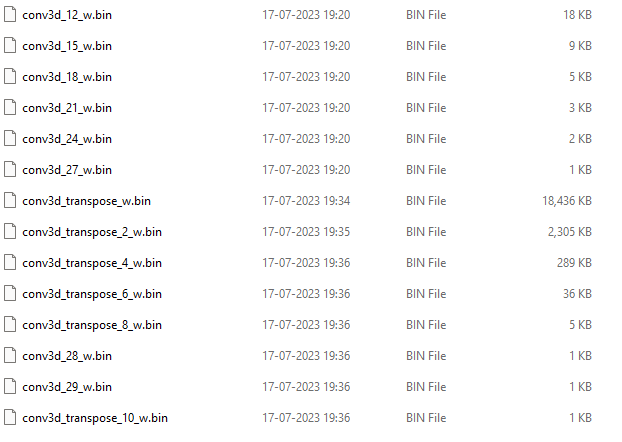

Attached are the file organization and sample contents for reference.