Hi Everyone!

New with the release of BSPs for PetaLinux 2021.1 is a BSP that adds NVMe SSD capability to the UltraZed-EV!

What is NVMe? NVMe (Non-Volatile Memory Express) is the industry standard for solid state drives (SSDs). The NVMe specification was designed specifically to optimize the performance of SSDs, giving much higher throughput and lower latency than legacy SATA drives. For more information, go to https://nvmexpress.org.

Have you ever wanted or needed a way to add high speed and high capacity storage to your embedded Zynq UltraScale+ MPSoC system? This new BSP adds the PCIe transceivers and logic to the programmable logic (PL) via the Xilinx "DMA/Bridge Subsystem for PCI Express" IP, which enables the addition of an M.2 form-factor NVMe SSD to the system. This design uses the FPGADrive FMC adapter from Opsero to complete the connectivity to the Delkin 128 GB NVMe SSD used for tests and benchmarking. This particular SSD uses 2 PCIe lanes, but the Xilinx Zynq UltraScale+ MPSoC design can be modified in Vivado to use up to 4 PCIe lanes (usually for larger capacity SSDs). Here is a snippet of the Vivado block design showing the xdma IP and its AXI connectivity:

Here is a photo of the FPGADrive FMC installed on the UltraZed-EV:

Instructions for how to install this BSP can be found in Xilinx UG1144.

Ready-to-boot Linux OS binaries are provided in the pre-built folder of the installed BSP (<BSP>/pre-built/linux/images/). Each BSP supports multiple boot methods depending on what the hardware supports. To boot the provided Linux OS image select a supported boot method and read the provided <BSP>/pre-built/linux/images/how_to_boot.txt file for booting instructions.

NOTE: You will need the FPGADrive FMC and NVMe SSD installed BEFORE booting the Linux OS. The FMC and SSD are required for boot.

Benchmarking the NVMe SSD Performance

Using the Linux Duplicate Data, or 'dd', command we can test the read and write performance of the installed NVMe SSD.

WARNING! Using the Linux 'dd' command requires root or sudo permissions, which are enabled by default in the Linux OS image for this PetaLinux BSP. Be sure to back up any important data on the SSD before using the 'dd' command.

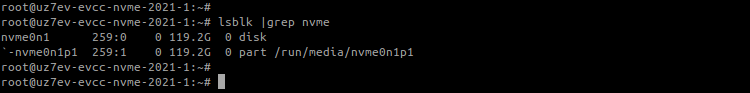

First we need to identify the SSD in the Linux OS. We know that the SSD, when enumerated and mounted by the OS, will have "nvme" in the partition name, so we can grep the output of the 'lsblk' command for "nvme".

# lsblk | grep nvme
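If only the mount point is needed (for example, to build the 'of=' path used in the tests below), the lookup can be narrowed a little. This is a minimal sketch, not part of the BSP: the helper name nvme_mountpoints is hypothetical, and it assumes the util-linux version of 'lsblk', which accepts -r (raw output), -n (no header) and -o (column selection).

```shell
#!/bin/sh
# Hypothetical helper (not part of the BSP): reads "NAME MOUNTPOINT" pairs
# on stdin and prints the mount point of every mounted entry whose name
# contains "nvme". Unmounted entries have no second field and are skipped.
nvme_mountpoints() {
    awk '$1 ~ /nvme/ && NF >= 2 { print $2 }'
}

# Usage on the target (assumes util-linux lsblk):
#   lsblk -rno NAME,MOUNTPOINT | nvme_mountpoints
```

On the system described here, this would be expected to print the partition's mount point, such as the /run/media/nvme0n1p1 path used in the commands that follow.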

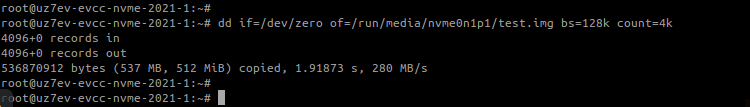

Test 1: Write a large file to the SSD.

The below command tells 'dd' to write a 512 MB file (4096 blocks of 128 KB each) to the SSD:

# dd if=/dev/zero of=/run/media/nvme0n1p1/test.img bs=128k count=4k
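As a quick check of the file size, the 'k' suffix in both 'bs=' and 'count=' multiplies by 1024, so the command above writes 131072-byte blocks 4096 times. The arithmetic can be reproduced in the shell; the variable names here are only for illustration.

```shell
#!/bin/sh
# dd treats the suffix "k" as a multiplier of 1024.
bs=$((128 * 1024))       # bs=128k  -> 131072 bytes per block
count=$((4 * 1024))      # count=4k -> 4096 blocks
total=$((bs * count))    # total bytes dd is asked to write

echo "bytes: $total"                     # prints "bytes: 536870912"
echo "MiB:   $((total / 1024 / 1024))"   # prints "MiB:   512"
```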

While the 280 MB/s write speed may seem impressive, it doesn't tell the full story from a true performance perspective. This is just the speed at which the 'dd' command *thinks* it wrote the file to the SSD. The whole file hasn't actually been written to the disk yet. When the command exits, the OS is still quite busy writing the buffers to the SSD. A more accurate benchmark of system performance is to have 'dd' do a final 'sync' so that the file buffers are written to the SSD before the command exits.

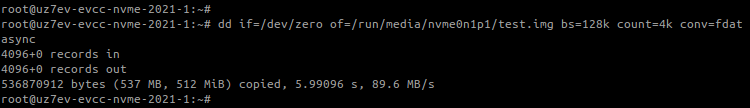

The below command tells 'dd' to write a 512 MB file (4096 blocks of 128 KB each) and do a file 'sync' right before it exits:

# dd if=/dev/zero of=/run/media/nvme0n1p1/test.img bs=128k count=4k conv=fdatasync

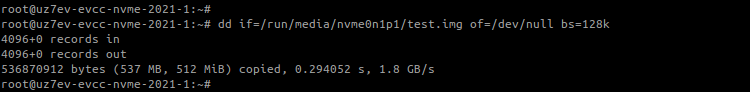

Test 2: Read a large file from the SSD.

The below command tells 'dd' to read a file in 128 KB blocks and direct the file to the system null device. Essentially this emulates copying a file into RAM so the performance is predictably very good:

# dd if=/run/media/nvme0n1p1/test.img of=/dev/null bs=128k

However, like writing a file without completing the buffer writes to the SSD, this doesn't tell the full story. It is perhaps a more realistic performance indicator to write the data that was read to a new file. This is, after all, what the 'dd' command was always meant to do - duplicate data.

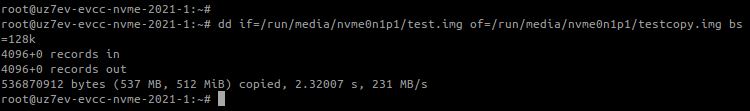

The below command tells 'dd' to read a file in 128 KB blocks and write it to a new file:

# dd if=/run/media/nvme0n1p1/test.img of=/run/media/nvme0n1p1/testcopy.img bs=128k

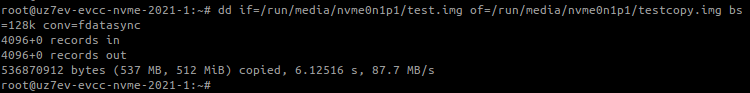

But even this doesn't tell the full story. The file has been read and copied to the new file, but the command exits before the file has been completely written to the SSD. Again, we need to add 'conv=fdatasync' to the 'dd' command to ensure the file buffers are written to the SSD before it exits:

# dd if=/run/media/nvme0n1p1/test.img of=/run/media/nvme0n1p1/testcopy.img bs=128k conv=fdatasync

The performance of the 'dd' command will vary for file writes and reads, sometimes significantly, especially if the OS is pre-empted by other tasks while writing the buffers to disk. Do not be discouraged if at first the 'dd' performance seems poor. Experiment with running repeated commands to get a better idea of achievable performance over time.
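One way to run the same test repeatedly is a small shell function like the sketch below. The function name repeat_write_test and its argument order are hypothetical, not something provided by the BSP; it simply reruns the synced write from Test 1 and keeps the last line of the 'dd' report, which on common 'dd' builds is the throughput summary.

```shell
#!/bin/sh
# Hypothetical helper (not part of the BSP): repeat the synced write test.
#   $1 = output file, $2 = block size, $3 = block count, $4 = number of runs
repeat_write_test() {
    run=1
    while [ "$run" -le "$4" ]; do
        # dd reports its statistics on stderr; keep only the final line.
        summary=$(dd if=/dev/zero of="$1" bs="$2" count="$3" conv=fdatasync 2>&1 | tail -n 1)
        echo "run $run: $summary"
        run=$((run + 1))
    done
}

# Example on the target, five runs of the 512 MB write used earlier:
#   repeat_write_test /run/media/nvme0n1p1/test.img 128k 4k 5
```

Each run overwrites the same file, so the test does not consume more space on the SSD as the run count grows.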

Also, feel free to experiment with the block size (bs=<>) and number of blocks (count=<>) in the 'dd' command, since both can significantly affect SSD I/O performance. Keep in mind, though, that the file size (block size * count) also affects 'dd' performance, so a change in throughput after changing only one of them may simply reflect the different file size. It is usually best to keep the file size constant by changing the block size and count in an inverse ratio (e.g. double the block size while halving the count) to get a fair comparison of SSD I/O performance.
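The inverse ratio is easy to get wrong by hand, so it can help to derive the count from the block size. The sketch below only prints the 'dd' commands for a handful of block sizes rather than running them; the list of block sizes and the helper name count_for are arbitrary choices for illustration, and the total is fixed at the same 512 MB used in the tests above.

```shell
#!/bin/sh
# Keep the total file size fixed (512 MiB, expressed in KiB) and derive
# the block count from each block size so that bs * count stays constant.
total_kib=$((512 * 1024))

count_for() {
    # $1 = block size in KiB; prints the matching block count
    echo $((total_kib / $1))
}

# Print (do not run) one synced write command per block size.
for bs_kib in 64 128 256 512 1024; do
    echo "dd if=/dev/zero of=/run/media/nvme0n1p1/test.img bs=${bs_kib}k count=$(count_for "$bs_kib") conv=fdatasync"
done
```

For bs=128k this reproduces the count of 4096 (count=4k) used earlier, and each doubling of the block size halves the count.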

How about you? Have you ever needed or wanted to add a high capacity, high speed NVMe SSD to your UltraZed embedded system? Let us know in the comments below.

Buy the 128 GB Delkin NVMe SSD

Additional Resources

Delkin Devices:

Opsero:

NVMe
